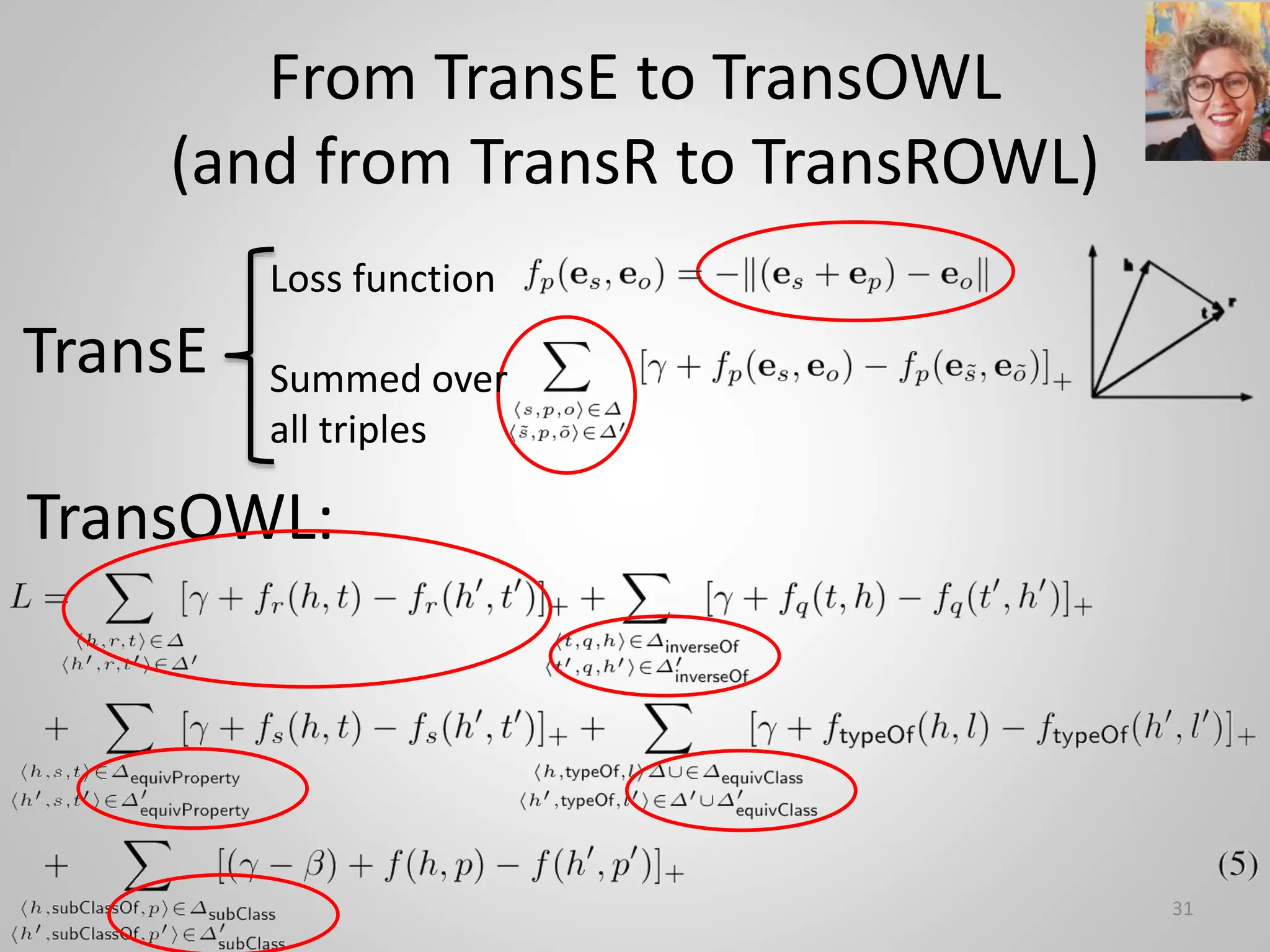

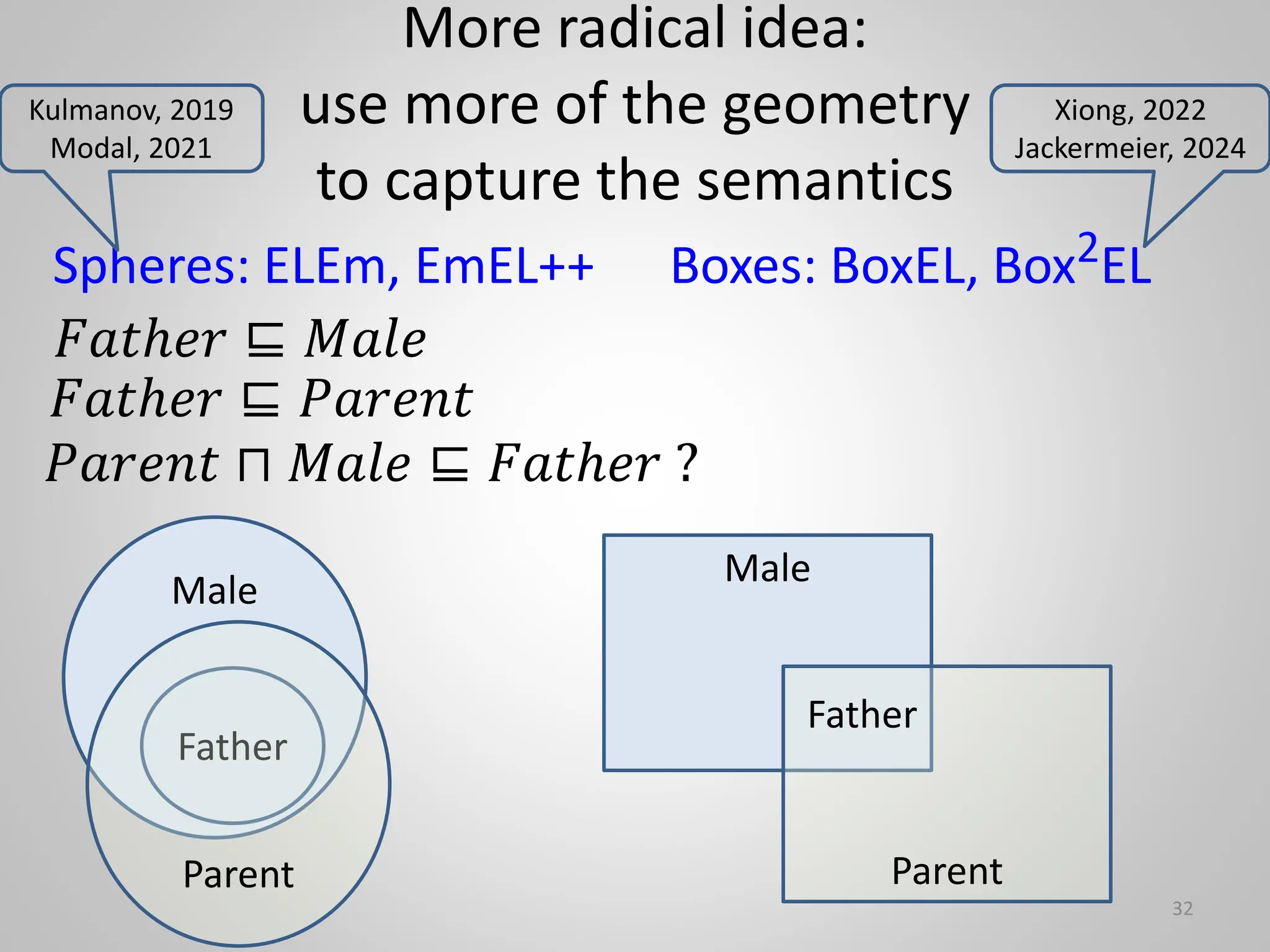

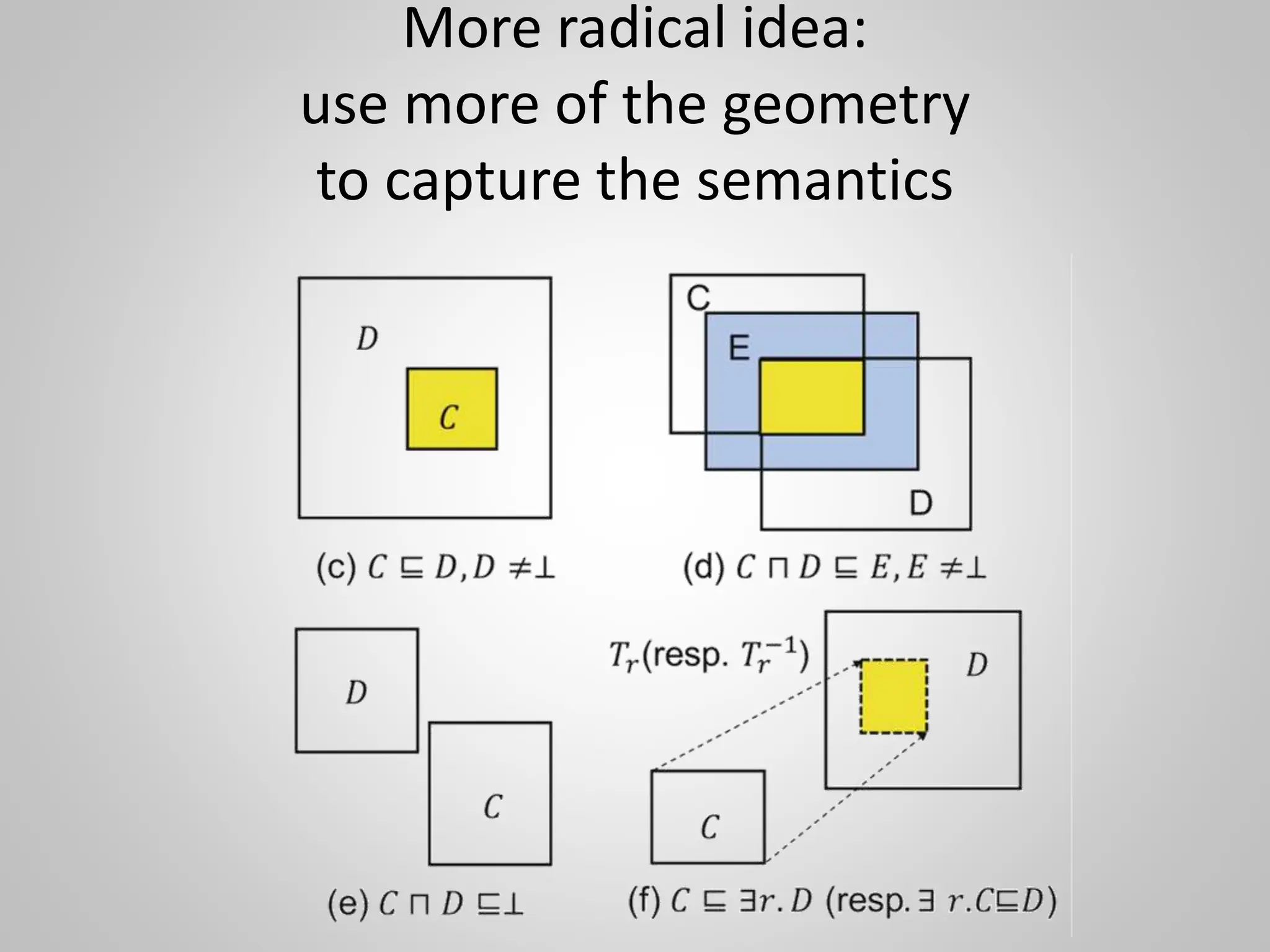

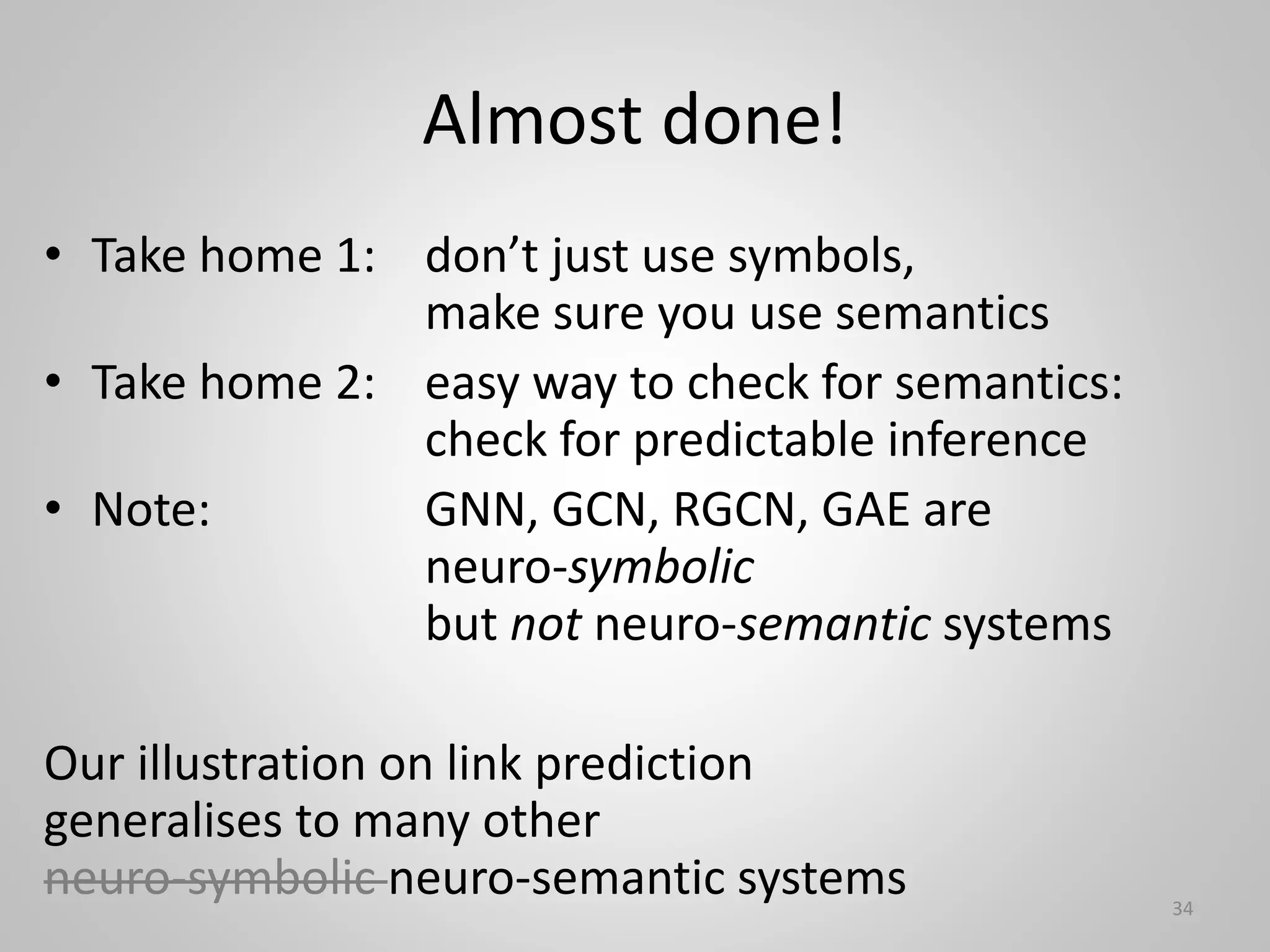

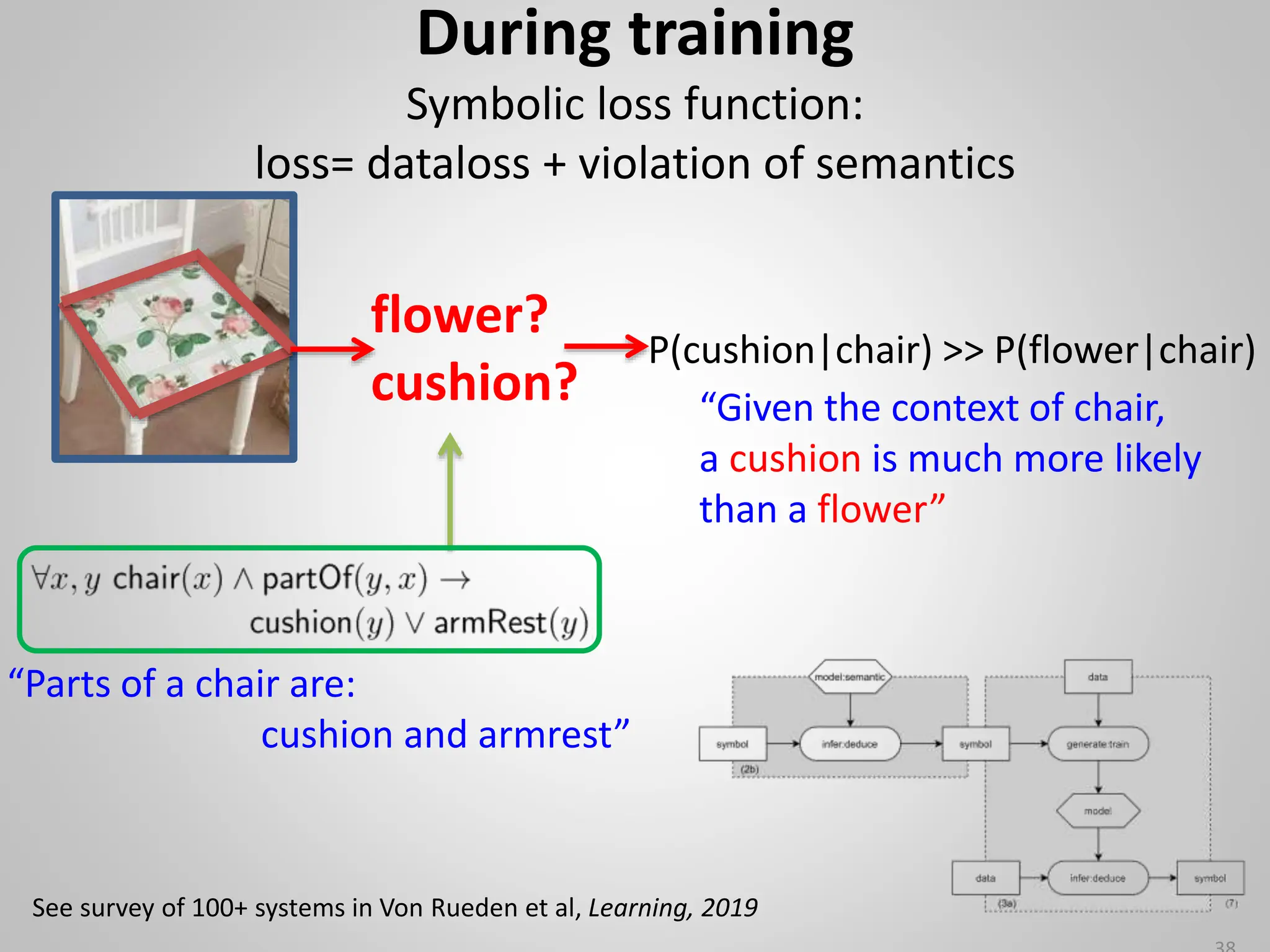

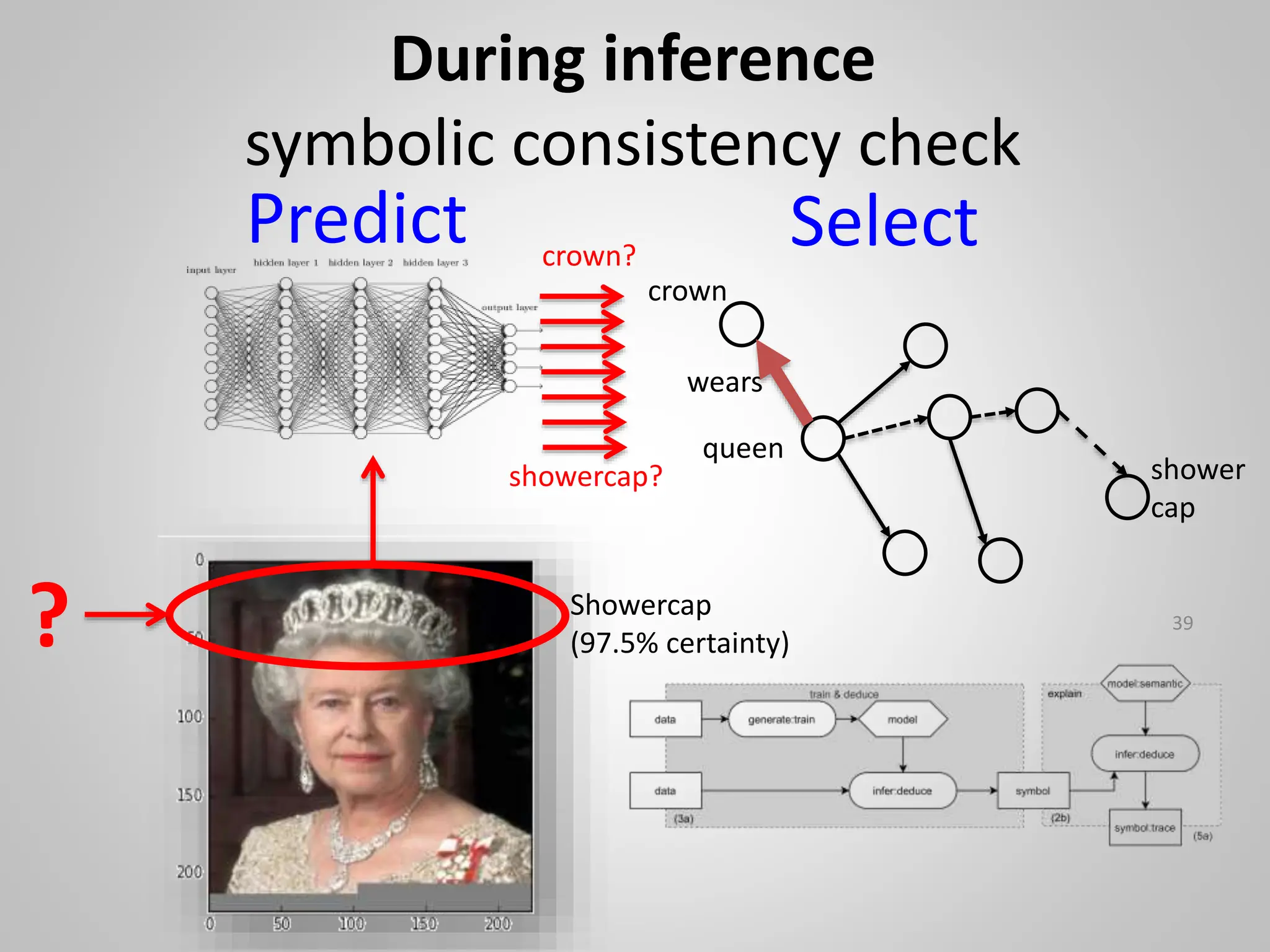

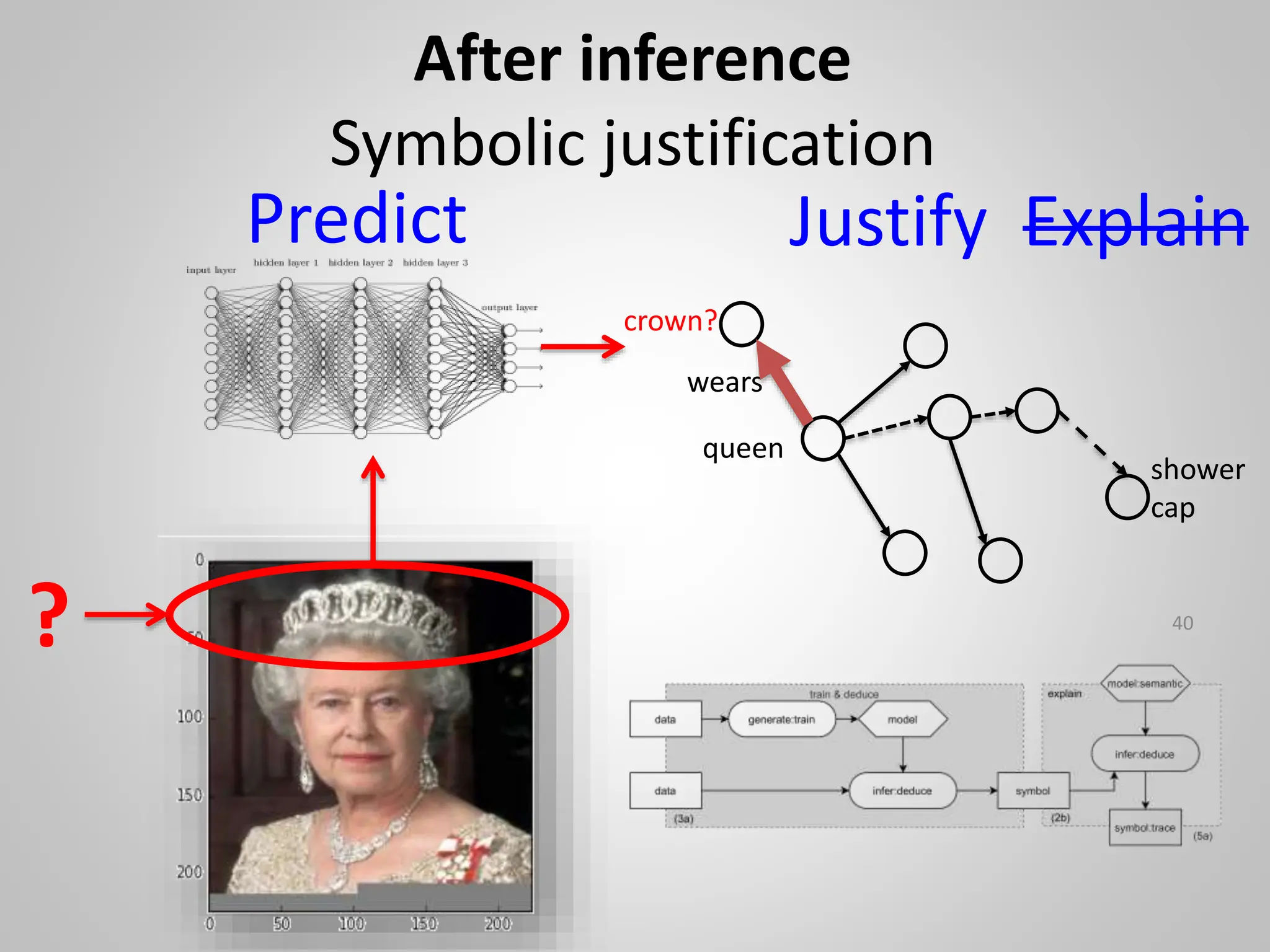

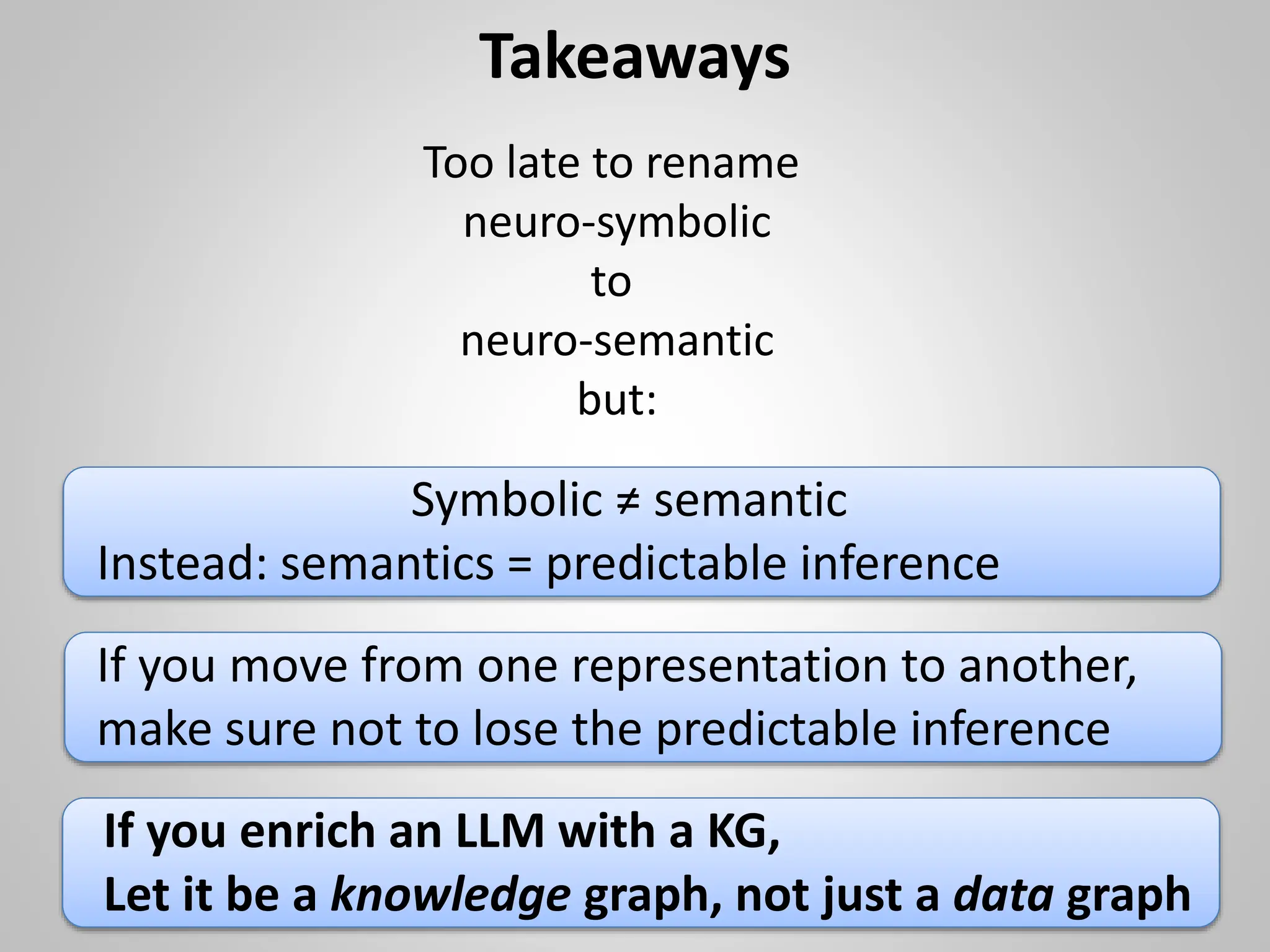

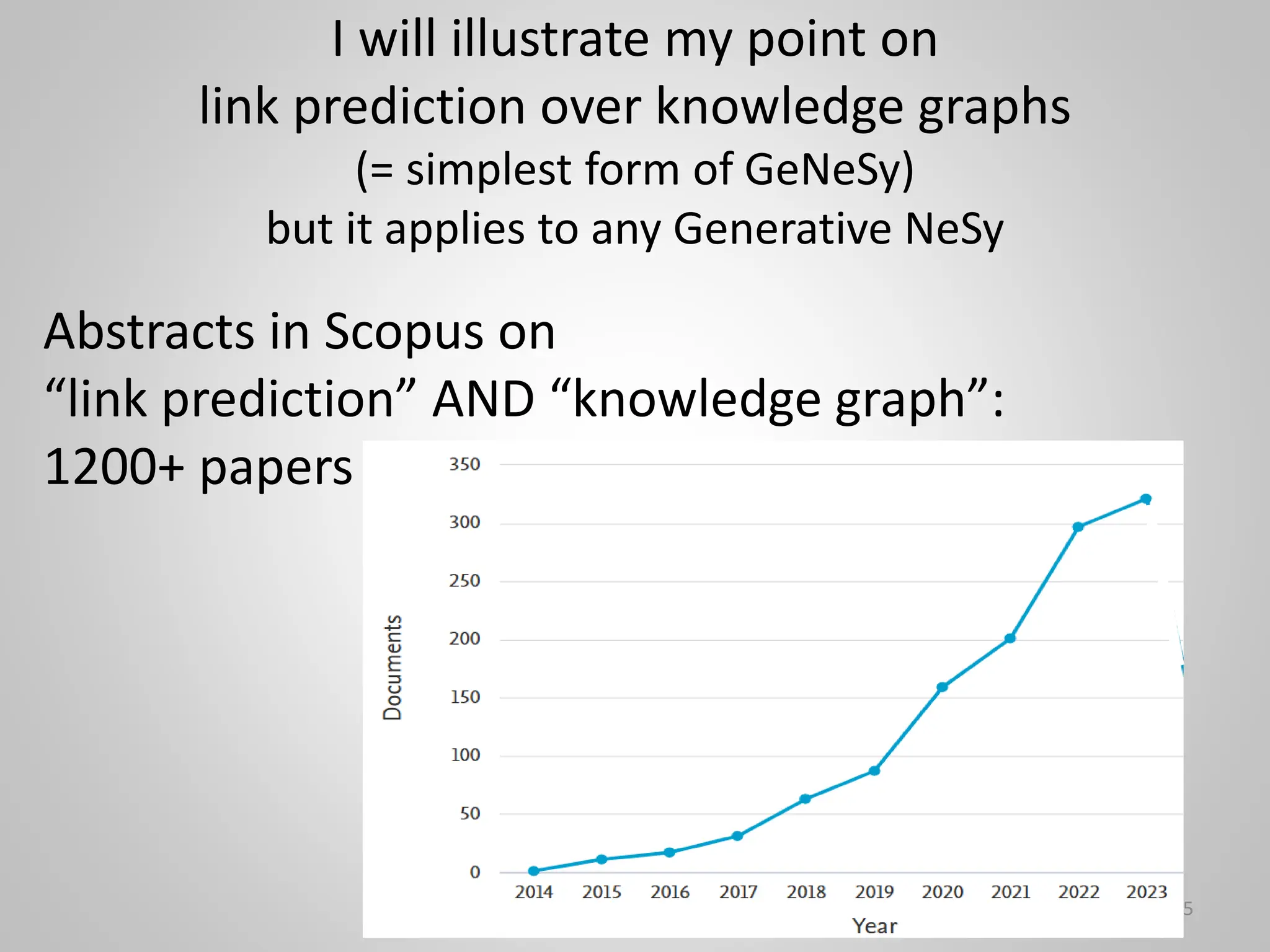

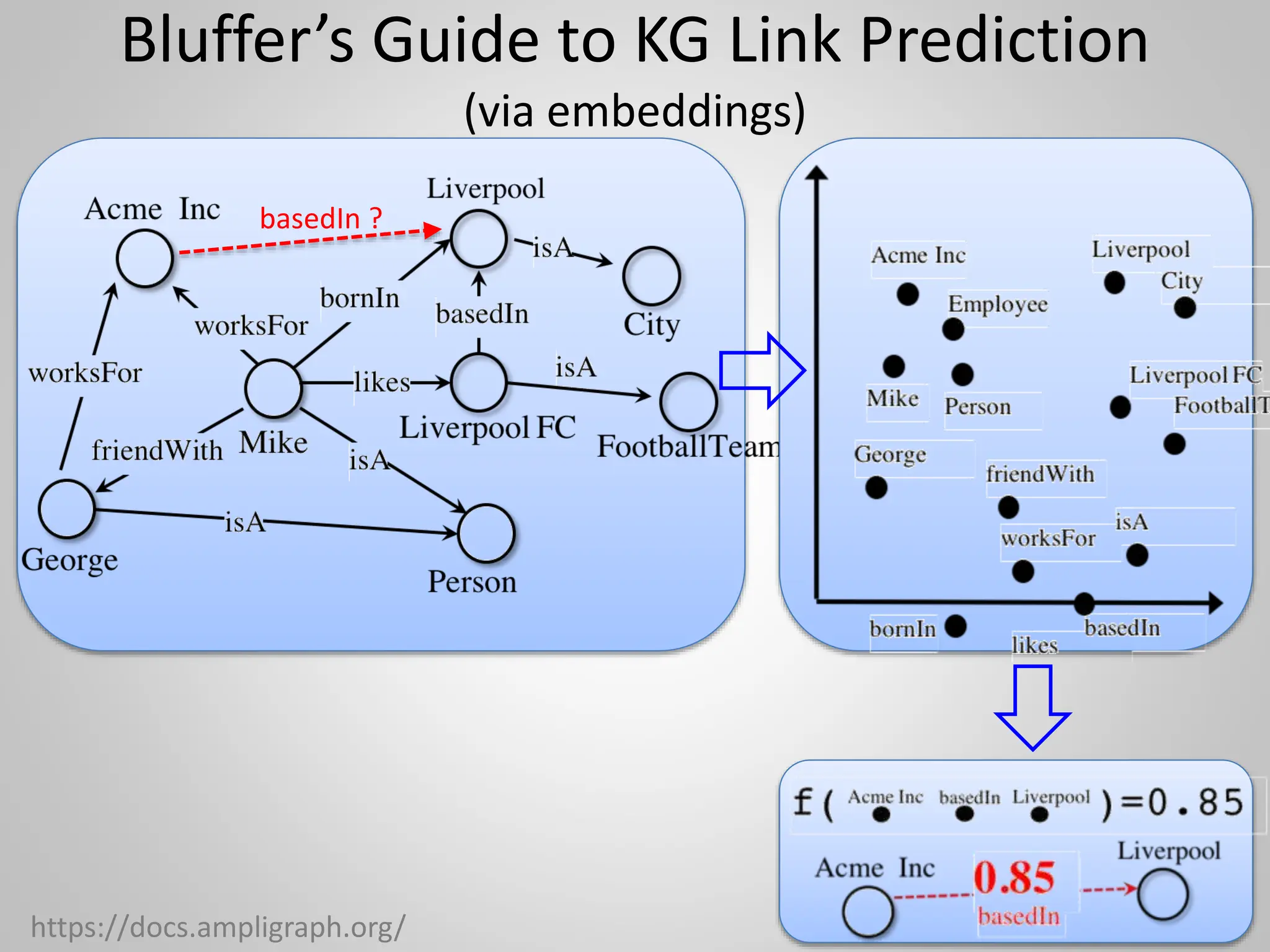

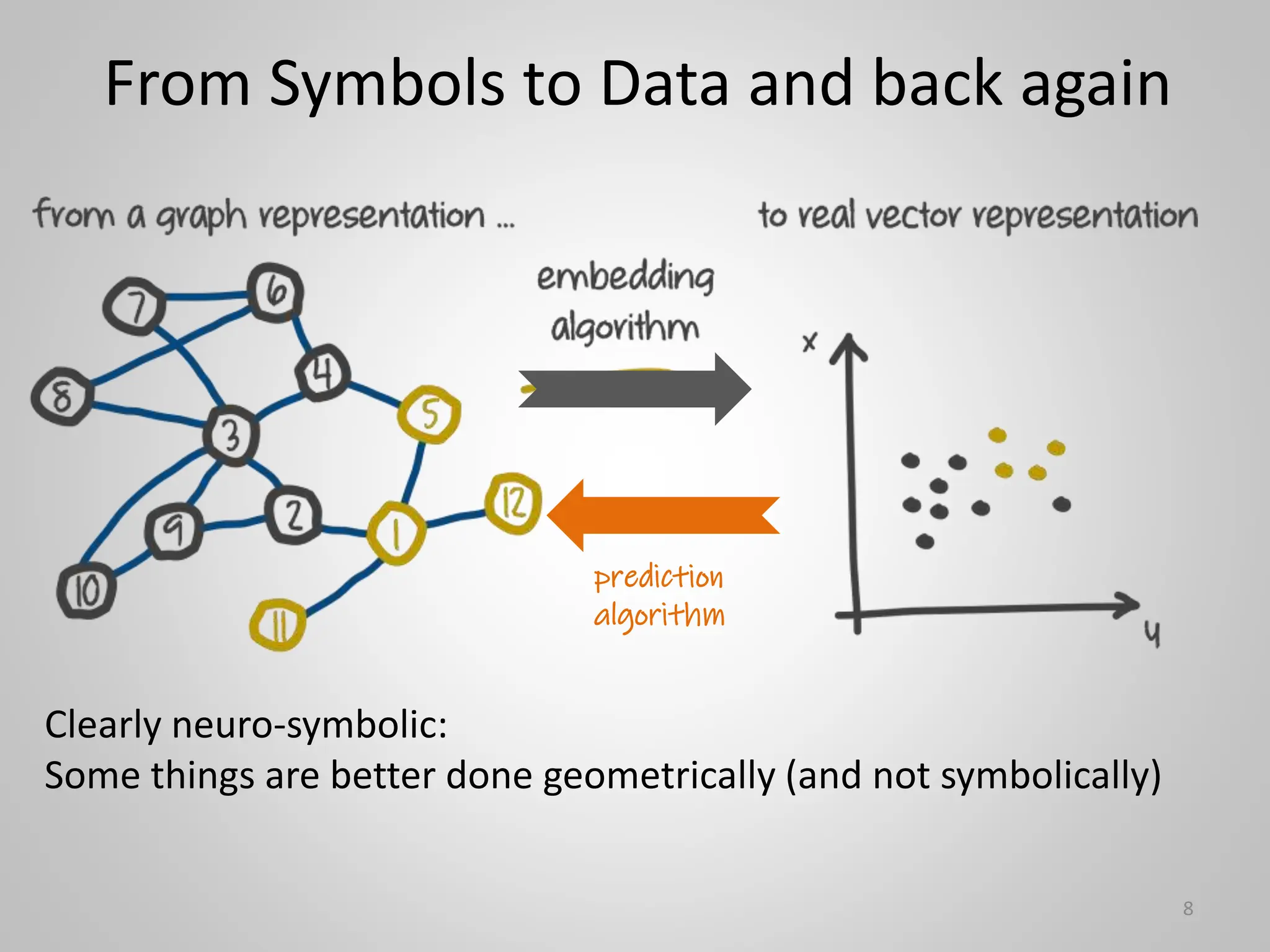

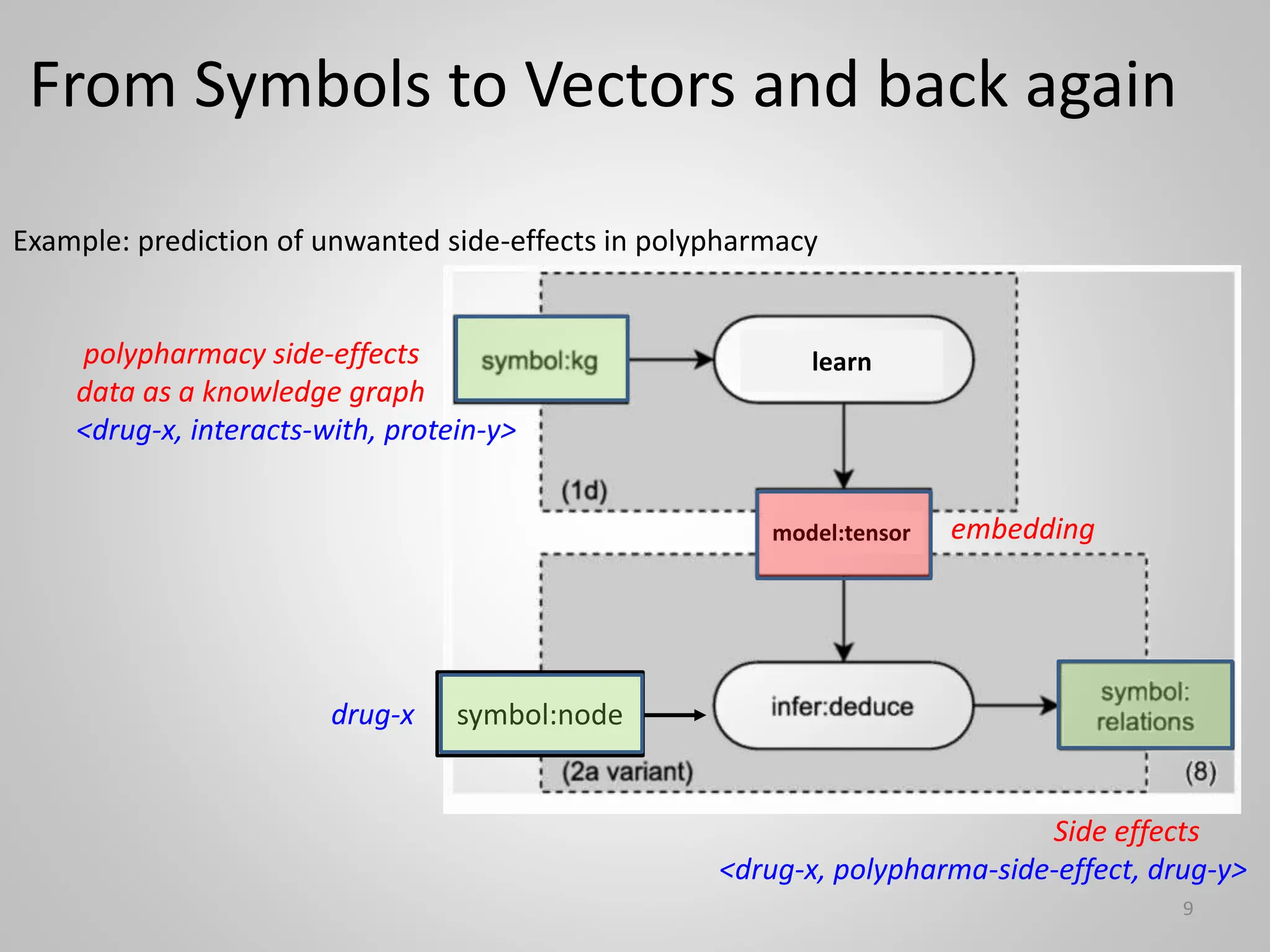

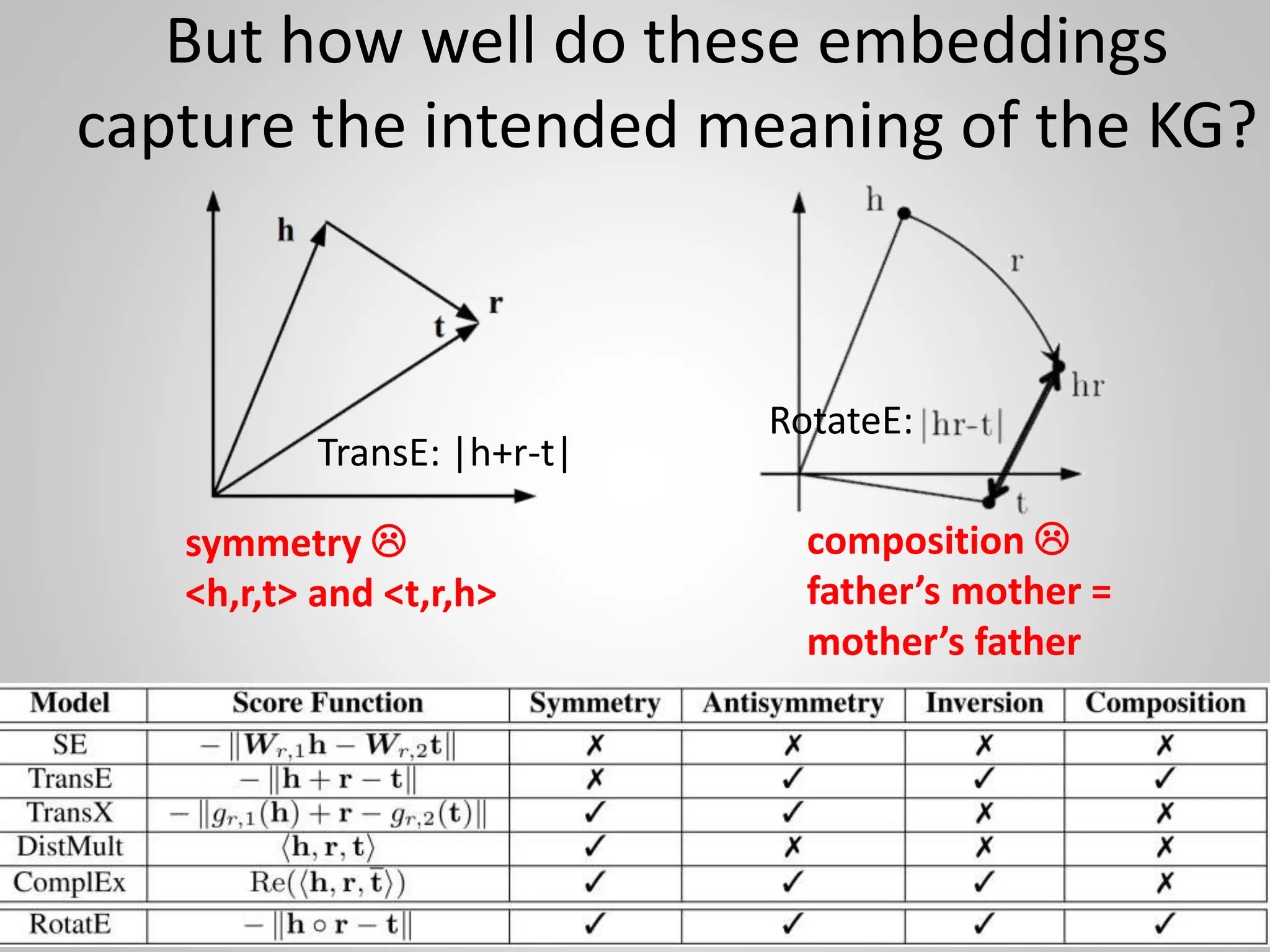

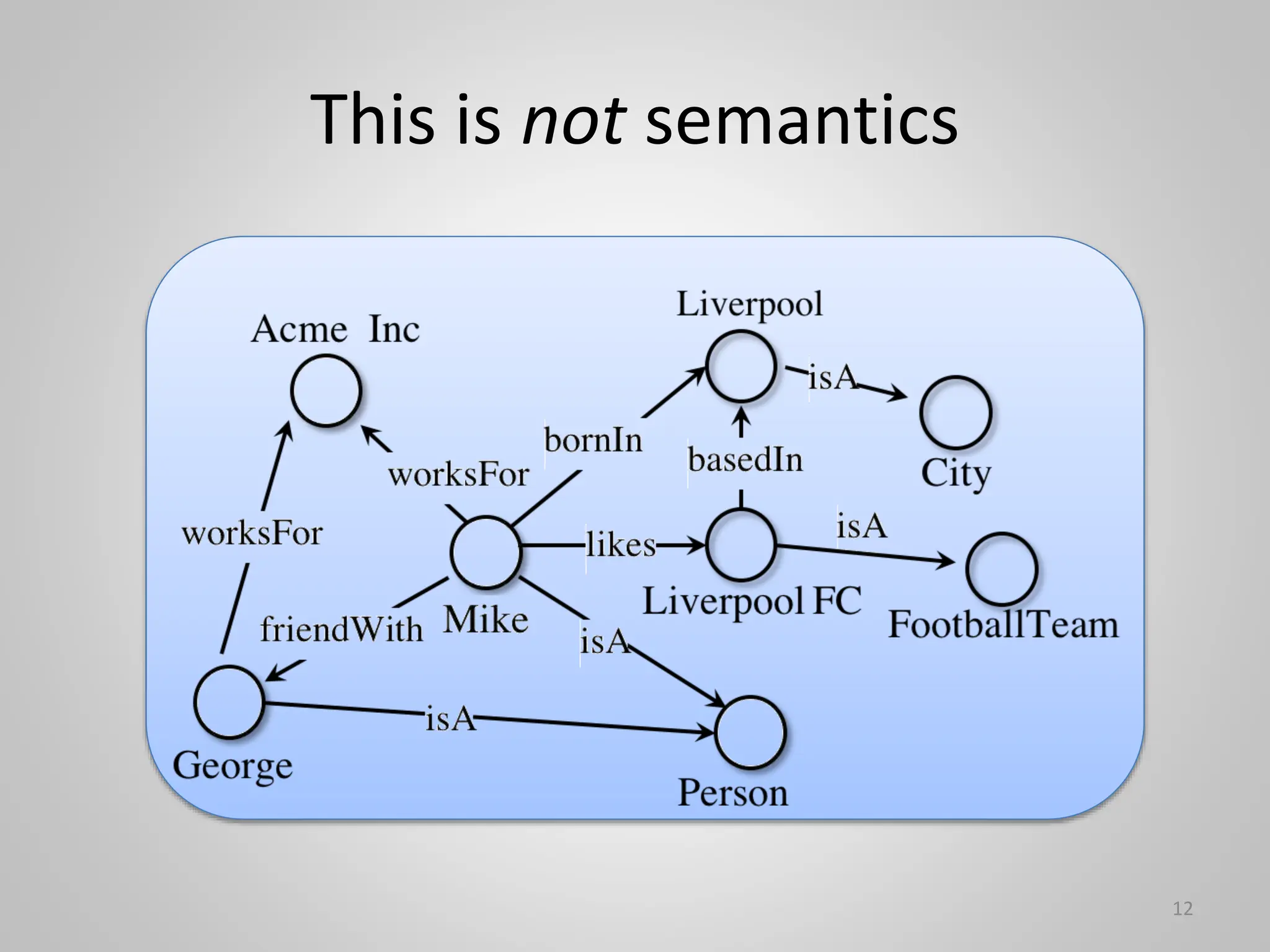

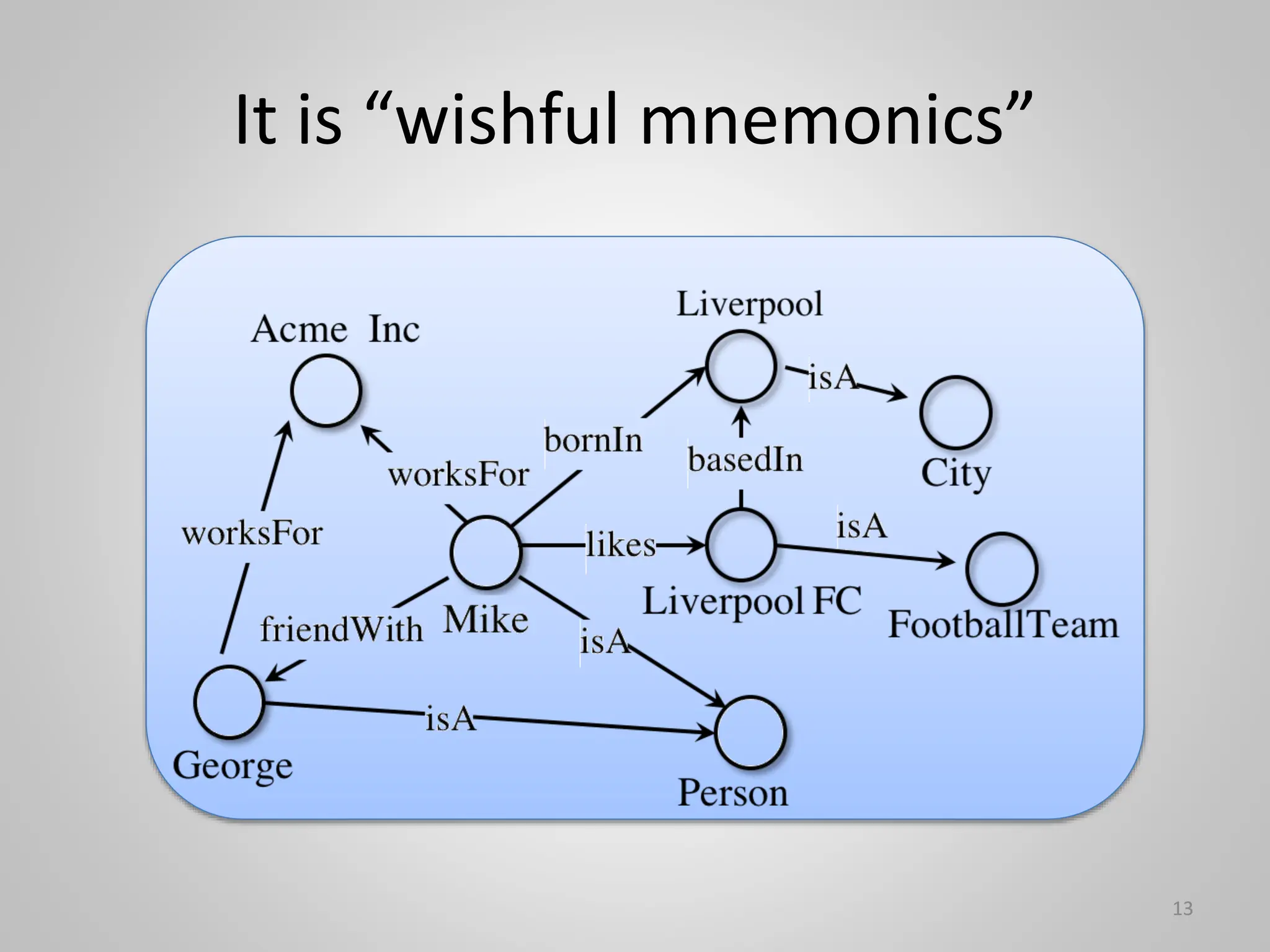

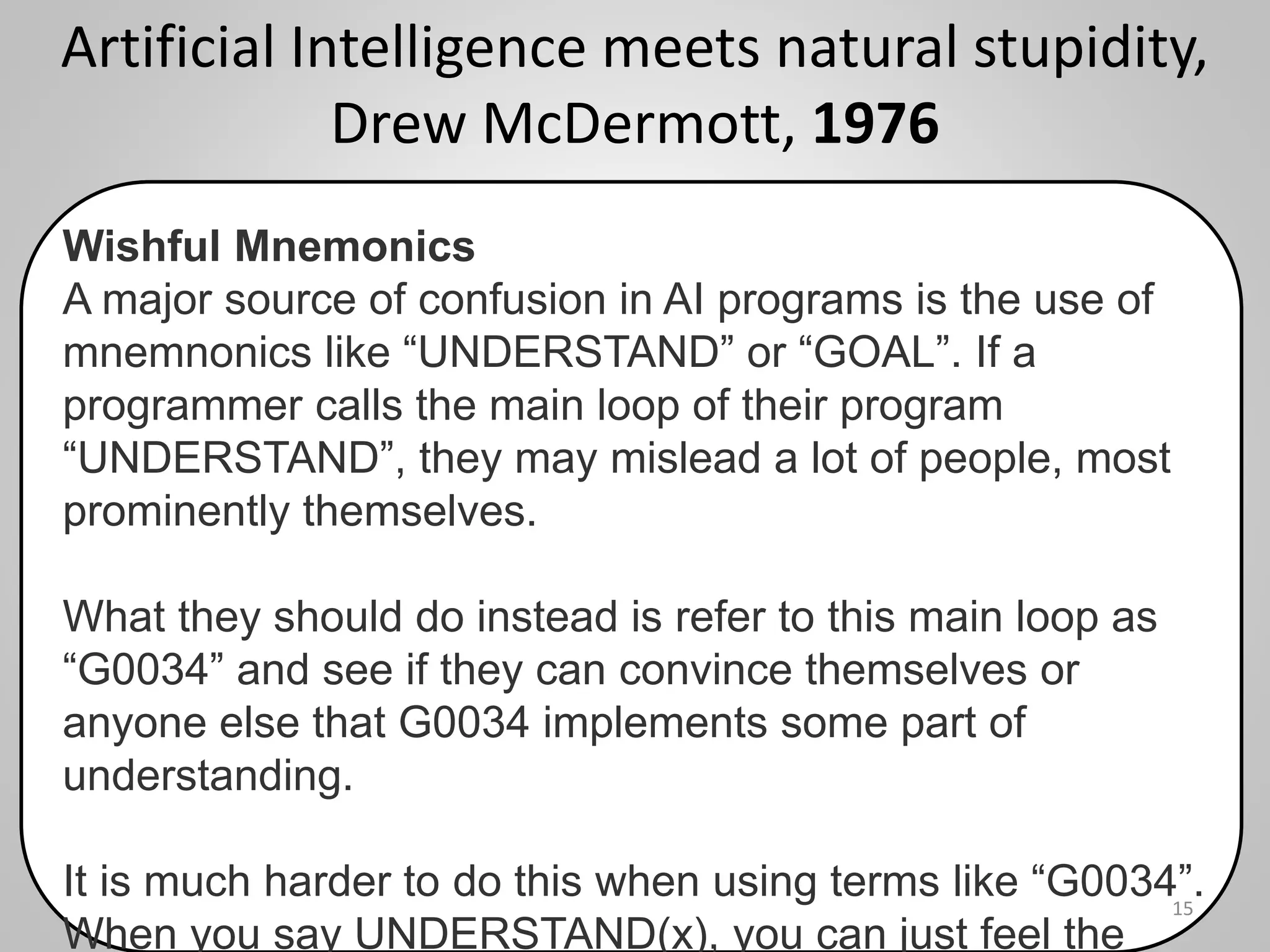

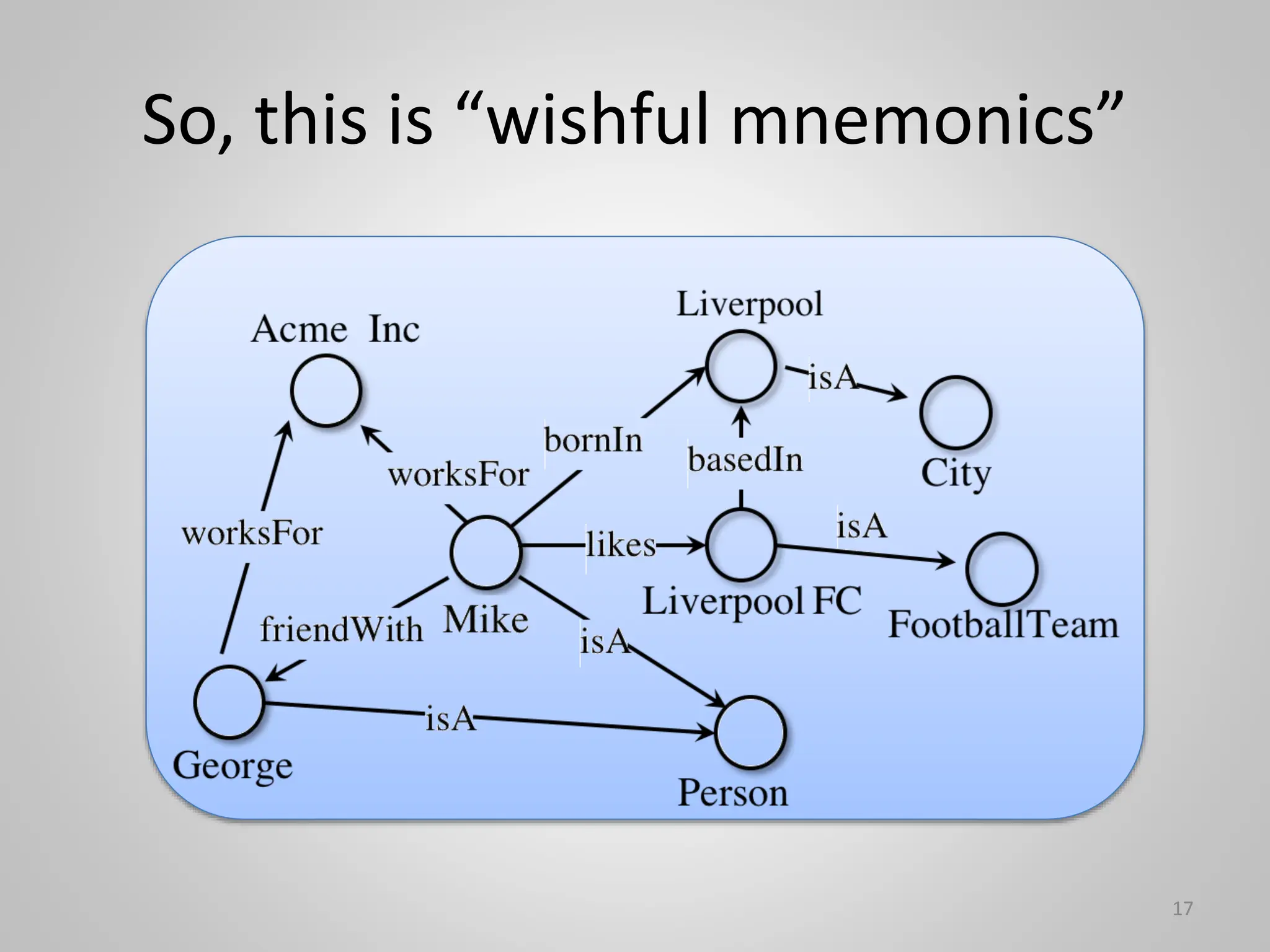

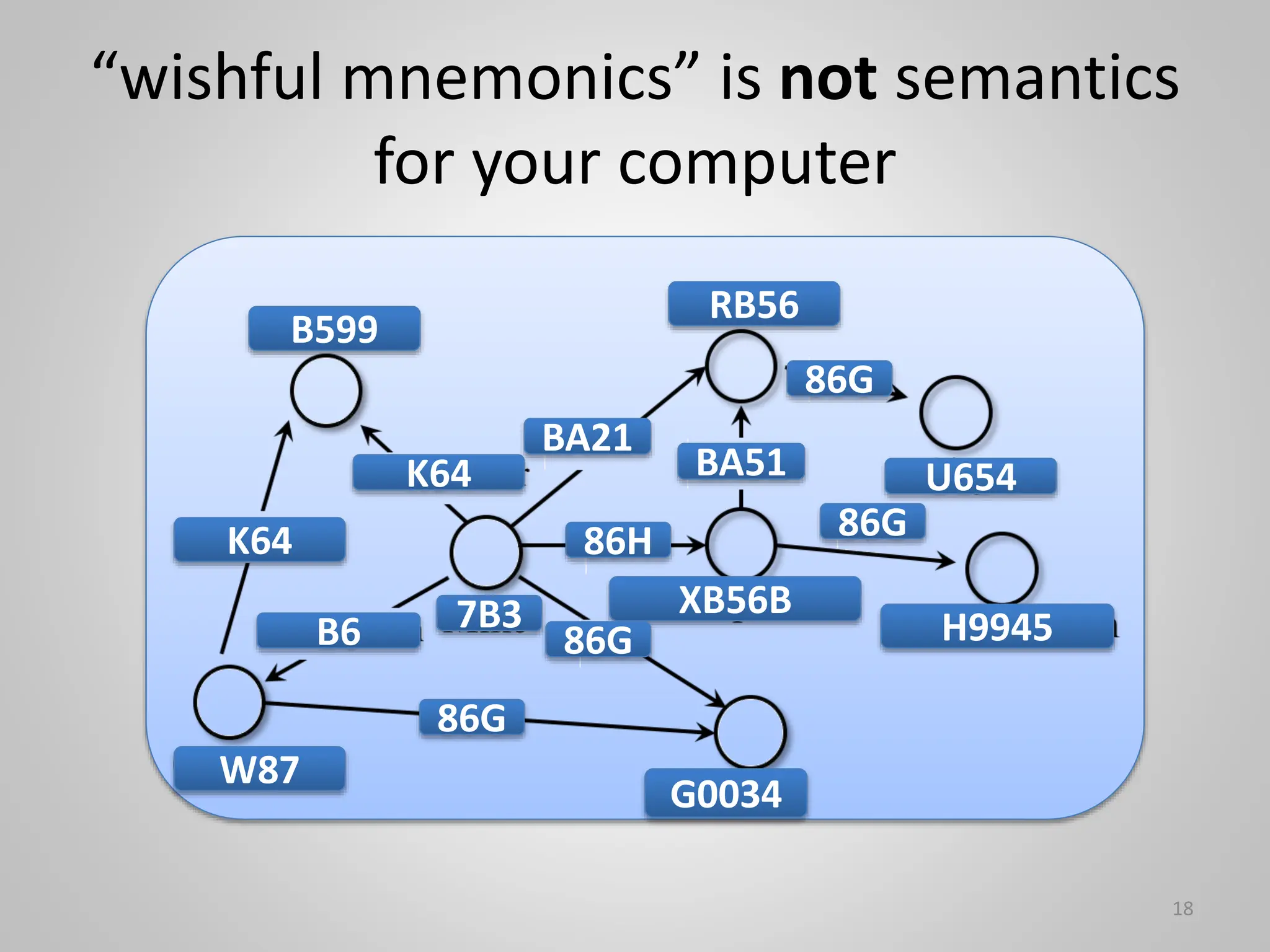

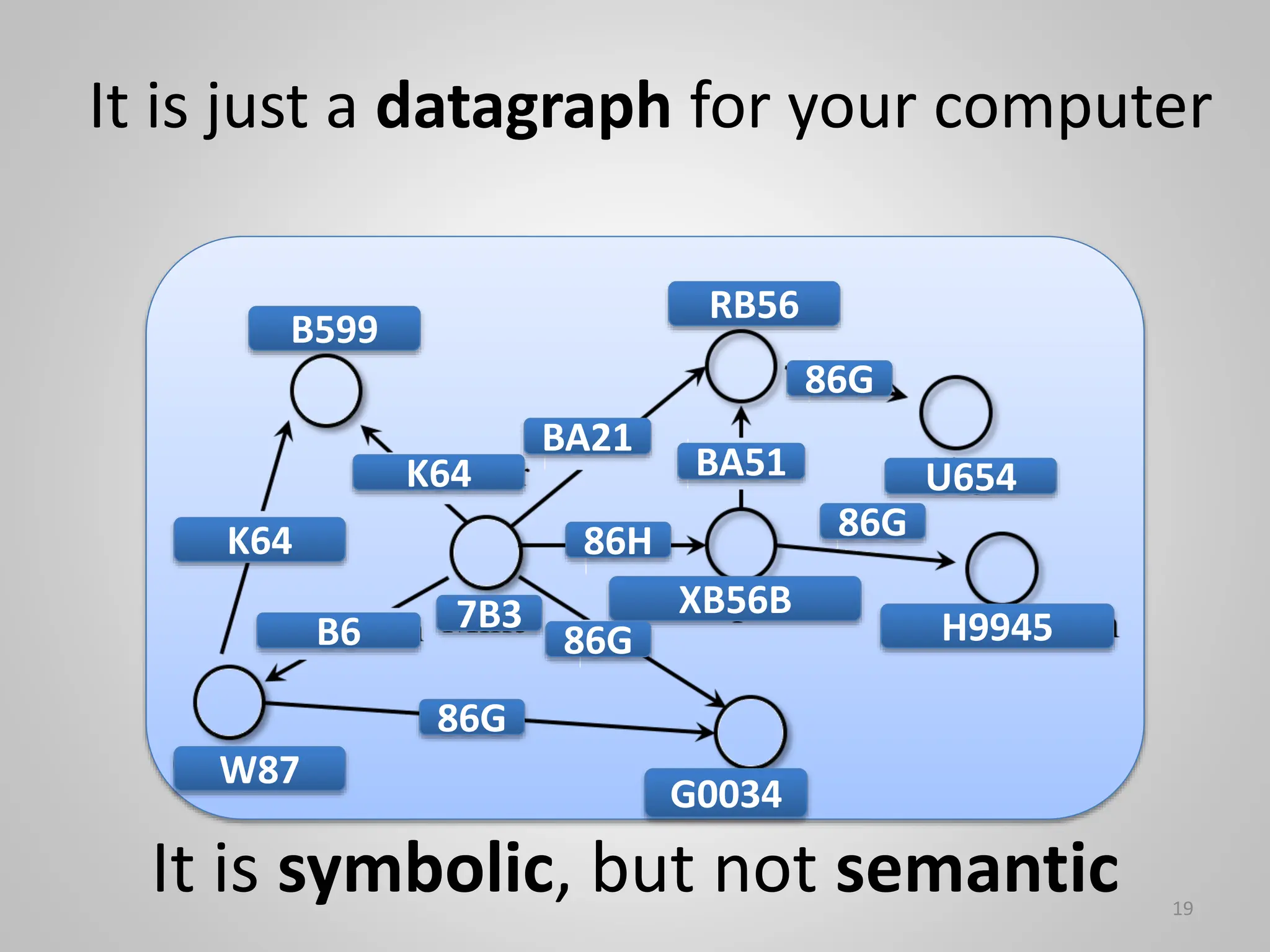

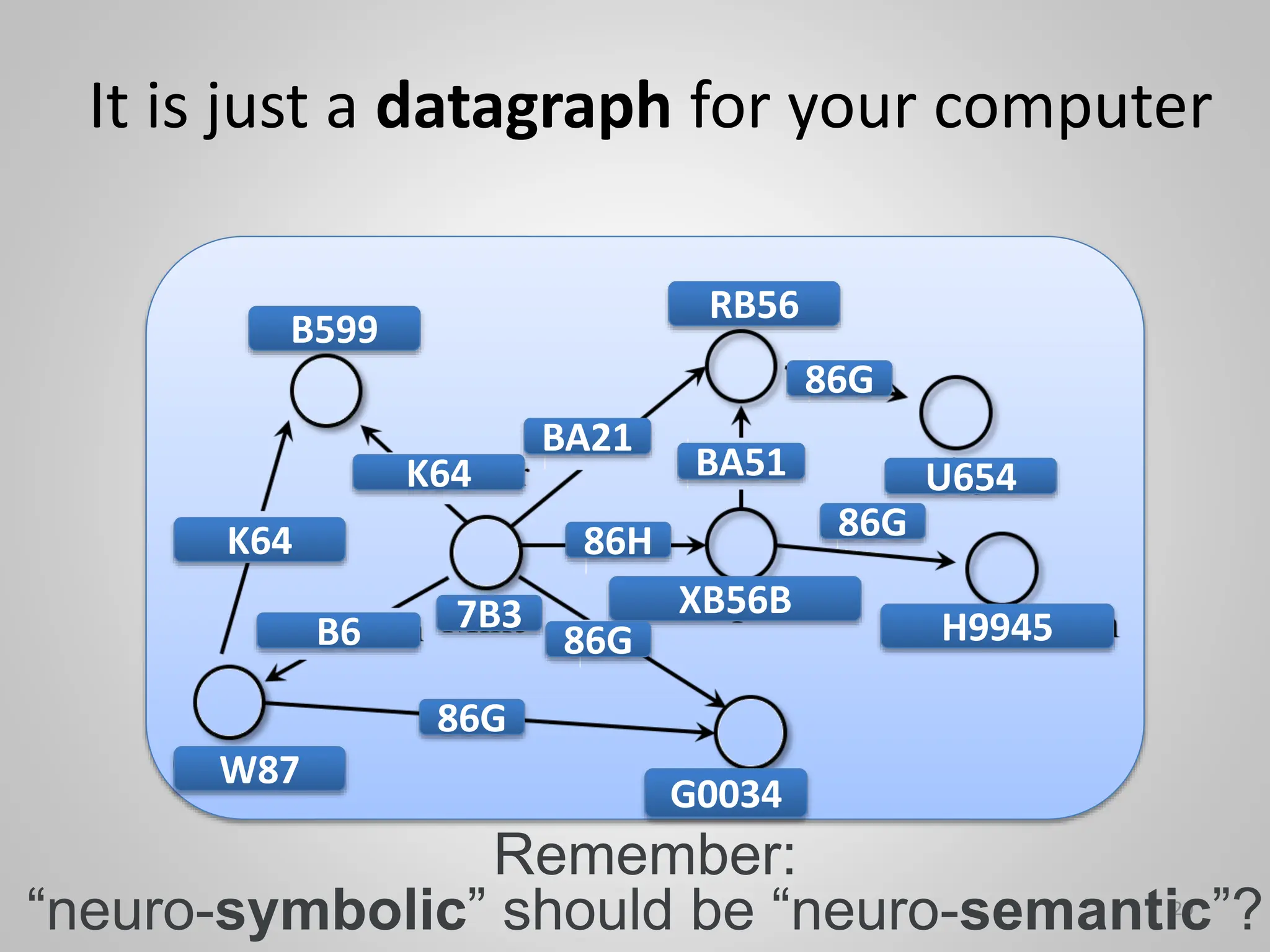

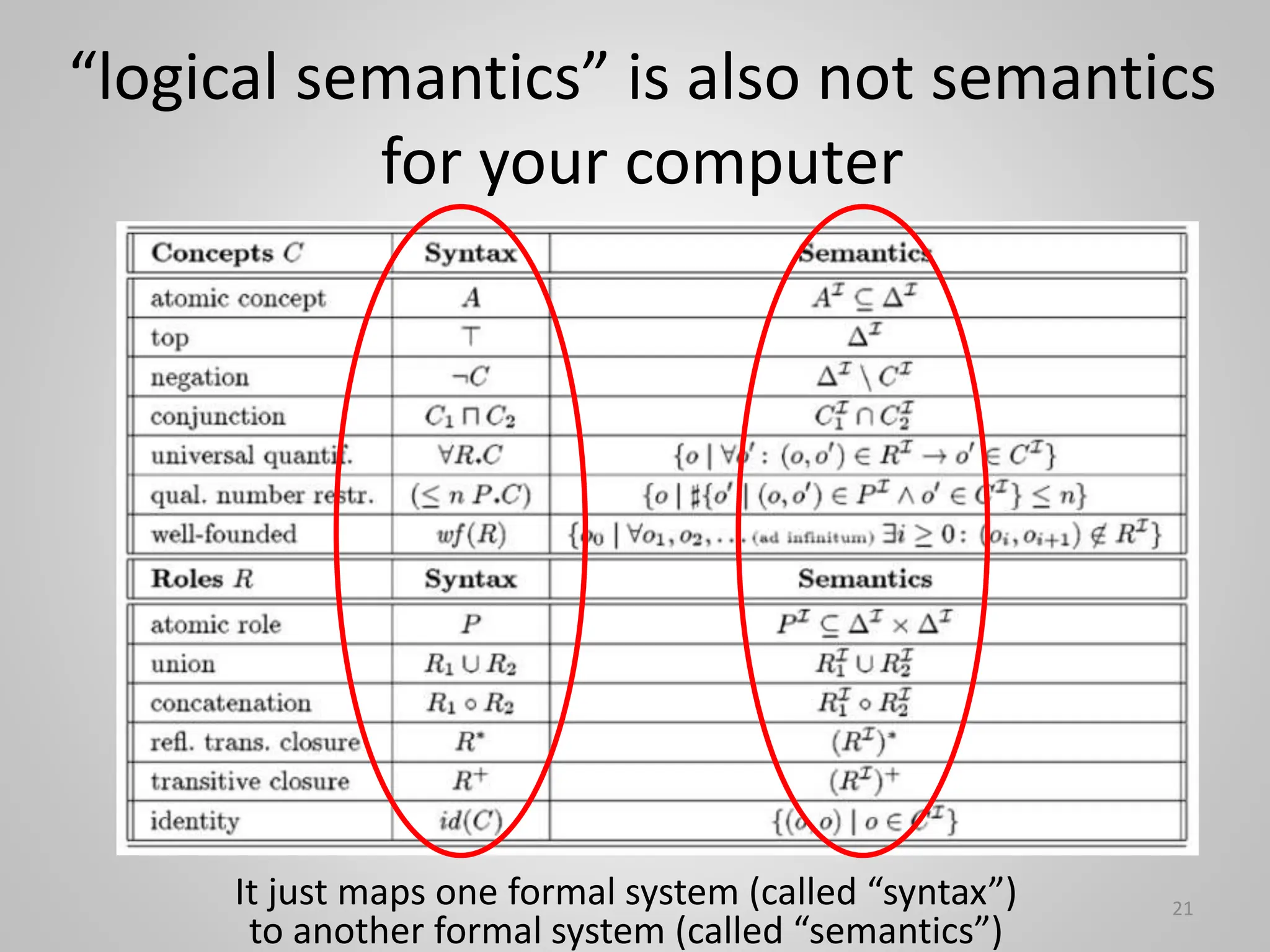

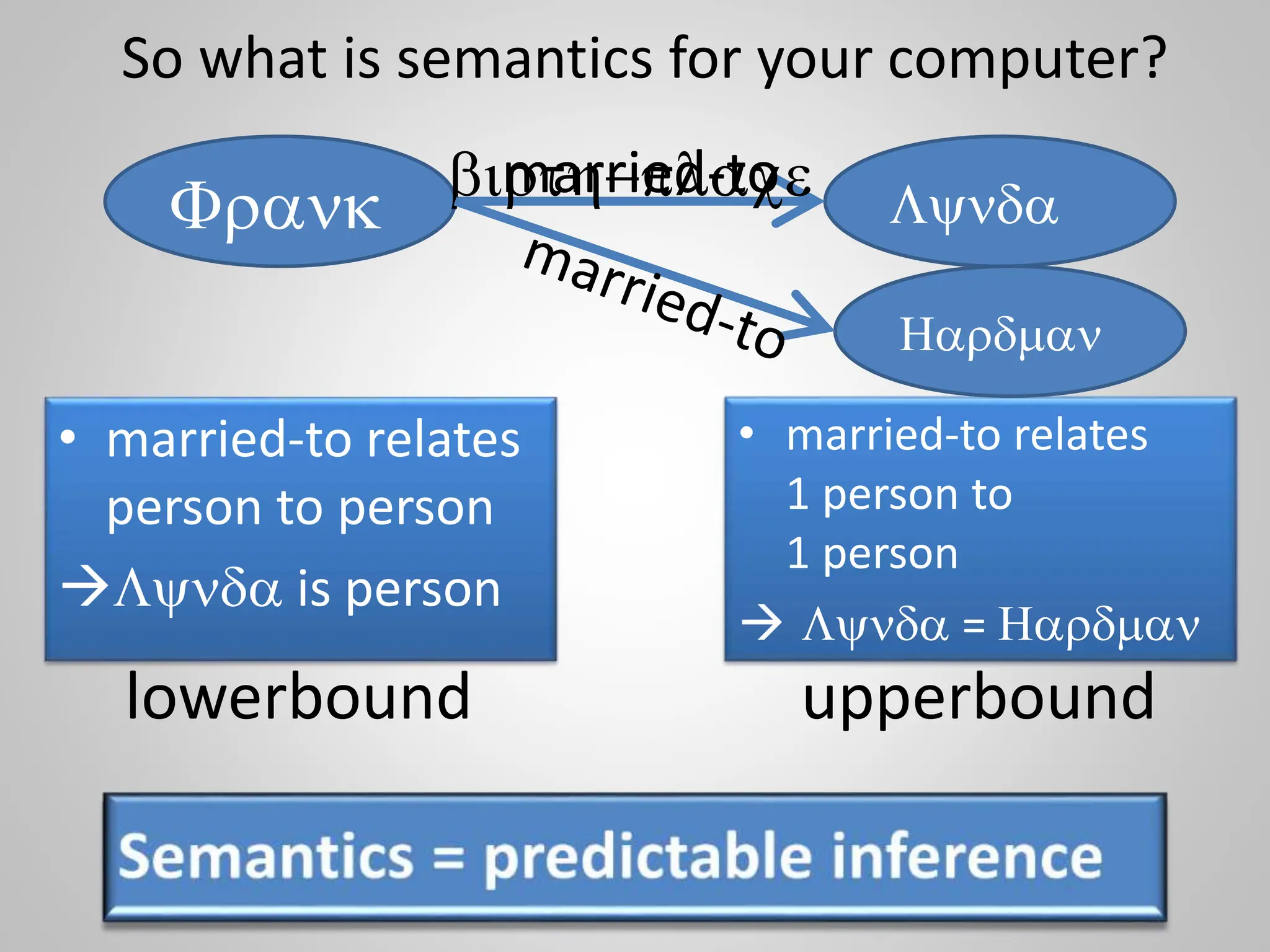

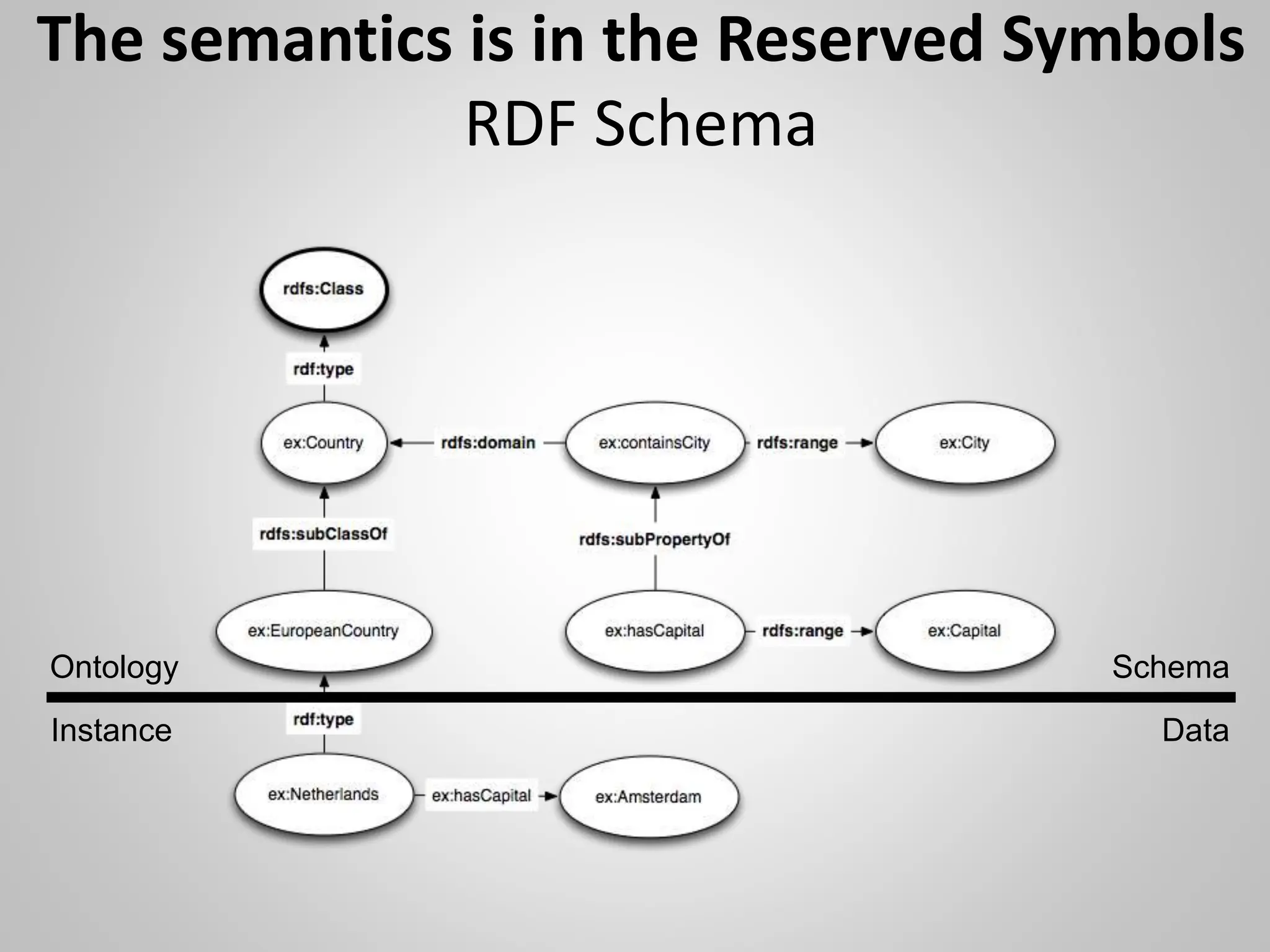

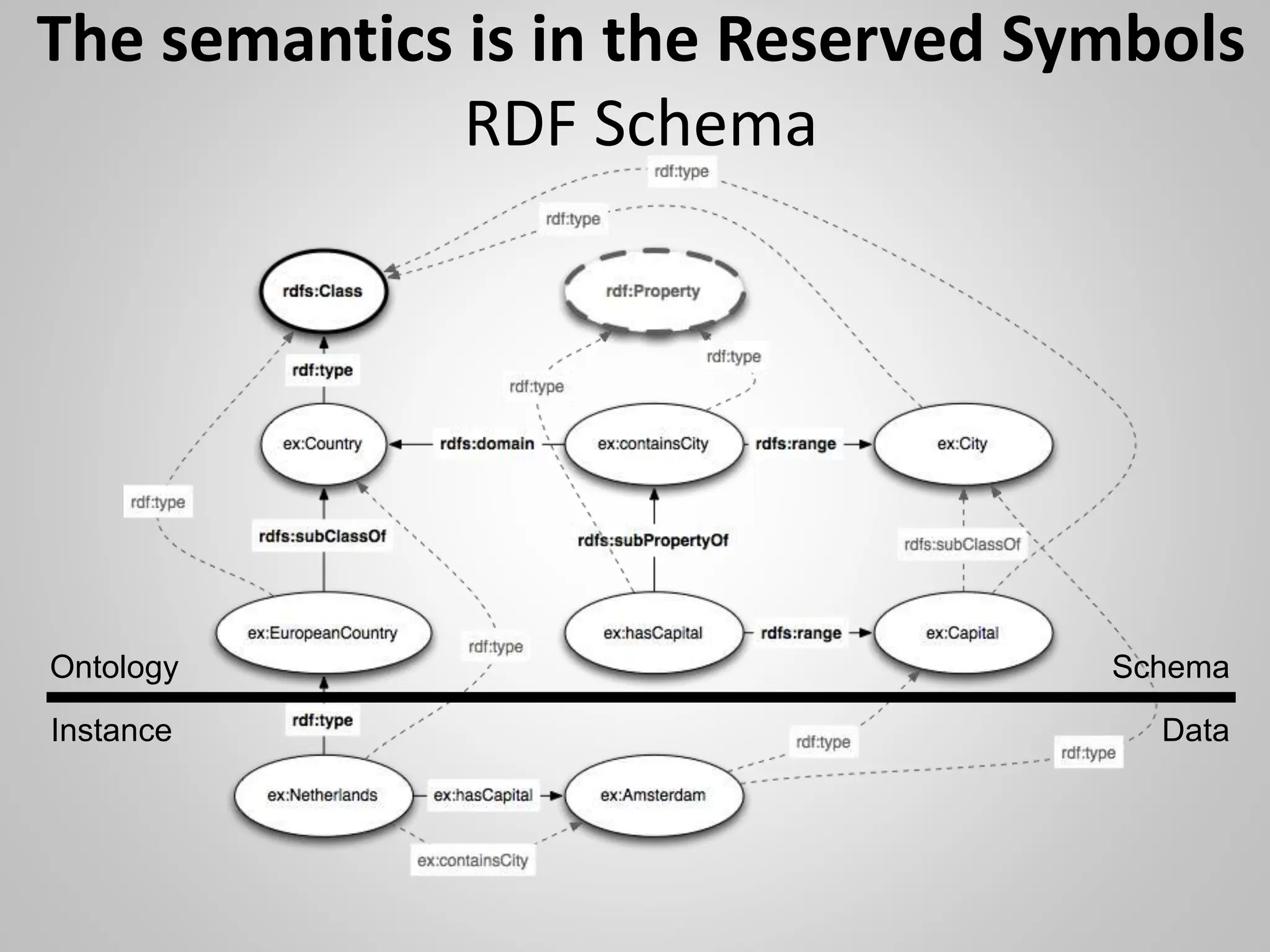

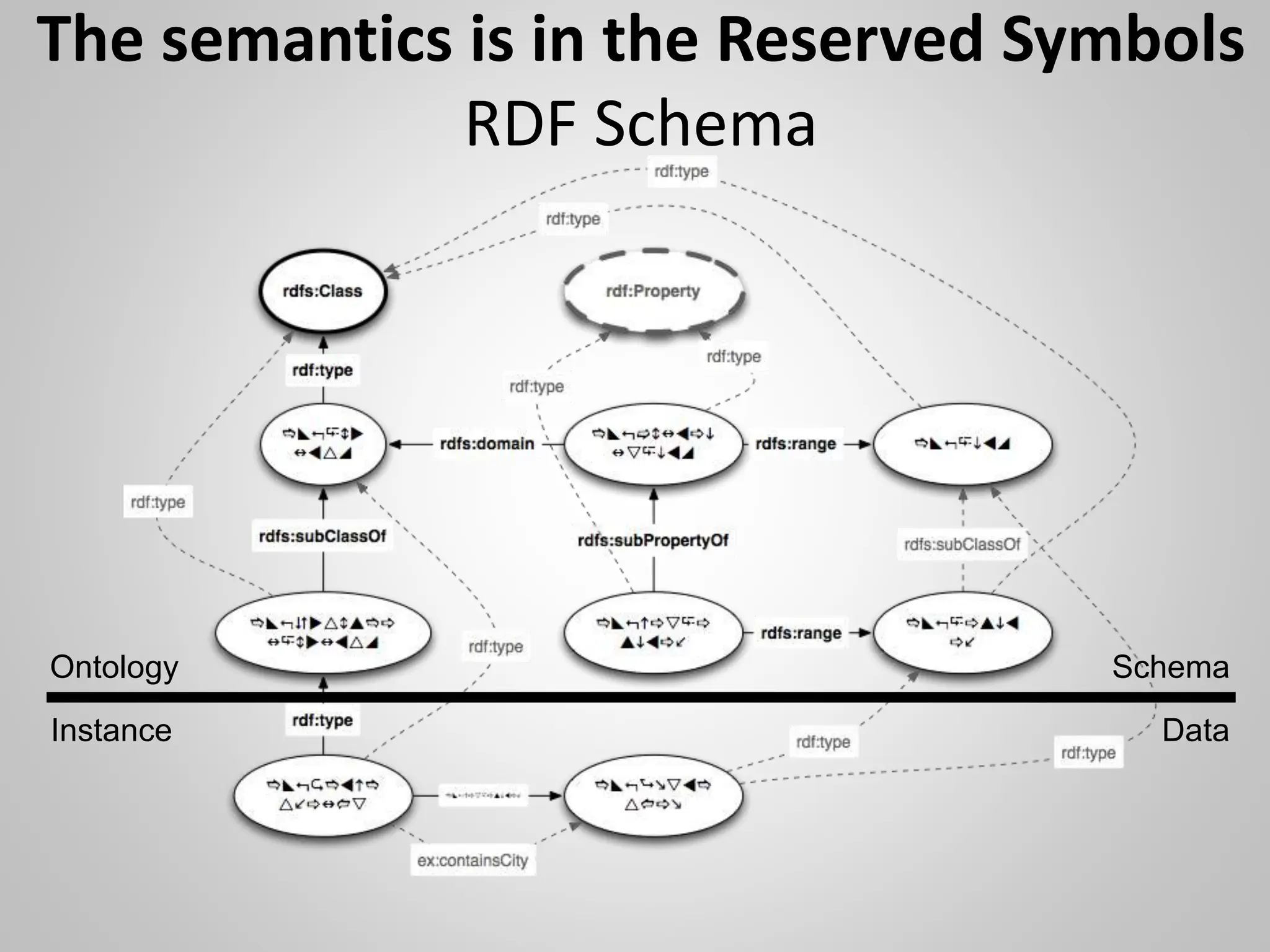

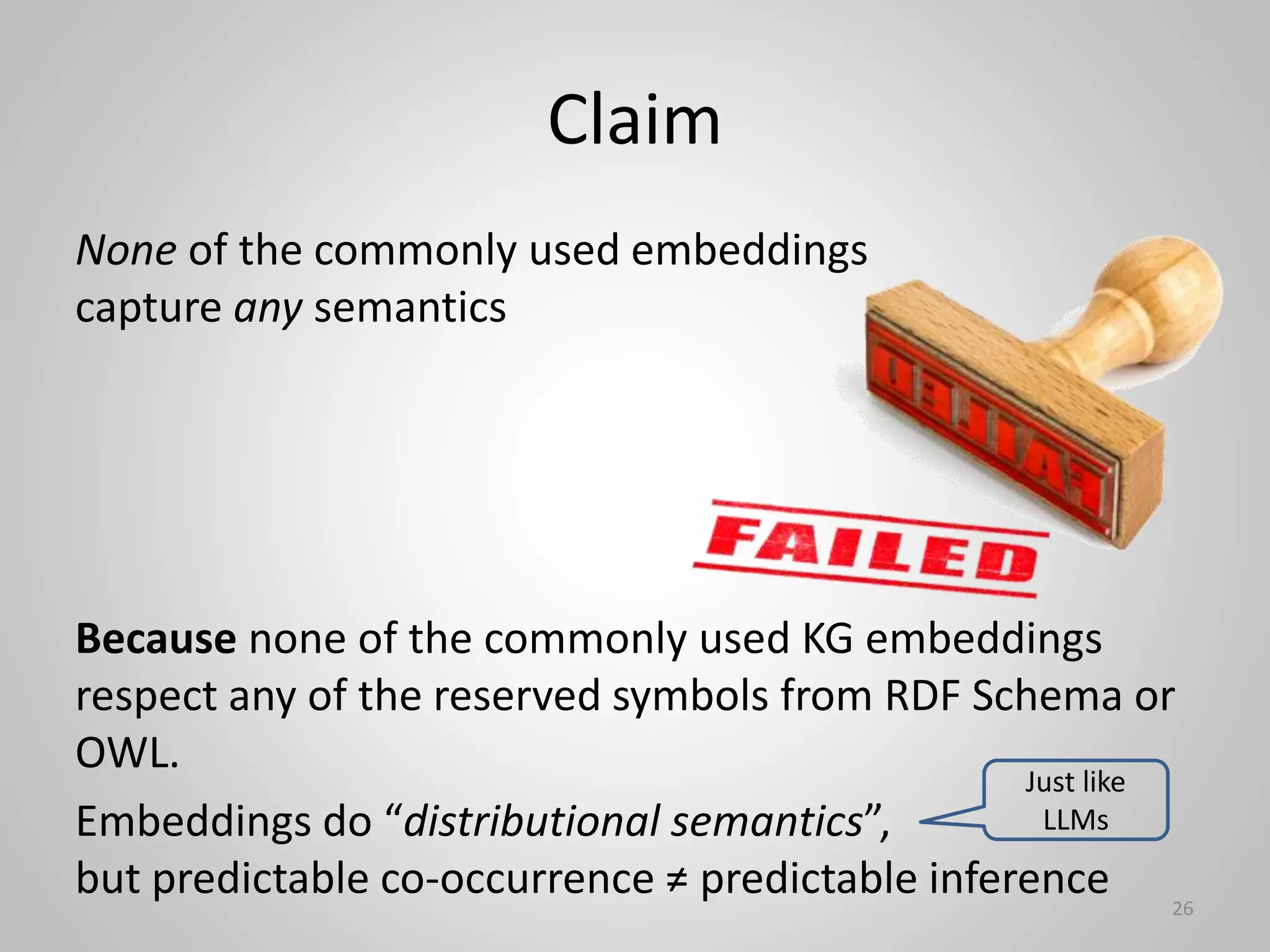

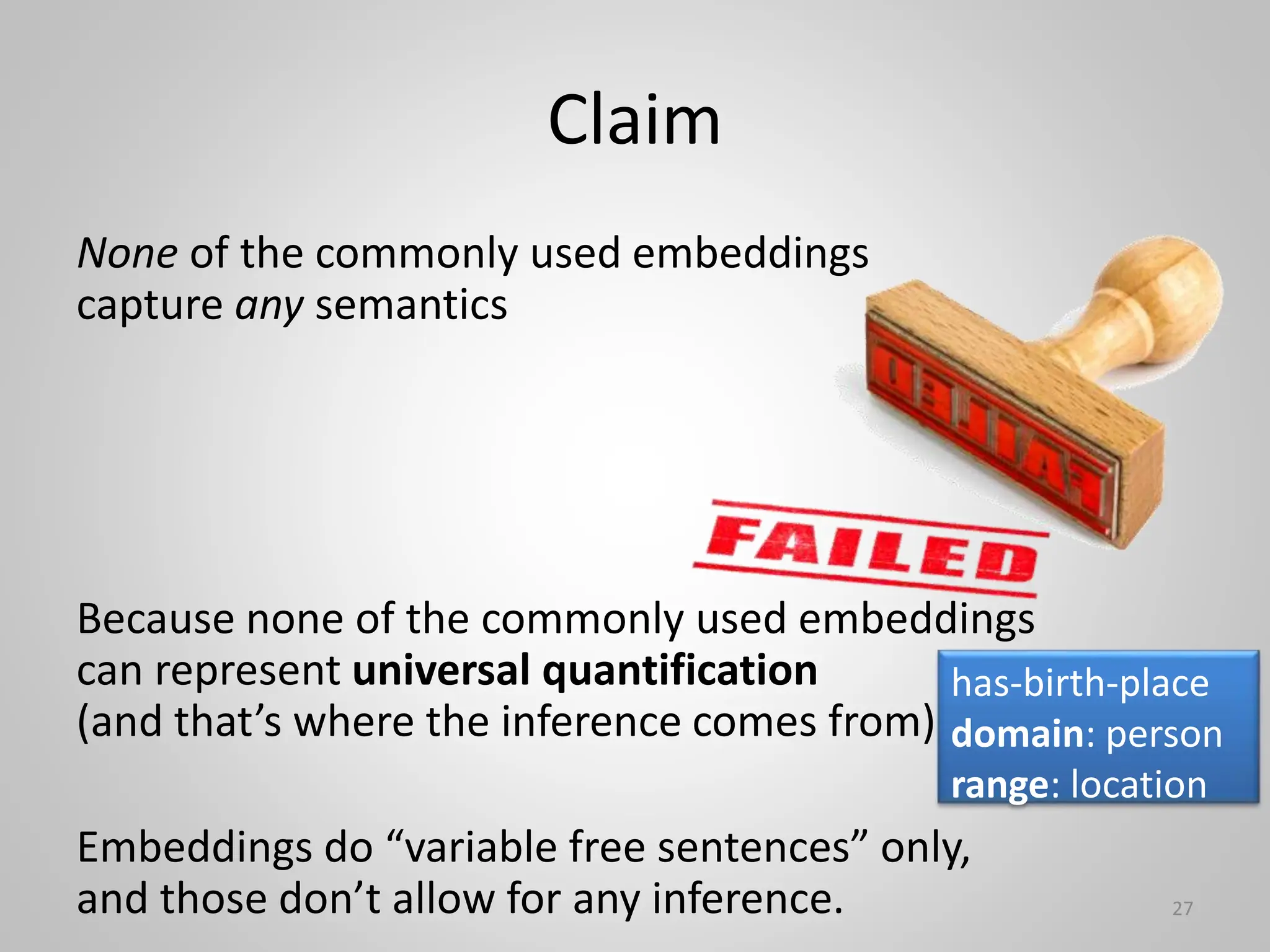

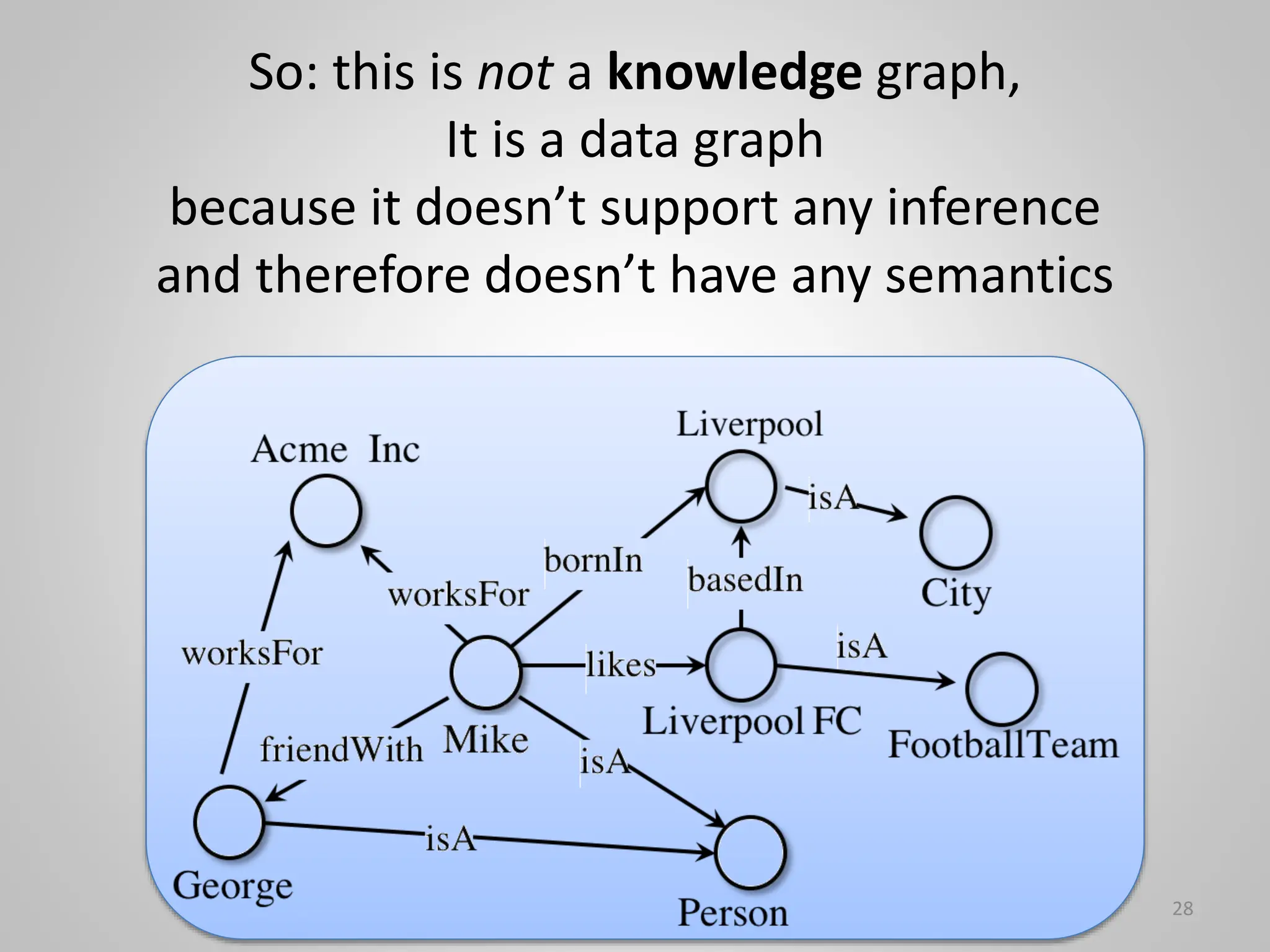

The document distinguishes 'neuro-symbolic' from 'neuro-semantic' systems and argues that many existing knowledge graph embeddings fail to capture true semantics, which the author ties to predictable inference. It critiques 'wishful mnemonics' in AI terminology, that is, names that mislead readers about what the underlying algorithms actually do. The author proposes methods for enhancing embeddings so that knowledge representations retain semantic meaning during AI system training.

![Make embeddings semantic again!

(Outrageous Ideas paper at ISWC 2018)

Abstract

The original Semantic Web vision foresees to describe entities in a way that the meaning can be interpreted both by machines and humans. [But] embeddings describe an entity as a numerical vector, without any semantics attached to the dimensions. Thus, embeddings are as far from the original Semantic Web vision as can be. In this paper, we make a claim for semantic embeddings.

Proposal 1: A Posteriori Learning of Interpretations.

Reconstruct a human-readable interpretation from the vector space.

Proposal 2: Pattern-based Embeddings.

Use patterns in the knowledge graph to choose human-interpretable dimensions in the vector space.

Neither of these is aimed at predictable inference

-> no semantics ](https://image.slidesharecdn.com/2024genesy-240528065743-6eabade7/75/Neuro-symbolic-is-not-enough-we-need-neuro-semantic-30-2048.jpg)
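To make Proposal 2 on this slide more concrete, here is a minimal sketch of what a pattern-based embedding could look like. It is not the method from the ISWC 2018 paper: the toy triples, the helper names (`pattern_dimensions`, `embed`), the choice of patterns (a predicate with a specific object, or a predicate with any object, written `"*"`), and the `min_support` threshold are all assumptions made for illustration. Each vector dimension corresponds to a named graph pattern, so a human can read what a 1 or 0 means. As the slide notes, this gives interpretability of the dimensions, not predictable inference.

```python
from collections import Counter

# Toy knowledge graph as (subject, predicate, object) triples.
# All entity and predicate names are hypothetical.
TRIPLES = [
    ("Berlin", "type", "City"),
    ("Berlin", "capitalOf", "Germany"),
    ("Paris", "type", "City"),
    ("Paris", "capitalOf", "France"),
    ("Hamburg", "type", "City"),
    ("Germany", "type", "Country"),
    ("France", "type", "Country"),
]


def entity_patterns(entity, triples):
    """Return the set of patterns an entity matches.

    Assumed pattern shapes: (predicate, object) and (predicate, "*"),
    where "*" stands for "any object".
    """
    patterns = set()
    for s, p, o in triples:
        if s == entity:
            patterns.add((p, o))
            patterns.add((p, "*"))
    return patterns


def pattern_dimensions(triples, min_support=2):
    """Choose as dimensions the patterns matched by at least min_support entities."""
    support = Counter()
    for entity in {s for s, _, _ in triples}:
        support.update(entity_patterns(entity, triples))
    return sorted(pat for pat, count in support.items() if count >= min_support)


def embed(entity, triples, dimensions):
    """Binary vector: 1 if the entity matches the pattern naming that dimension."""
    matched = entity_patterns(entity, triples)
    return [1 if dim in matched else 0 for dim in dimensions]


DIMENSIONS = pattern_dimensions(TRIPLES)
VECTORS = {
    entity: embed(entity, TRIPLES, DIMENSIONS)
    for entity in sorted({s for s, _, _ in TRIPLES})
}

if __name__ == "__main__":
    print("dimensions:", DIMENSIONS)
    for entity, vector in VECTORS.items():
        print(entity, vector)
```

In this sketch every dimension carries a readable label such as `("type", "City")`, which is the sense in which the dimensions are human-interpretable. Nothing here licenses any inference from the vectors, which is the gap the slide's closing remark points at.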