This document summarizes Jeremy Carroll's presentation on HBase operations at Pinterest. It describes how Pinterest runs HBase across 5 clusters serving billions of page views. Key points include:

- HBase is deployed on Amazon Web Services using CDH, with customizations to cope with EC2 instance instability and the lack of rack locality

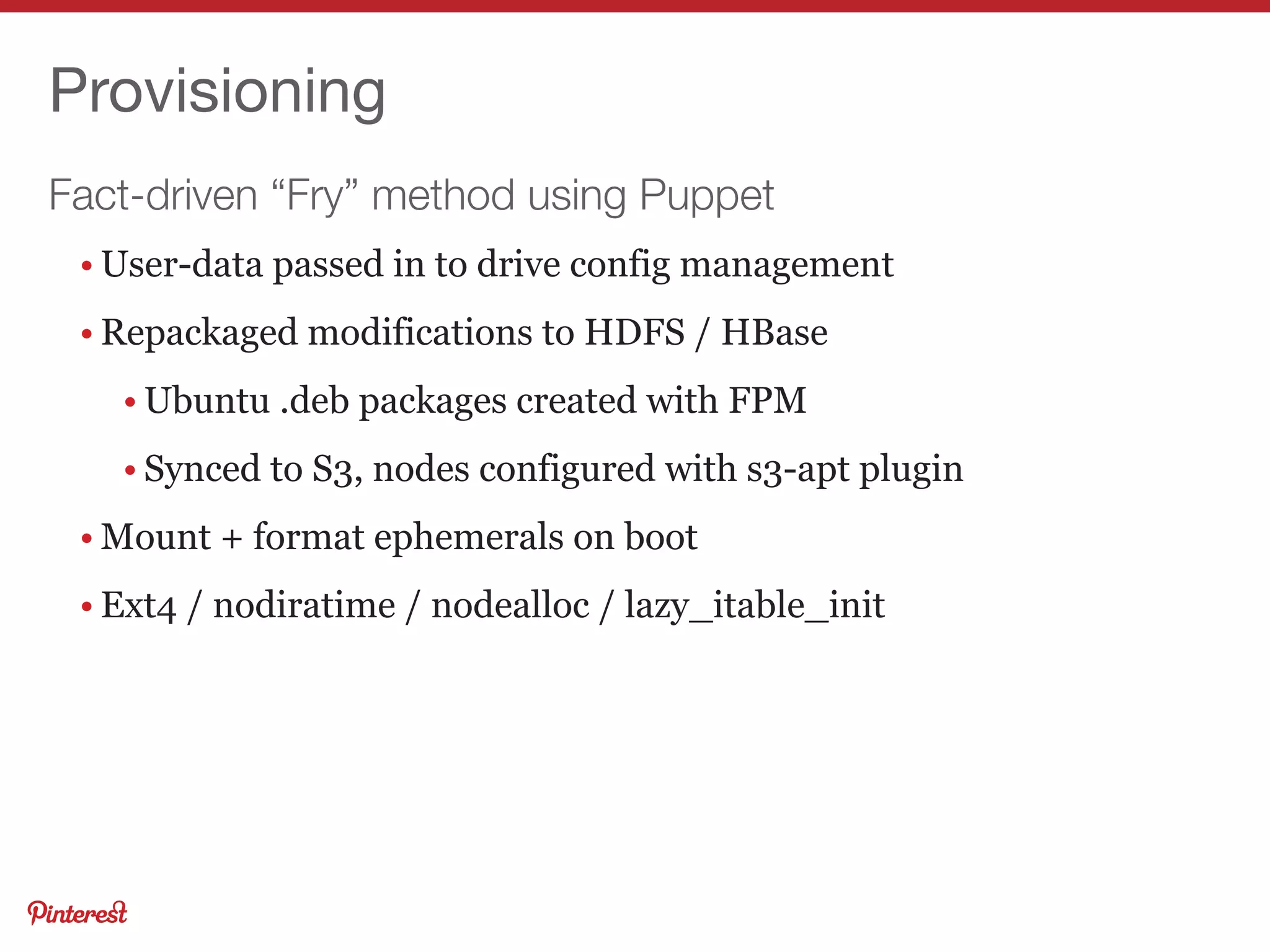

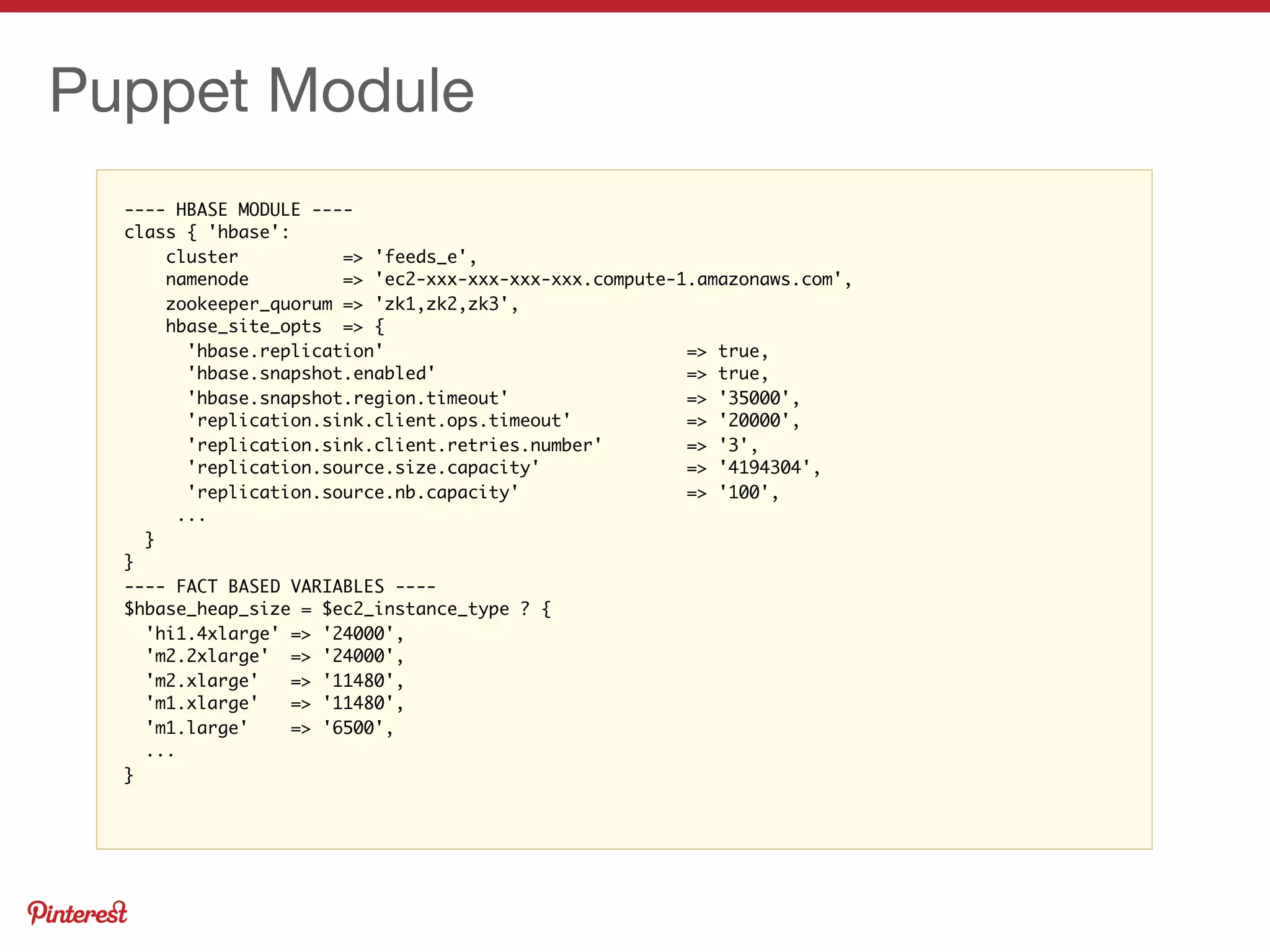

- Puppet is used to provision nodes and apply custom HDFS and HBase configurations

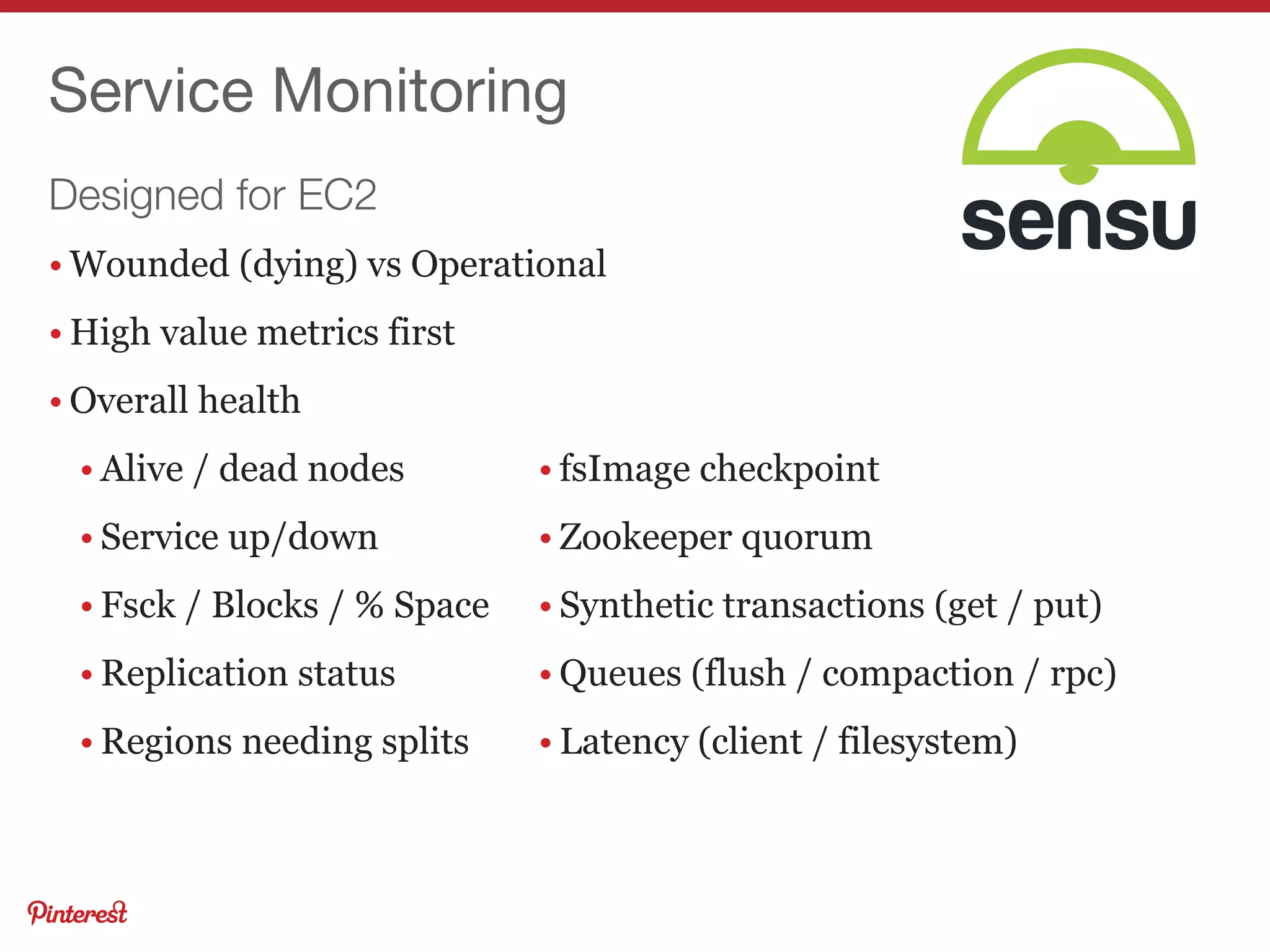

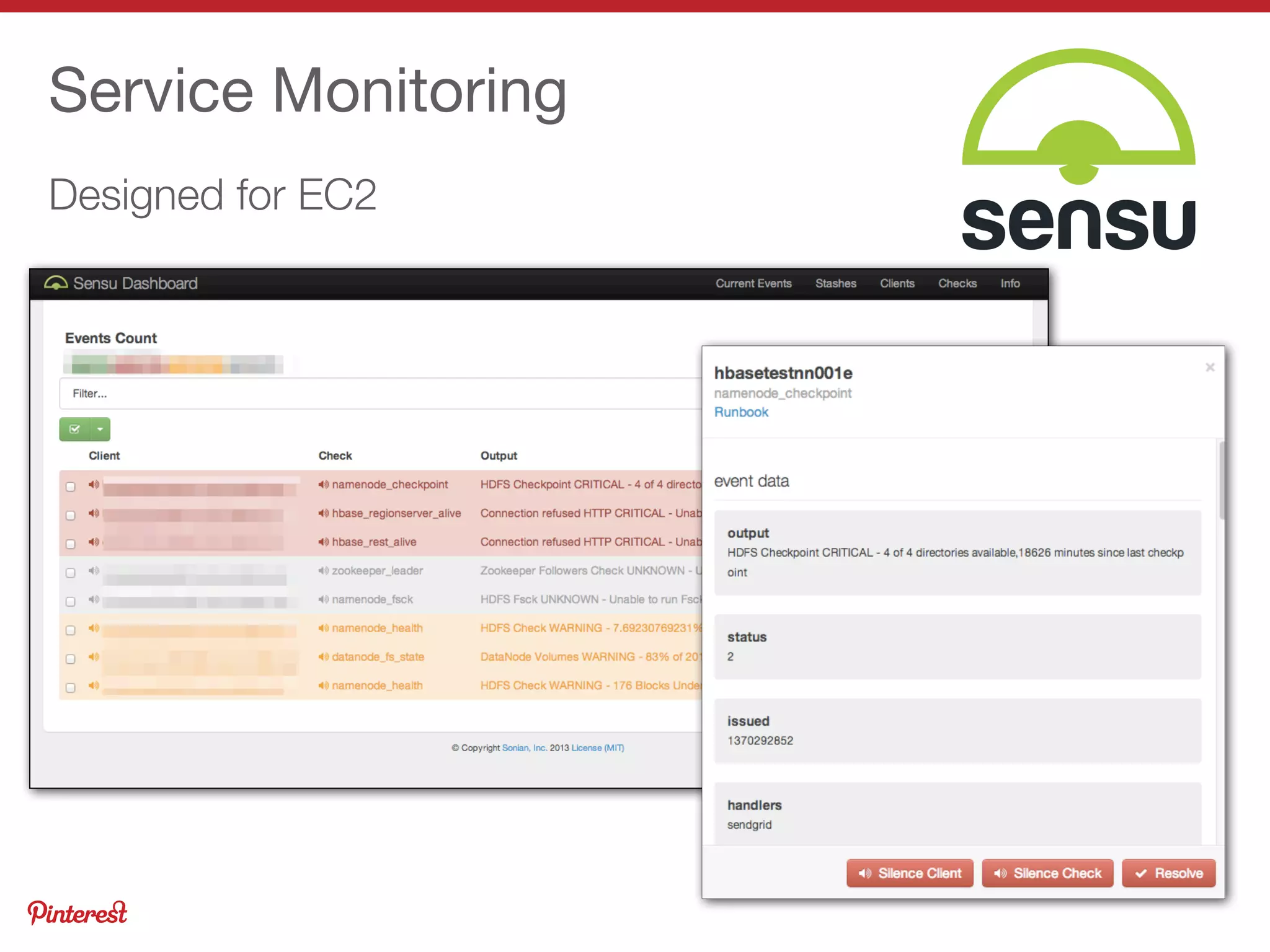

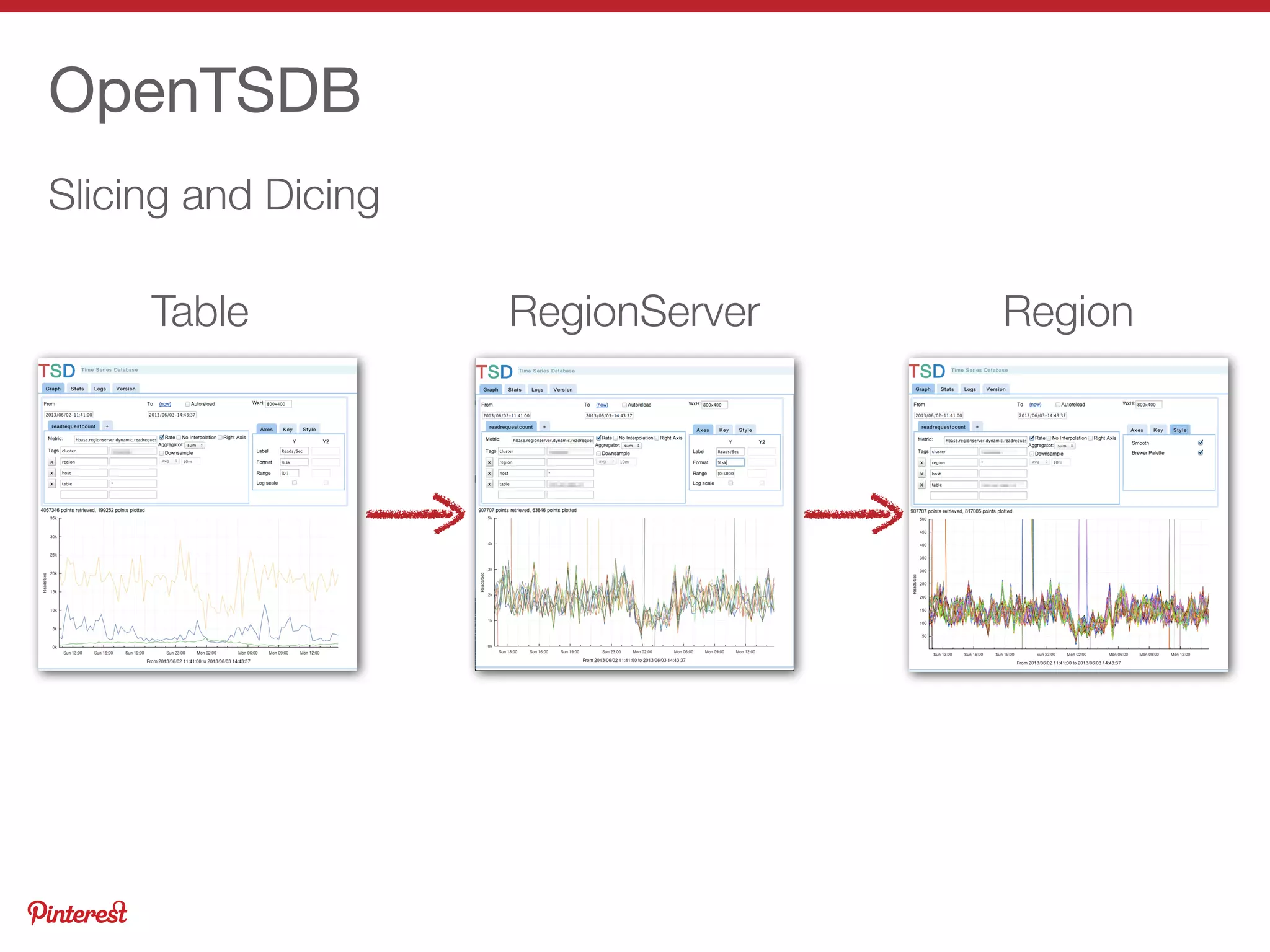

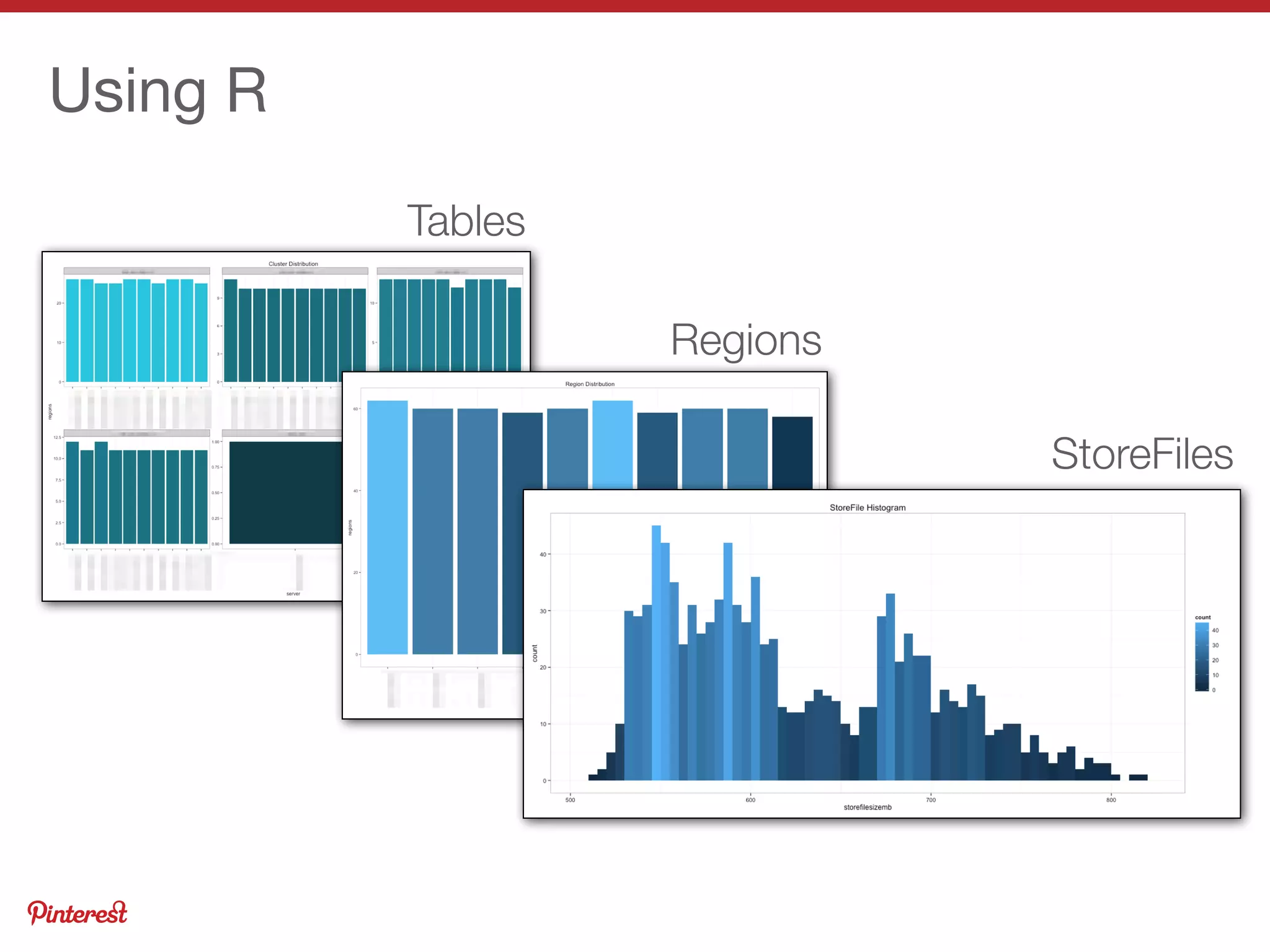

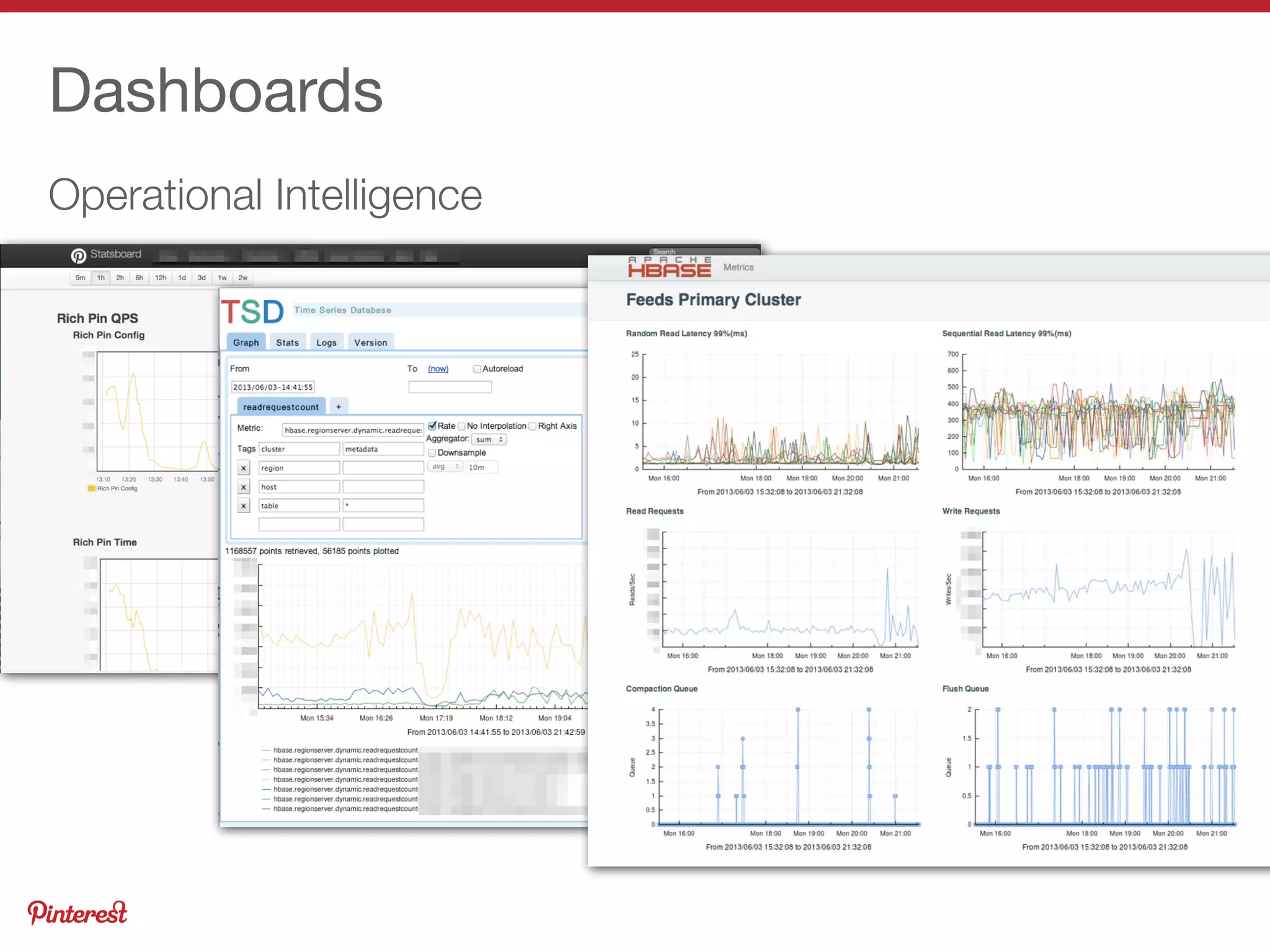

- The clusters are monitored extensively with OpenTSDB, Ganglia, and custom dashboards to maintain high availability

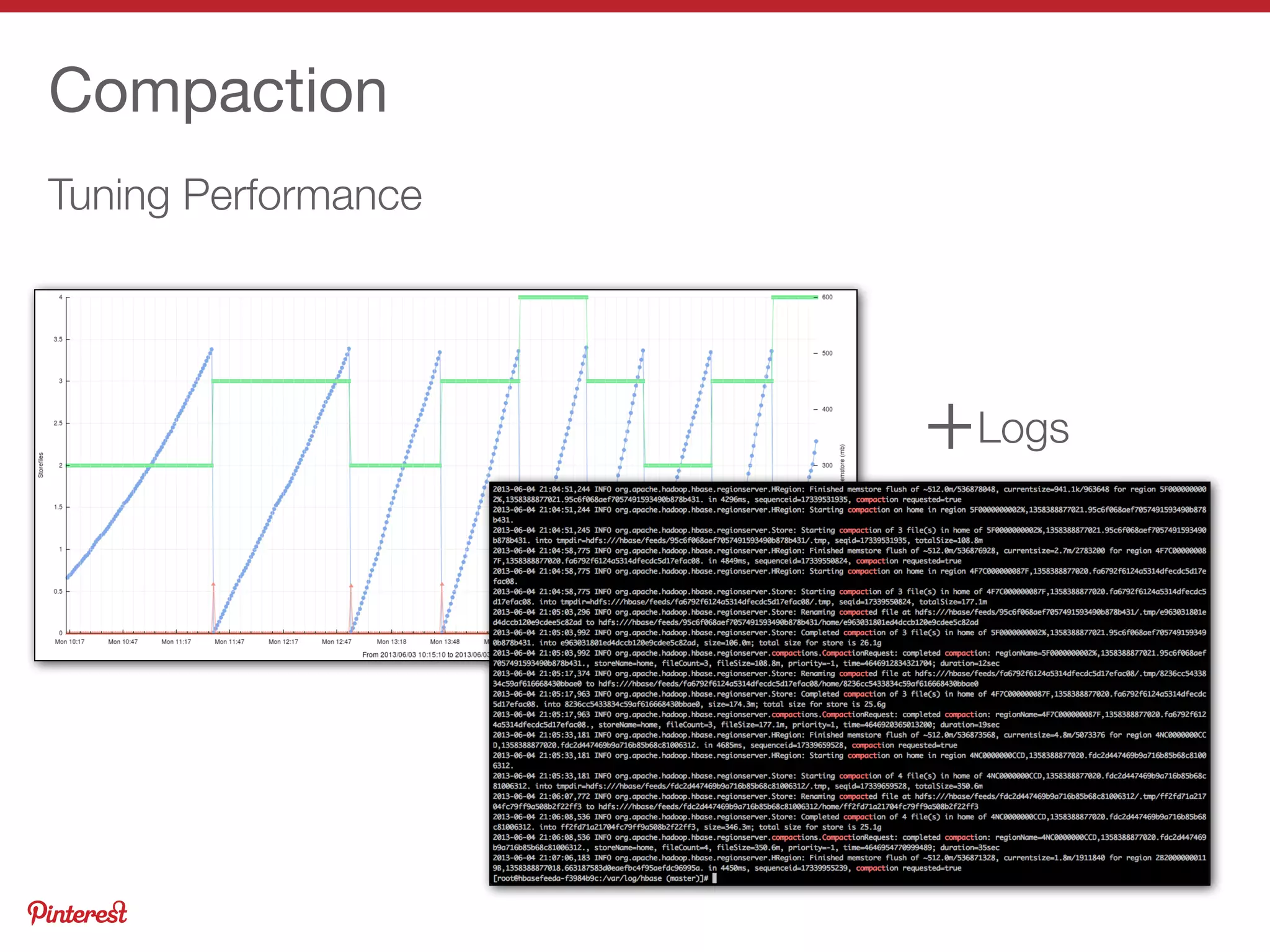

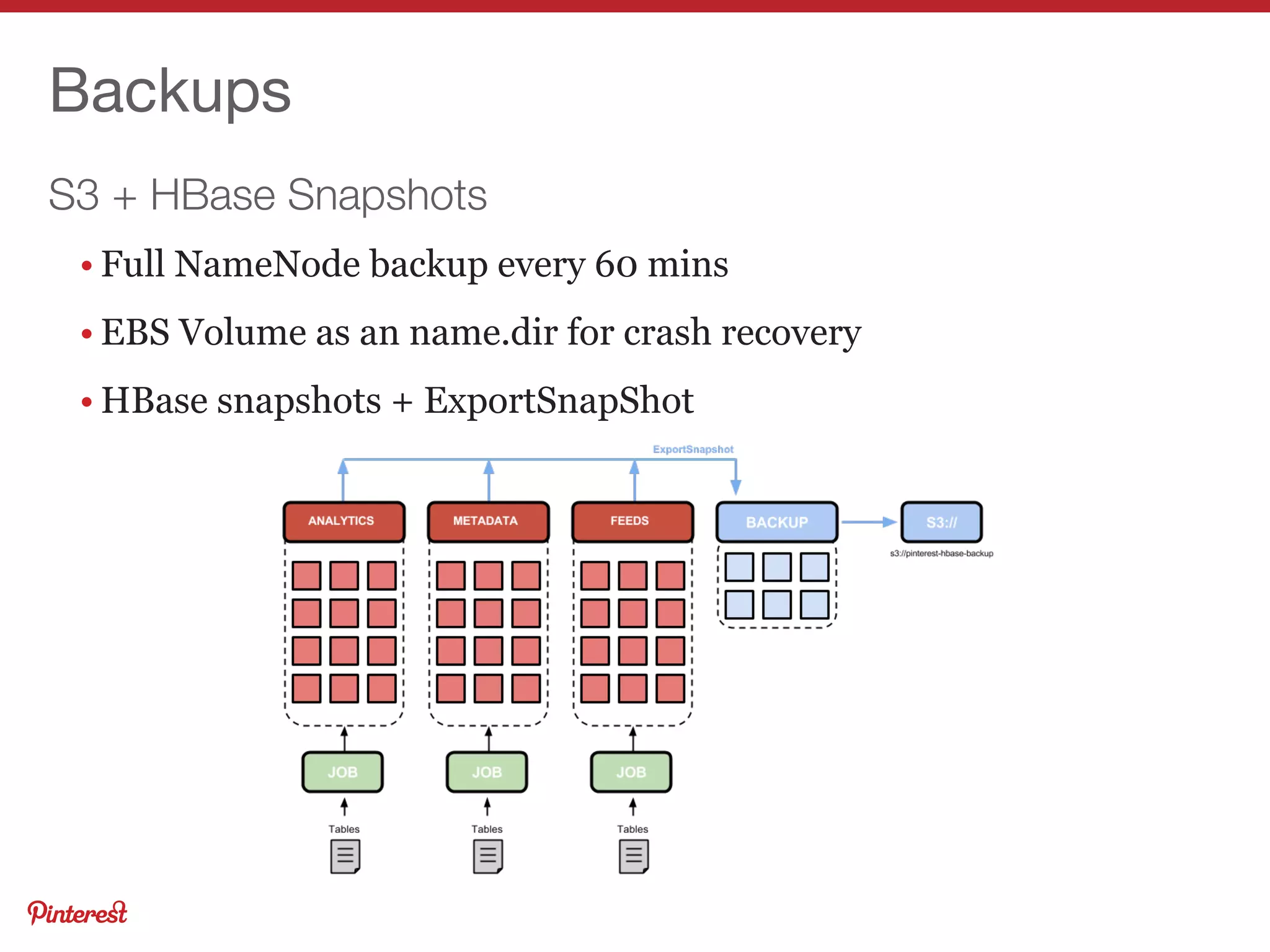

- Various techniques are used to optimize performance, handle large volumes, and back up data on EC2 infrastructure
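
As a rough illustration of the monitoring point above, the sketch below formats a data point in OpenTSDB's telnet-style `put` line format (`put <metric> <timestamp> <value> <tagk=tagv> ...`). The metric name, tag names, and host name are hypothetical placeholders, not values taken from the presentation, and the helper `format_opentsdb_put` is an assumption introduced only for this example.

```python
import time


def format_opentsdb_put(metric, value, tags, timestamp=None):
    """Build one line in OpenTSDB's telnet-style `put` format.

    `tags` is a dict of tag name -> tag value; OpenTSDB expects at least
    one tag on every data point.
    """
    if not tags:
        raise ValueError("OpenTSDB requires at least one tag per data point")
    # Use the current time in seconds unless the caller supplies a timestamp.
    ts = int(time.time()) if timestamp is None else int(timestamp)
    # Sort tags so the same input always yields the same line.
    tag_str = " ".join(f"{key}={val}" for key, val in sorted(tags.items()))
    return f"put {metric} {ts} {value} {tag_str}"


# Hypothetical metric and tags, for illustration only.
line = format_opentsdb_put(
    "hbase.regionserver.requests",
    1250,
    {"cluster": "example", "host": "rs01"},
    timestamp=1400000000,
)
print(line)
```

Tagging each data point with a cluster and host is one plausible way to let a dashboard aggregate per cluster or drill down to a single region server; the tagging scheme actually used in the presentation is not stated in this summary.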