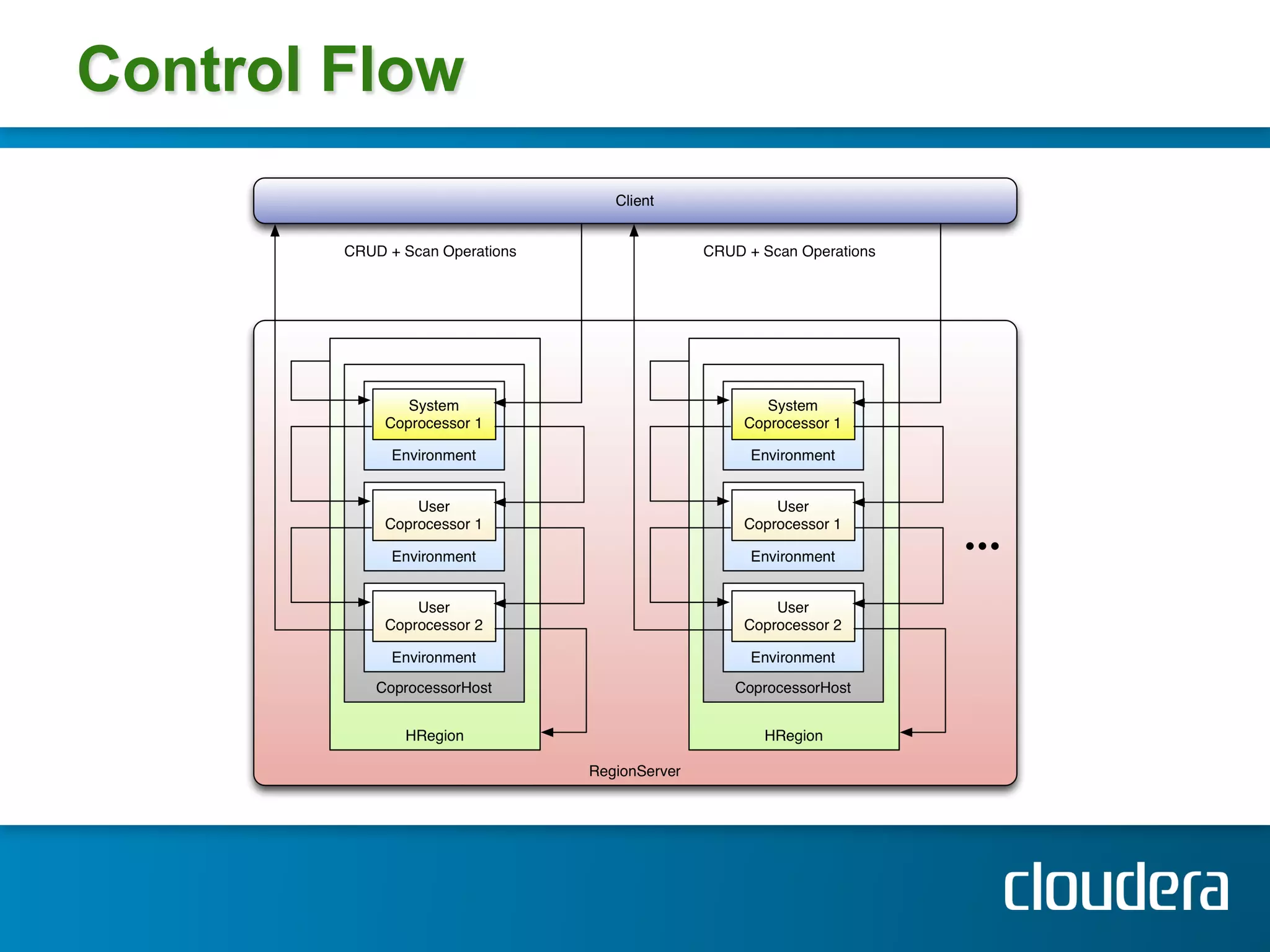

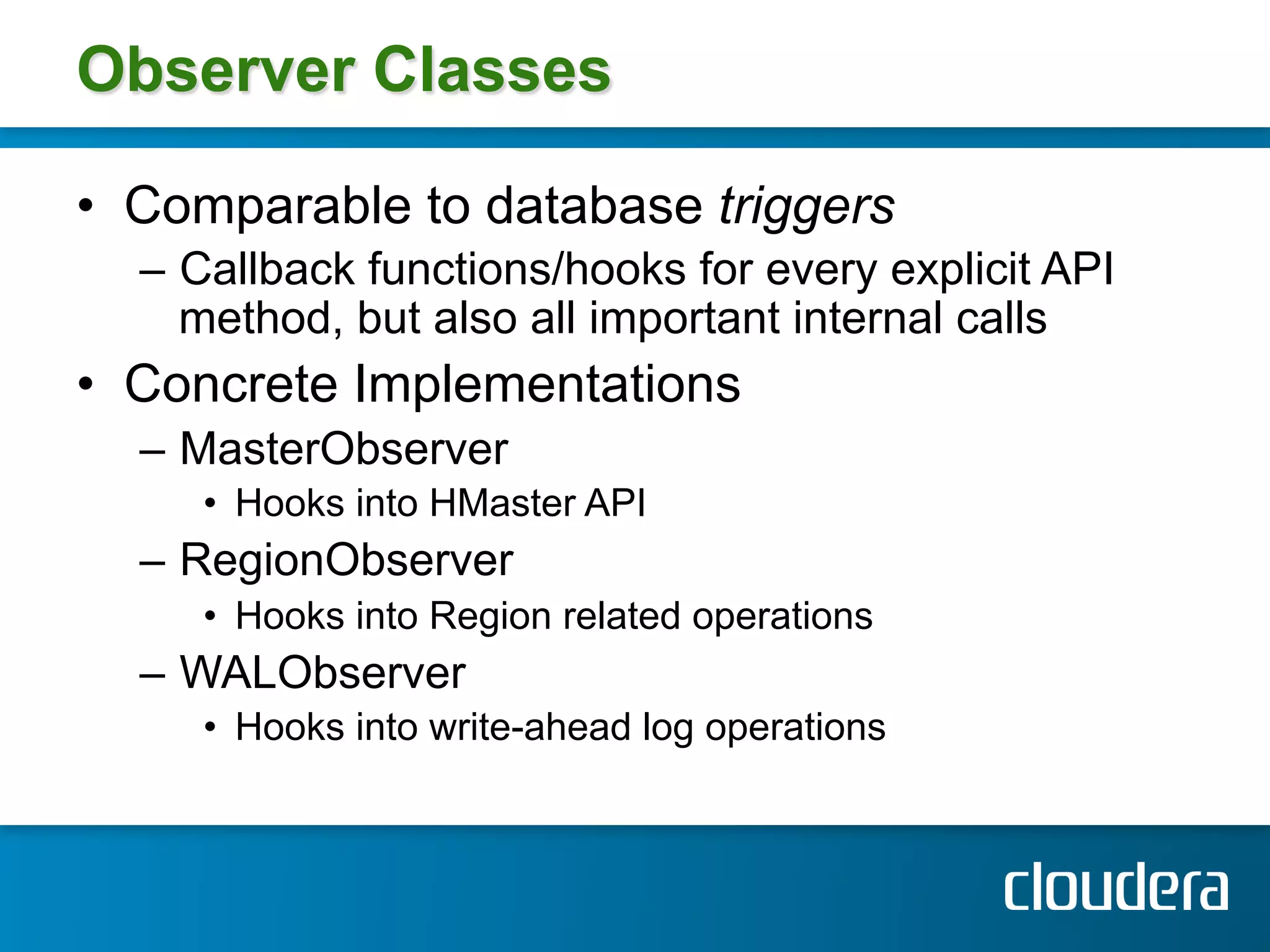

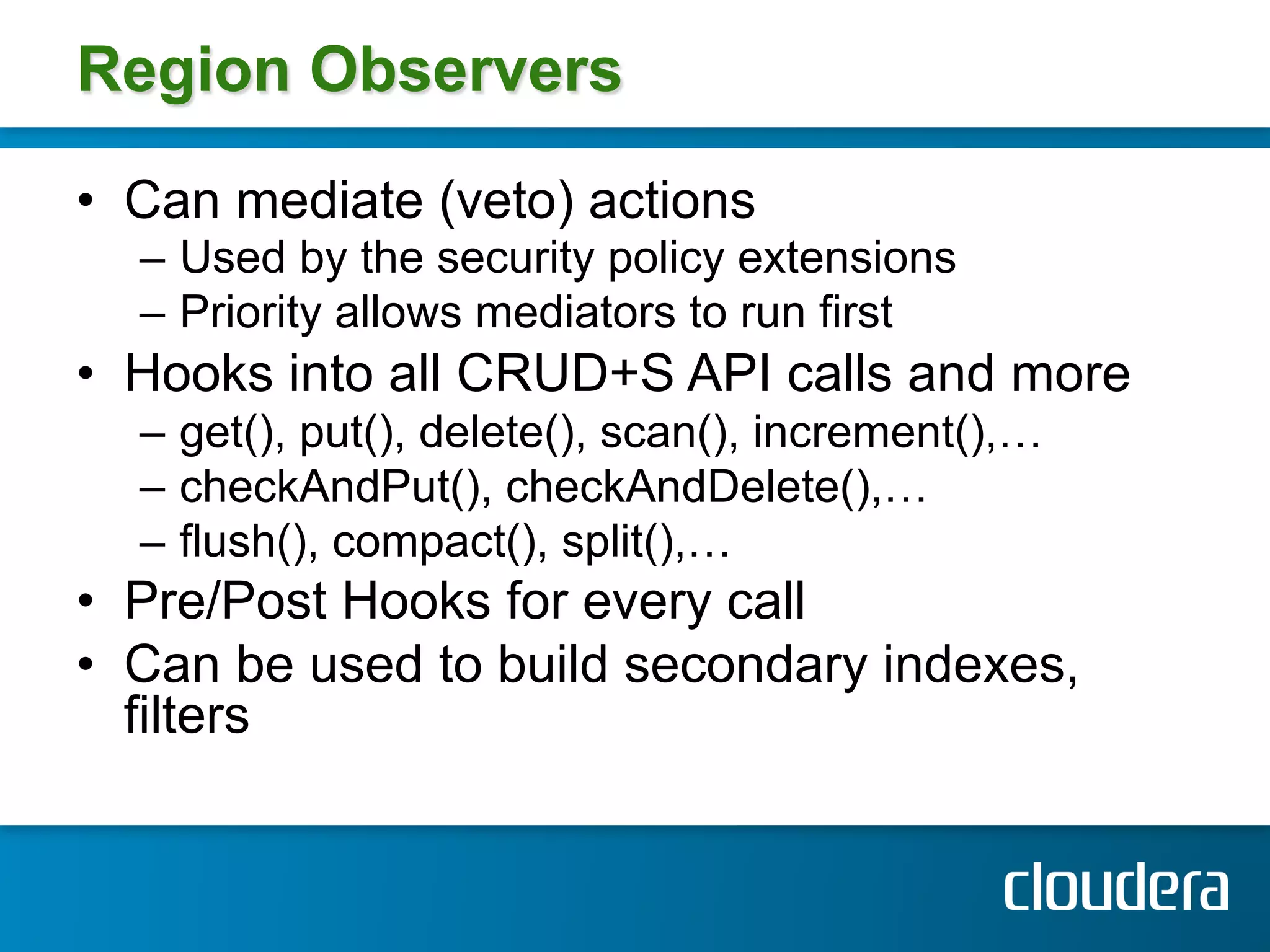

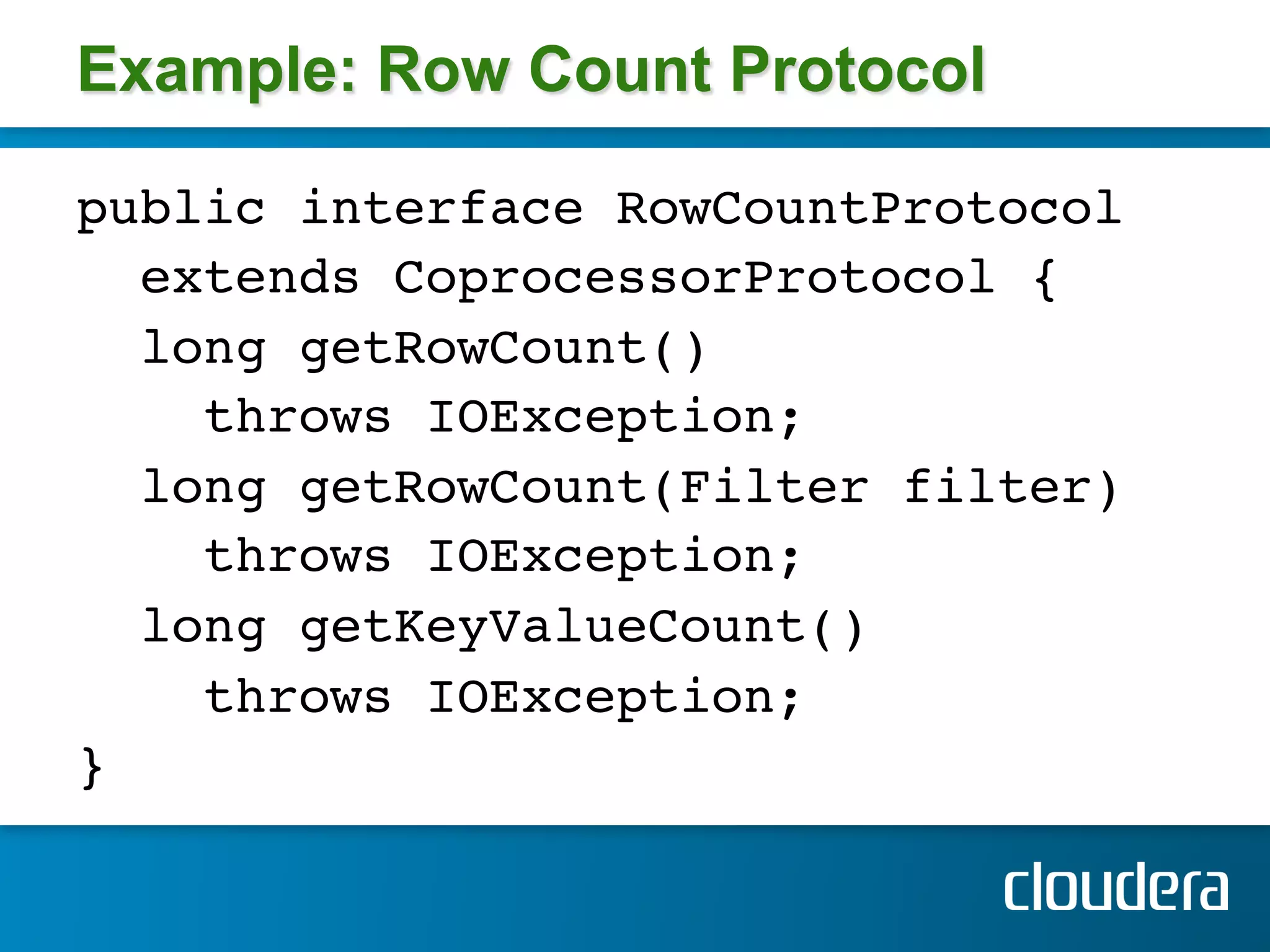

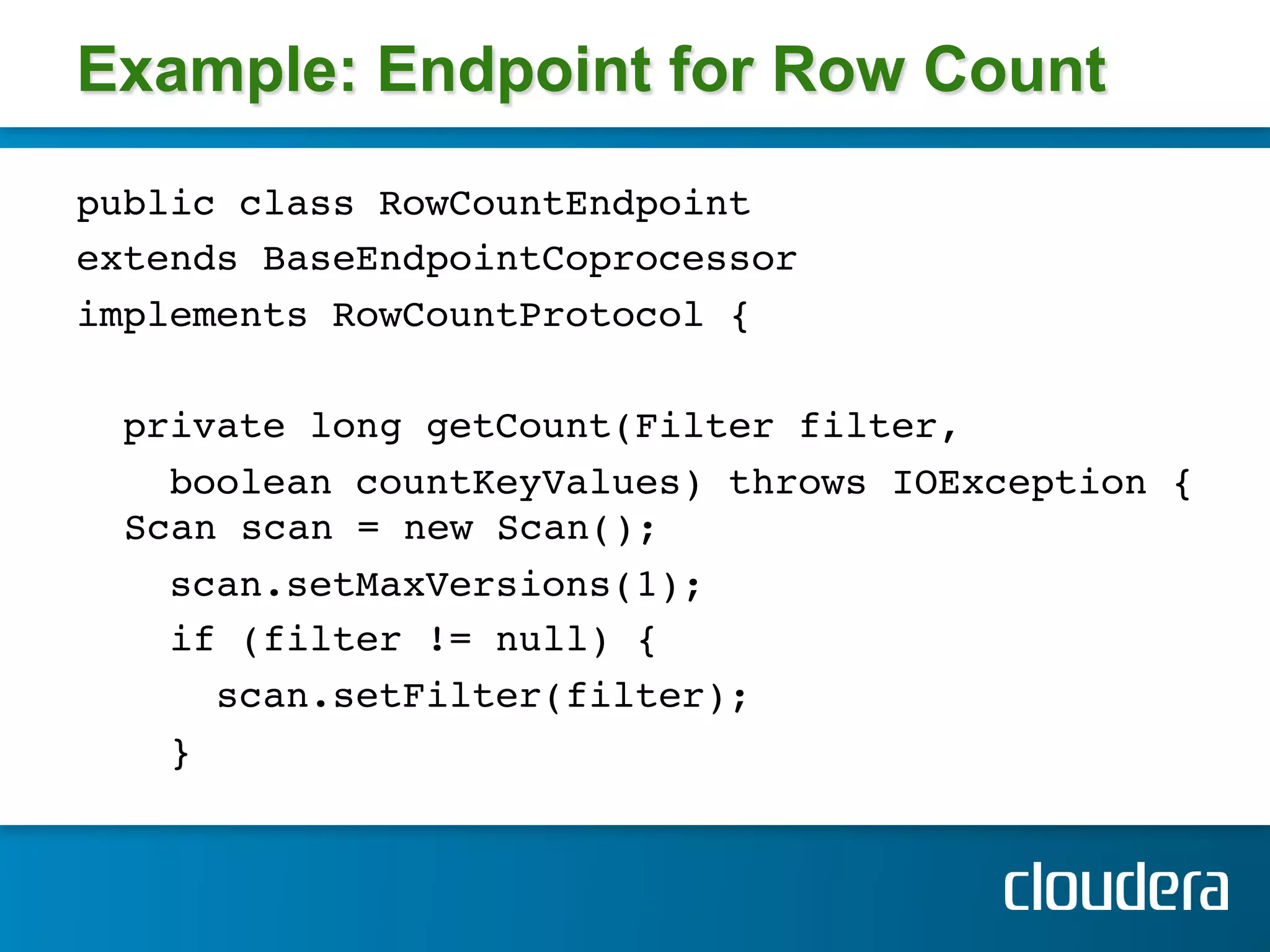

HBase coprocessors run user code on the region servers, within each region of an HBase table. They are loaded dynamically and scale automatically as regions are split or merged. Observer classes provide hooks into various HBase operations, and endpoints define an interface for custom calls between clients and servers. Use cases include secondary indexes, filters, and replacing MapReduce jobs with server-side processing.
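To make the observer idea concrete, here is a toy model in plain Java. It is only a sketch: `ToyRegion`, `PutObserver`, and `named` are hypothetical names, not HBase classes, and the rule that lower priority numbers run first is an assumption of the toy.

```java
import java.util.ArrayList;
import java.util.Comparator;
import java.util.List;

/** Toy model of observer hooks around a write. None of these types are HBase classes. */
class ToyRegion {
    /** Hypothetical observer with hooks that run before and after a put. */
    interface PutObserver {
        void prePut(String row, List<String> log);
        void postPut(String row, List<String> log);
    }

    /** Pairs an observer with the priority it was registered under. */
    private static final class Registered {
        final int priority;
        final PutObserver observer;

        Registered(int priority, PutObserver observer) {
            this.priority = priority;
            this.observer = observer;
        }
    }

    private final List<Registered> observers = new ArrayList<>();
    final List<String> log = new ArrayList<>();

    /** Registers an observer; the toy assumes lower numbers run first. */
    void register(int priority, PutObserver observer) {
        observers.add(new Registered(priority, observer));
        observers.sort(Comparator.comparingInt((Registered r) -> r.priority));
    }

    /** Runs every pre hook, then the operation itself, then every post hook. */
    void put(String row) {
        for (Registered r : observers) {
            r.observer.prePut(row, log);
        }
        log.add("put " + row);
        for (Registered r : observers) {
            r.observer.postPut(row, log);
        }
    }

    /** Builds an observer that records each hook call under the given name. */
    static PutObserver named(final String name) {
        return new PutObserver() {
            @Override
            public void prePut(String row, List<String> log) {
                log.add(name + ".prePut " + row);
            }

            @Override
            public void postPut(String row, List<String> log) {
                log.add(name + ".postPut " + row);
            }
        };
    }

    public static void main(String[] args) {
        ToyRegion region = new ToyRegion();
        region.register(20, named("user"));
        region.register(10, named("system"));
        region.put("row1");
        region.log.forEach(System.out::println);
    }
}
```

The point of the model is only the shape of the call sequence: hooks wrap the operation, and registration order does not matter once priorities are applied.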

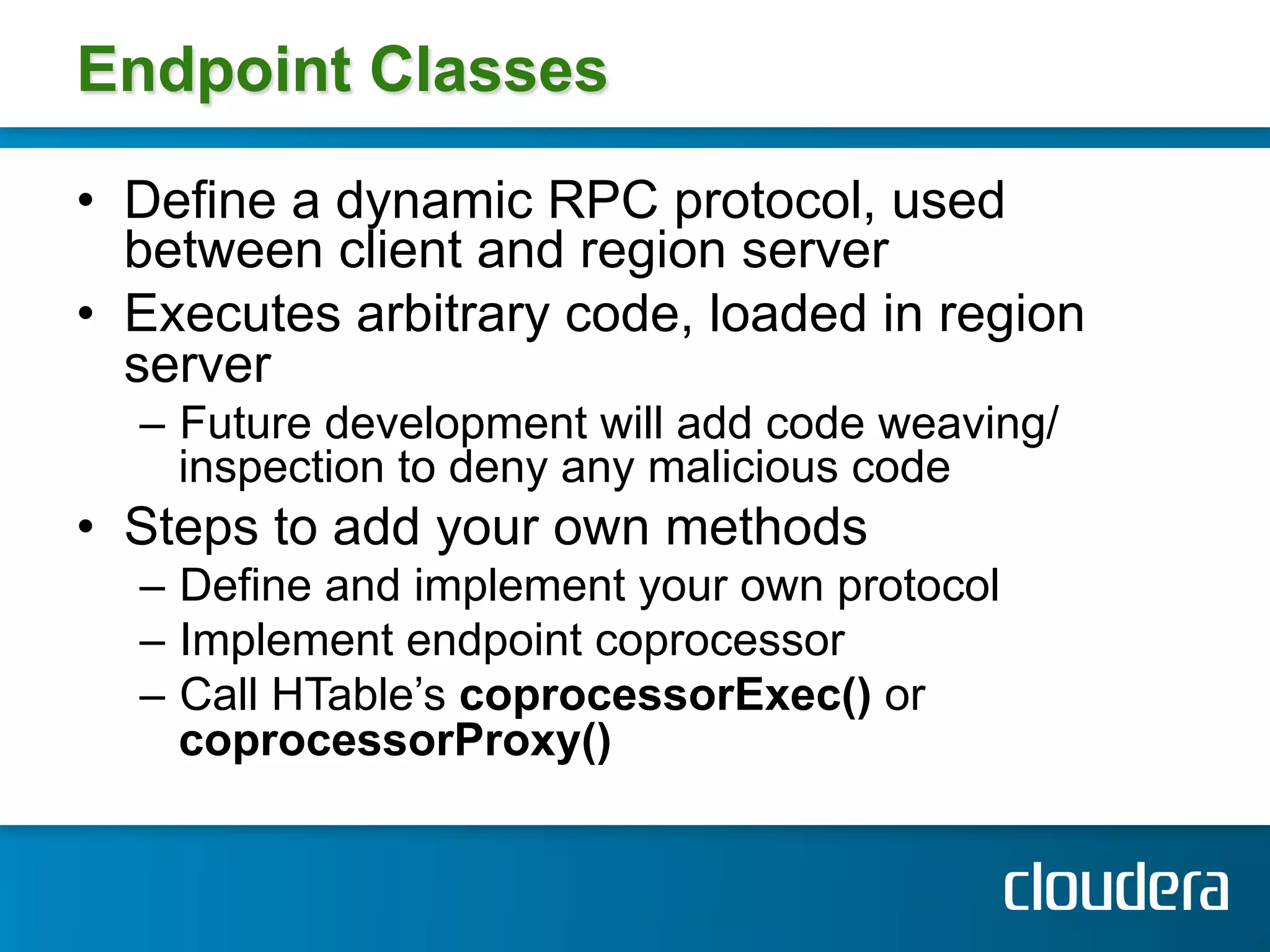

![Example: Add Coprocessor](https://image.slidesharecdn.com/3hbasecoprocessorshbaseconmay2012-120530140257-phpapp02/75/HBaseCon-2012-HBase-Coprocessors-Deploy-Shared-Functionality-Directly-on-the-Cluster-Cloudera-19-2048.jpg)

```java
public static void main(String[] args) throws IOException {
  Configuration conf = HBaseConfiguration.create();
  FileSystem fs = FileSystem.get(conf);
  Path path = new Path(fs.getUri() + Path.SEPARATOR +
    "test.jar");
  HTableDescriptor htd = new HTableDescriptor("testtable");
  htd.addFamily(new HColumnDescriptor("colfam1"));
  htd.setValue("COPROCESSOR$1", path.toString() +
    "|" + RegionObserverExample.class.getCanonicalName() +
    "|" + Coprocessor.PRIORITY_USER);
  HBaseAdmin admin = new HBaseAdmin(conf);
  admin.createTable(htd);
  System.out.println(admin.getTableDescriptor(
    Bytes.toBytes("testtable")));
}
```

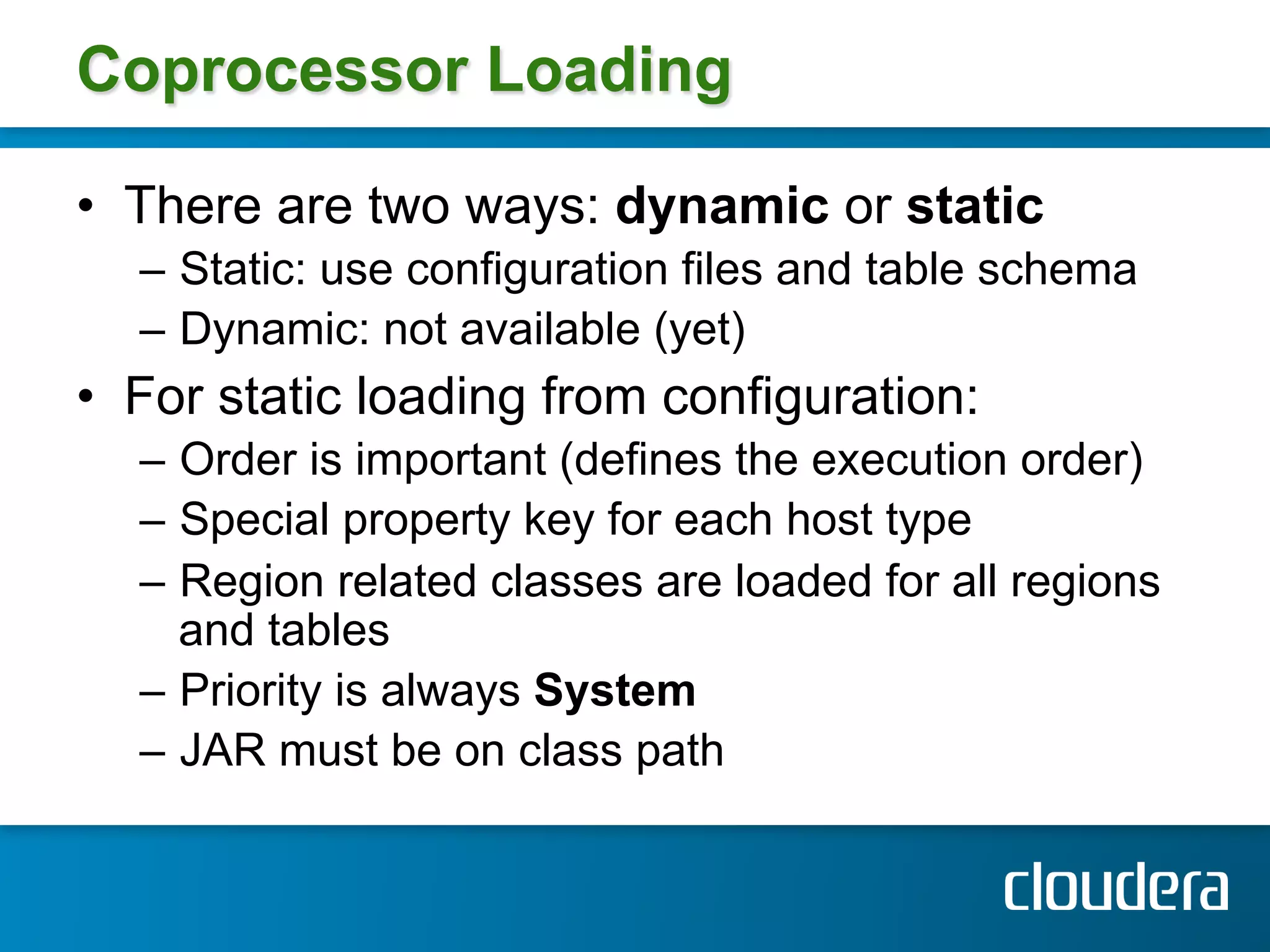

![Example Output](https://image.slidesharecdn.com/3hbasecoprocessorshbaseconmay2012-120530140257-phpapp02/75/HBaseCon-2012-HBase-Coprocessors-Deploy-Shared-Functionality-Directly-on-the-Cluster-Cloudera-20-2048.jpg)

```
{NAME => 'testtable', COPROCESSOR$1 =>
'file:/test.jar|coprocessor.RegionObserverExample|1073741823',
FAMILIES => [{NAME => 'colfam1',
BLOOMFILTER => 'NONE', REPLICATION_SCOPE => '0',
COMPRESSION => 'NONE', VERSIONS => '3', TTL =>
'2147483647', BLOCKSIZE => '65536', IN_MEMORY =>
'false', BLOCKCACHE => 'true'}]}
```
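The `COPROCESSOR$1` value in the output is three fields joined by `|`: the jar path, the class name, and a numeric priority. A minimal sketch of splitting such a value in plain Java follows. `CoprocessorSpec` is a hypothetical helper, not an HBase class, and it handles only the three-field form shown on the slide.

```java
/** Hypothetical holder for a "<jar path>|<class name>|<priority>" attribute value. */
class CoprocessorSpec {
    final String jarPath;
    final String className;
    final int priority;

    CoprocessorSpec(String jarPath, String className, int priority) {
        this.jarPath = jarPath;
        this.className = className;
        this.priority = priority;
    }

    /** Splits the value on '|' and rejects anything that is not exactly three fields. */
    static CoprocessorSpec parse(String value) {
        String[] parts = value.split("\\|");
        if (parts.length != 3) {
            throw new IllegalArgumentException("expected 3 fields, got " + parts.length);
        }
        return new CoprocessorSpec(
            parts[0].trim(), parts[1].trim(), Integer.parseInt(parts[2].trim()));
    }

    public static void main(String[] args) {
        CoprocessorSpec spec = parse(
            "file:/test.jar|coprocessor.RegionObserverExample|1073741823");
        System.out.println("jar:      " + spec.jarPath);
        System.out.println("class:    " + spec.className);
        System.out.println("priority: " + spec.priority);
        // The priority printed on the slide equals Integer.MAX_VALUE / 2.
        System.out.println(spec.priority == Integer.MAX_VALUE / 2);
    }
}
```

The priority `1073741823` in the slide output is what `Integer.MAX_VALUE / 2` evaluates to, which is consistent with the `Coprocessor.PRIORITY_USER` constant used when the table was created.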

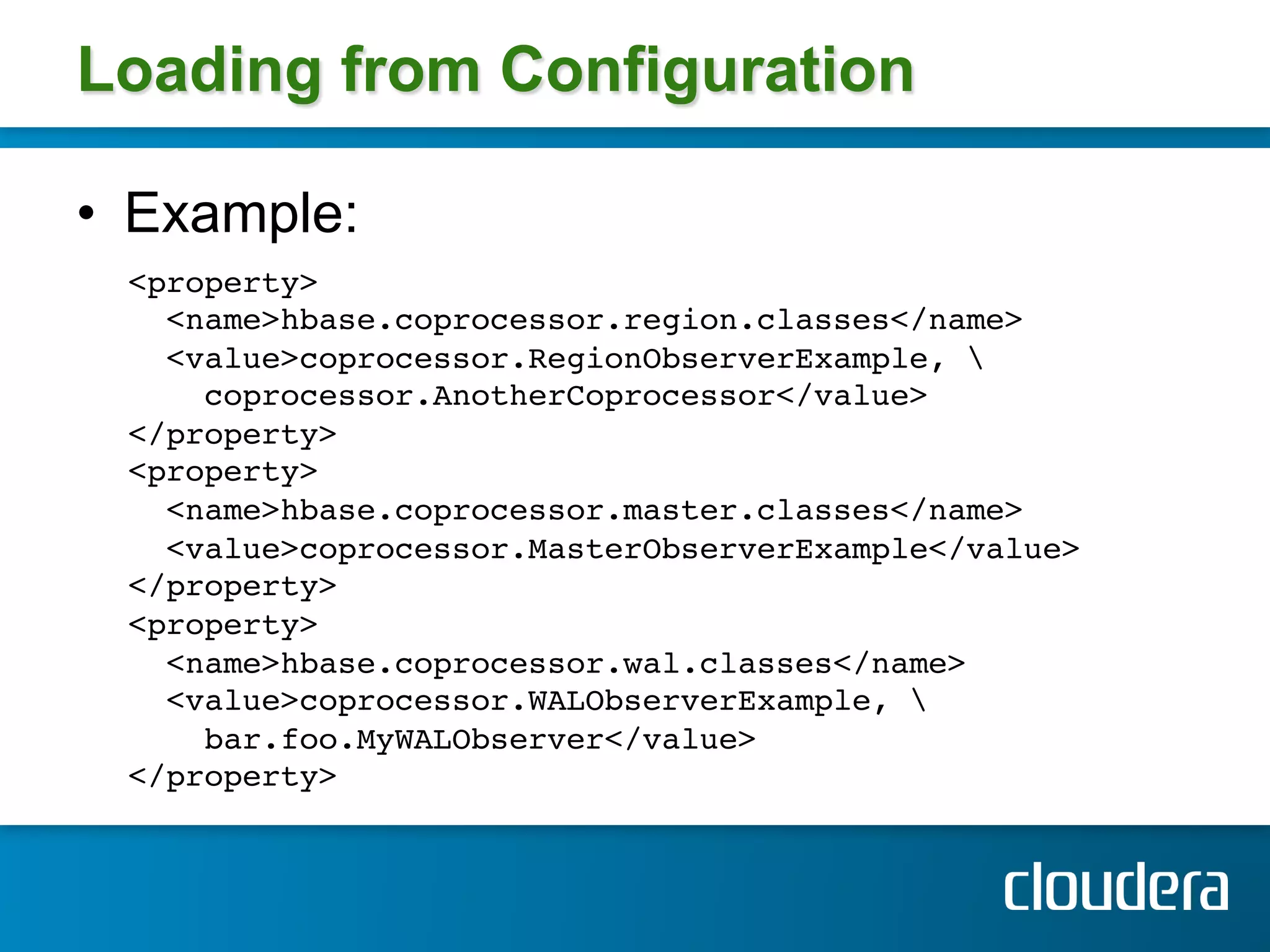

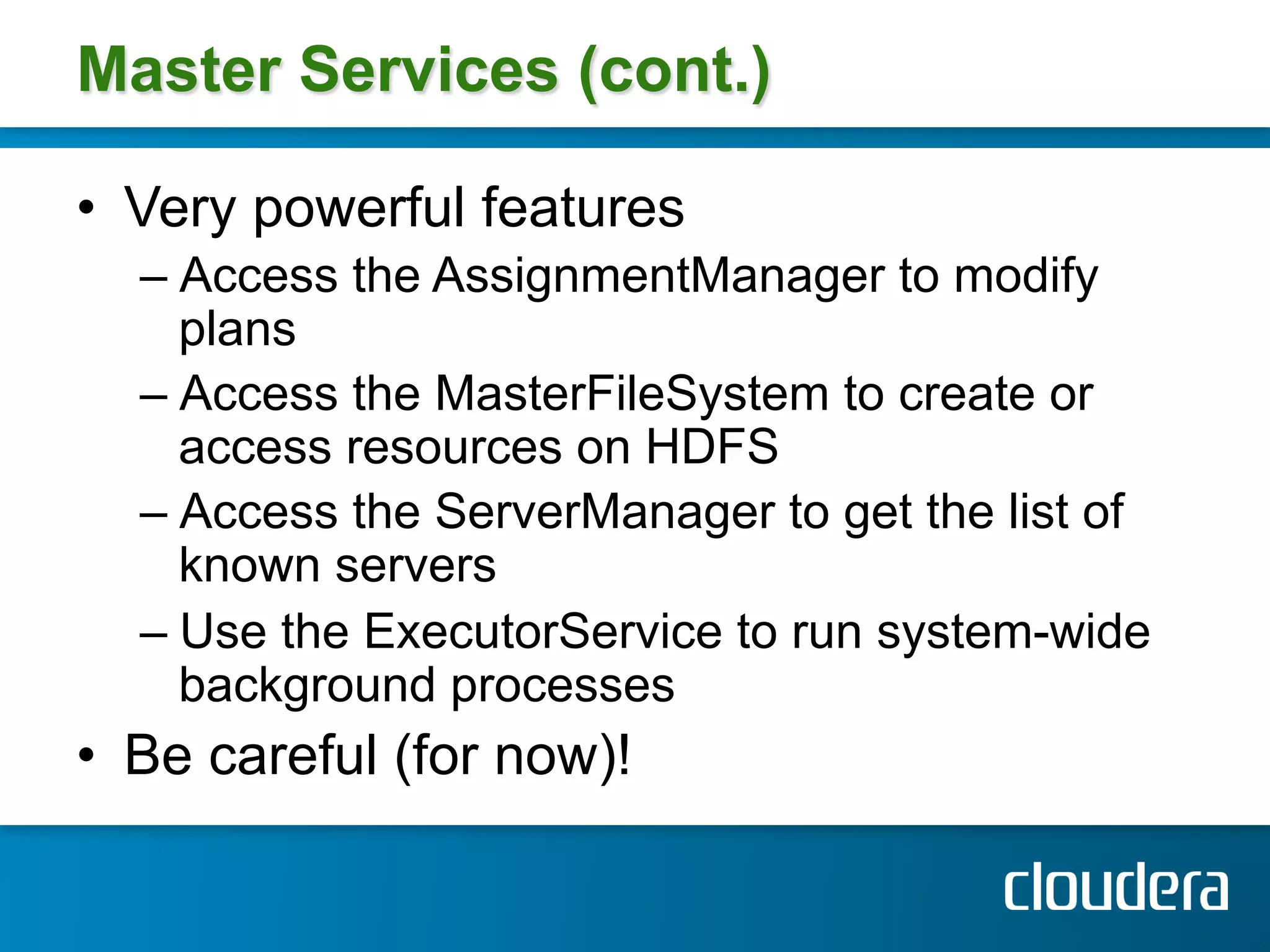

![Example: Master Post Hook](https://image.slidesharecdn.com/3hbasecoprocessorshbaseconmay2012-120530140257-phpapp02/75/HBaseCon-2012-HBase-Coprocessors-Deploy-Shared-Functionality-Directly-on-the-Cluster-Cloudera-31-2048.jpg)

```java
public class MasterObserverExample
  extends BaseMasterObserver {
  @Override public void postCreateTable(
    ObserverContext<MasterCoprocessorEnvironment> env,
    HRegionInfo[] regions, boolean sync)
    throws IOException {
    String tableName =
      regions[0].getTableDesc().getNameAsString();
    MasterServices services =
      env.getEnvironment().getMasterServices();
    MasterFileSystem masterFileSystem =
      services.getMasterFileSystem();
    FileSystem fileSystem = masterFileSystem.getFileSystem();
    Path blobPath = new Path(tableName + "-blobs");
    fileSystem.mkdirs(blobPath);
  }
}
```

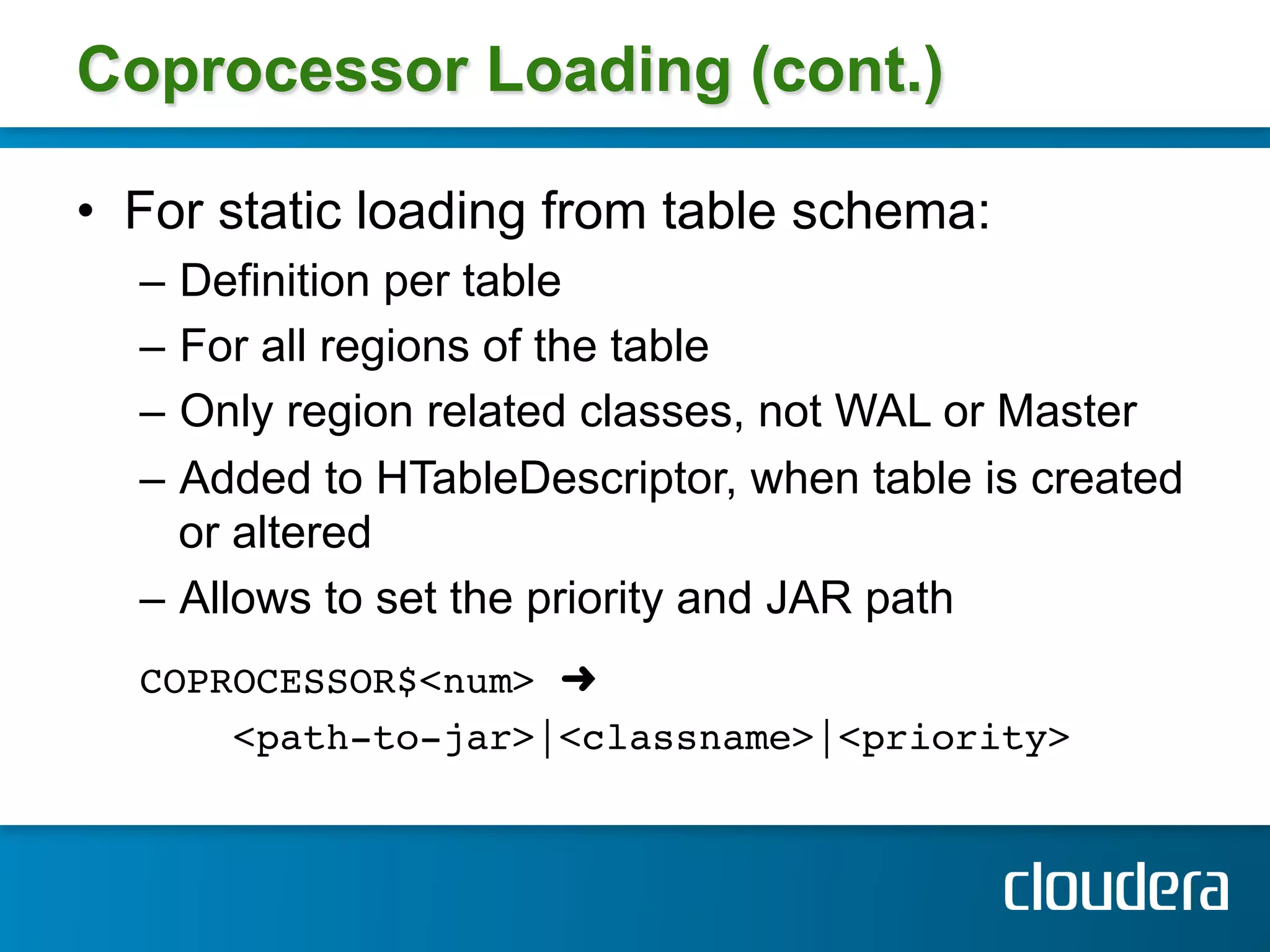

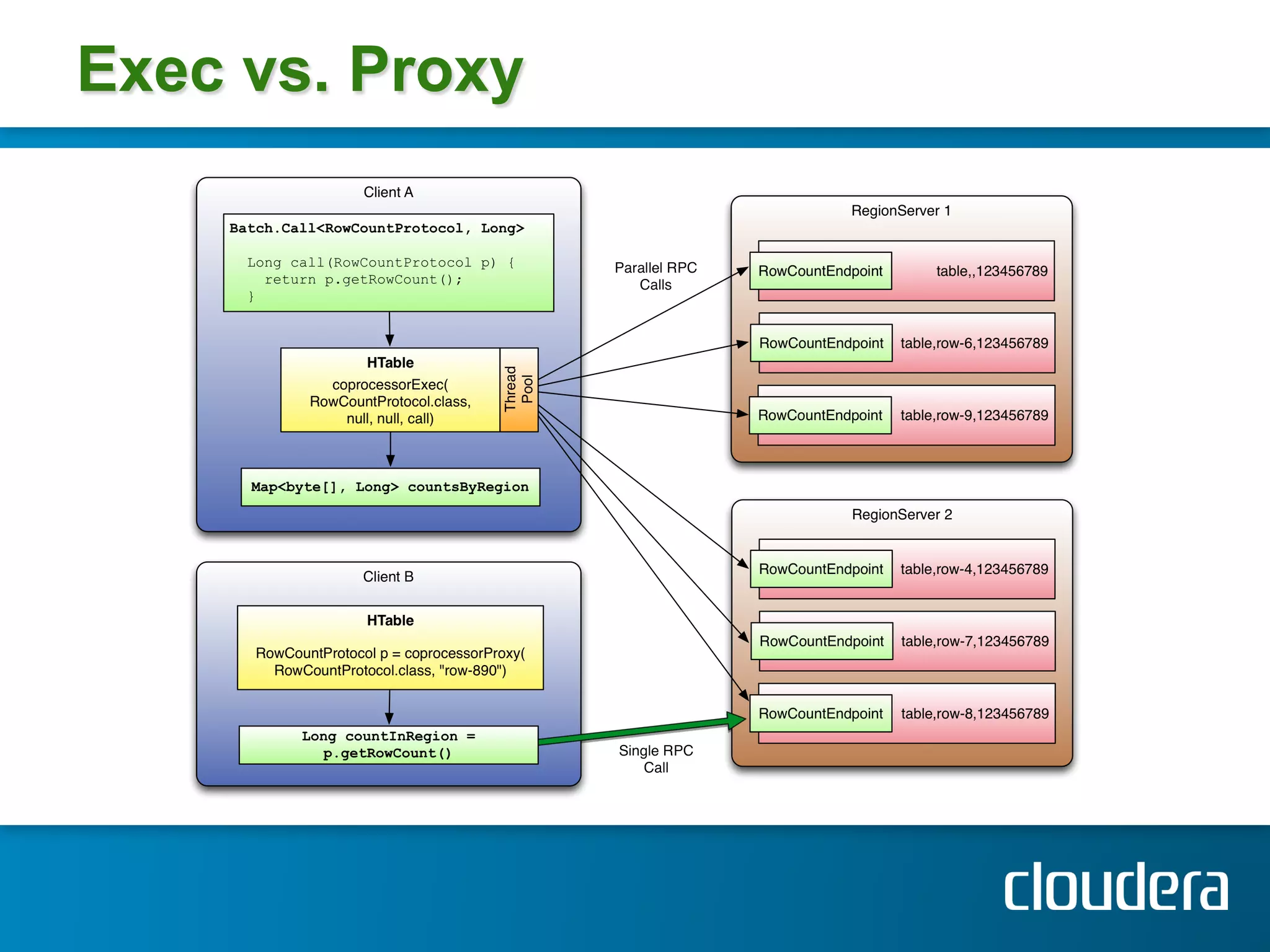

![Example: Invocation by Exec](https://image.slidesharecdn.com/3hbasecoprocessorshbaseconmay2012-120530140257-phpapp02/75/HBaseCon-2012-HBase-Coprocessors-Deploy-Shared-Functionality-Directly-on-the-Cluster-Cloudera-42-2048.jpg)

```java
public static void main(String[] args) throws IOException {
  Configuration conf = HBaseConfiguration.create();
  HTable table = new HTable(conf, "testtable");
  try {
    Map<byte[], Long> results =
      table.coprocessorExec(RowCountProtocol.class, null, null,
        new Batch.Call<RowCountProtocol, Long>() {
          @Override
          public Long call(RowCountProtocol counter)
            throws IOException {
            return counter.getRowCount();
          }
        });
    // continued on the next slide
```

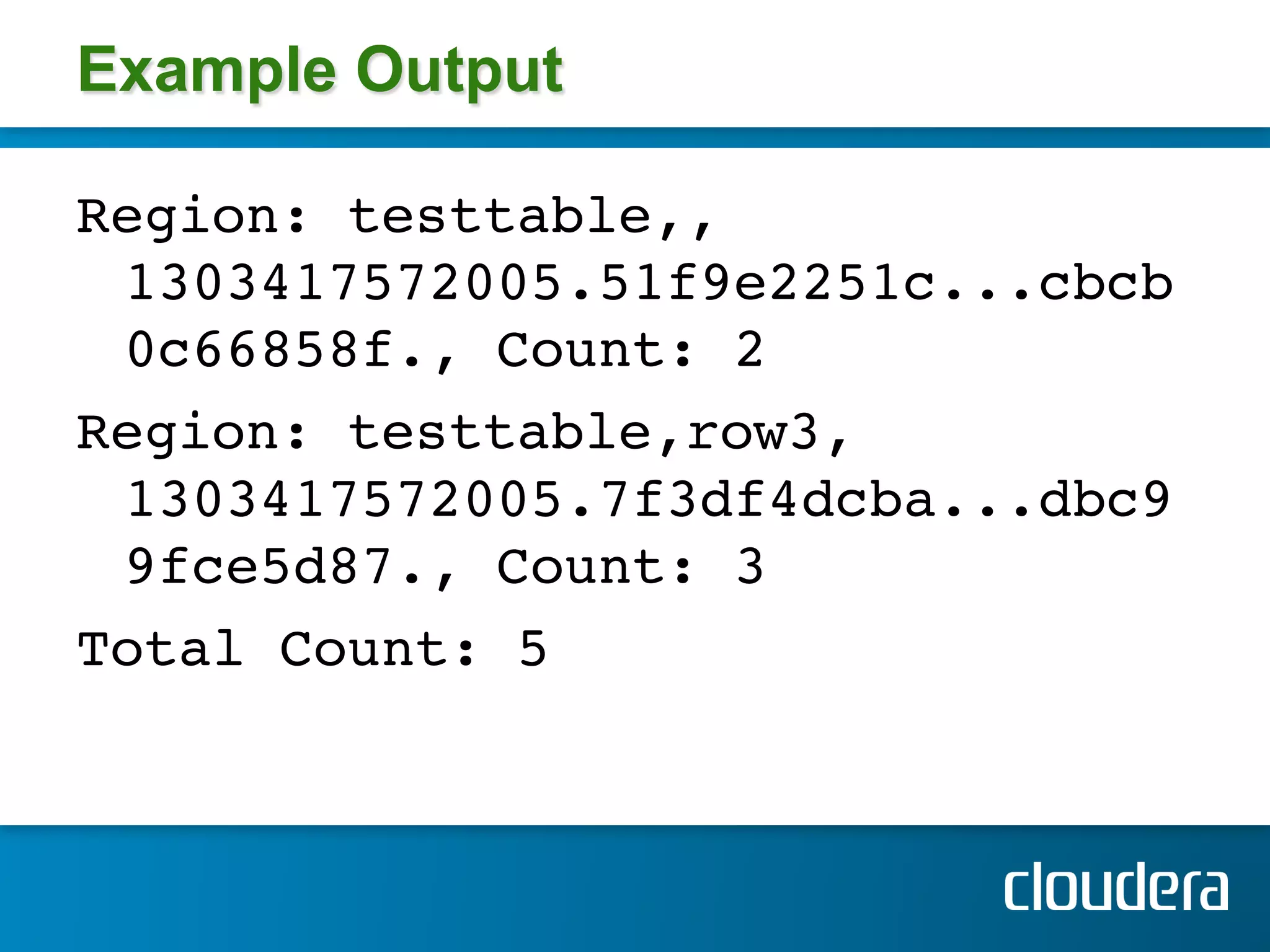

![Example: Invocation by Exec (continued)](https://image.slidesharecdn.com/3hbasecoprocessorshbaseconmay2012-120530140257-phpapp02/75/HBaseCon-2012-HBase-Coprocessors-Deploy-Shared-Functionality-Directly-on-the-Cluster-Cloudera-43-2048.jpg)

```java
    // continued from the previous slide
    long total = 0;
    for (Map.Entry<byte[], Long> entry :
         results.entrySet()) {
      total += entry.getValue().longValue();
      System.out.println("Region: " +
        Bytes.toString(entry.getKey()) +
        ", Count: " + entry.getValue());
    }
    System.out.println("Total Count: " + total);
  } catch (Throwable throwable) {
    throwable.printStackTrace();
  }
}
```
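The client-side half of this example is a plain reduction: one partial count per region, summed into a total. The sketch below reproduces just that step without a cluster. The region names and counts are made up for illustration, `RegionCountAggregator` is a hypothetical class, and `Arrays.compareUnsigned` (Java 9 or later) stands in for a byte-wise key comparator.

```java
import java.nio.charset.StandardCharsets;
import java.util.Arrays;
import java.util.Comparator;
import java.util.Map;
import java.util.TreeMap;

/** Sums per-region partial counts, mirroring the loop on the slide. */
class RegionCountAggregator {
    /** Adds up one count per region key. */
    static long total(Map<byte[], Long> perRegion) {
        long total = 0;
        for (Map.Entry<byte[], Long> entry : perRegion.entrySet()) {
            total += entry.getValue().longValue();
        }
        return total;
    }

    /** Builds made-up results; byte[] keys need an explicit comparator in a TreeMap. */
    static Map<byte[], Long> sampleResults() {
        Comparator<byte[]> byteOrder = Arrays::compareUnsigned;
        Map<byte[], Long> results = new TreeMap<>(byteOrder);
        results.put("testtable,,1".getBytes(StandardCharsets.UTF_8), 3L);
        results.put("testtable,row5,2".getBytes(StandardCharsets.UTF_8), 7L);
        return results;
    }

    public static void main(String[] args) {
        Map<byte[], Long> results = sampleResults();
        for (Map.Entry<byte[], Long> entry : results.entrySet()) {
            System.out.println("Region: "
                + new String(entry.getKey(), StandardCharsets.UTF_8)
                + ", Count: " + entry.getValue());
        }
        System.out.println("Total Count: " + total(results));
    }
}
```

Because each region reports only a single number, the data sent back to the client stays small no matter how many rows the regions hold.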

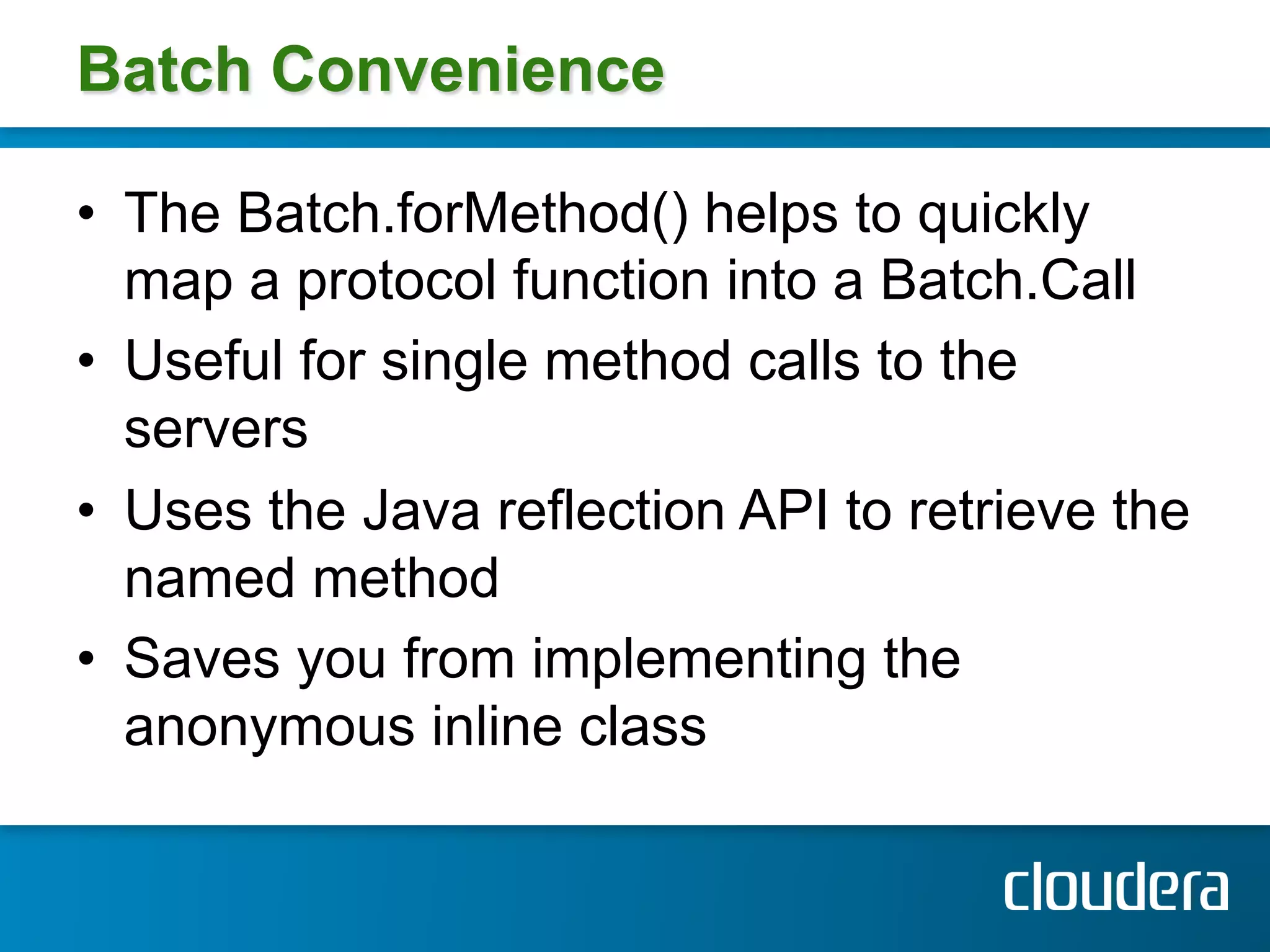

![Batch Convenience](https://image.slidesharecdn.com/3hbasecoprocessorshbaseconmay2012-120530140257-phpapp02/75/HBaseCon-2012-HBase-Coprocessors-Deploy-Shared-Functionality-Directly-on-the-Cluster-Cloudera-46-2048.jpg)

```java
Batch.Call call =
  Batch.forMethod(
    RowCountProtocol.class,
    "getKeyValueCount");
Map<byte[], Long> results =
  table.coprocessorExec(
    RowCountProtocol.class,
    null, null, call);
```
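`Batch.forMethod` takes the method name as a string, which suggests the method is looked up by name at runtime. The sketch below shows that general idea with plain Java reflection. It is not HBase's implementation: `ForMethodSketch`, `RowCounter`, and `fixed` are hypothetical names used only for illustration.

```java
import java.lang.reflect.Method;
import java.util.function.Function;

/** Illustrates turning a method name into a reusable call object via reflection. */
class ForMethodSketch {
    /** Hypothetical stand-in for an endpoint protocol with two no-argument methods. */
    interface RowCounter {
        long getRowCount();
        long getKeyValueCount();
    }

    /** Resolves a public no-argument method by name and wraps it as a Function. */
    static <T, R> Function<T, R> forMethod(Class<T> protocol, String methodName) {
        final Method method;
        try {
            method = protocol.getMethod(methodName);
        } catch (NoSuchMethodException e) {
            throw new IllegalArgumentException("no such method: " + methodName, e);
        }
        return instance -> {
            try {
                @SuppressWarnings("unchecked")
                R result = (R) method.invoke(instance);
                return result;
            } catch (ReflectiveOperationException e) {
                throw new IllegalStateException(e);
            }
        };
    }

    /** Builds a counter that returns fixed values, for demonstration only. */
    static RowCounter fixed(final long rows, final long keyValues) {
        return new RowCounter() {
            @Override
            public long getRowCount() {
                return rows;
            }

            @Override
            public long getKeyValueCount() {
                return keyValues;
            }
        };
    }

    public static void main(String[] args) {
        Function<RowCounter, Long> call = forMethod(RowCounter.class, "getKeyValueCount");
        System.out.println(call.apply(fixed(3, 12)));
    }
}
```

The trade-off with a string-based lookup is that a misspelled method name is only caught at runtime, whereas the anonymous `Batch.Call` form is checked by the compiler.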

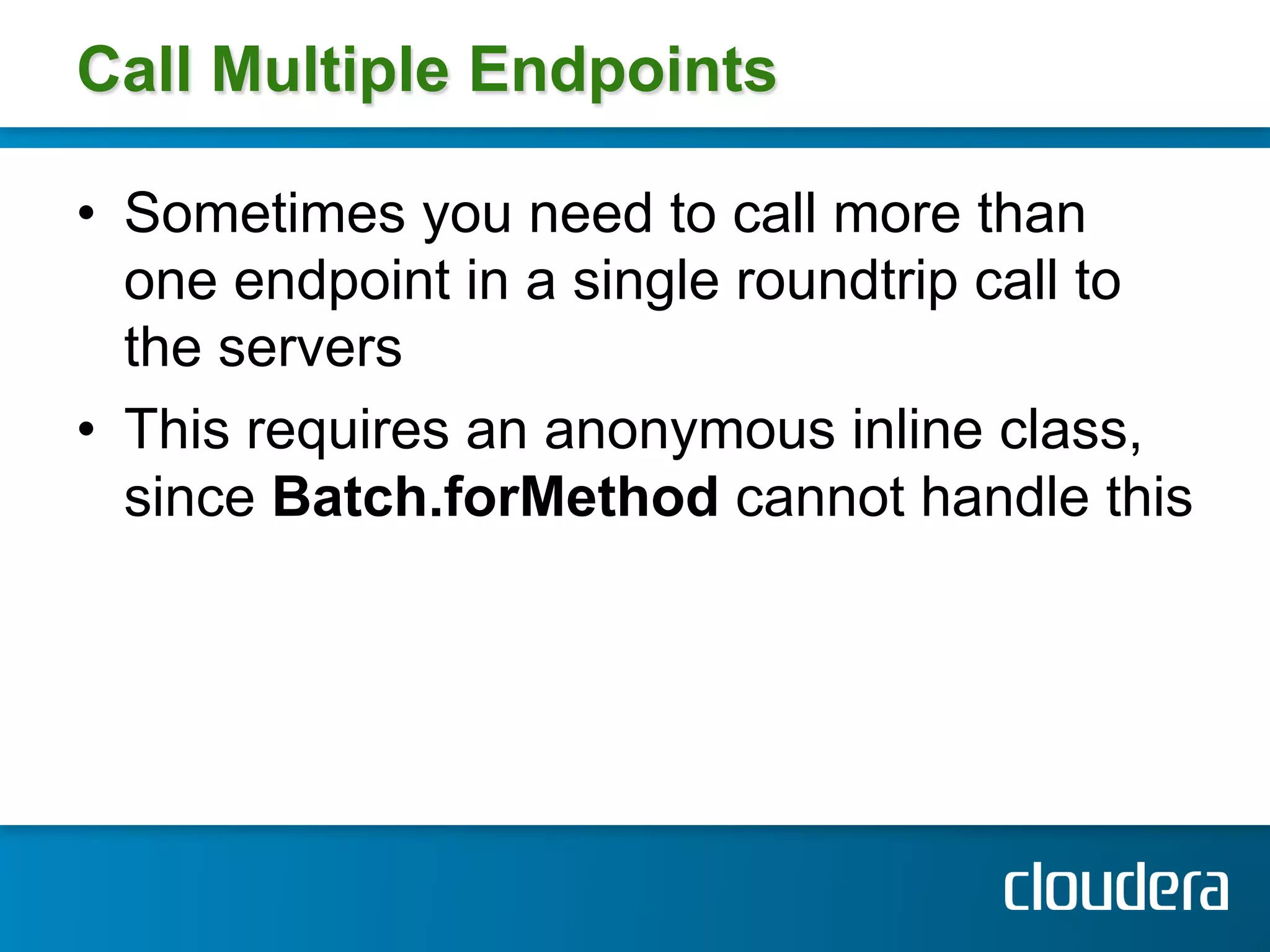

![Call Multiple Endpoints](https://image.slidesharecdn.com/3hbasecoprocessorshbaseconmay2012-120530140257-phpapp02/75/HBaseCon-2012-HBase-Coprocessors-Deploy-Shared-Functionality-Directly-on-the-Cluster-Cloudera-48-2048.jpg)

```java
Map<byte[], Pair<Long, Long>>
  results = table.coprocessorExec(
    RowCountProtocol.class, null, null,
    new Batch.Call<RowCountProtocol,
      Pair<Long, Long>>() {
      public Pair<Long, Long> call(
        RowCountProtocol counter)
        throws IOException {
        // Both endpoint methods run in the same call, once per region.
        return new Pair<Long, Long>(
          counter.getRowCount(),
          counter.getKeyValueCount());
      }
    });
```