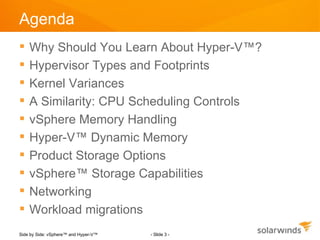

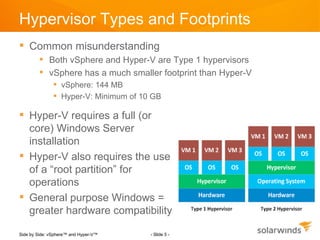

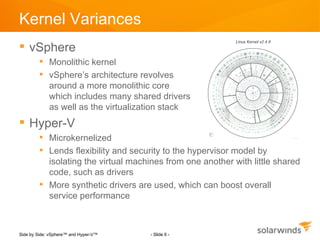

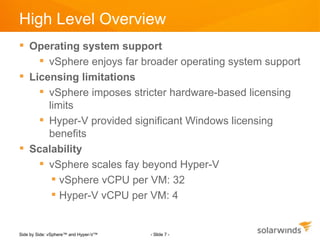

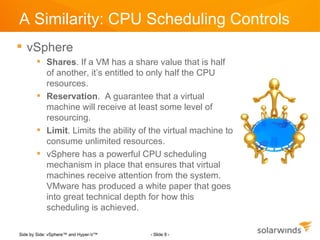

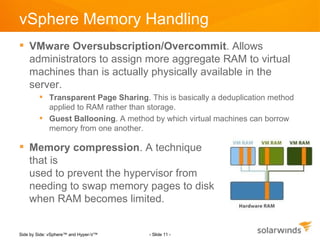

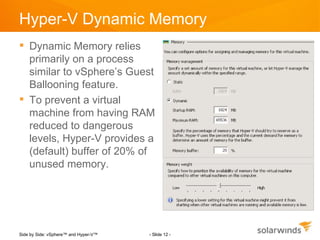

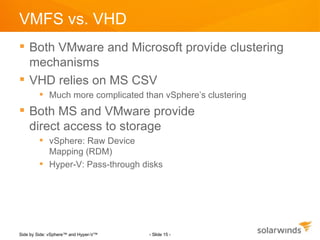

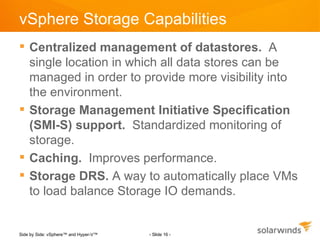

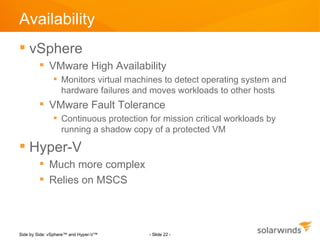

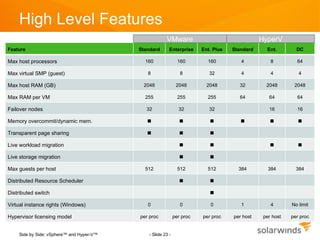

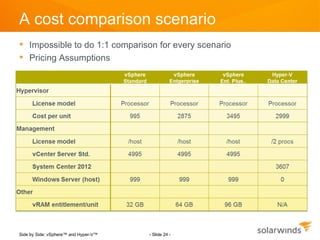

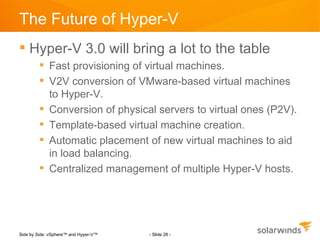

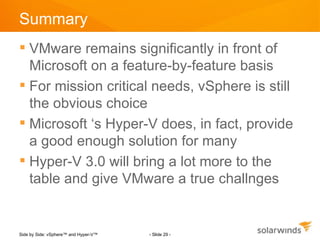

This document compares VMware vSphere and Microsoft Hyper-V, highlighting differences in architecture, resource management, and features. It credits vSphere with broader operating system support, greater scalability, and more advanced resource scheduling, while noting that Hyper-V is sufficient for many organizations, especially those already running Microsoft systems. The upcoming Hyper-V 3.0 release promises new features that may make it more competitive with vSphere.