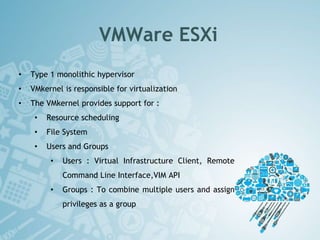

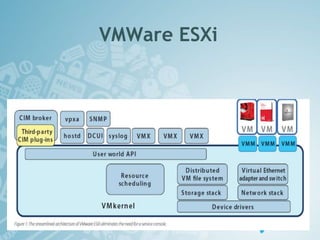

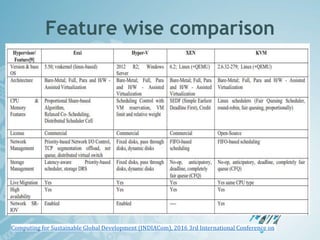

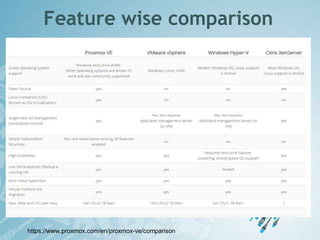

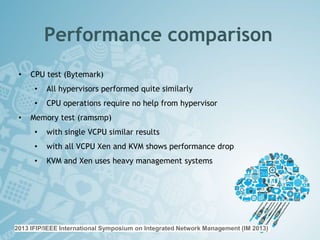

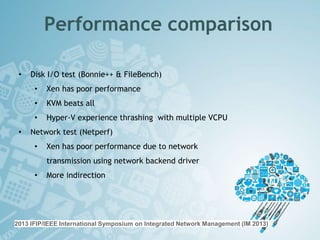

This document compares several hypervisors, including VMware ESXi, Xen, KVM, and Microsoft Hyper-V. It classifies each hypervisor as monolithic or microlithic according to how its kernel is organized, and it describes the architecture and main components of each one. It then summarizes the results of performance tests covering CPU, disk I/O, memory, and network I/O. Across these tests, KVM generally performed best, while Xen performed relatively poorly.