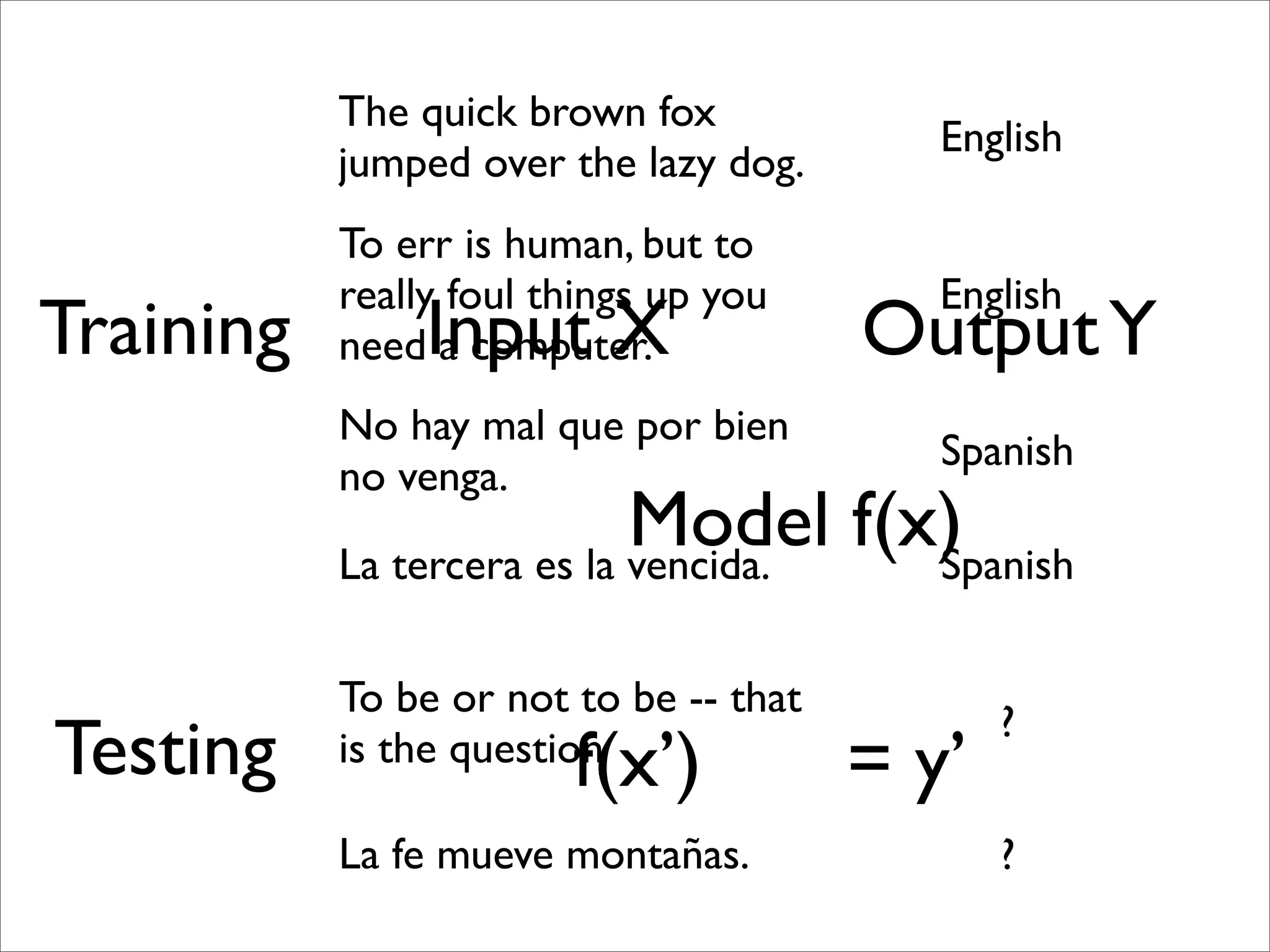

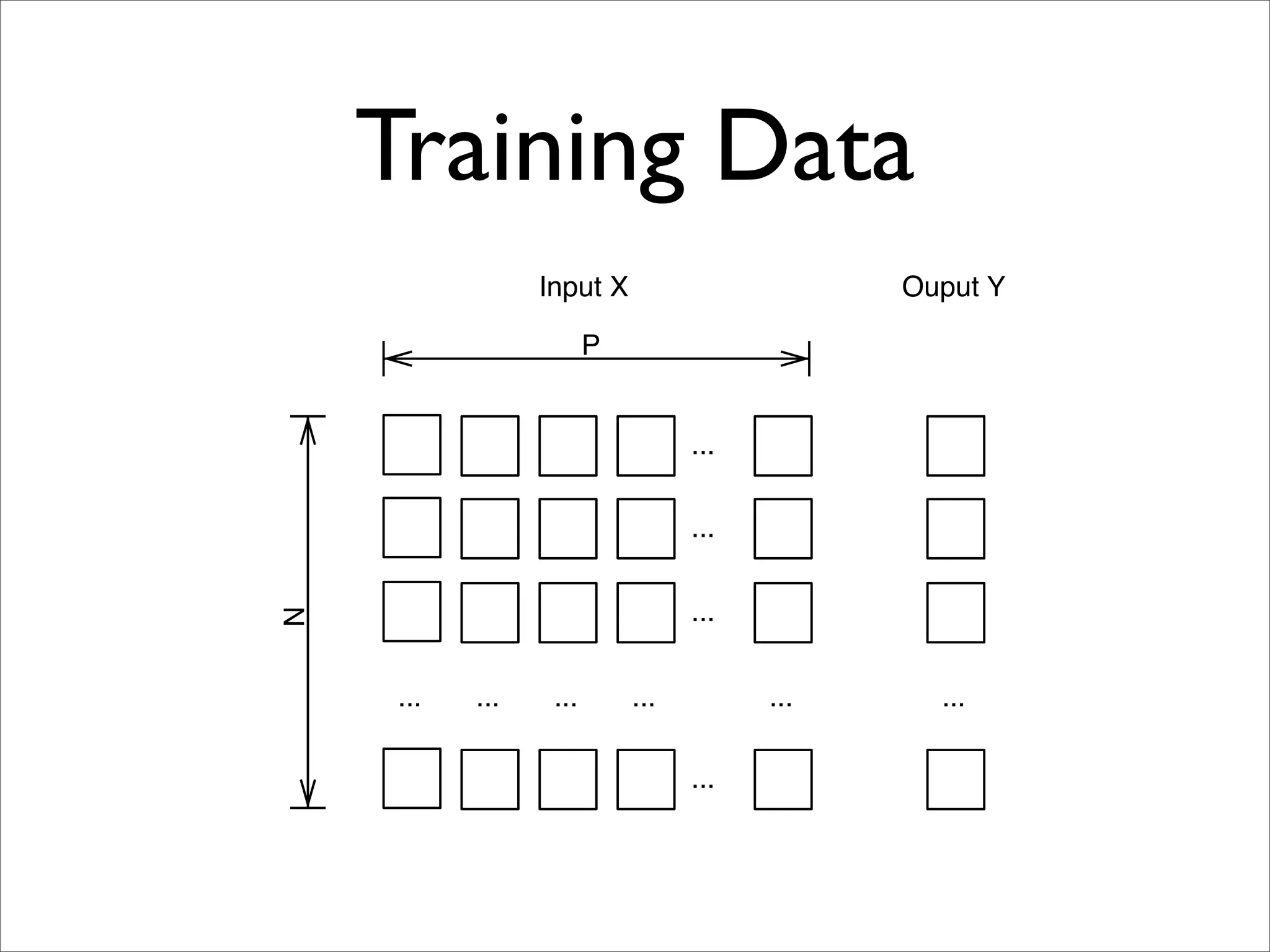

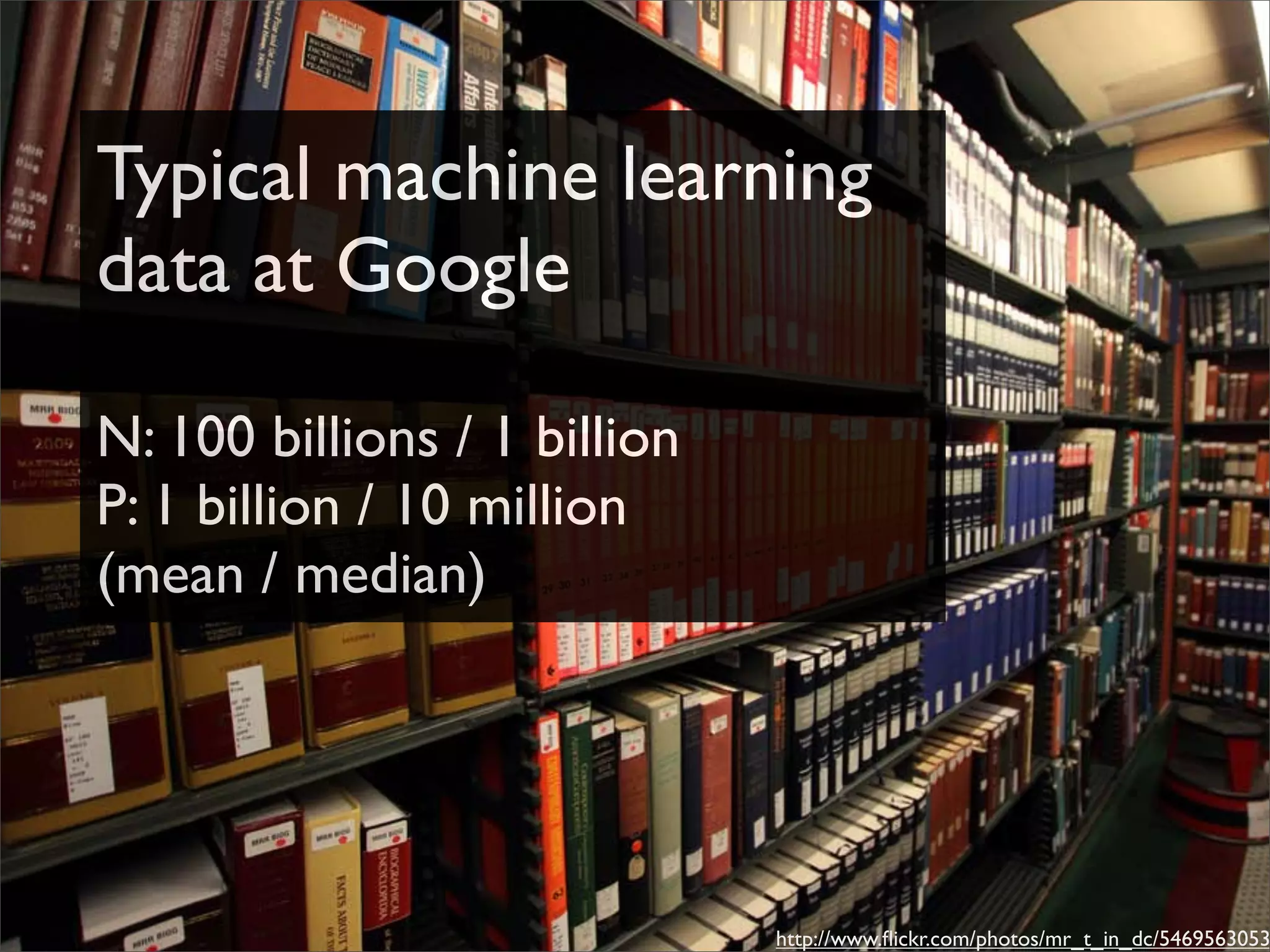

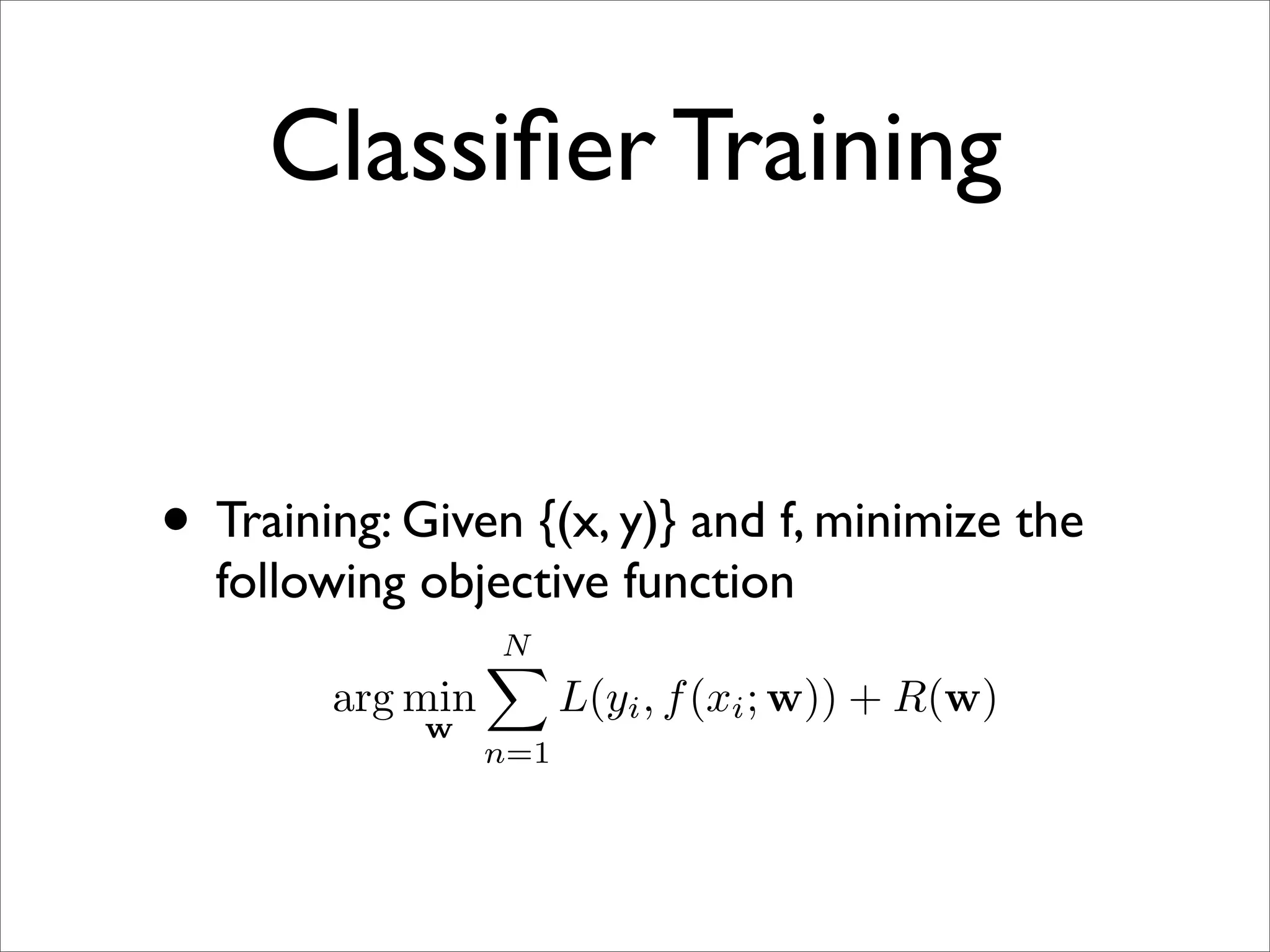

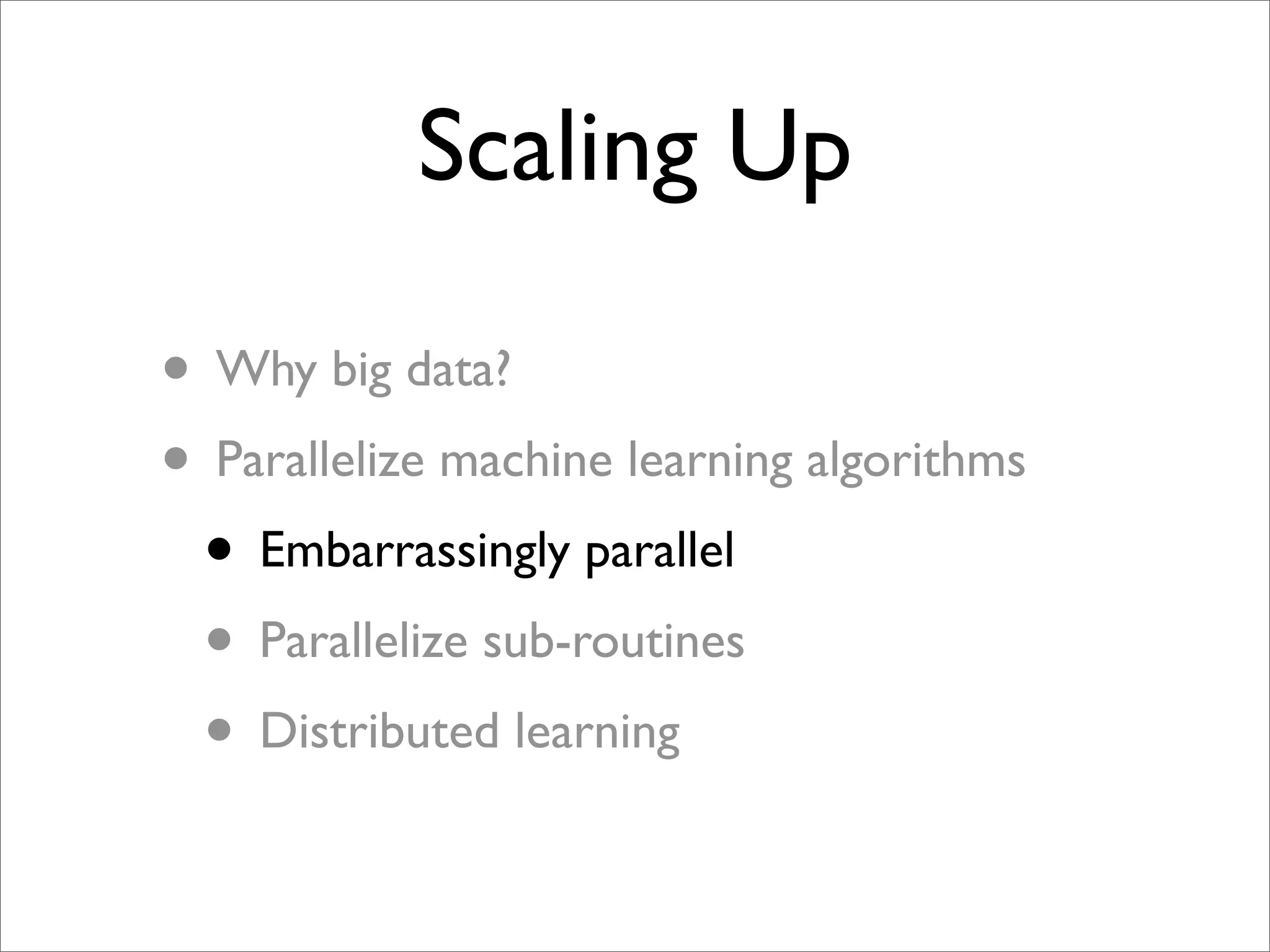

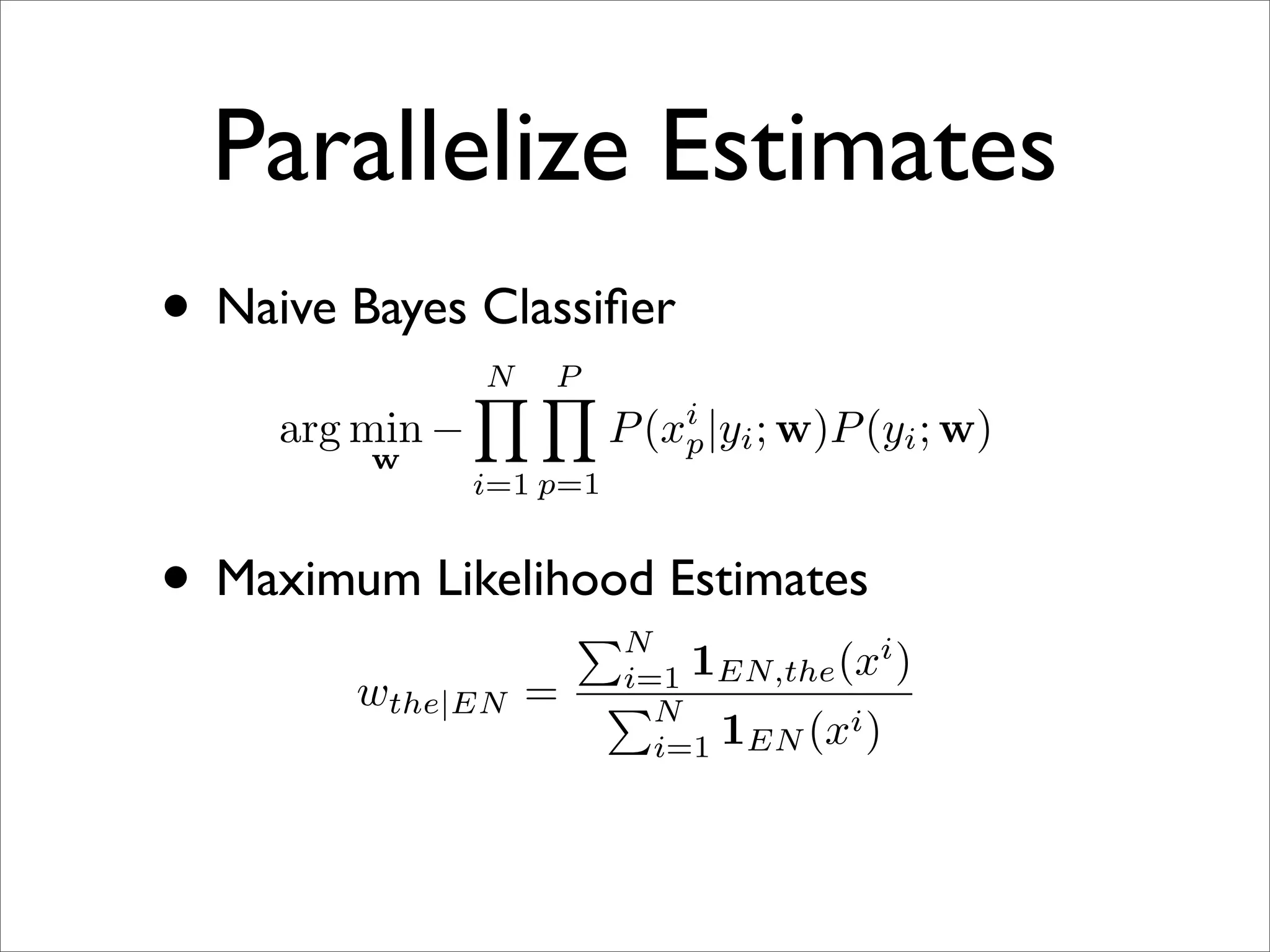

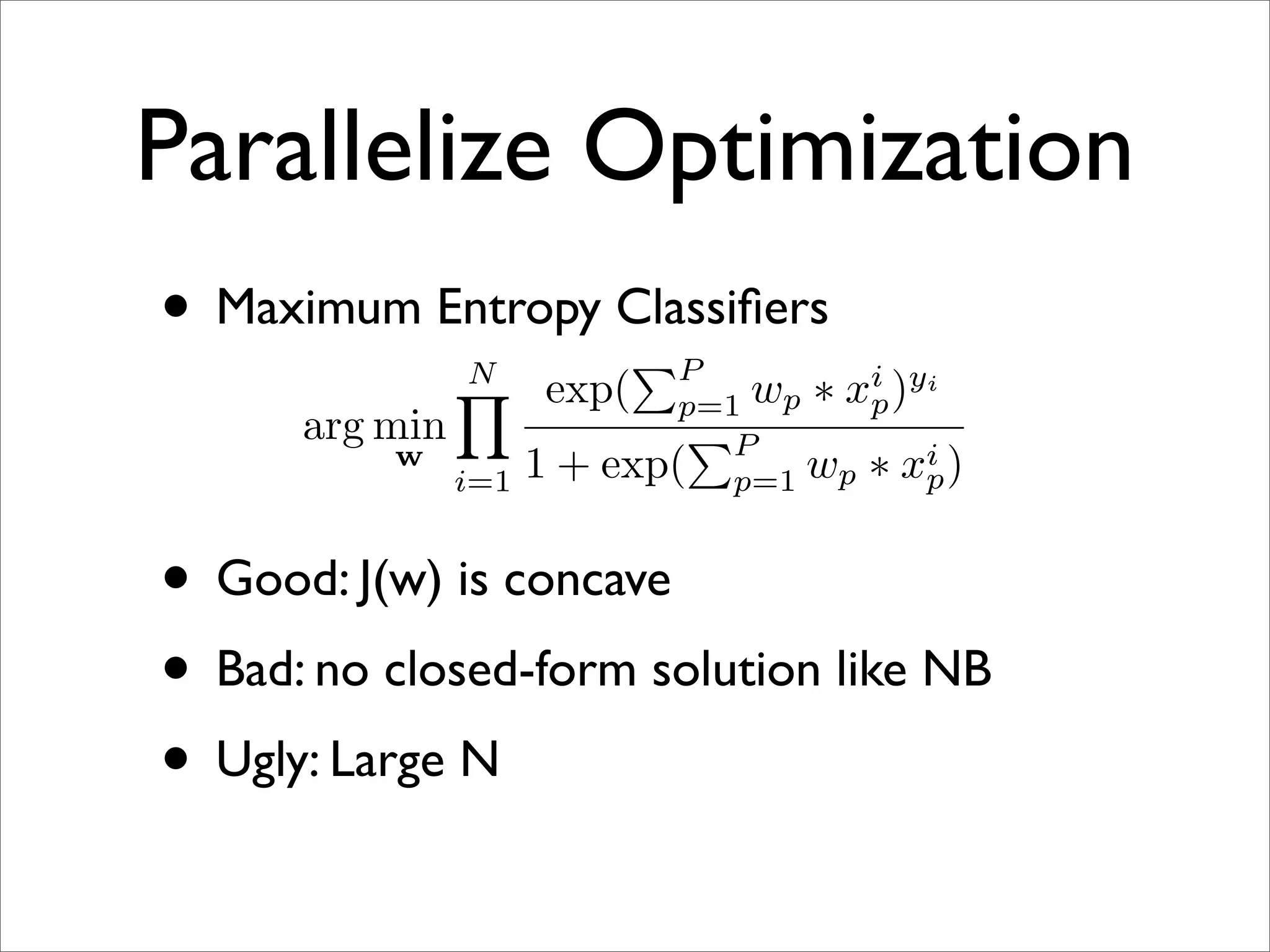

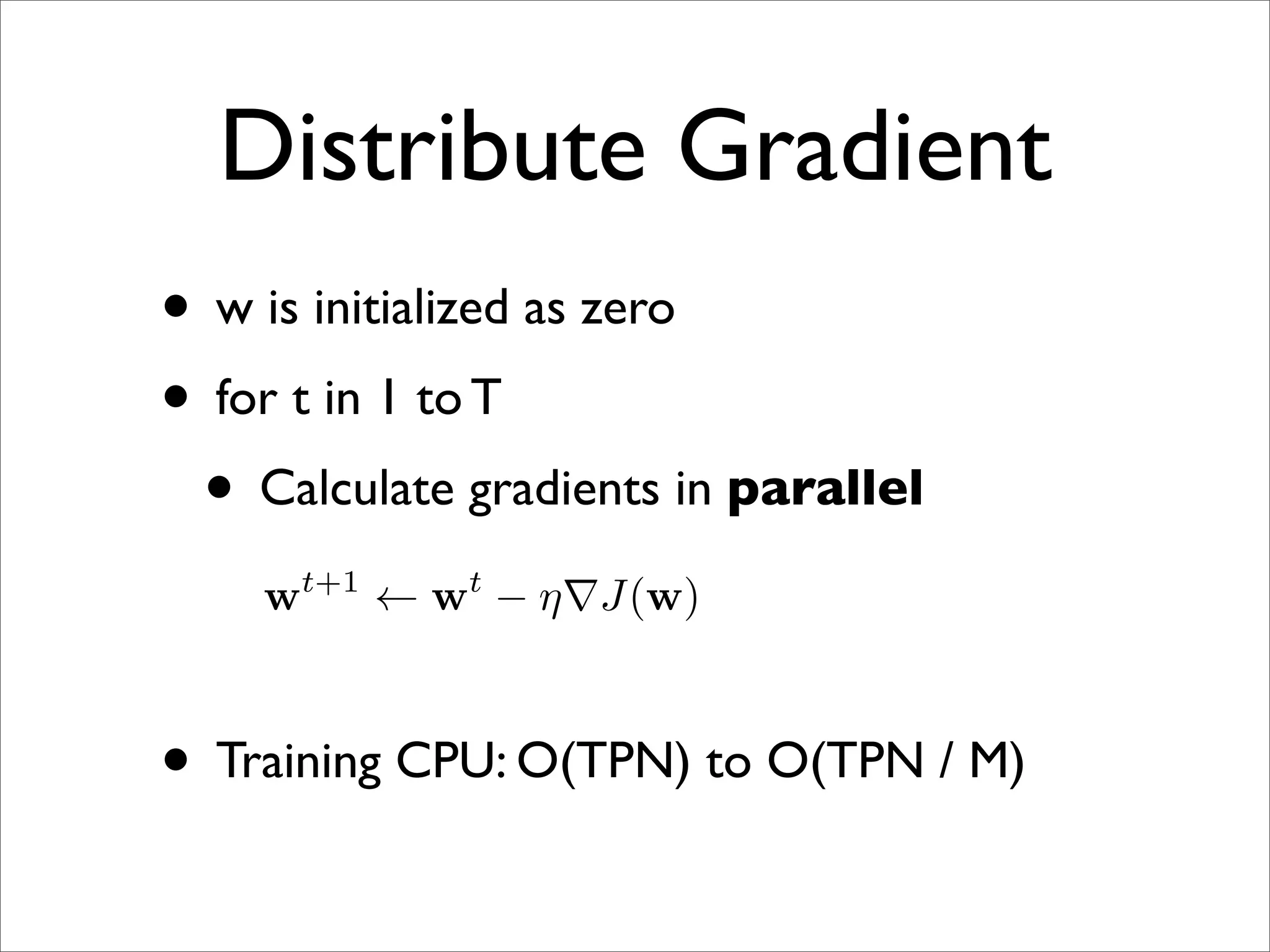

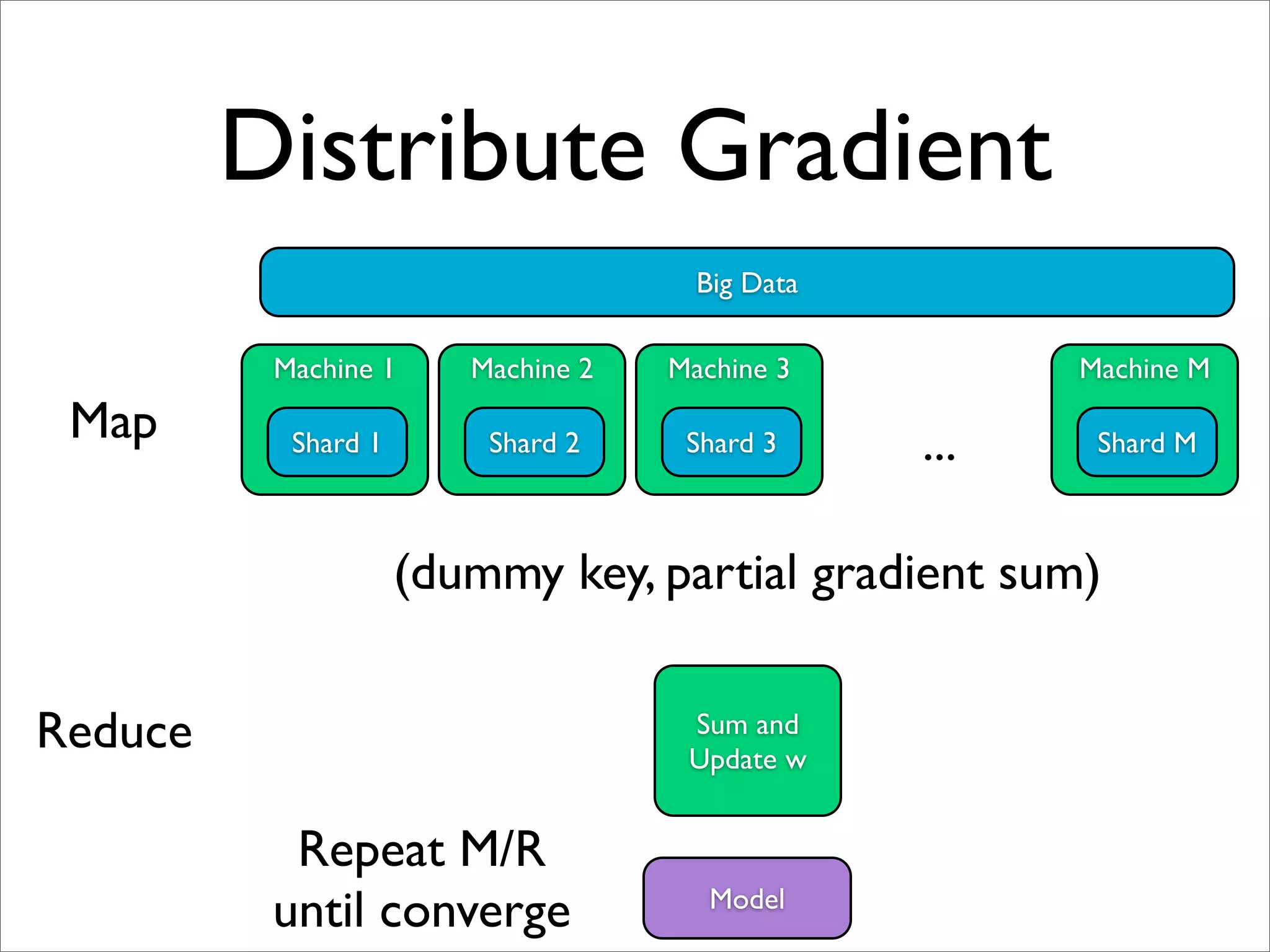

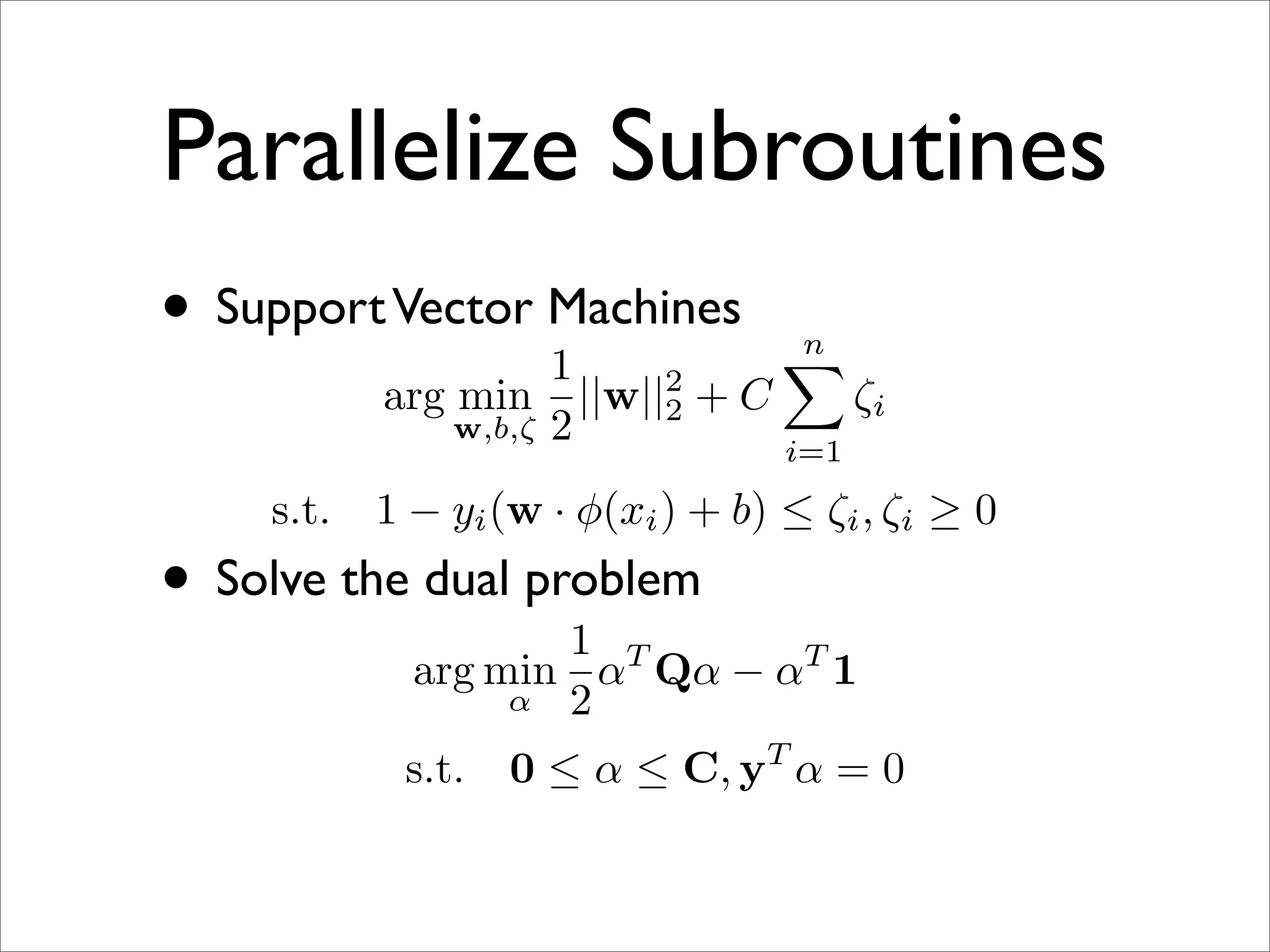

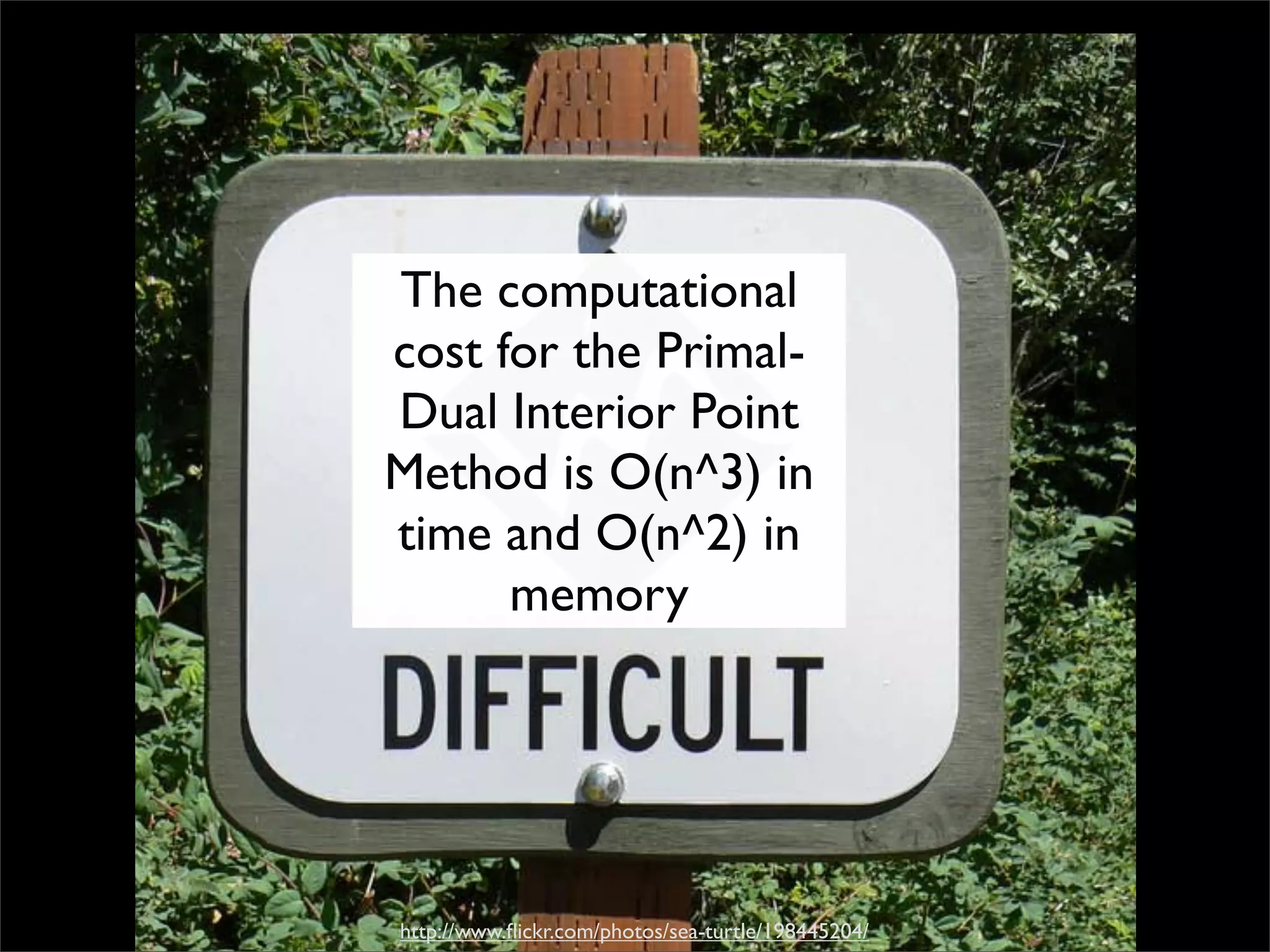

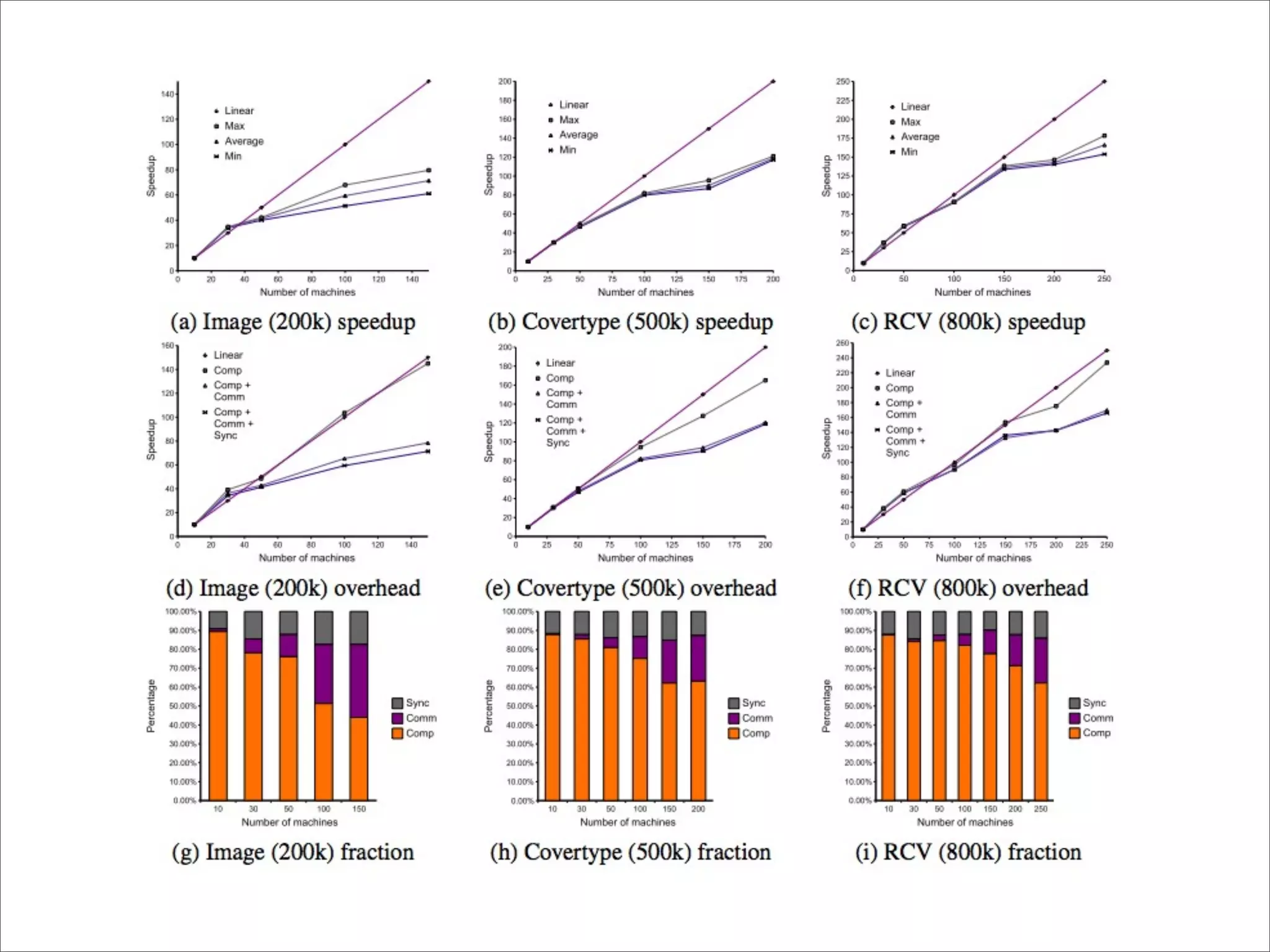

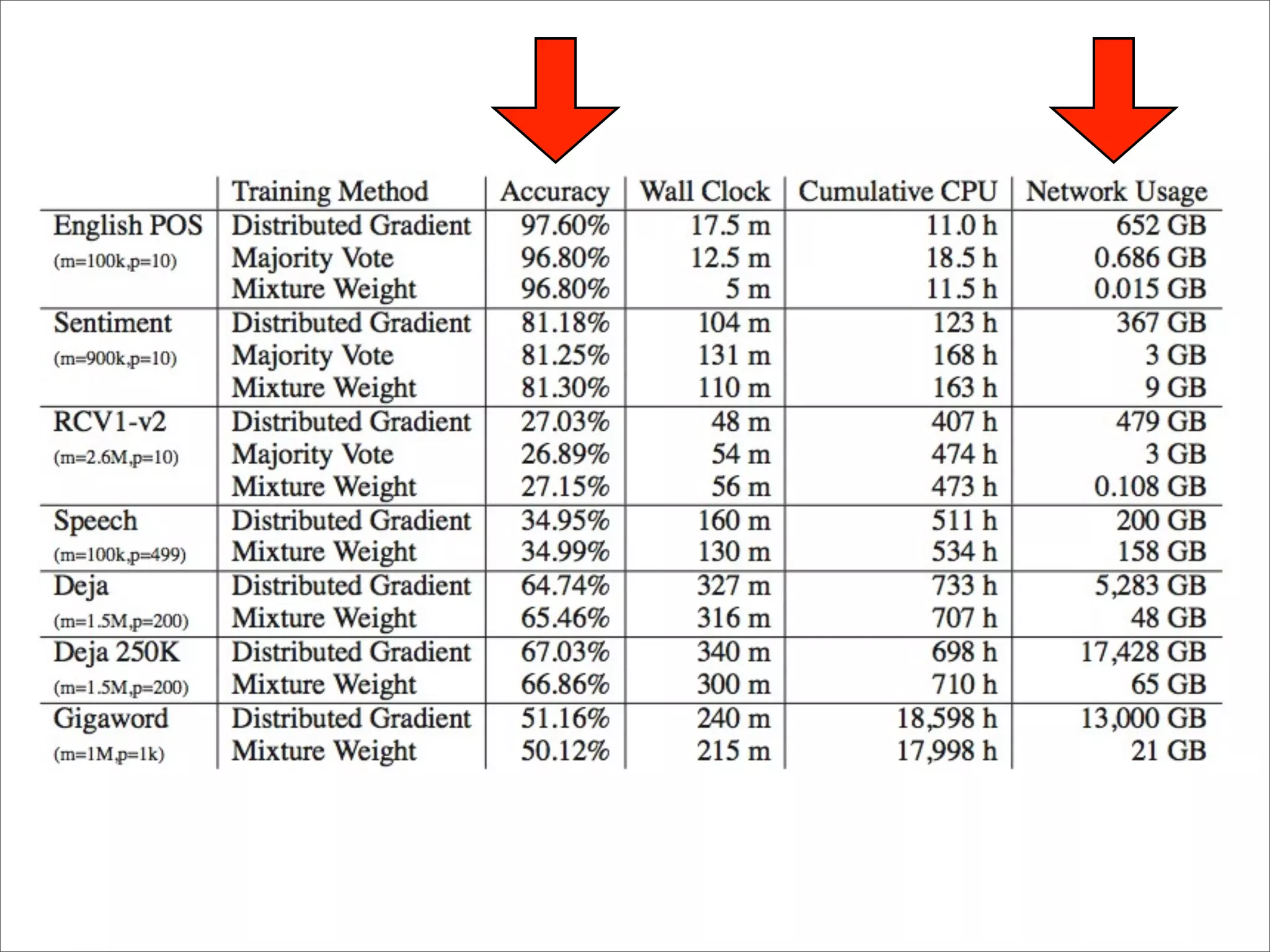

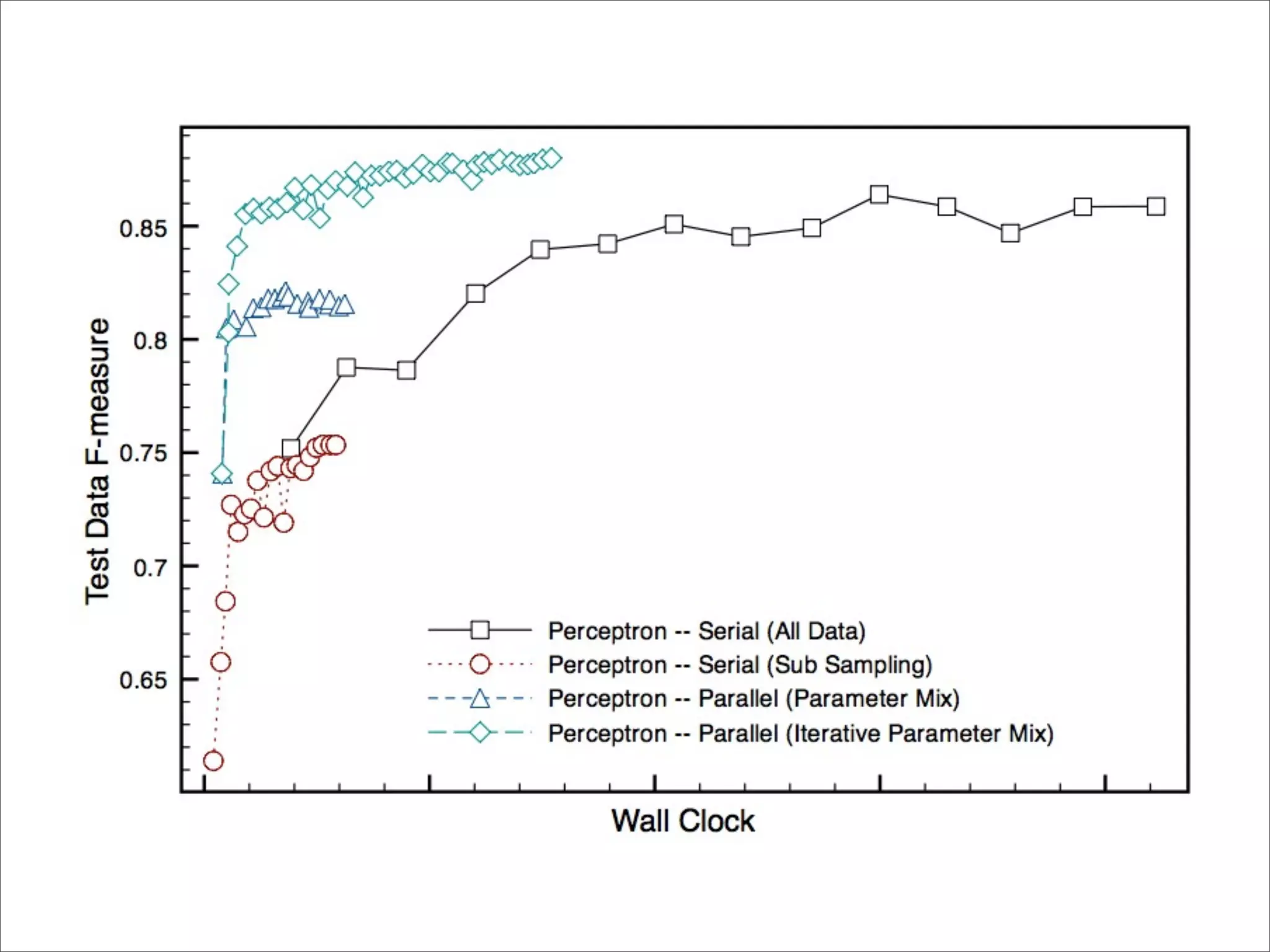

This talk covers principles and practices for scaling machine learning algorithms to big data, drawing on lessons from Google projects. It emphasizes parallelizing algorithms, distributed learning, and optimization techniques for handling large datasets efficiently in a cloud environment. The examples include linear classifiers, MapReduce word counting, parallel support vector machines, and parameter mixture methods.

![Linear Classifier

The quick brown fox jumped over the lazy dog.

‘a’ ... ‘aardvark’ ... ‘dog’ ... ‘the’ ... ‘montañas’ ...

x [ 0, ... 0, ... 1, ... 1, ... 0, ... ]

w [ 0.1, ... 132, ... 150, ... 200, ... -153, ... ]

$f(x) = w \cdot x = \sum_{p=1}^{P} w_p x_p$](https://image.slidesharecdn.com/machinelearningbigdata-maxlin-cs264opt-110331195757-phpapp01/75/Harvard-CS264-09-Machine-Learning-on-Big-Data-Lessons-Learned-from-Google-Projects-Max-Lin-Google-Research-11-2048.jpg)
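A minimal sketch of the scoring rule on this slide, assuming a binary bag-of-words encoding and a five-word vocabulary. The vocabulary and weights are the sample values shown on the slide, with the elided entries left out. The helper names `featurize` and `score` are hypothetical and not part of the talk.

```python
def featurize(text, vocabulary):
    """Map text to a binary bag-of-words vector over a fixed vocabulary."""
    tokens = {tok.strip(".,!?").lower() for tok in text.split()}
    return [1 if word in tokens else 0 for word in vocabulary]


def score(w, x):
    """Compute f(x) = w . x = sum over p of w_p * x_p."""
    return sum(wp * xp for wp, xp in zip(w, x))


# Sample entries from the slide; the real vocabulary is far larger.
vocabulary = ["a", "aardvark", "dog", "the", "montañas"]
weights = [0.1, 132, 150, 200, -153]

x = featurize("The quick brown fox jumped over the lazy dog.", vocabulary)
f_x = score(weights, x)
print(x, f_x)
```

Only the entries for 'dog' and 'the' are non-zero in `x`, so the score is the sum of those two weights. With a realistic vocabulary a sparse representation (for example a dict of active indices) would replace the dense list.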

![Why not Small Data?

[Banko and Brill, 2001]](https://image.slidesharecdn.com/machinelearningbigdata-maxlin-cs264opt-110331195757-phpapp01/75/Harvard-CS264-09-Machine-Learning-on-Big-Data-Lessons-Learned-from-Google-Projects-Max-Lin-Google-Research-19-2048.jpg)

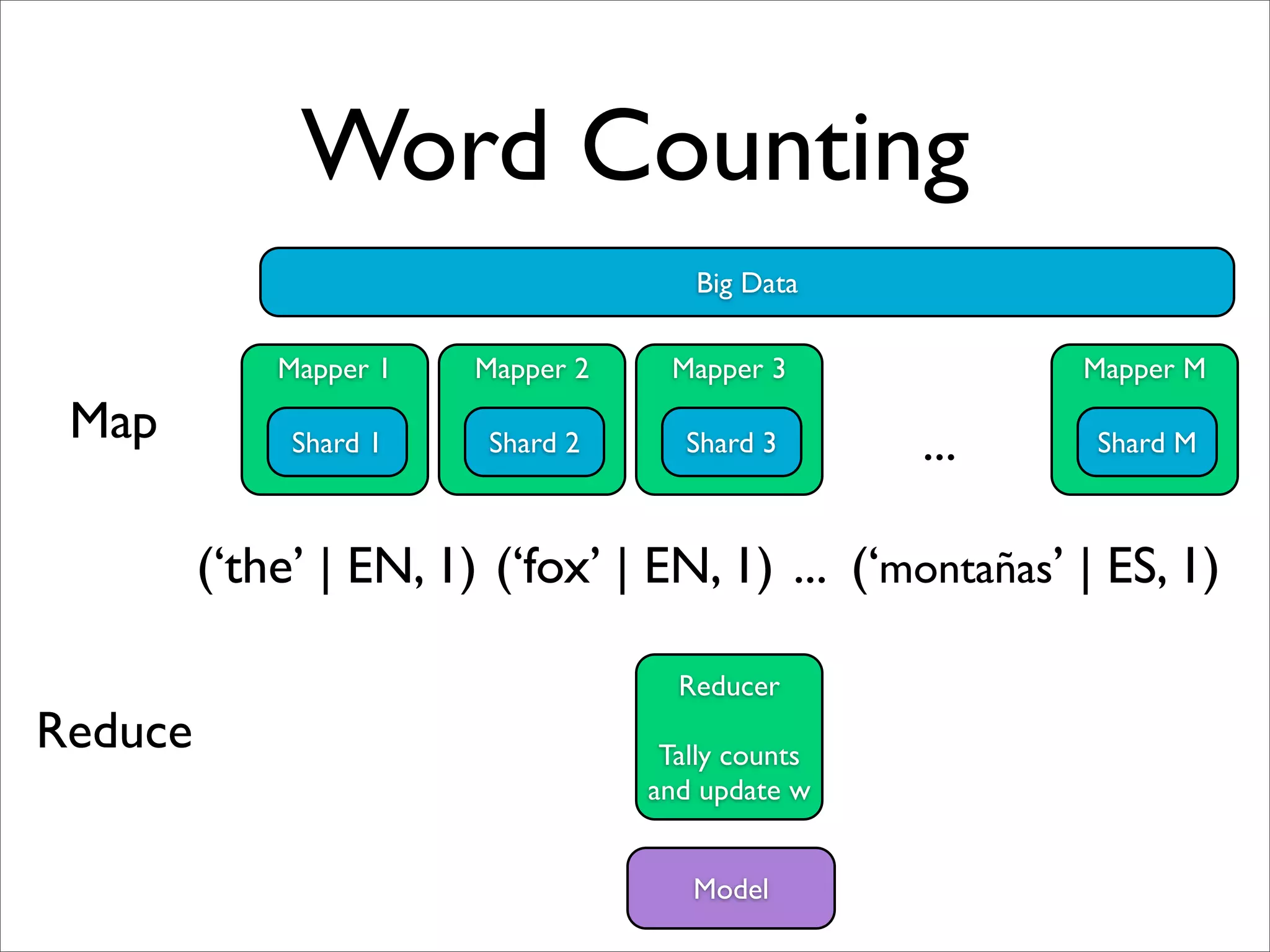

![Word Counting

X: “The quick brown fox ...”

Y: EN

Map: (‘the|EN’, 1), (‘quick|EN’, 1), (‘brown|EN’, 1)

Reduce: [ (‘the|EN’, 1), (‘the|EN’, 1), (‘the|EN’, 1) ]

C(‘the’|EN) = SUM of values = 3

$w_{\text{the}|\text{EN}} = C(\text{the}|\text{EN}) / C(\text{EN})$](https://image.slidesharecdn.com/machinelearningbigdata-maxlin-cs264opt-110331195757-phpapp01/75/Harvard-CS264-09-Machine-Learning-on-Big-Data-Lessons-Learned-from-Google-Projects-Max-Lin-Google-Research-22-2048.jpg)
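The word-counting slide can be sketched as a single-process simulation of map, shuffle, and reduce. This is an illustration under stated assumptions, not the implementation used in the talk: the function names and the three toy documents are hypothetical, and C(EN) is assumed here to be the total number of tokens labeled EN, which the slide does not spell out.

```python
from collections import defaultdict


def map_fn(text, label):
    """Emit ('word|label', 1) for every token in one labeled document."""
    for token in text.lower().split():
        yield (f"{token}|{label}", 1)


def reduce_fn(key, values):
    """Sum the counts emitted for one key."""
    return key, sum(values)


def run_mapreduce(documents):
    """Simulate map -> shuffle (group by key) -> reduce in one process."""
    grouped = defaultdict(list)
    for text, label in documents:
        for key, value in map_fn(text, label):
            grouped[key].append(value)
    return dict(reduce_fn(key, values) for key, values in grouped.items())


# Hypothetical toy corpus: three English documents.
documents = [
    ("the quick brown fox", "EN"),
    ("the lazy dog", "EN"),
    ("the end", "EN"),
]
counts = run_mapreduce(documents)

# Assumption: C(EN) is the total token count for label EN.
total_en = sum(c for key, c in counts.items() if key.endswith("|EN"))
w_the_en = counts["the|EN"] / total_en
print(counts["the|EN"], total_en, w_the_en)
```

In a real MapReduce job the grouping step is done by the framework, and the mappers run on separate machines over separate input shards.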

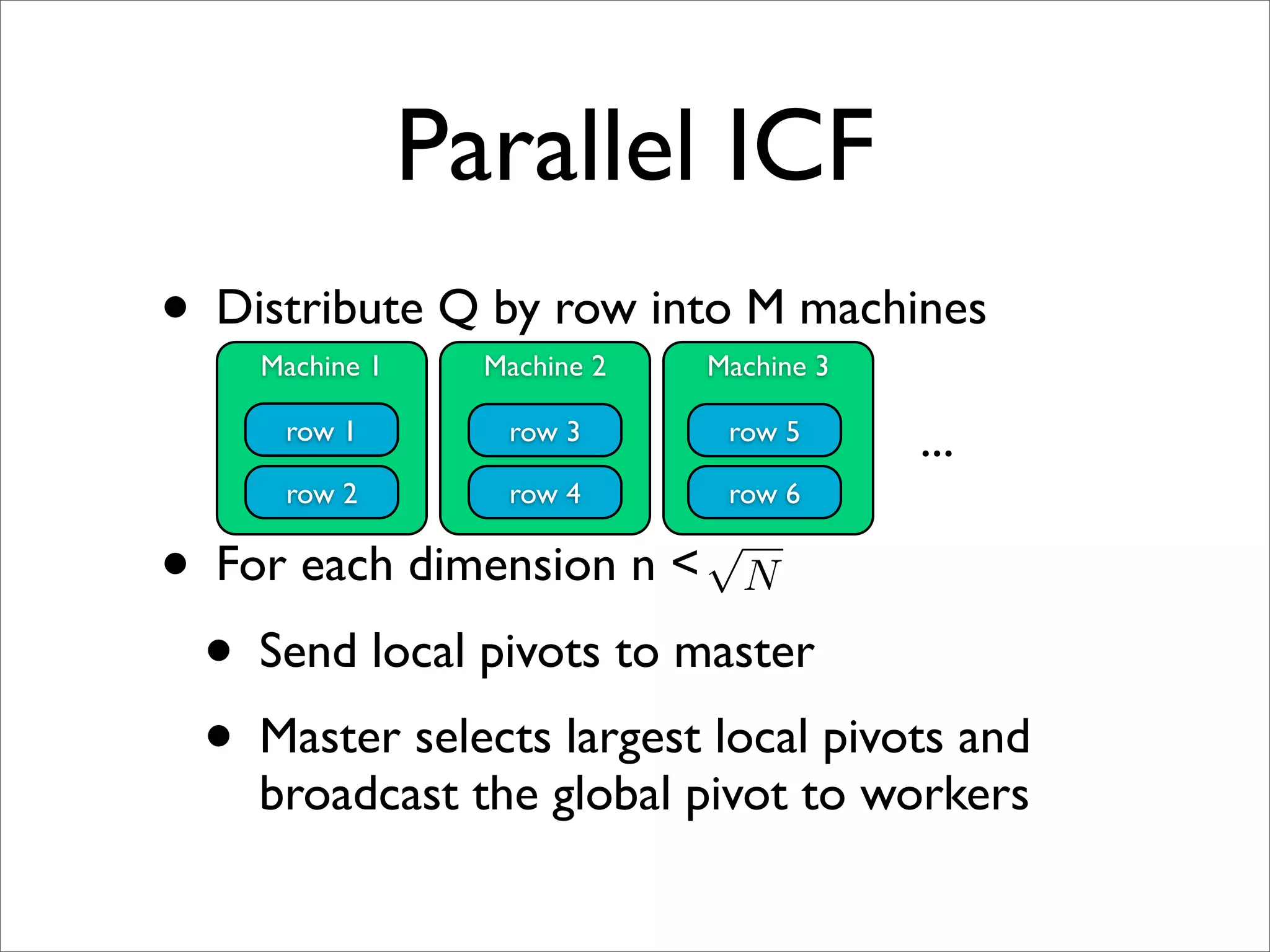

![Parallel SVM [Chang et al, 2007]

• Parallel, row-wise incomplete Cholesky Factorization for Q

• Parallel interior point method

• Time O(n^3) becomes O(n^2 / M)

• Memory O(n^2) becomes O(n√N / M)

• Parallel Support Vector Machines (psvm) http://code.google.com/p/psvm/

• Implement in MPI](https://image.slidesharecdn.com/machinelearningbigdata-maxlin-cs264opt-110331195757-phpapp01/75/Harvard-CS264-09-Machine-Learning-on-Big-Data-Lessons-Learned-from-Google-Projects-Max-Lin-Google-Research-32-2048.jpg)

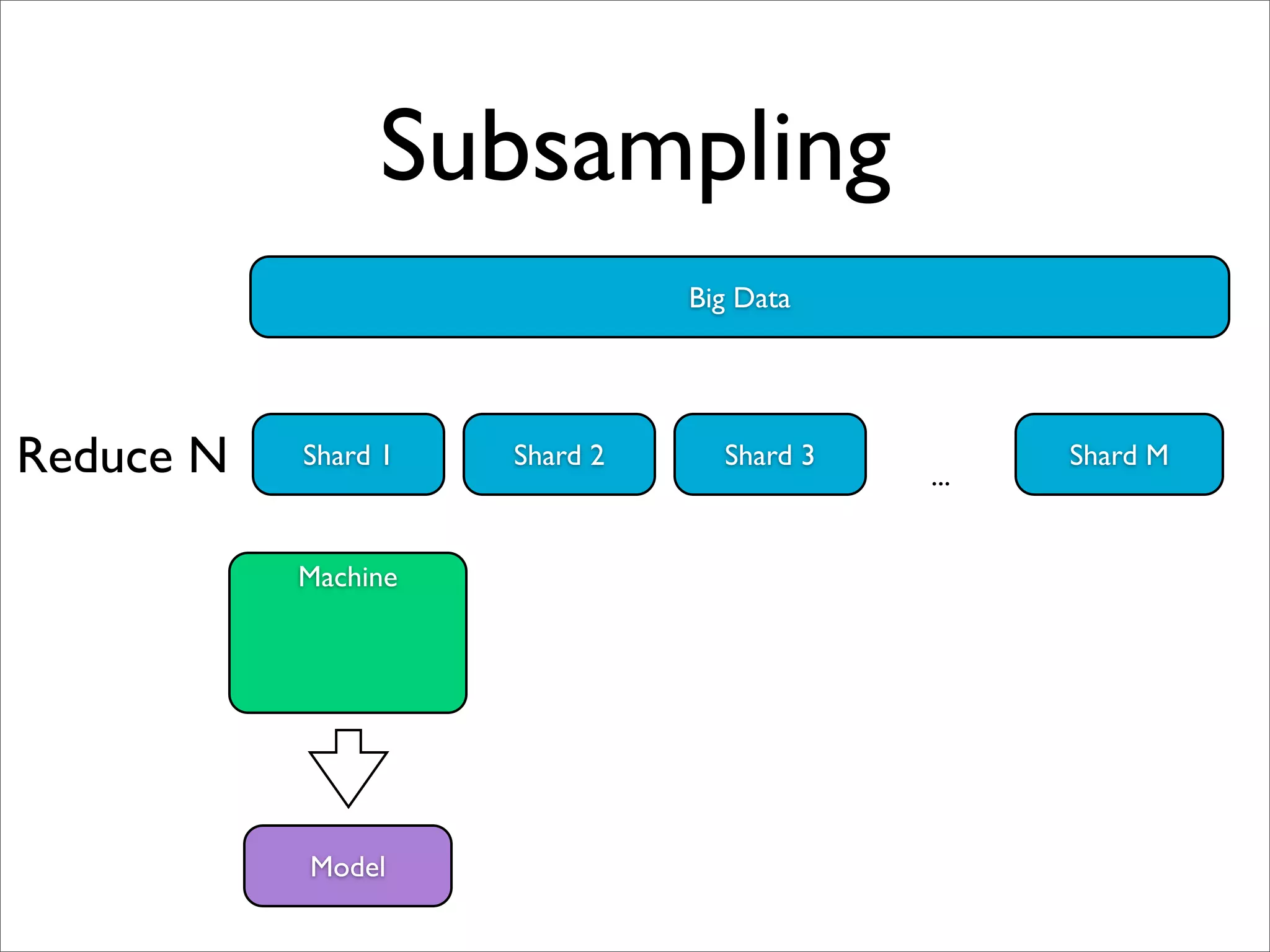

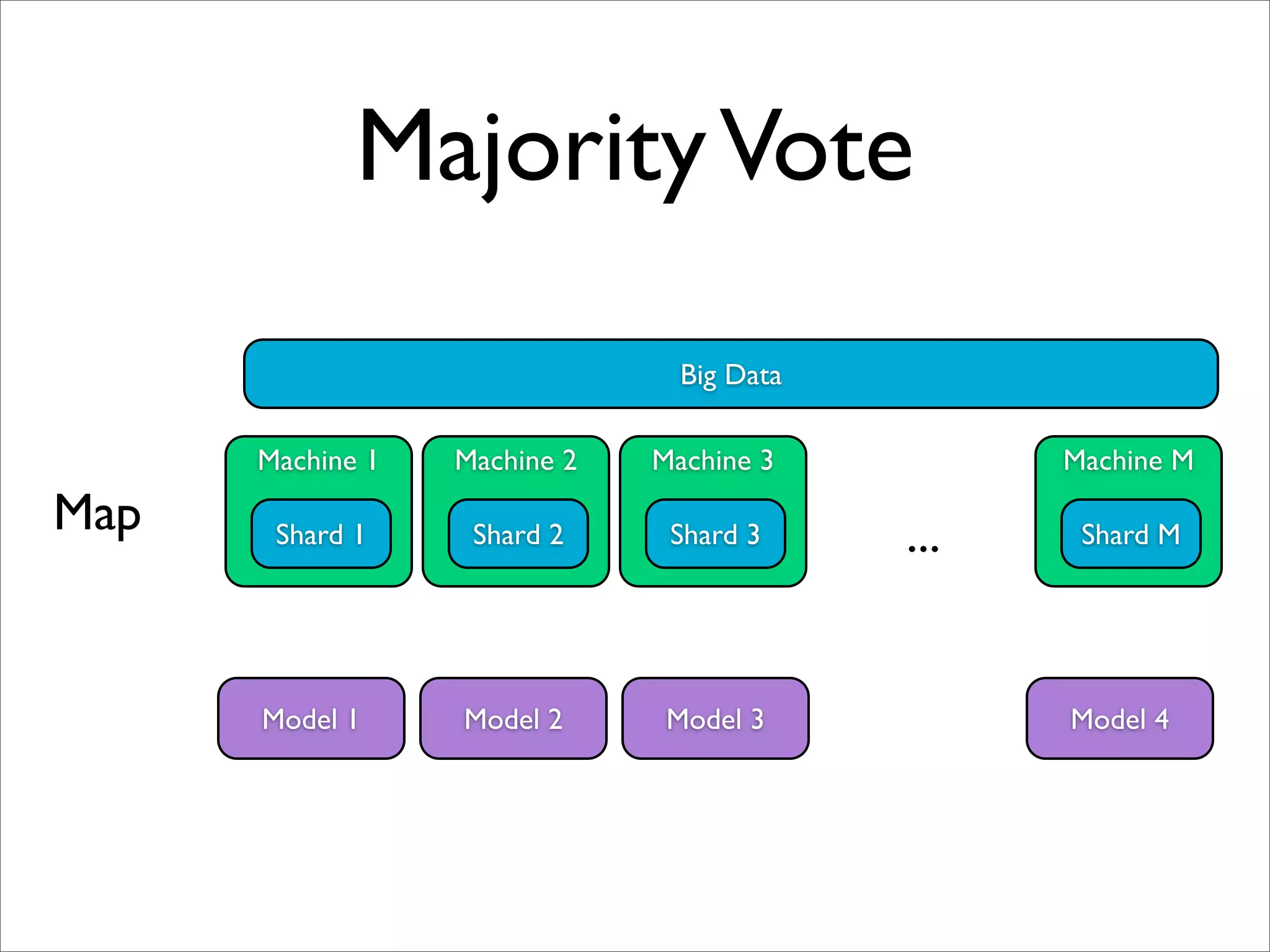

![Parameter Mixture [Mann et al, 2009]

Big Data

Machine 1 Machine 2 Machine 3 Machine M

Map Shard 1 Shard 2 Shard 3 ... Shard M

(dummy key, w1) (dummy key, w2) ...

Reduce Average w

Model](https://image.slidesharecdn.com/machinelearningbigdata-maxlin-cs264opt-110331195757-phpapp01/75/Harvard-CS264-09-Machine-Learning-on-Big-Data-Lessons-Learned-from-Google-Projects-Max-Lin-Google-Research-38-2048.jpg)
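The parameter mixture diagram can be sketched as follows: each shard trains its own model independently (the map step), and a single reduce step averages the resulting weight vectors. As an assumption for illustration, a plain perceptron stands in for the per-shard learner, and the shards run sequentially in one process. The function names and toy data are hypothetical.

```python
def train_perceptron(shard, dim, epochs=5):
    """Train a perceptron on one shard; labels y are -1 or +1."""
    w = [0.0] * dim
    for _ in range(epochs):
        for x, y in shard:
            # Update only on a mistake (or a zero margin).
            if y * sum(wi * xi for wi, xi in zip(w, x)) <= 0:
                w = [wi + y * xi for wi, xi in zip(w, x)]
    return w


def parameter_mixture(shards, dim):
    """Map: train one model per shard. Reduce: average the weights."""
    local_weights = [train_perceptron(shard, dim) for shard in shards]
    return [sum(ws) / len(local_weights) for ws in zip(*local_weights)]


# Hypothetical toy data: features are [bias, x1, x2].
shards = [
    [([1.0, 2.0, 1.0], +1), ([1.0, -2.0, -1.0], -1)],
    [([1.0, 1.0, 3.0], +1), ([1.0, -1.0, -3.0], -1)],
]
w_avg = parameter_mixture(shards, dim=3)
print(w_avg)
```

Because every mapper emits its weights under the same dummy key, all of them arrive at one reducer, which is what makes the single averaging step possible.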

![Iterative Param Mixture [McDonald et al., 2010]

Big Data

Machine 1 Machine 2 Machine 3 Machine M

Map Shard 1 Shard 2 Shard 3 ... Shard M

(dummy key, w1) (dummy key, w2) ...

Reduce after each epoch: Average w

Model](https://image.slidesharecdn.com/machinelearningbigdata-maxlin-cs264opt-110331195757-phpapp01/75/Harvard-CS264-09-Machine-Learning-on-Big-Data-Lessons-Learned-from-Google-Projects-Max-Lin-Google-Research-41-2048.jpg)
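The iterative variant differs in one respect: the weights are averaged after every epoch, and each shard starts its next epoch from the averaged vector rather than from its own local one. A minimal sketch, assuming a perceptron learner and sequential execution in one process; the function names and toy data are hypothetical.

```python
def perceptron_epoch(shard, w_start):
    """Run one perceptron pass over a shard, starting from w_start."""
    w = list(w_start)
    for x, y in shard:  # labels y are -1 or +1
        if y * sum(wi * xi for wi, xi in zip(w, x)) <= 0:
            w = [wi + y * xi for wi, xi in zip(w, x)]
    return w


def iterative_parameter_mixture(shards, dim, epochs=3):
    """Average the per-shard weights after each epoch and redistribute."""
    w = [0.0] * dim
    for _ in range(epochs):
        # Map: every shard trains for one epoch from the shared weights.
        local_weights = [perceptron_epoch(shard, w) for shard in shards]
        # Reduce: average, then use the result as the next starting point.
        w = [sum(ws) / len(local_weights) for ws in zip(*local_weights)]
    return w


# Hypothetical toy data: features are [bias, x1, x2].
shards = [
    [([1.0, 2.0, 1.0], +1), ([1.0, -2.0, -1.0], -1)],
    [([1.0, 1.0, 3.0], +1), ([1.0, -1.0, -3.0], -1)],
]
w_final = iterative_parameter_mixture(shards, dim=3)
print(w_final)
```

The trade-off is communication: this version needs one synchronization round per epoch, whereas the single-shot mixture needs only one round in total.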

![Multi-shard Combiner

[Chandra et al., 2010]](https://image.slidesharecdn.com/machinelearningbigdata-maxlin-cs264opt-110331195757-phpapp01/75/Harvard-CS264-09-Machine-Learning-on-Big-Data-Lessons-Learned-from-Google-Projects-Max-Lin-Google-Research-56-2048.jpg)