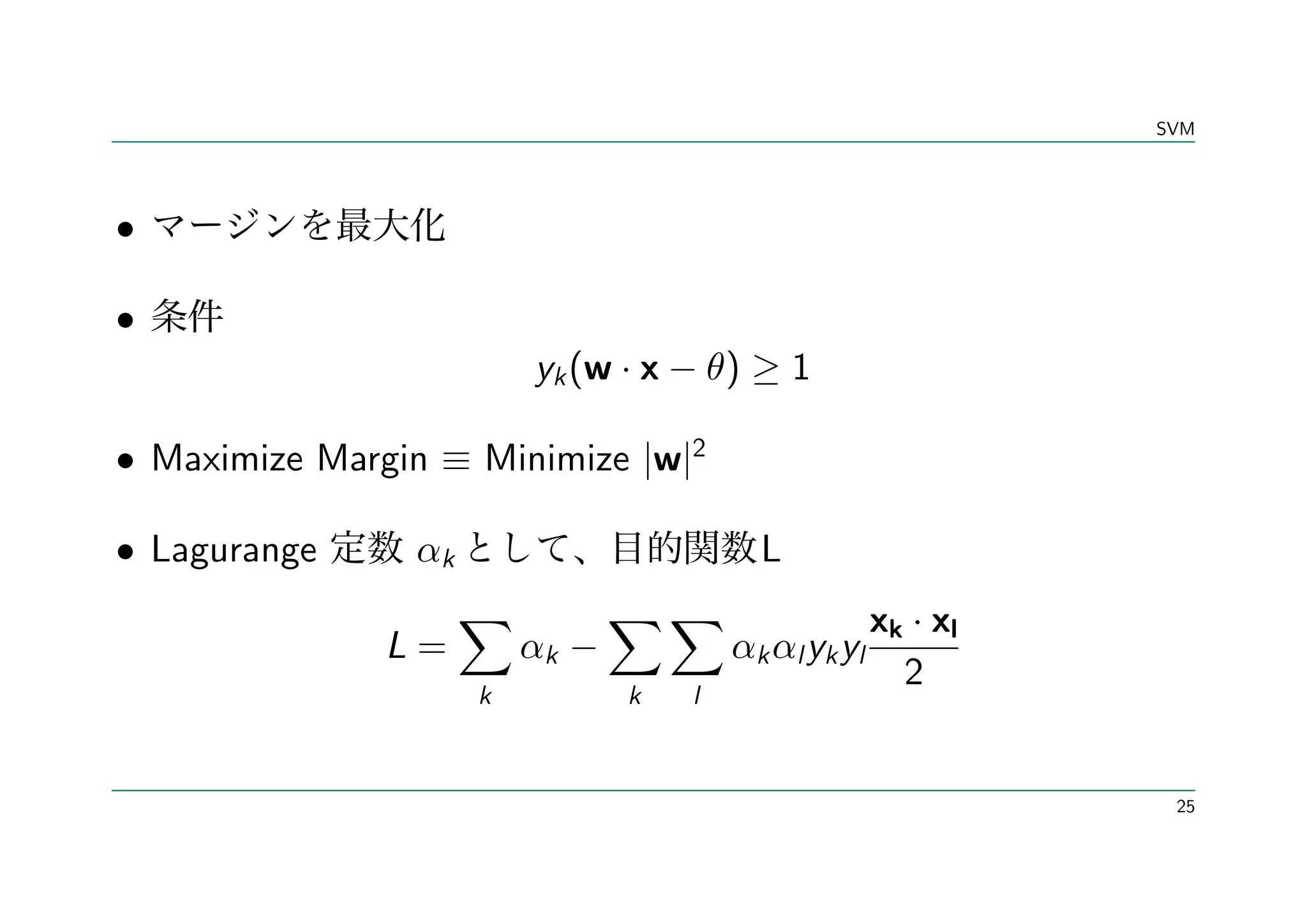

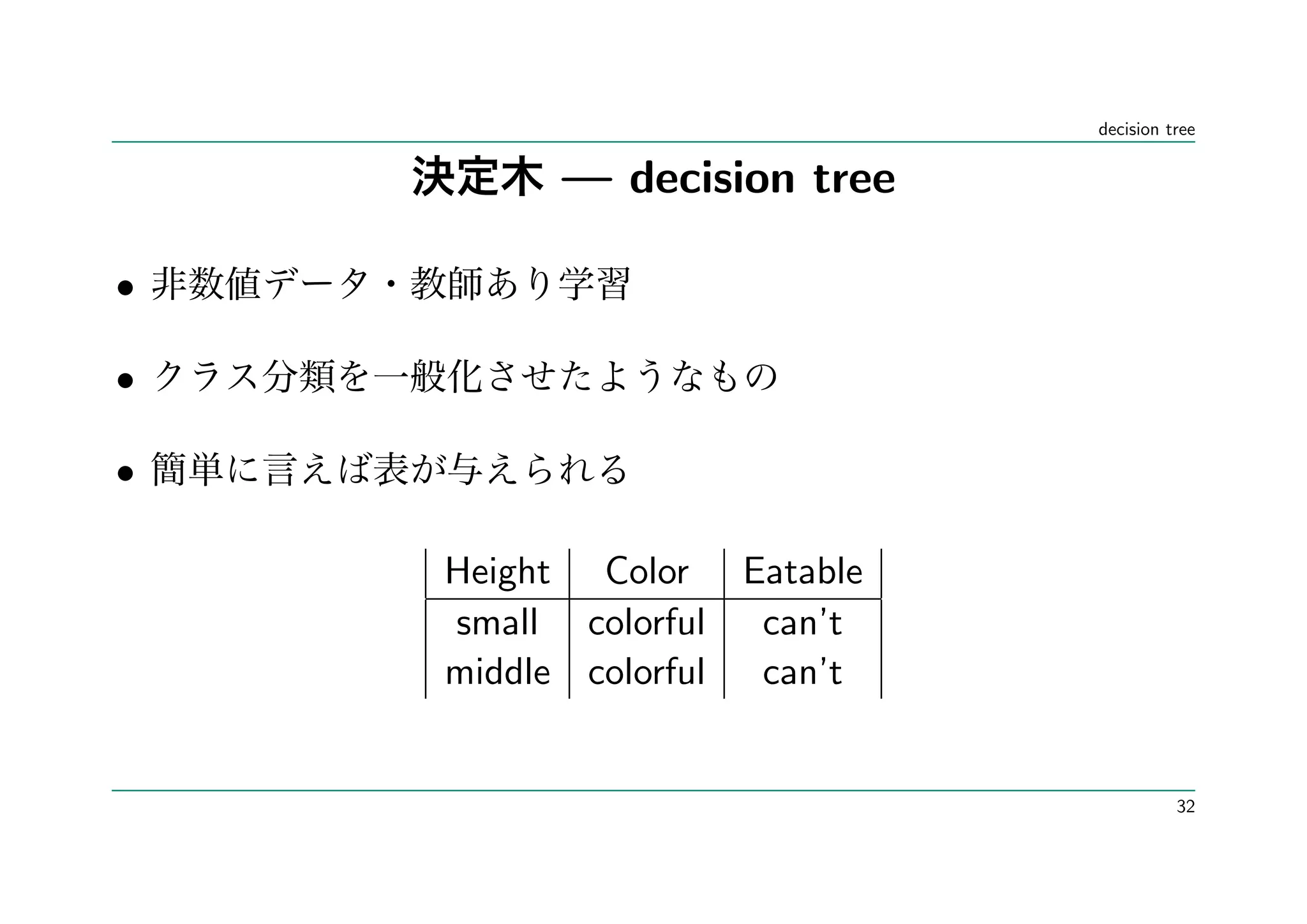

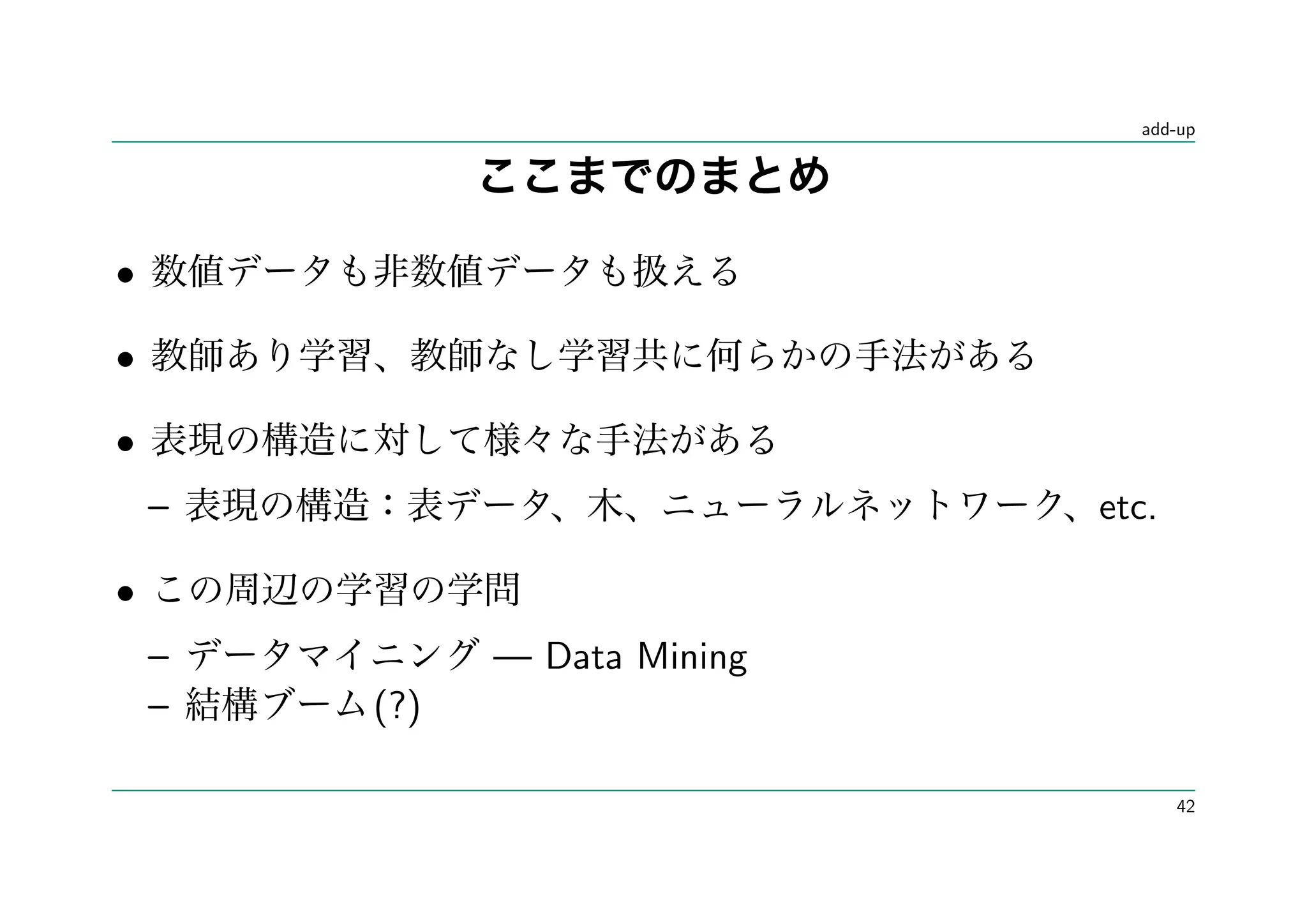

This document covers machine learning topics, including supervised learning techniques such as support vector machines, decision trees, and neural networks, and unsupervised learning techniques such as clustering algorithms. It gives short quicksort implementations in Haskell and OCaml, and it introduces probably approximately correct (PAC) learning and boosting.

![Slide 4](https://image.slidesharecdn.com/online-100813072552-phpapp02/75/Foils-4-2048.jpg)

• Haskell 1.0, Haskell 98, Haskell 2010

    qsort [] = []
    qsort (p:xs) = qsort lt ++ [p] ++ qsort gteq
      where
        lt   = [x | x <- xs, x < p]
        gteq = [x | x <- xs, x >= p]
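As a rough usage sketch, the definition above can be given an explicit type and tried on a small list. The `Ord a` type signature and the `sample` name are assumptions added for illustration; neither appears on the slide.

```haskell
-- List-comprehension quicksort from the slide, with an assumed type signature.
qsort :: Ord a => [a] -> [a]
qsort []     = []
qsort (p:xs) = qsort lt ++ [p] ++ qsort gteq
  where
    lt   = [x | x <- xs, x < p]   -- elements smaller than the pivot
    gteq = [x | x <- xs, x >= p]  -- elements greater than or equal to the pivot

-- Hypothetical sample input, sorted with qsort.
sample :: [Int]
sample = qsort [3, 1, 4, 1, 5, 9, 2, 6]
```

Evaluating `sample` in GHCi should give `[1,1,2,3,4,5,6,9]`. Note that this version builds new lists at every step rather than sorting in place, and it walks `xs` twice per call, once for each comprehension.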

![Slide 5](https://image.slidesharecdn.com/online-100813072552-phpapp02/75/Foils-5-2048.jpg)

• quicksort 4

• 2

    qs [] = []
    qs (p:xs) = qs [x|x<-xs,x<p]++[p]++qs[x|x<-xs,x>=p]

![Slide 8](https://image.slidesharecdn.com/online-100813072552-phpapp02/75/Foils-8-2048.jpg)

    let rec qsort = function
      | [] -> []
      | pivot :: rest ->
          let il x = x < pivot in
          let left, right = List.partition il rest in
          qsort left @ [pivot] @ qsort right
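For comparison, the single-pass split that the OCaml version gets from `List.partition` can be sketched in Haskell with `partition` from `Data.List`. The name `qsortP` and its type signature are assumptions for illustration; they do not come from the slides.

```haskell
import Data.List (partition)

-- Quicksort that splits the tail in one pass, mirroring the OCaml
-- List.partition version. qsortP is a hypothetical name.
qsortP :: Ord a => [a] -> [a]
qsortP []           = []
qsortP (pivot:rest) = qsortP left ++ [pivot] ++ qsortP right
  where
    -- left holds elements < pivot, right holds the rest (>= pivot)
    (left, right) = partition (< pivot) rest
```

Unlike the two list comprehensions in the earlier Haskell version, `partition` traverses `rest` once per call, though the result is the same sorted list.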