The document discusses recommender systems and sequential recommendation problems. It covers several key points:

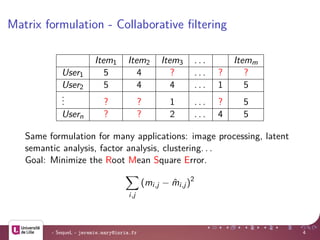

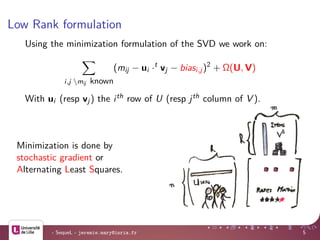

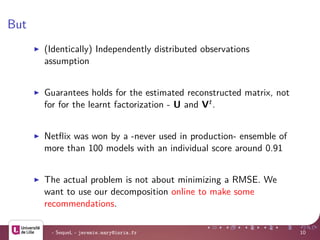

1) Matrix factorization and collaborative filtering techniques are commonly used to build recommender systems, but they have limitations, such as the cold-start problem and the difficulty of incorporating additional constraints.

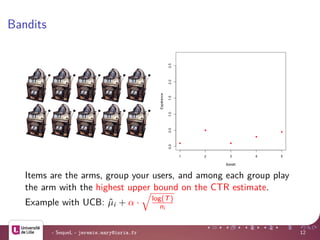

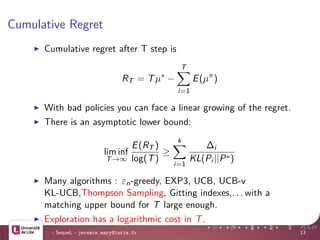

2) Sequential recommendation problems can be framed as multi-armed bandit problems, where past recommendations influence future recommendations.

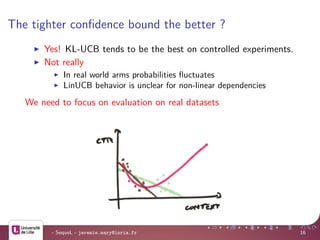

3) Various bandit algorithms like UCB, Thompson sampling, and LinUCB can be applied, but extending guarantees to models like matrix factorization is challenging. Offline evaluation on real-world datasets is important.

![Nice Theoretical Problem 1/2

Minimizing $\|U^t V\|_*$, where $\|M\|_* = \operatorname{tr}\big((M M^*)^{1/2}\big)$ is the trace norm, with no modification of known values and with no noise.

This is a convex relaxation that promotes sparsity on U and V.

[Candès and Tao, 2010] If M, of dimension $n \times m$, has rank $k$ and a strong incoherence property, then with high probability M is recovered from a uniform random sample, provided that the number of known ratings is $\nu k \log^{O(1)}(\nu)$, where $\nu = \max(n, m)$.

- SequeL - jeremie.mary@inria.fr 6](https://image.slidesharecdn.com/jeremiemarymeetup-160721152000/85/Data-Driven-Recommender-Systems-6-320.jpg)
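As a small numerical check of the trace-norm definition on the slide above, the following sketch computes $\|M\|_*$ both as the sum of singular values and as $\operatorname{tr}((M M^T)^{1/2})$. It assumes NumPy and a real-valued matrix; the rank-2 matrix `M` and the helper names are made up for illustration and do not come from the talk.

```python
import numpy as np

def trace_norm_svd(M):
    """Trace (nuclear) norm as the sum of the singular values of M."""
    return float(np.linalg.svd(M, compute_uv=False).sum())

def trace_norm_eig(M):
    """Trace norm as tr((M M^T)^(1/2)), using the eigenvalues of M M^T."""
    eigvals = np.linalg.eigvalsh(M @ M.T)
    # Round-off can make zero eigenvalues slightly negative; clip before the root.
    return float(np.sqrt(np.clip(eigvals, 0.0, None)).sum())

rng = np.random.default_rng(0)
# Hypothetical rank-2 "rating" matrix with 5 users and 4 items.
M = rng.normal(size=(5, 2)) @ rng.normal(size=(2, 4))

# The three values agree up to floating-point error.
print(trace_norm_svd(M), trace_norm_eig(M), np.linalg.norm(M, "nuc"))
```

Because the trace norm sums the singular values, penalizing it pushes small singular values to zero, which is why it serves as a convex surrogate for the rank.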

![Nice Theoretical Problem 2/2

With $\Omega(U, V) = 0$, zero padding and iterative thresholding of singular values [Chatterjee, 2012] gives a guarantee in

$$\frac{\|M\|_*}{m\sqrt{np}} + \frac{1}{np},$$

where $p$ is the fraction of i.i.d. observed values.

With $\Omega(U, V) = \lambda \|U^t V\|_1$, in the noisy setting and under relaxed conditions on the sampling, [Klopp, 2014] achieves a bound on the MSE in $O\!\left(\frac{k}{n} \log(m + n) \max(n, m)\right)$.

This can also be cast in the Bayesian framework, which gives the variants of Probabilistic Matrix Factorization and allows the insertion of prior knowledge.](https://image.slidesharecdn.com/jeremiemarymeetup-160721152000/85/Data-Driven-Recommender-Systems-7-320.jpg)
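The "zero padding and iterative thresholding of singular values" idea from the slide above can be sketched as follows. This is a soft-impute-style loop written for illustration, not the exact estimator of [Chatterjee, 2012]; the threshold `tau`, the iteration count, and the synthetic rank-2 data are all assumptions.

```python
import numpy as np

def svt_complete(R, mask, tau=1.0, n_iters=300):
    """Fill the missing entries of R by iterative singular-value thresholding.

    R    : (n, m) array; entries where mask is False are ignored
    mask : (n, m) boolean array, True where the rating is observed
    tau  : amount subtracted from every singular value (hypothetical knob)
    """
    X = np.where(mask, R, 0.0)                 # zero padding of unknown entries
    for _ in range(n_iters):
        U, s, Vt = np.linalg.svd(X, full_matrices=False)
        s = np.maximum(s - tau, 0.0)           # shrink the singular values
        low_rank = (U * s) @ Vt                # low-rank reconstruction
        X = np.where(mask, R, low_rank)        # known values are never modified
    return X

rng = np.random.default_rng(0)
M = rng.normal(size=(20, 2)) @ rng.normal(size=(2, 20))   # synthetic rank-2 ratings
mask = rng.random(M.shape) < 0.6                          # about 60% observed
X_hat = svt_complete(M, mask)

hidden = ~mask
rmse_hidden = np.sqrt(np.mean((X_hat[hidden] - M[hidden]) ** 2))
rmse_zero = np.sqrt(np.mean(M[hidden] ** 2))              # baseline: predict 0
print(rmse_hidden, rmse_zero)
```

The shrinkage step introduces a bias proportional to `tau`, so in practice the threshold would be tuned against held-out ratings.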

![Nice Practical problem 1/2

The Alternating Least Squares strategy can be distributed efficiently through the MapReduce paradigm; even better, nodes can cache part of the data to minimize network cost. It is part of MLlib in Spark and of Vowpal Wabbit.

It is possible to obtain efficient approximations of the decomposition using randomly sampled subsets of the given matrices. The complexity can even be independent of the size of the matrix when using the length-squared distribution of the columns! [Frieze et al., 2004]](https://image.slidesharecdn.com/jeremiemarymeetup-160721152000/85/Data-Driven-Recommender-Systems-8-320.jpg)
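To illustrate the length-squared distribution mentioned on the slide above, here is a minimal sketch that samples columns with probability proportional to their squared norm and rescales them so that `C @ C.T` is an unbiased estimate of `A @ A.T`. It is only the sampling step, not the full algorithm of [Frieze et al., 2004], and unlike that algorithm it reads all of `A` to compute the column norms. The function name, the sample size and the synthetic matrix are assumptions.

```python
import numpy as np

def length_squared_sample(A, s, rng):
    """Sample s columns of A with probability proportional to their squared norm.

    Returns C of shape (n, s), rescaled so that E[C @ C.T] = A @ A.T,
    together with the sampling probabilities.
    """
    col_norms_sq = np.sum(A * A, axis=0)
    probs = col_norms_sq / col_norms_sq.sum()
    idx = rng.choice(A.shape[1], size=s, replace=True, p=probs)
    # Dividing column j by sqrt(s * p_j) keeps the estimate unbiased.
    C = A[:, idx] / np.sqrt(s * probs[idx])
    return C, probs

rng = np.random.default_rng(0)
A = rng.normal(size=(30, 3)) @ rng.normal(size=(3, 2000))   # synthetic low-rank matrix
C, probs = length_squared_sample(A, s=400, rng=rng)

# Frobenius error of the sketch, relative to ||A||_F^2.
rel_err = np.linalg.norm(A @ A.T - C @ C.T) / np.linalg.norm(A) ** 2
print(C.shape, rel_err)
```

An SVD of the small matrix `C` then approximates the left singular vectors of `A`; the expected squared error of the sketch is at most $\|A\|_F^4 / s$, which depends on the number of samples rather than on the number of columns.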

![Nice Practical problem 2/2

It is possible to play with regularizations and biases. [Zhou et al., 2008] regularizes using:

$$\Omega(U, V) \overset{\text{def}}{=} \lambda \Big( \sum_i \#J(i)\,\|u_i\|^2 + \sum_j \#I(j)\,\|v_j\|^2 \Big)$$

where $\#J(i)$ (resp. $\#I(j)$) is the number of ratings given by user $i$ (resp. given to item $j$). 0.89 RMSE.

It is possible to play with the content of the matrix [Hu et al., 2008].

Adaptations exist as generalized linear factorization, including the logistic one, and to ranks [Guillou et al., 2014]; they are subsumed by Factorization Machines [Rendle, 2010].](https://image.slidesharecdn.com/jeremiemarymeetup-160721152000/85/Data-Driven-Recommender-Systems-9-320.jpg)
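The weighted regularizer on the slide above plugs directly into Alternating Least Squares: each user (or item) vector is the solution of a small ridge regression whose penalty is scaled by the number of ratings involved. The sketch below is a single-machine illustration under that reading; the function name `als_wr`, the hyperparameters and the synthetic data are assumptions, and no bias terms are modelled.

```python
import numpy as np

def als_wr(R, mask, k=2, lam=0.05, n_iters=20, seed=0):
    """ALS with weighted-lambda regularization, fitted on observed entries only.

    R    : (n_users, n_items) ratings, read only where mask is True
    mask : boolean array of the same shape, True for observed ratings
    Returns U (k, n_users) and V (k, n_items) such that R ~= U.T @ V.
    """
    rng = np.random.default_rng(seed)
    n_users, n_items = R.shape
    U = rng.normal(scale=0.1, size=(k, n_users))
    V = rng.normal(scale=0.1, size=(k, n_items))
    for _ in range(n_iters):
        for i in range(n_users):               # update user vector u_i
            J = np.flatnonzero(mask[i])        # items rated by user i
            if J.size == 0:
                continue
            Vj = V[:, J]
            A = Vj @ Vj.T + lam * J.size * np.eye(k)
            U[:, i] = np.linalg.solve(A, Vj @ R[i, J])
        for j in range(n_items):               # update item vector v_j
            I = np.flatnonzero(mask[:, j])     # users who rated item j
            if I.size == 0:
                continue
            Ui = U[:, I]
            A = Ui @ Ui.T + lam * I.size * np.eye(k)
            V[:, j] = np.linalg.solve(A, Ui @ R[I, j])
    return U, V

rng = np.random.default_rng(1)
R_true = rng.normal(size=(30, 2)) @ rng.normal(size=(2, 20))   # synthetic ratings
mask = rng.random(R_true.shape) < 0.7
U, V = als_wr(R_true, mask)
pred = U.T @ V
train_rmse = np.sqrt(np.mean((pred[mask] - R_true[mask]) ** 2))
print(train_rmse)
```

Each inner update only touches one row or one column of the rating matrix, which is what makes the per-user and per-item solves easy to distribute.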

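![LinUCB / Kernel UCB

Figure: confidence ellipsoid around the estimate $\hat{u}$ in $\mathbb{R}^k$ (origin $O$), with item vectors $v_1$ and $v_2$ and the point $\tilde{u}^{(1)}_{n+1}$.

$$\operatorname*{argmax}_j \; \hat{u} \cdot v_j(t)^T + \alpha \sqrt{v_j(t)\, A^{-1}\, v_j(t)^T},$$

where $\alpha$ is the exploration parameter and, treating each $v_j(t)$ as a row vector,

$$A = \sum_{t'=1}^{t-1} v_{j_{t'}}(t')^T \, v_{j_{t'}}(t') + \mathrm{Id}.$$

Analysis in [Abbasi-Yadkori et al., 2011]. Extended to kernels [Valko et al., 2013] and to generalized models [Filippi et al., 2010].](https://image.slidesharecdn.com/jeremiemarymeetup-160721152000/85/Data-Driven-Recommender-Systems-14-320.jpg)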
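A minimal sketch of the selection rule on the slide above, for a single user, assuming the usual square root on the confidence width and a ridge-regression estimate of $\hat{u}$. The class name, the orthogonal toy item vectors, the user vector `u_true` and the noiseless rewards are illustrative assumptions, chosen so that the behaviour is easy to follow.

```python
import numpy as np

class LinUCB:
    """Single-user LinUCB over arms described by k-dimensional vectors."""

    def __init__(self, k, alpha=1.0):
        self.alpha = alpha             # exploration parameter
        self.A = np.eye(k)             # Id + sum of outer products of played arms
        self.b = np.zeros(k)           # sum of reward-weighted played arms

    def select(self, arms):
        """arms: (n_arms, k) array; returns the index with the highest UCB score."""
        A_inv = np.linalg.inv(self.A)
        u_hat = A_inv @ self.b         # ridge-regression estimate of the user vector
        means = arms @ u_hat
        # Width of the confidence ellipsoid in the direction of each arm.
        widths = np.sqrt(np.einsum("ij,jk,ik->i", arms, A_inv, arms))
        return int(np.argmax(means + self.alpha * widths))

    def update(self, arm, reward):
        self.A += np.outer(arm, arm)
        self.b += reward * arm

arms = np.eye(3)                       # three orthogonal toy item vectors
u_true = np.array([0.2, 0.5, 0.9])     # hypothetical user vector
bandit = LinUCB(k=3, alpha=1.0)
counts = np.zeros(3, dtype=int)
for _ in range(200):
    j = bandit.select(arms)
    bandit.update(arms[j], float(arms[j] @ u_true))   # noiseless reward
    counts[j] += 1
print(counts)
```

With real data the arms would be the columns of a learnt item matrix and the rewards would be noisy clicks or ratings; `alpha` then trades exploration against exploitation.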

![Low rank bandits

At timestep $t$, user $i_t$ is drawn randomly. Items are seen as bandit arms whose descriptions are given by the learnt matrix V. This can be reversed for new items [Mary et al., 2014a].

This is close to a set of regularized LinUCB instances, but the factorization part does not allow the analysis to carry over.

The contextual bandit regret bound is in $O(d \log^2 T)$. Can we take advantage of the lower-dimensional space?

When new items (or users) arrive at a constant rate, the regret grows linearly.](https://image.slidesharecdn.com/jeremiemarymeetup-160721152000/85/Data-Driven-Recommender-Systems-15-320.jpg)

![Evaluation [Li et al., 2011]

For a policy π, the CTR estimate is computed using rejection sampling [Li et al., 2011] on a dataset collected with a uniform random policy.

h_0 ← ∅, G_A ← 0, T ← 0
for all t ∈ {1..L} do   (L is the number of logged records)
    π ← A(h_T)
    if π(x_t) = a_t then
        h_{T+1} ← h_T + {(x_t, a_t, r_t)}
        G_A ← G_A + r_t, T ← T + 1
    else
        /* Do nothing, the record is completely ignored. */
    end if
end for
return ĝ_A = G_A / T](https://image.slidesharecdn.com/jeremiemarymeetup-160721152000/85/Data-Driven-Recommender-Systems-19-320.jpg)
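The replay procedure on the slide above can be sketched in a few lines of Python. The function below keeps a logged record only when the evaluated policy picks the logged action, and it counts accepted records separately from the length of the log. The toy log (four actions, a click exactly when the action equals the context) and the two example policies are assumptions made up for this illustration.

```python
import random

def replay_ctr(logged, make_policy):
    """Replay estimate of the CTR of a learning algorithm.

    logged      : iterable of (context, action, reward) from a uniform random logger
    make_policy : callable mapping the accepted history to a function context -> action
    Returns the estimated CTR and the number of accepted records.
    """
    history, total_reward, accepted = [], 0.0, 0
    for x, a, r in logged:
        policy = make_policy(history)
        if policy(x) == a:             # logged action matches: keep the record
            history.append((x, a, r))
            total_reward += r
            accepted += 1
        # otherwise the record is completely ignored
    ctr = total_reward / accepted if accepted else float("nan")
    return ctr, accepted

rng = random.Random(0)
K = 4                                   # number of actions
log = []
for _ in range(4000):
    x = rng.randrange(K)                # context
    a = rng.randrange(K)                # uniform random logging policy
    log.append((x, a, 1.0 if a == x else 0.0))   # toy click model

ctr_match, n_match = replay_ctr(log, lambda history: (lambda x: x))
ctr_zero, n_zero = replay_ctr(log, lambda history: (lambda x: 0))
print(ctr_match, n_match, ctr_zero, n_zero)
```

Both policies here ignore the history; a learning algorithm would rebuild or update its policy from `history` at each step, exactly as `A(h_T)` does in the pseudocode.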

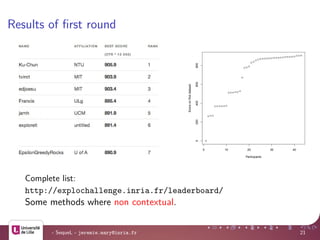

![Remarks

Reported score is the CTR × 10 000. Two rounds: only one submission allowed for the second round.

Only one data row out of K is used on average. With a non-random policy we could derive similar algorithms, at the cost of an increased variance for some policies (those using actions with fewer samples).

The estimator is only asymptotically unbiased. It can be brought closer to unbiased by making use of the knowledge of the sampling distribution.

The unbiased estimator is not admissible for MSE [Li et al., 2015]. The difference is important only for actions with a small number of selections.](https://image.slidesharecdn.com/jeremiemarymeetup-160721152000/85/Data-Driven-Recommender-Systems-20-320.jpg)

![ICML’12 Challenge - UCB-v

From [Audibert et al., 2009]:

$$\hat{\mu} = \mu + \sqrt{\frac{c \cdot \mu \cdot (1 - \mu) \cdot \log(t)}{n}} + c \cdot \frac{0.5 - \mu}{n} \log(t)$$

with $t$ the current time step, $n$ the number of displays of the news item, $\mu$ the empirical mean of the CTR, and $c$ a constant parameter.](https://image.slidesharecdn.com/jeremiemarymeetup-160721152000/85/Data-Driven-Recommender-Systems-23-320.jpg)
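The index on the slide above translates into a short scoring function. The sketch assumes that the first bonus term sits under a square root, as in the usual UCB-V index, that the empirical CTR lies in [0, 1] and that `t >= 1`. The helper `select_news`, the default `c = 1.0` and the example statistics are hypothetical.

```python
import math

def ucbv_index(mean, n, t, c=1.0):
    """Upper confidence index of a news item.

    mean : empirical CTR of the item, in [0, 1]
    n    : number of times the item was displayed
    t    : current time step (t >= 1)
    c    : constant parameter
    """
    if n == 0:
        return math.inf                # never displayed: try it first
    log_t = math.log(t)
    variance_bonus = math.sqrt(c * mean * (1.0 - mean) * log_t / n)
    range_bonus = c * (0.5 - mean) * log_t / n
    return mean + variance_bonus + range_bonus

def select_news(stats, t, c=1.0):
    """stats: list of (clicks, displays) per item; returns the index to display."""
    scores = [ucbv_index(clicks / n if n else 0.0, n, t, c) for clicks, n in stats]
    return max(range(len(stats)), key=scores.__getitem__)

stats = [(5, 100), (0, 0), (30, 1000)]  # made-up click and display counts
print(select_news(stats, t=1100))
print(ucbv_index(0.05, 100, 1100), ucbv_index(0.03, 1000, 1100))
```

Because the variance term uses $\mu(1-\mu)$, items with a very low empirical CTR receive a narrow confidence interval, which suits click data where most rates are close to zero.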

![Bootstrapped replay on expanded data

We do not want to use only O(1/K) of the rows of the dataset on average.

Under mild hypotheses, using the bootstrap we obtain an unbiased estimator of the CTR distribution with a convergence speed in O(1/L), where L is the size of the dataset [Mary et al., 2014b].

Plot: Estimated CTR − Actual CTR (y-axis, from −0.05 to 0.20) against dataset size (x-axis, from 0 to 300,000); the individual data points are omitted.