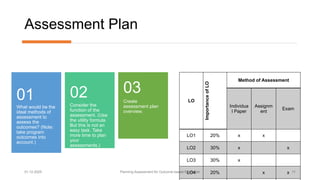

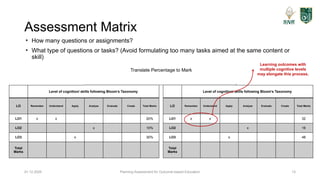

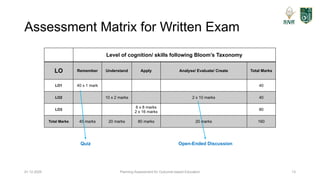

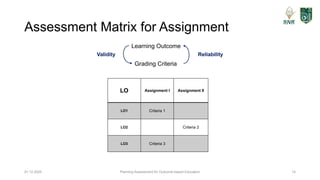

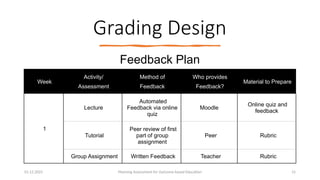

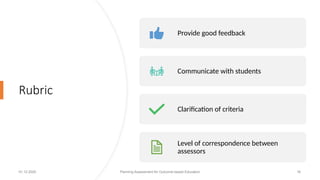

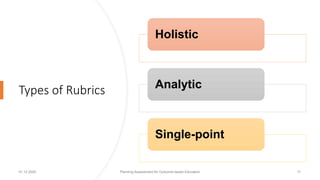

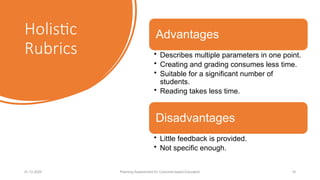

These slides are from my session on planning assessment for outcome-based education, prepared for the Faculty Development Program on Outcome-based Education organised by the Department of Civil Engineering in collaboration with ISTE. The presentation may be shared freely and used without any royalty.