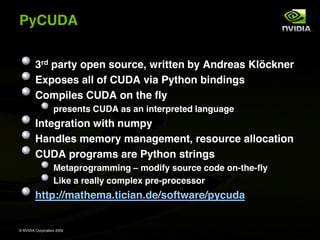

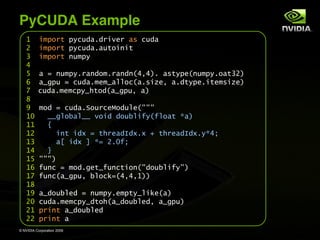

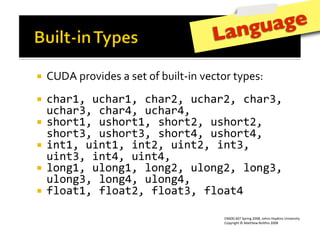

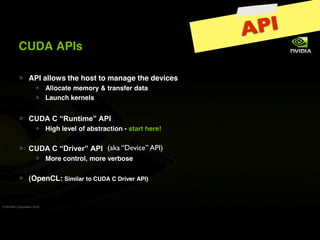

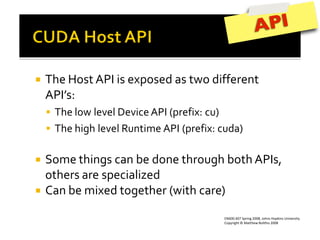

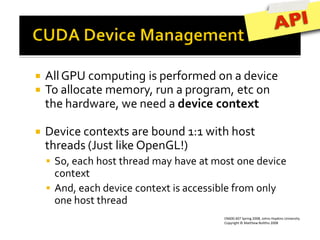

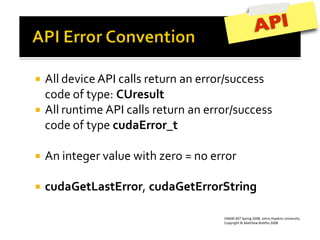

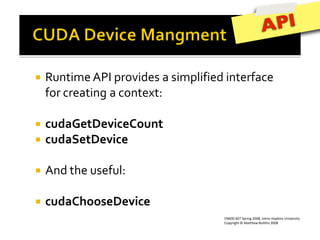

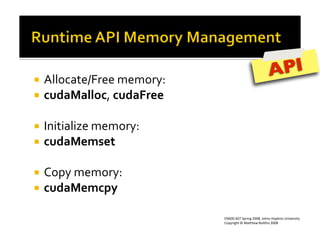

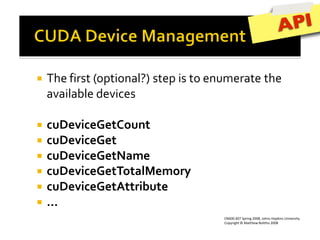

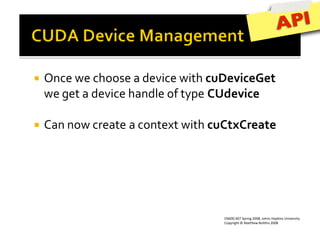

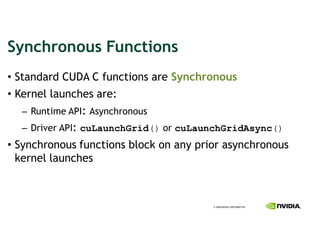

This document summarizes key points from a lecture on massively parallel computing with CUDA. The lecture covers the CUDA language and APIs, the threading and execution model, memory and communication, tools, and libraries. It describes the CUDA programming model, including host and device code, threads and blocks, and memory allocation and transfers between the host and the device. It also summarizes the CUDA runtime and driver APIs, which launch kernels and manage devices at different levels of abstraction.
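As a rough illustration of the programming model summarized above, the following sketch uses the CUDA runtime API to allocate device memory, copy data from the host, launch a kernel over a grid of thread blocks, and copy the result back. The names `vector_add` and `add_on_device` and the block size of 256 threads are assumptions made for this example; they do not come from the lecture.

```cuda
#include <cuda_runtime.h>
#include <vector>

// Device code: each thread adds one pair of elements.
__global__ void vector_add(const float* a, const float* b, float* c, int n) {
    int i = blockIdx.x * blockDim.x + threadIdx.x;  // global thread index
    if (i < n) c[i] = a[i] + b[i];                  // guard the partial last block
}

// Host code (hypothetical helper): allocate, copy in, launch, copy out, free.
// Returns an empty vector if the inputs are unusable or any CUDA call fails.
std::vector<float> add_on_device(const std::vector<float>& a,
                                 const std::vector<float>& b) {
    if (a.empty() || a.size() != b.size()) return {};
    const int n = static_cast<int>(a.size());
    const size_t bytes = a.size() * sizeof(float);

    float *d_a = nullptr, *d_b = nullptr, *d_c = nullptr;
    std::vector<float> c(a.size());

    // Allocate device memory and transfer the inputs host -> device.
    bool ok = cudaMalloc(&d_a, bytes) == cudaSuccess &&
              cudaMalloc(&d_b, bytes) == cudaSuccess &&
              cudaMalloc(&d_c, bytes) == cudaSuccess &&
              cudaMemcpy(d_a, a.data(), bytes, cudaMemcpyHostToDevice) == cudaSuccess &&
              cudaMemcpy(d_b, b.data(), bytes, cudaMemcpyHostToDevice) == cudaSuccess;

    if (ok) {
        const int threads = 256;                         // threads per block (assumed)
        const int blocks = (n + threads - 1) / threads;  // enough blocks to cover n
        vector_add<<<blocks, threads>>>(d_a, d_b, d_c, n);
        ok = cudaGetLastError() == cudaSuccess;          // catch launch errors
    }

    // The blocking copy device -> host also waits for the kernel to finish.
    ok = ok && cudaMemcpy(c.data(), d_c, bytes, cudaMemcpyDeviceToHost) == cudaSuccess;

    cudaFree(d_a);  // cudaFree accepts a null pointer
    cudaFree(d_b);
    cudaFree(d_c);
    return ok ? c : std::vector<float>{};
}
```

Calling `add_on_device({1, 2, 3}, {10, 20, 30})` from a `main` function on a machine with a CUDA-capable GPU should return the element-wise sums. The driver API can express the same steps with more explicit control over contexts and modules, at the cost of more setup code.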

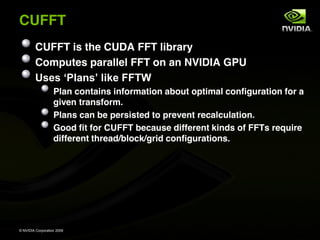

![CUFFT Features](https://image.slidesharecdn.com/cs264201104-cudaintermediatesharetmpopt-110215180915-phpapp02/85/Harvard-CS264-04-Intermediate-level-CUDA-Programming-112-320.jpg)

CUFFT Features

- 1D, 2D and 3D transforms of complex and real-valued data
- Batched execution for doing multiple 1D transforms in parallel
- 1D transform size up to 8M elements
- 2D and 3D transform sizes in the range [2,16384]
- In-place and out-of-place transforms for real and complex data

© NVIDIA Corporation 2009
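Two of the CUFFT features listed on this slide, batched 1D execution and in-place transforms, can be sketched with a single plan. The helper name `batched_fft_in_place` and its signature are assumptions made for this example; the `cufftPlan1d`, `cufftExecC2C`, and `cufftDestroy` calls are part of the cuFFT API.

```cuda
#include <cuda_runtime.h>
#include <cufft.h>
#include <vector>

// Hypothetical helper: runs `batch` forward 1D complex-to-complex FFTs of
// length `nx`, in place on the device. `signal` must hold batch * nx
// contiguous samples and is overwritten with the transforms.
// Returns false if the arguments are inconsistent or any call fails.
bool batched_fft_in_place(std::vector<cufftComplex>& signal, int nx, int batch) {
    if (nx <= 0 || batch <= 0 ||
        signal.size() != static_cast<size_t>(nx) * static_cast<size_t>(batch)) {
        return false;
    }
    const size_t bytes = signal.size() * sizeof(cufftComplex);

    cufftComplex* d_data = nullptr;
    if (cudaMalloc(&d_data, bytes) != cudaSuccess) return false;

    bool ok = cudaMemcpy(d_data, signal.data(), bytes,
                         cudaMemcpyHostToDevice) == cudaSuccess;

    // One plan describes `batch` independent 1D transforms of length nx.
    cufftHandle plan;
    const bool have_plan =
        ok && cufftPlan1d(&plan, nx, CUFFT_C2C, batch) == CUFFT_SUCCESS;
    ok = have_plan;

    // In-place: the same device pointer serves as input and output.
    ok = ok && cufftExecC2C(plan, d_data, d_data, CUFFT_FORWARD) == CUFFT_SUCCESS;

    // The blocking copy back to the host also waits for the FFT to finish.
    ok = ok && cudaMemcpy(signal.data(), d_data, bytes,
                          cudaMemcpyDeviceToHost) == cudaSuccess;

    if (have_plan) cufftDestroy(plan);
    cudaFree(d_data);
    return ok;
}
```

The code needs to be linked against the cuFFT library, for example with `nvcc example.cu -lcufft`. cuFFT transforms are unnormalized, so a forward transform followed by an inverse transform scales the data by the transform length.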