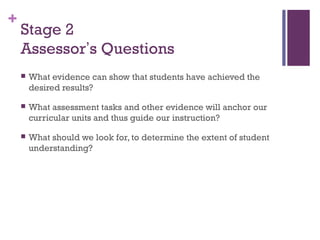

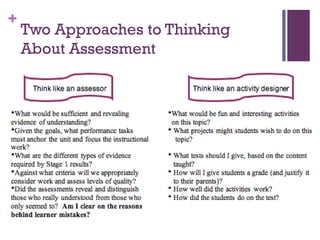

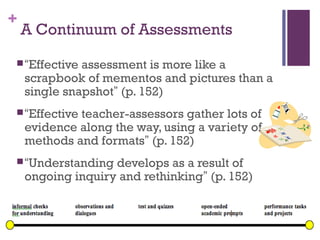

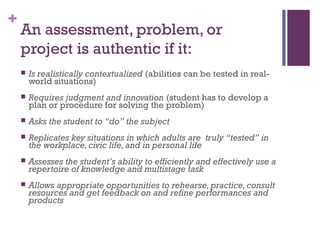

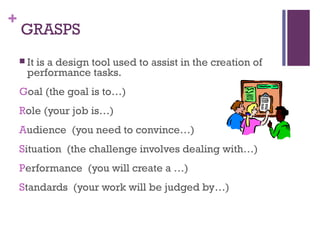

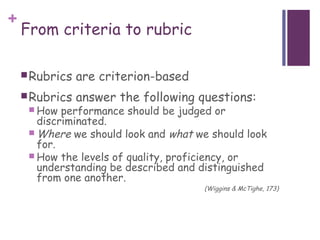

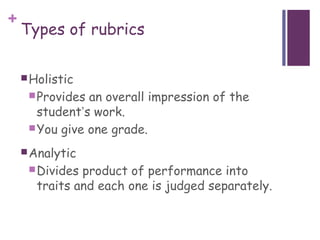

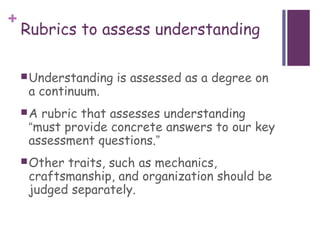

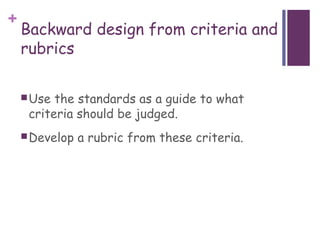

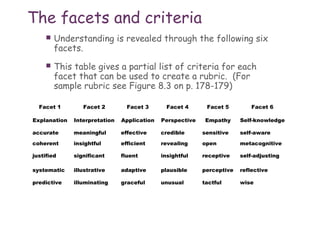

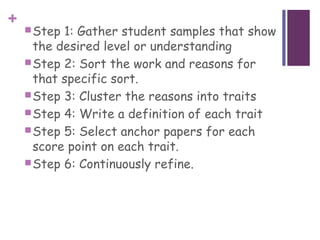

This chapter explains that teachers must think like assessors to determine whether students have understood the material. It emphasizes gathering evidence of understanding through multiple forms of assessment over time, including performance tasks. The chapter also covers developing valid rubrics for evaluating student work, with criteria focused on the facets of understanding rather than on correctness alone. Rubrics should be refined by analyzing student work so that they accurately measure understanding.