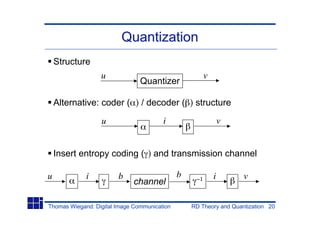

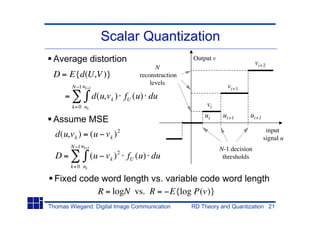

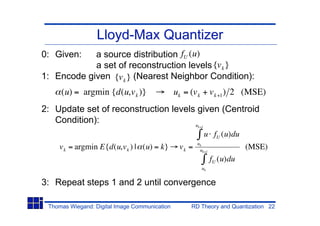

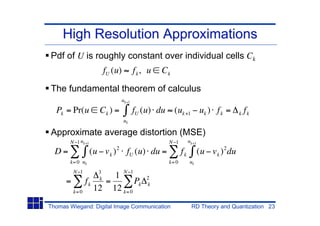

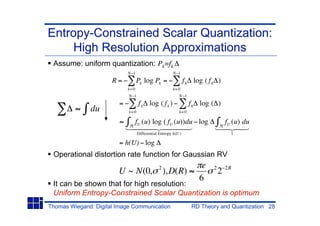

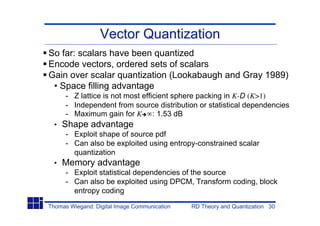

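Rate distortion theory gives the minimum bit rate R needed to represent a source with an average distortion no greater than D. Scalar quantization maps each sample to one of a finite set of reconstruction levels; a good quantizer chooses the levels and decision thresholds so that the distortion between original and reconstructed values is minimized. The Lloyd-Max algorithm designs a fixed-rate quantizer by alternating two steps: assign each sample to its nearest reconstruction level, then move each level to the centroid of the samples assigned to it. Entropy-constrained quantization instead assigns variable-length codes to the levels and minimizes a Lagrangian rate-distortion cost D + λR.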

![Rate

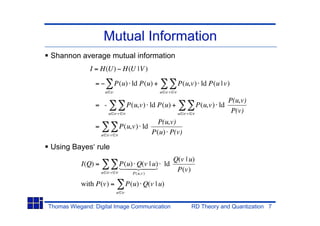

Shannon average mutual information expressed via

entropy

I(U;V ) = H(U) H(U |V )

Source entropy Equivocation: conditional entropy

Equivocation:

• The conditional entropy (uncertainty) about the

source U given the reconstruction V

• A measure for the amount of missing [quantized]

information in the received signal V

Thomas Wiegand: Digital Image Communication RD Theory and Quantization 8](https://image.slidesharecdn.com/dicrdtheoryquantization07-130417040621-phpapp01/85/Dic-rd-theory_quantization_07-8-320.jpg)
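A small sketch of $I(U;V) = H(U) - H(U \mid V)$ for discrete variables, assuming the joint pmf is given as a nested list `joint[u][v]`. The function names and the example pmf (a binary source whose reconstruction is wrong 10% of the time) are hypothetical.

```python
import math

def entropy(p):
    """Entropy in bits of a probability vector (zero terms contribute 0)."""
    return -sum(q * math.log2(q) for q in p if q > 0)

def mutual_information(joint):
    """I(U;V) = H(U) - H(U|V) for a joint pmf given as joint[u][v]."""
    p_u = [sum(row) for row in joint]
    p_v = [sum(col) for col in zip(*joint)]
    # Equivocation: H(U|V) = sum over v of p(v) * H(U | V = v)
    equivocation = 0.0
    for j, pv in enumerate(p_v):
        if pv > 0:
            cond = [joint[i][j] / pv for i in range(len(joint))]
            equivocation += pv * entropy(cond)
    return entropy(p_u) - equivocation

# Binary source U reconstructed as V, with V != U in 10% of cases (illustrative).
joint = [[0.45, 0.05],
         [0.05, 0.45]]
print(mutual_information(joint))
```

For this pmf, H(U) = 1 bit and the equivocation equals the binary entropy of 0.1 (about 0.469 bit), so about 0.531 bit of information about U survives in V.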

![Slide 15: R(D*) for a memoryless Gaussian source and MSE distortion](https://image.slidesharecdn.com/dicrdtheoryquantization07-130417040621-phpapp01/85/Dic-rd-theory_quantization_07-15-320.jpg)

$R(D^*)$ for a Memoryless Gaussian Source and MSE Distortion:

- Gaussian source, variance $\sigma^2$
- Mean squared error (MSE): $D = E\{(u - v)^2\}$

$$R(D^*) = \frac{1}{2}\log_2\frac{\sigma^2}{D^*}, \qquad D(R^*) = \sigma^2\, 2^{-2R^*}, \quad R^* \ge 0$$

$$\mathrm{SNR} = 10\log_{10}\frac{\sigma^2}{D} = 10\log_{10} 2^{2R} \approx 6R \ \text{[dB]}$$

- Rule of thumb: 6 dB ≈ 1 bit
- For non-Gaussian sources with the same variance $\sigma^2$, $R(D^*)$ always lies below this Gaussian $R(D^*)$ curve.
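The memoryless Gaussian formulas translate directly into code. A minimal sketch; the function names are hypothetical, and `rate_gaussian` returns 0 for D* ≥ σ² (the usual convention that no bits are needed once the allowed distortion reaches the variance).

```python
import math

def rate_gaussian(d_star, var):
    """R(D*) = 1/2 log2(var / D*) in bits per sample; zero once D* >= var."""
    return 0.5 * math.log2(var / d_star) if d_star < var else 0.0

def distortion_gaussian(r_star, var):
    """D(R*) = var * 2^(-2 R*) for R* >= 0."""
    return var * 2.0 ** (-2.0 * r_star)

def snr_db(r):
    """SNR = 10 log10(var / D) = 10 log10(2^(2R)) = 20 R log10(2) dB."""
    return 10.0 * math.log10(2.0 ** (2.0 * r))

for r in (1, 2, 3, 4):
    print(r, round(snr_db(r), 2))
```

Since 10 log10 2^{2R} = 20 R log10 2 ≈ 6.02 R, each additional bit buys about 6 dB, which is where the rule of thumb comes from.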

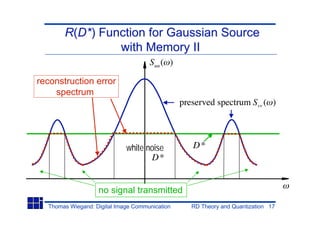

![Slide 16: R(D*) function for Gaussian source with memory I](https://image.slidesharecdn.com/dicrdtheoryquantization07-130417040621-phpapp01/85/Dic-rd-theory_quantization_07-16-320.jpg)

$R(D^*)$ Function for Gaussian Source with Memory I:

- Jointly Gaussian source with power spectrum $S_{uu}(\omega)$
- MSE: $D = E\{(u - v)^2\}$
- Parametric formulation of the $R(D^*)$ function:

$$D = \frac{1}{2\pi}\int_{-\pi}^{\pi} \min\left[D^*, S_{uu}(\omega)\right] d\omega$$

$$R = \frac{1}{2\pi}\int_{-\pi}^{\pi} \max\left[0, \frac{1}{2}\log_2\frac{S_{uu}(\omega)}{D^*}\right] d\omega$$

For non-Gaussian sources with the same power spectral density, $R(D^*)$ is always lower.
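The parametric formulation can be evaluated numerically by sweeping the parameter D* (reverse water-filling). A sketch under stated assumptions: the integrals are approximated with a midpoint rule on n points, `rd_parametric` and `ar1` are hypothetical names, and the AR(1) spectrum with σ² = 1, ρ = 0.9 is only an example input.

```python
import math

def rd_parametric(psd, d_star, n=4096):
    """Parametric R(D*) (reverse water-filling) for a Gaussian source.

    psd: function omega -> S_uu(omega) on [-pi, pi]; d_star: the parameter D*.
    Returns (D, R) with R in bits per sample, using the midpoint rule.
    """
    d_sum = r_sum = 0.0
    for i in range(n):
        w = -math.pi + (i + 0.5) * 2 * math.pi / n
        s = psd(w)
        d_sum += min(d_star, s)                         # min[D*, S_uu]
        r_sum += max(0.0, 0.5 * math.log2(s / d_star))  # max[0, 1/2 log2(S_uu/D*)]
    # (1 / 2pi) * integral over [-pi, pi] is approximated by the mean over midpoints
    return d_sum / n, r_sum / n

# Hypothetical example spectrum: AR(1) with var = 1, rho = 0.9
def ar1(w, var=1.0, rho=0.9):
    return var * (1 - rho**2) / (1 - 2 * rho * math.cos(w) + rho**2)

for d_star in (1.0, 0.3, 0.05, 0.01):
    D, R = rd_parametric(ar1, d_star)
    print(f"D*={d_star}: D={D:.4f}, R={R:.3f} bits, SNR={10 * math.log10(1 / D):.2f} dB")
```

Each value of D* yields one (D, R) point; sweeping D* traces the whole curve, including low rates where the spectrum falls below the water level D* at some frequencies, which then contribute S_uu(ω) to the distortion and zero rate.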

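![Slide 18: R(D*) function for Gaussian source with memory III](https://image.slidesharecdn.com/dicrdtheoryquantization07-130417040621-phpapp01/85/Dic-rd-theory_quantization_07-18-320.jpg)

$R(D^*)$ Function for Gaussian Source with Memory III:

ACF and PSD for a first-order AR(1) Gauss-Markov process $U[n] = Z[n] + \rho U[n-1]$:

$$R_{uu}(k) = \sigma^2 \rho^{|k|}, \qquad S_{uu}(\omega) = \frac{\sigma^2 (1-\rho^2)}{1 - 2\rho\cos\omega + \rho^2}$$

Rate distortion function:

$$\begin{aligned}
R(D^*) &= \frac{1}{4\pi}\int_{-\pi}^{\pi}\log_2\frac{S_{uu}(\omega)}{D^*}\,d\omega, \qquad D^* \le \sigma^2\,\frac{1-\rho}{1+\rho} \\
&= \frac{1}{4\pi}\int_{-\pi}^{\pi}\log_2\frac{\sigma^2(1-\rho^2)}{D^*}\,d\omega - \frac{1}{4\pi}\int_{-\pi}^{\pi}\log_2\left(1 - 2\rho\cos\omega + \rho^2\right)d\omega \\
&= \frac{1}{2}\log_2\frac{\sigma^2(1-\rho^2)}{D^*} = \frac{1}{2}\log_2\frac{\sigma_Z^2}{D^*}
\end{aligned}$$

The condition $D^* \le \sigma^2(1-\rho)/(1+\rho)$ means that $D^*$ does not exceed the minimum of $S_{uu}(\omega)$ (reached at $\omega = \pi$), so the $\max[0,\cdot]$ in the parametric formulation is never active and $D = D^*$. The second integral vanishes for $|\rho| < 1$, and $\sigma_Z^2 = \sigma^2(1-\rho^2)$ is the variance of the innovation $Z[n]$.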
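The last step of the derivation uses $\int_{-\pi}^{\pi}\log_2(1 - 2\rho\cos\omega + \rho^2)\,d\omega = 0$ for $|\rho| < 1$. A quick numerical check, written as a sketch with hypothetical helper names, compares the integral form against the closed form $\frac{1}{2}\log_2(\sigma_Z^2/D^*)$ for a few values of ρ, choosing D* inside the valid range.

```python
import math

def ar1_psd(w, var, rho):
    """S_uu(omega) = var (1 - rho^2) / (1 - 2 rho cos(omega) + rho^2)."""
    return var * (1 - rho**2) / (1 - 2 * rho * math.cos(w) + rho**2)

def ar1_rate_numeric(d_star, var, rho, n=8192):
    """(1 / 4pi) * integral over [-pi, pi] of log2(S_uu(omega) / D*), midpoint rule."""
    h = 2 * math.pi / n
    total = sum(math.log2(ar1_psd(-math.pi + (i + 0.5) * h, var, rho) / d_star)
                for i in range(n))
    return total * h / (4 * math.pi)

def ar1_rate_closed_form(d_star, var, rho):
    """1/2 log2(sigma_Z^2 / D*) with sigma_Z^2 = var (1 - rho^2)."""
    if d_star > var * (1 - rho) / (1 + rho):
        raise ValueError("closed form requires D* <= var (1 - rho) / (1 + rho)")
    return 0.5 * math.log2(var * (1 - rho**2) / d_star)

for rho in (0.5, 0.9, 0.99):
    d_star = 0.5 * (1 - rho) / (1 + rho)  # inside the valid range for var = 1
    print(rho, ar1_rate_numeric(d_star, 1.0, rho), ar1_rate_closed_form(d_star, 1.0, rho))
```

Because the integrand is smooth and periodic, the midpoint rule converges very quickly here, so the two columns should agree to many digits.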

![Slide 19: R(D*) function for Gaussian source with memory IV](https://image.slidesharecdn.com/dicrdtheoryquantization07-130417040621-phpapp01/85/Dic-rd-theory_quantization_07-19-320.jpg)

$R(D^*)$ Function for Gaussian Source with Memory IV. Figure: SNR [dB] $= 10\log_{10}(\sigma^2/D)$ versus R [bits] (0 to 4) for the AR(1) Gauss-Markov source with $\rho$ = 0, 0.5, 0.78, 0.9, 0.95, 0.99, annotated with $D^* \le \sigma^2(1-\rho)/(1+\rho)$.
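Solving $R = \frac{1}{2}\log_2(\sigma^2(1-\rho^2)/D^*)$ for $\sigma^2/D^*$ gives $\mathrm{SNR} = 10\log_{10}2^{2R} + 10\log_{10}\frac{1}{1-\rho^2}$. So, as long as $D^* \le \sigma^2(1-\rho)/(1+\rho)$ (equivalently $R \ge \log_2(1+\rho)$), each curve is the ρ = 0 line shifted up by a constant. A sketch assuming D = D* in that range; the function names are hypothetical.

```python
import math

def memory_gain_db(rho):
    """SNR gain of the AR(1) R(D*) curve over the memoryless (rho = 0) curve."""
    return 10 * math.log10(1 / (1 - rho**2))

def ar1_snr_db(r, rho):
    """SNR = 10 log10(var / D*) on the Gauss-Markov R(D*) curve.

    From R = 1/2 log2(var (1 - rho^2) / D*): var / D* = 2^(2R) / (1 - rho^2).
    Valid only for D* <= var (1 - rho) / (1 + rho), i.e. R >= log2(1 + rho).
    """
    if r < math.log2(1 + rho):
        raise ValueError("rate below log2(1 + rho): closed form does not apply")
    return 10 * math.log10(2 ** (2 * r)) + memory_gain_db(rho)

for rho in (0.0, 0.5, 0.78, 0.9, 0.95, 0.99):
    print(f"rho={rho}: gain {memory_gain_db(rho):.2f} dB, "
          f"SNR at 3 bits {ar1_snr_db(3, rho):.2f} dB")
```

For ρ = 0.9 the shift is about 7.2 dB and for ρ = 0.99 about 17 dB; below R = log2(1 + ρ) the parametric integrals have to be evaluated instead.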

![Slide 29: Comparison for Gaussian sources](https://image.slidesharecdn.com/dicrdtheoryquantization07-130417040621-phpapp01/85/Dic-rd-theory_quantization_07-29-320.jpg)

Comparison for Gaussian Sources. Figure: SNR [dB] $= 10\log_{10}(\sigma^2/D)$ versus R [bits] (0 to 4) for $R(D^*)$ with $\rho = 0.9$ and $\rho = 0$, Lloyd-Max, uniform fixed-rate, Panter & Dite approximation, and Entropy-Constrained Opt.
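At high rates, the quantizer curves in this comparison sit a constant SNR offset below the ρ = 0 bound. This sketch assumes the standard high-rate results: the Panter & Dite approximation $D \approx \frac{\sqrt{3}\pi}{2}\sigma^2 2^{-2R}$ for optimal fixed-rate quantization of a Gaussian, and $D \approx \frac{\pi e}{6}\sigma^2 2^{-2R}$ for entropy-coded uniform quantization. It does not reproduce the exact low-rate curves on the slide, and the function names are hypothetical.

```python
import math

def snr_rd_bound(r):
    """Memoryless Gaussian R(D*) bound: SNR = 10 log10(2^(2R)) dB."""
    return 20 * r * math.log10(2)

def snr_panter_dite(r):
    """Panter & Dite high-rate approximation for optimal fixed-rate scalar
    quantization of a Gaussian: D ~ (sqrt(3) pi / 2) var 2^(-2R)."""
    return snr_rd_bound(r) - 10 * math.log10(math.sqrt(3) * math.pi / 2)

def snr_ecsq_high_rate(r):
    """High-rate entropy-constrained scalar quantization of a Gaussian
    (uniform quantizer + ideal entropy coding): D ~ (pi e / 6) var 2^(-2R)."""
    return snr_rd_bound(r) - 10 * math.log10(math.pi * math.e / 6)

for r in (1, 2, 3, 4):
    print(r, round(snr_rd_bound(r), 2), round(snr_panter_dite(r), 2),
          round(snr_ecsq_high_rate(r), 2))
```

The offsets are about 4.35 dB for Panter & Dite and about 1.53 dB (0.254 bit) for entropy-constrained quantization. At low rates these approximations are poor: the 4-level Lloyd-Max quantizer reaches about 9.3 dB at 2 bits, while the Panter & Dite formula predicts about 7.7 dB.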

![Slide 31: Comparison for Gauss-Markov source, ρ = 0.9](https://image.slidesharecdn.com/dicrdtheoryquantization07-130417040621-phpapp01/85/Dic-rd-theory_quantization_07-31-320.jpg)

Comparison for Gauss-Markov Source: $\rho = 0.9$. Figure: SNR [dB] versus R [bits] (0 to 7) for $R(D^*)$ with $\rho = 0.9$, VQ with K = 2, 5, 10, 100, Panter & Dite approximation, and Entropy-Constrained Opt.
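How a vector quantizer exploits the memory of the Gauss-Markov source can be sketched with the generalized Lloyd (k-means) algorithm on blocks of K consecutive samples. Assumptions: K is read as the vector dimension, the rate is $R = \log_2(N)/K$ bits per sample for a codebook of N vectors, the codebook is trained and evaluated on the same synthetic AR(1) data, and all function names are hypothetical; the slide does not say how its VQ curves were obtained.

```python
import math
import random

def ar1_samples(n, rho, var=1.0, seed=0):
    """Stationary Gauss-Markov samples U[n] = Z[n] + rho * U[n-1]."""
    rng = random.Random(seed)
    sigma_z = math.sqrt(var * (1 - rho**2))  # std. dev. of the innovation Z[n]
    u = [rng.gauss(0.0, math.sqrt(var))]      # start in the stationary distribution
    for _ in range(n - 1):
        u.append(rng.gauss(0.0, sigma_z) + rho * u[-1])
    return u

def sq_dist(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b))

def generalized_lloyd(vectors, size, iters=30, seed=0):
    """Train an MSE codebook with the generalized Lloyd (k-means) iteration.
    Returns the codebook and the mean squared error per vector measured at
    each iteration (before that iteration's centroid update)."""
    rng = random.Random(seed)
    codebook = [list(v) for v in rng.sample(vectors, size)]
    dim = len(codebook[0])
    history = []
    for _ in range(iters):
        sums = [[0.0] * dim for _ in range(size)]
        counts = [0] * size
        total = 0.0
        for v in vectors:
            dists = [sq_dist(v, c) for c in codebook]
            k = min(range(size), key=dists.__getitem__)  # nearest-neighbor condition
            total += dists[k]
            counts[k] += 1
            for j, x in enumerate(v):
                sums[k][j] += x
        history.append(total / len(vectors))
        # Centroid condition; an empty cell keeps its previous codevector.
        codebook = [[s / counts[k] for s in sums[k]] if counts[k] else codebook[k]
                    for k in range(size)]
    return codebook, history

K, R, rho = 2, 1.0, 0.9          # vector dimension, bits per sample, correlation
size = 2 ** round(K * R)         # N = 2^(K R) codevectors
u = ar1_samples(6000, rho)
vecs = [u[i:i + K] for i in range(0, len(u) - K + 1, K)]
var_hat = sum(x * x for x in u) / len(u)  # empirical variance (zero-mean source)
codebook, history = generalized_lloyd(vecs, size)
snr = 10 * math.log10(var_hat / (history[-1] / K))
print(f"K={K}, N={size}, SNR={snr:.2f} dB at {R} bit/sample")
```

With ρ = 0.9 the two components of each vector are strongly correlated, so the codevectors tend to spread along the diagonal and the SNR should exceed that of a memoryless 1-bit Lloyd-Max quantizer (about 4.4 dB), while staying below the $R(D^*)$ bound (about 13.2 dB at 1 bit/sample for ρ = 0.9).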