This document discusses decision trees, including:

- Decision trees are tree-like graphs used for decision support and predictive modeling. Classification trees predict categorical target variables; regression trees predict continuous ones.

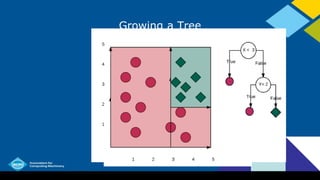

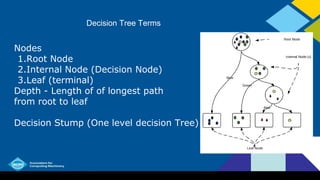
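The two kinds of tree differ mainly in what a leaf predicts. A minimal sketch, assuming the usual convention (used by CART, for example) that a classification leaf returns the majority class of its training samples and a regression leaf returns their mean; `classification_leaf` and `regression_leaf` are hypothetical helper names, not part of any library:

```python
from collections import Counter
from statistics import mean


def classification_leaf(labels):
    """Predict the most frequent class among the training labels in the leaf."""
    return Counter(labels).most_common(1)[0][0]


def regression_leaf(targets):
    """Predict the mean of the training targets in the leaf."""
    return mean(targets)


print(classification_leaf(["yes", "no", "yes"]))  # yes
print(regression_leaf([2.0, 3.0, 7.0]))           # 4.0
```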

- A decision tree has a root node, internal decision nodes, and leaf (terminal) nodes. Its depth is the length of the longest path from the root to a leaf.

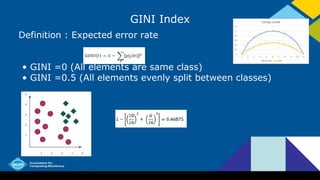

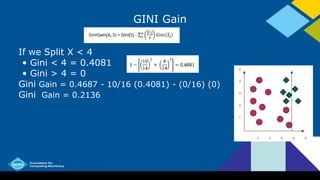

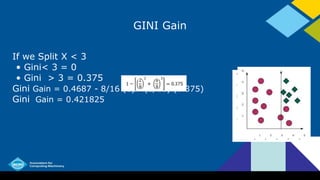

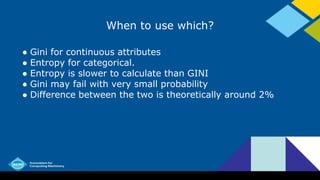

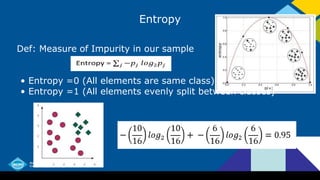
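The node types and the depth definition can be made concrete with a small recursive structure. A sketch under two assumptions: the hypothetical `Node` class treats any node without children as a leaf, and depth counts edges (some texts count nodes instead, which adds 1):

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Node:
    """A tree node: the root, an internal decision node, or a leaf (no children)."""
    label: str
    children: List["Node"] = field(default_factory=list)

    def is_leaf(self) -> bool:
        return not self.children


def depth(node: Node) -> int:
    """Number of edges on the longest root-to-leaf path (a lone root has depth 0)."""
    if node.is_leaf():
        return 0
    return 1 + max(depth(child) for child in node.children)


def count_leaves(node: Node) -> int:
    """Number of leaf nodes reachable from this node."""
    if node.is_leaf():
        return 1
    return sum(count_leaves(child) for child in node.children)


# Root decision node with one internal decision node and one leaf below it.
tree = Node("outlook?", [
    Node("humidity?", [Node("no"), Node("yes")]),
    Node("yes"),
])
print(depth(tree))         # 2
print(count_leaves(tree))  # 3
```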

- Common algorithms for building decision trees include ID3, C4.5, C5.0, and CART. They use criteria like information gain, Gini index, and classification error to determine the best attributes to split on.

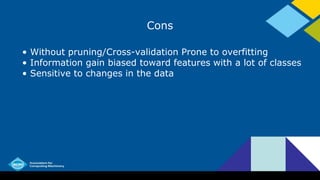
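The three splitting criteria can be written directly from the class proportions $p_i$ in a node: entropy $-\sum p_i \log_2 p_i$, Gini index $1 - \sum p_i^2$, and classification error $1 - \max p_i$. A minimal pure-Python sketch (the function names are hypothetical, not from any library), with information gain taken as the parent impurity minus the size-weighted impurity of the children:

```python
import math
from collections import Counter


def proportions(labels):
    """Class proportions p_i in a node."""
    n = len(labels)
    return [count / n for count in Counter(labels).values()]


def entropy(labels):
    """Shannon entropy in bits: sum of p * log2(1/p)."""
    return sum(p * math.log2(1 / p) for p in proportions(labels))


def gini(labels):
    """Gini index: 1 - sum of p^2."""
    return 1.0 - sum(p * p for p in proportions(labels))


def classification_error(labels):
    """Error of always predicting the majority class: 1 - max p."""
    return 1.0 - max(proportions(labels))


def information_gain(parent, children, impurity=entropy):
    """Parent impurity minus the size-weighted average impurity of the children."""
    n = len(parent)
    weighted = sum(len(child) / n * impurity(child) for child in children)
    return impurity(parent) - weighted


labels = ["A"] * 6 + ["B"] * 10        # hypothetical node with 16 samples
print(round(entropy(labels), 3))       # 0.954
print(gini(labels))                    # 0.46875
print(classification_error(labels))    # 0.375
# A split that separates the classes perfectly recovers all of the parent entropy.
print(round(information_gain(labels, [labels[:6], labels[6:]]), 3))  # 0.954
```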

- Decision trees are prone to overfitting if they are not pruned. Prepruning (stopping tree growth early) and postpruning (growing the full tree, then removing branches) are used to prevent overfitting.
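Prepruning amounts to stopping rules checked before each split. A minimal sketch for a single numeric feature, assuming Gini-based binary splits of the form $x < t$; the parameters `max_depth`, `min_samples_split`, and `min_impurity_decrease` are loosely named after similar scikit-learn hyperparameters, but this is a hypothetical illustration, not scikit-learn's implementation, and it does not cover postpruning:

```python
from collections import Counter


def gini(labels):
    n = len(labels)
    return 1.0 - sum((c / n) ** 2 for c in Counter(labels).values())


def best_split(xs, ys):
    """Return (threshold, weighted child Gini) for the best split x < t, or None."""
    n, best = len(xs), None
    for t in sorted(set(xs))[1:]:  # each candidate leaves both children non-empty
        left = [y for x, y in zip(xs, ys) if x < t]
        right = [y for x, y in zip(xs, ys) if x >= t]
        score = len(left) / n * gini(left) + len(right) / n * gini(right)
        if best is None or score < best[1]:
            best = (t, score)
    return best


def build(xs, ys, depth=0, max_depth=2, min_samples_split=4, min_impurity_decrease=0.0):
    """Grow a tree on one numeric feature; the stopping rules are the prepruning."""
    majority = Counter(ys).most_common(1)[0][0]
    if depth >= max_depth or len(ys) < min_samples_split or gini(ys) == 0.0:
        return majority  # stop early: this node becomes a leaf
    split = best_split(xs, ys)
    if split is None or gini(ys) - split[1] <= min_impurity_decrease:
        return majority  # no split reduces impurity enough
    t = split[0]
    left = [(x, y) for x, y in zip(xs, ys) if x < t]
    right = [(x, y) for x, y in zip(xs, ys) if x >= t]
    params = (depth + 1, max_depth, min_samples_split, min_impurity_decrease)
    return {
        "threshold": t,
        "left": build(*zip(*left), *params),
        "right": build(*zip(*right), *params),
    }


xs = [1, 2, 3, 4, 5, 6, 7, 8]  # hypothetical toy data
ys = ["A", "A", "A", "A", "B", "A", "B", "B"]
print(build(xs, ys, max_depth=1))
# {'threshold': 5, 'left': 'A', 'right': 'B'}
print(build(xs, ys, max_depth=2, min_samples_split=5))
# {'threshold': 5, 'left': 'A', 'right': 'B'}
print(build(xs, ys, max_depth=3, min_samples_split=2))
# {'threshold': 5, 'left': 'A', 'right': {'threshold': 7, 'left': {'threshold': 6, 'left': 'B', 'right': 'A'}, 'right': 'B'}}
```

With tight limits the tree stops at a single split; loosening them lets it keep splitting until every leaf is pure, which on real data is where overfitting tends to appear.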

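![Information gain for the split X < 4](https://image.slidesharecdn.com/advancedmachinelearningwithpython1-160901142911/85/Decision-Trees-12-320.jpg)

Information gain is the entropy of the parent minus the weighted average entropy of the children:

$$
\text{Information Gain} = H(\text{parent}) - \sum_{k} \frac{n_k}{n}\, H(\text{child}_k)
$$

If we split at $X < 4$, the 14 samples with $X < 4$ have entropy 0.86 and the 2 samples with $X \geq 4$ have entropy 0. With a parent entropy of 0.95:

$$
\text{Information Gain} = 0.95 - \frac{14}{16}(0.86) - \frac{2}{16}(0) = 0.1975 \approx 0.20
$$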
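The weighted average can be checked mechanically. A minimal sketch that plugs in the values stated for the split $X < 4$ (parent entropy 0.95, children of 14 and 2 samples with entropies 0.86 and 0); `information_gain` is a hypothetical helper that takes each child as a `(sample_count, entropy)` pair:

```python
def information_gain(parent_entropy, children):
    """Parent entropy minus the size-weighted average entropy of the children.

    children: list of (sample_count, entropy) pairs, one per child node.
    """
    n = sum(count for count, _ in children)
    weighted = sum(count / n * h for count, h in children)
    return parent_entropy - weighted


ig = information_gain(0.95, [(14, 0.86), (2, 0.0)])
print(round(ig, 4))  # 0.1975
```

Because the weights are derived from the sample counts, they always sum to 1, which guards against mismatched fractions when working such examples by hand.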

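![Information gain for the split X < 3](https://image.slidesharecdn.com/advancedmachinelearningwithpython1-160901142911/85/Decision-Trees-13-320.jpg)

Using the same formula, if we split at $X < 3$, the 8 samples with $X < 3$ have entropy 0 and the remaining 8 samples with $X \geq 3$ have entropy 0.811. Both children therefore carry weight $8/16$:

$$
\text{Information Gain} = 0.95 - \frac{8}{16}(0) - \frac{8}{16}(0.811) = 0.5445 \approx 0.54
$$

The split $X < 3$ yields a higher information gain than $X < 4$, so it is the better split.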
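Choosing between $X < 3$ and $X < 4$ is one step of the greedy search that tree-growing algorithms run at each node: try every candidate threshold and keep the one with the largest information gain. A minimal sketch for one numeric feature, assuming binary splits of the form $x < t$ and a hypothetical toy dataset `xs`, `ys` (not the data behind the slides); `best_threshold` is a hypothetical helper name:

```python
import math
from collections import Counter


def entropy(labels):
    """Shannon entropy in bits of a list of class labels."""
    n = len(labels)
    return sum(c / n * math.log2(n / c) for c in Counter(labels).values())


def best_threshold(xs, ys):
    """Try every split x < t between distinct values; keep the highest information gain."""
    parent = entropy(ys)
    n = len(ys)
    best_t, best_gain = None, -1.0
    for t in sorted(set(xs))[1:]:
        left = [y for x, y in zip(xs, ys) if x < t]
        right = [y for x, y in zip(xs, ys) if x >= t]
        gain = parent - len(left) / n * entropy(left) - len(right) / n * entropy(right)
        if gain > best_gain:
            best_t, best_gain = t, gain
    return best_t, best_gain


xs = [1, 2, 3, 4, 5, 6, 7, 8]
ys = ["A", "A", "A", "A", "B", "A", "B", "B"]
t, gain = best_threshold(xs, ys)
print(t, round(gain, 3))  # 5 0.549
```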