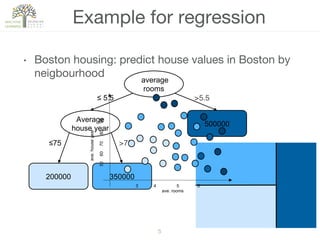

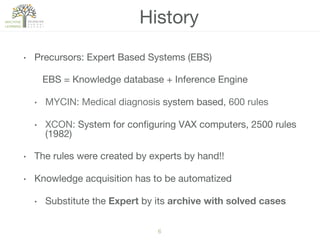

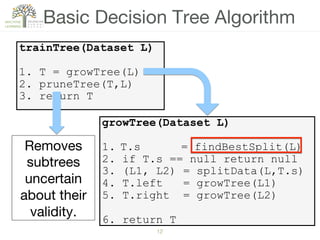

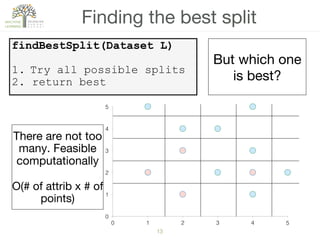

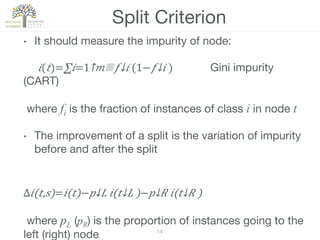

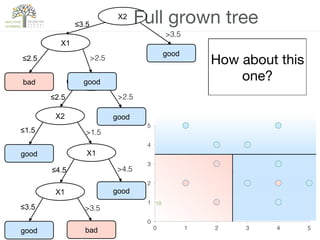

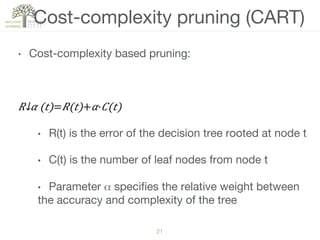

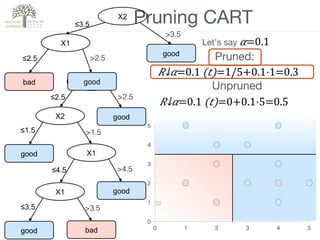

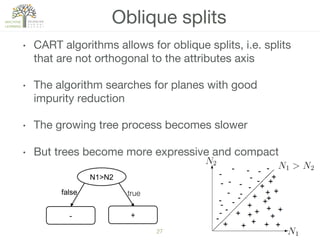

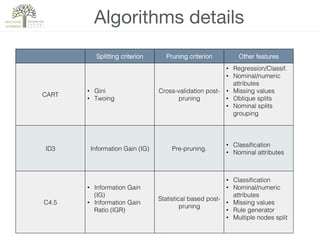

The document discusses decision trees, hierarchical models for classification and regression that recursively partition the data using simple decision rules on the input features. It covers their historical development, algorithms for growing and pruning trees, criteria for choosing splits, and the advantages and disadvantages of decision trees in machine learning. The key algorithms covered are CART, C4.5, and ID3, which differ in how they handle issues such as overfitting and missing values.
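The recursive partitioning and split criteria described above can be illustrated with a minimal sketch. It assumes numeric features and CART-style binary splits of the form `x[f] <= t`. Gini impurity (used by CART) is the default criterion, and entropy (the basis of the information gain used by ID3 and C4.5) can be swapped in. All function names here (`gini`, `entropy`, `best_split`, `grow`, `predict`) are hypothetical. The sketch deliberately omits pruning and missing-value handling, which are exactly where the named algorithms differ. It uses `max_depth` and `min_samples` only as simple pre-pruning stopping rules.

```python
from collections import Counter
from math import log2


def gini(labels):
    """Gini impurity 1 - sum_k p_k^2 (the CART criterion)."""
    n = len(labels)
    return 1.0 - sum((c / n) ** 2 for c in Counter(labels).values())


def entropy(labels):
    """Shannon entropy -sum_k p_k log2 p_k, in bits (basis of information gain)."""
    n = len(labels)
    return -sum((c / n) * log2(c / n) for c in Counter(labels).values())


def best_split(rows, labels, impurity=gini):
    """Return (feature, threshold, gain) for the best binary split, or None."""
    parent = impurity(labels)
    n = len(labels)
    best = None
    for f in range(len(rows[0])):
        # Candidate thresholds are the distinct observed values of feature f.
        for t in sorted({r[f] for r in rows}):
            left = [y for r, y in zip(rows, labels) if r[f] <= t]
            right = [y for r, y in zip(rows, labels) if r[f] > t]
            if not left or not right:
                continue  # a split must produce two non-empty children
            # Weighted impurity of the children; gain is the impurity decrease.
            child = (len(left) * impurity(left) + len(right) * impurity(right)) / n
            gain = parent - child
            if best is None or gain > best[2]:
                best = (f, t, gain)
    return best


def grow(rows, labels, depth=0, max_depth=3, min_samples=2, impurity=gini):
    """Recursively partition the data; each leaf stores its majority class."""
    majority = Counter(labels).most_common(1)[0][0]
    # Stopping rules (a simple form of pre-pruning).
    if depth >= max_depth or len(labels) < min_samples or len(set(labels)) == 1:
        return {"leaf": majority}
    split = best_split(rows, labels, impurity)
    if split is None or split[2] <= 0:
        return {"leaf": majority}  # no split reduces impurity
    f, t, _ = split
    left_idx = [i for i, r in enumerate(rows) if r[f] <= t]
    right_idx = [i for i, r in enumerate(rows) if r[f] > t]
    return {
        "feature": f,
        "threshold": t,
        "left": grow([rows[i] for i in left_idx], [labels[i] for i in left_idx],
                     depth + 1, max_depth, min_samples, impurity),
        "right": grow([rows[i] for i in right_idx], [labels[i] for i in right_idx],
                      depth + 1, max_depth, min_samples, impurity),
    }


def predict(node, x):
    """Follow the decision rules from the root down to a leaf."""
    while "leaf" not in node:
        node = node["left"] if x[node["feature"]] <= node["threshold"] else node["right"]
    return node["leaf"]


# Usage: one feature, two well-separated classes.
X = [[2.0], [3.0], [10.0], [11.0]]
y = ["a", "a", "b", "b"]
tree = grow(X, y)
print(predict(tree, [2.5]), predict(tree, [10.5]))  # prints "a b"
```

Here the root splits on `x[0] <= 3.0`, which separates the classes perfectly (Gini gain 0.5), so both children become pure leaves. Passing `impurity=entropy` to `grow` would choose splits by information gain instead. A real implementation would add post-pruning, such as CART's cost-complexity pruning, to control overfitting.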