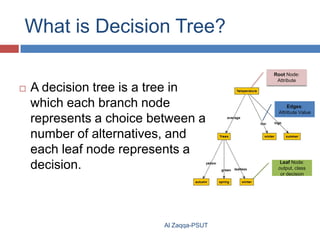

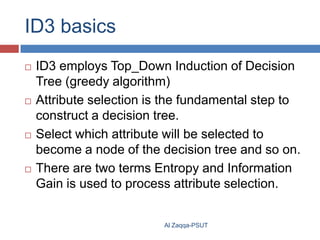

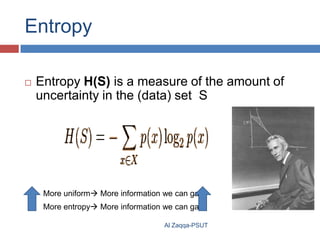

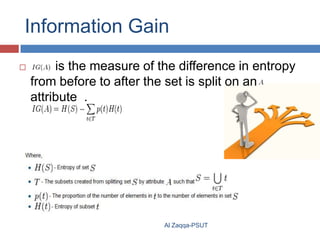

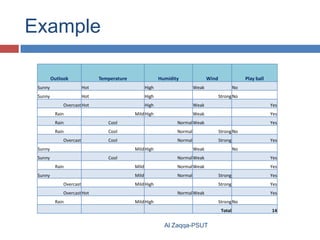

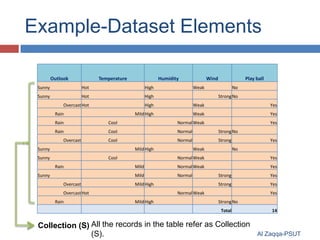

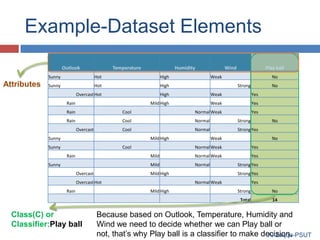

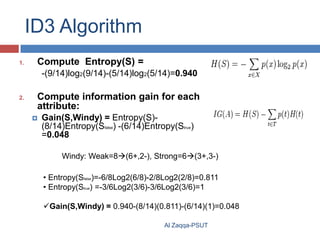

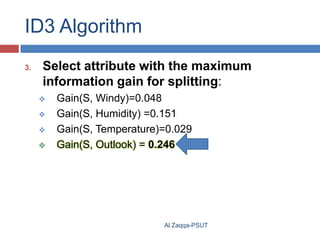

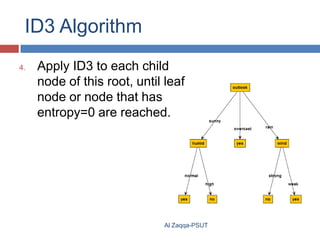

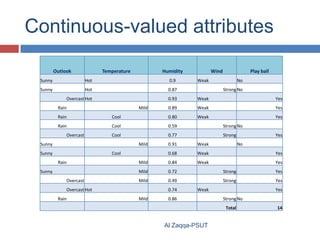

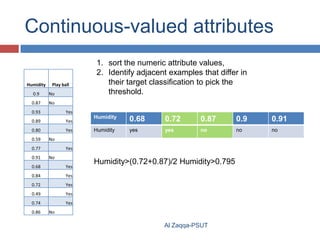

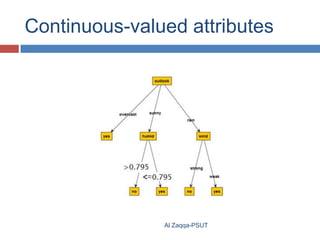

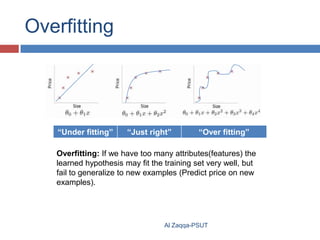

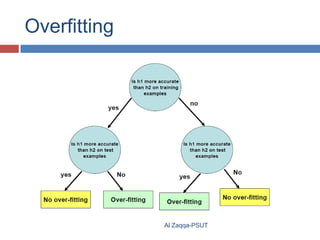

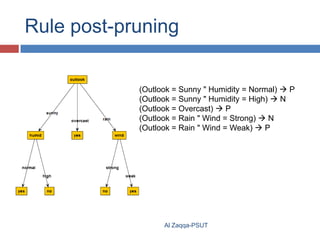

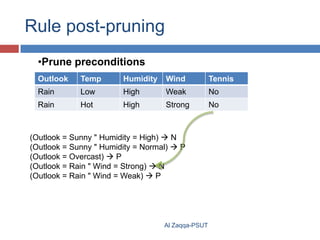

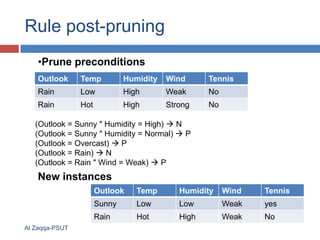

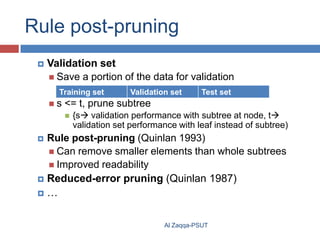

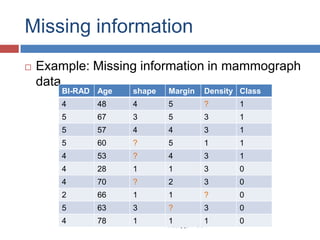

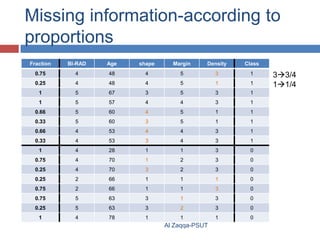

The document covers decision trees in machine learning, centering on ID3, the algorithm Ross Quinlan developed for building a decision tree from a dataset using Shannon entropy. It defines entropy and information gain, the measures used to choose which attribute to split on, and introduces C4.5 as an extension of ID3 that handles continuous attributes and missing data. It also addresses overfitting and describes pruning methods that improve a tree's performance on unseen data.
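
To make entropy and information gain concrete, here is a minimal Python sketch of how an ID3-style split could be scored. The function names (`entropy`, `information_gain`, `best_attribute`) and the four-row toy dataset are illustrative assumptions, not taken from the document. The sketch covers only categorical attributes; C4.5's handling of continuous attributes and missing values, and pruning, are left out.

```python
import math
from collections import Counter


def entropy(labels):
    """Shannon entropy, in bits, of a sequence of class labels."""
    total = len(labels)
    if total == 0:
        return 0.0
    counts = Counter(labels)
    return -sum((c / total) * math.log2(c / total) for c in counts.values())


def information_gain(rows, labels, attribute):
    """Entropy of the labels minus the weighted entropy after splitting on `attribute`."""
    # Group the labels by the value each row takes for the attribute.
    partitions = {}
    for row, label in zip(rows, labels):
        partitions.setdefault(row[attribute], []).append(label)
    # Weighted average entropy of the partitions produced by the split.
    remainder = sum(
        (len(part) / len(labels)) * entropy(part) for part in partitions.values()
    )
    return entropy(labels) - remainder


def best_attribute(rows, labels, attributes):
    """Return the attribute with the highest information gain."""
    return max(attributes, key=lambda a: information_gain(rows, labels, a))


# Hypothetical toy data: "outlook" separates the classes perfectly,
# while "windy" tells us nothing about the label.
rows = [
    {"outlook": "sunny", "windy": "no"},
    {"outlook": "sunny", "windy": "yes"},
    {"outlook": "overcast", "windy": "no"},
    {"outlook": "overcast", "windy": "yes"},
]
labels = ["no", "no", "yes", "yes"]

chosen = best_attribute(rows, labels, ["outlook", "windy"])
print(chosen, information_gain(rows, labels, chosen))
```

On this toy data the labels are evenly split, so their entropy is one bit. Splitting on `outlook` leaves two pure partitions and removes all of that uncertainty, whereas splitting on `windy` leaves each partition as mixed as the original set, so a selection rule based on information gain would pick `outlook` for the root.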