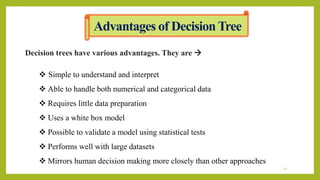

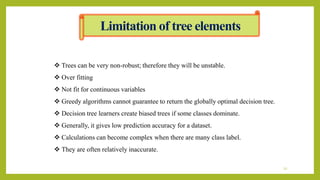

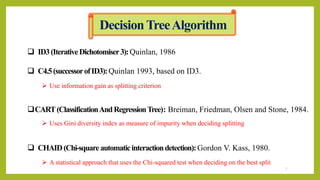

The document presents an overview of decision trees: what they are, common algorithms such as ID3 and C4.5, types of decision trees, and how to construct a decision tree using the ID3 algorithm. It works through an example that applies ID3 to a sample dataset about deciding whether to go out based on weather conditions. Key advantages of decision trees are that they are simple to understand, can handle both numerical and categorical data, and closely mirror human decision making. Limitations include a tendency to overfit and lower accuracy than some other models.

![ Calculate the Entropy of every attribute using the data set S.

Entropy 𝑆 = 𝛴 − 𝑃 𝑖 . 𝑙𝑜𝑔2

𝑝

𝑖

Split the set S into subsets using the attribute for which the resulting entropy is minimum.

Gain (S,A) = Entropy(S) − 𝛴[P(S|A).Entropy(S|A)]

Make a decision tree node contain that attribute.

Recurs on subsets sing remaining attribute.

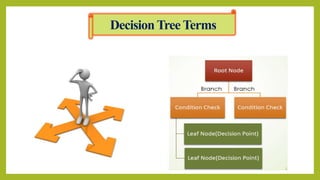

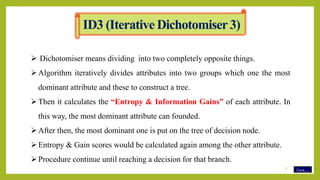

ID3 (Iterative Dichotomiser 3)

Cont…9](https://image.slidesharecdn.com/decisiontreeinartificialintelligence-200307042210/85/Decision-tree-in-artificial-intelligence-9-320.jpg)
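The four steps above can be sketched in a few lines of Python. This is a minimal illustration, not the slide author's implementation: the function names `entropy`, `gain`, and `id3`, the list-of-dicts row format, and the three-row `toy` dataset are all assumptions made for the example.

```python
from collections import Counter
from math import log2


def entropy(labels):
    """Shannon entropy (base 2) of a list of class labels."""
    total = len(labels)
    return sum(-(n / total) * log2(n / total) for n in Counter(labels).values())


def gain(rows, attribute, target):
    """Information gain from splitting `rows` (a list of dicts) on `attribute`."""
    subsets = {}
    for row in rows:
        subsets.setdefault(row[attribute], []).append(row[target])
    # Weighted entropy of the subsets produced by the split.
    remainder = sum(len(s) / len(rows) * entropy(s) for s in subsets.values())
    return entropy([row[target] for row in rows]) - remainder


def id3(rows, attributes, target):
    """Return a nested-dict tree: {attribute: {value: subtree_or_label}}."""
    labels = [row[target] for row in rows]
    if len(set(labels)) == 1:  # pure subset: make a leaf
        return labels[0]
    if not attributes:  # nothing left to split on: fall back to majority label
        return Counter(labels).most_common(1)[0][0]
    best = max(attributes, key=lambda a: gain(rows, a, target))
    remaining = [a for a in attributes if a != best]
    tree = {best: {}}
    for value in sorted({row[best] for row in rows}):
        subset = [row for row in rows if row[best] == value]
        tree[best][value] = id3(subset, remaining, target)
    return tree


# Hypothetical three-row dataset, only to show the call shape.
toy = [
    {"Wind": "Weak", "Decision": "Yes"},
    {"Wind": "Weak", "Decision": "Yes"},
    {"Wind": "Strong", "Decision": "No"},
]
print(id3(toy, ["Wind"], "Decision"))  # {'Wind': {'Strong': 'No', 'Weak': 'Yes'}}
```

Picking the attribute with the highest gain is the same choice as picking the one with the lowest weighted entropy after the split, because `Entropy(S)` is constant across the candidates.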

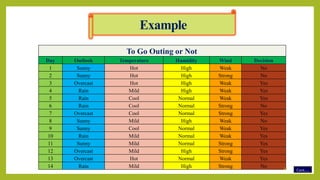

![Day Outlook Temperature Humidity Wind Decision

3 Overcast Hot High Weak Yes

7 Overcast Cool Normal Strong Yes

12 Overcast Mild High Strong Yes

13 Overcast Hot Normal Weak Yes

Outlook

Sunny Overcast Rain

Yes

[3,7,12,13]

Decision will always be yes if outlook were overcast

Cont…

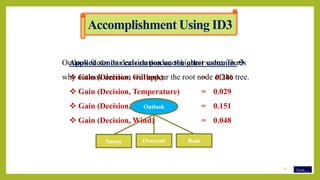

Accomplishment Using ID3

16](https://image.slidesharecdn.com/decisiontreeinartificialintelligence-200307042210/85/Decision-tree-in-artificial-intelligence-16-320.jpg)
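The reason the Overcast branch needs no further split can be written out with the entropy formula. The arithmetic below is worked from the four Overcast rows listed on this slide rather than quoted from it.

```latex
\[
\mathrm{Entropy}(S_{\text{Overcast}})
  = -\tfrac{4}{4}\log_2\tfrac{4}{4}
  = 0
\]
```

A subset with zero entropy contains a single class, so ID3 stops there and emits a leaf.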

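![Slide 18: Accomplishment Using ID3, Cont…](https://image.slidesharecdn.com/decisiontreeinartificialintelligence-200307042210/85/Decision-tree-in-artificial-intelligence-18-320.jpg)

Sunny rows with Humidity = High:

| Day | Outlook | Temperature | Humidity | Wind | Decision |
|---|---|---|---|---|---|
| 1 | Sunny | Hot | High | Weak | No |
| 2 | Sunny | Hot | High | Strong | No |
| 8 | Sunny | Mild | High | Weak | No |

Sunny rows with Humidity = Normal:

| Day | Outlook | Temperature | Humidity | Wind | Decision |
|---|---|---|---|---|---|
| 9 | Sunny | Cool | Normal | Weak | Yes |
| 11 | Sunny | Mild | Normal | Strong | Yes |

Under Sunny, the tree splits on Humidity:

- High → No [1, 2, 8]
- Normal → Yes [9, 11]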
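To see why Humidity is chosen under Sunny, the gains can be worked by hand from the five Sunny rows (2 Yes, 3 No). These figures are computed here from the listed rows and are not quoted from the slide.

```latex
\[
\begin{aligned}
\mathrm{Entropy}(S_{\text{Sunny}})
  &= -\tfrac{2}{5}\log_2\tfrac{2}{5} - \tfrac{3}{5}\log_2\tfrac{3}{5} \approx 0.971 \\
\mathrm{Gain}(S_{\text{Sunny}}, \text{Humidity})
  &= 0.971 - \left[\tfrac{3}{5}\cdot 0 + \tfrac{2}{5}\cdot 0\right] = 0.971 \\
\mathrm{Gain}(S_{\text{Sunny}}, \text{Temperature})
  &= 0.971 - \left[\tfrac{2}{5}\cdot 0 + \tfrac{2}{5}\cdot 1 + \tfrac{1}{5}\cdot 0\right] = 0.571 \\
\mathrm{Gain}(S_{\text{Sunny}}, \text{Wind})
  &\approx 0.971 - \left[\tfrac{3}{5}\cdot 0.918 + \tfrac{2}{5}\cdot 1\right] \approx 0.020
\end{aligned}
\]
```

Both Humidity subsets are pure, so the split removes all remaining entropy and each branch becomes a leaf.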

![Slide 19: Accomplishment Using ID3, Cont…](https://image.slidesharecdn.com/decisiontreeinartificialintelligence-200307042210/85/Decision-tree-in-artificial-intelligence-19-320.jpg)

Rain outlook on decision:

| Day | Outlook | Temperature | Humidity | Wind | Decision |
|---|---|---|---|---|---|
| 4 | Rain | Mild | High | Weak | Yes |
| 5 | Rain | Cool | Normal | Weak | Yes |
| 6 | Rain | Cool | Normal | Strong | No |
| 10 | Rain | Mild | Normal | Weak | Yes |
| 14 | Rain | Mild | High | Strong | No |

Candidate splits scored for the Rain branch:

- (Outlook = Rain | Temp)
- (Outlook = Rain | Humidity)
- (Outlook = Rain | Wind)

Wind produces the highest score when the outlook is Rain, so the Rain branch splits on Wind:

- Strong → No [6, 14]
- Weak → Yes [4, 5, 10]
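The three candidate scores for the Rain branch can be recomputed from the five Rain rows on this slide. The sketch below is an illustration under assumptions: the tuple layout, the names `RAIN_ROWS`, `COLUMNS`, and `rain_gains`, and the helper functions are hypothetical, and the printed values come from this code, not from the slide.

```python
from collections import Counter
from math import log2

# Rain rows keyed by day: (Temperature, Humidity, Wind, Decision).
RAIN_ROWS = {
    4: ("Mild", "High", "Weak", "Yes"),
    5: ("Cool", "Normal", "Weak", "Yes"),
    6: ("Cool", "Normal", "Strong", "No"),
    10: ("Mild", "Normal", "Weak", "Yes"),
    14: ("Mild", "High", "Strong", "No"),
}
COLUMNS = {"Temperature": 0, "Humidity": 1, "Wind": 2}


def entropy(labels):
    """Shannon entropy (base 2) of a list of class labels."""
    total = len(labels)
    return sum(-(n / total) * log2(n / total) for n in Counter(labels).values())


def gain(rows, column):
    """Information gain of splitting on the given column index; the label is last."""
    groups = {}
    for row in rows:
        groups.setdefault(row[column], []).append(row[-1])
    remainder = sum(len(g) / len(rows) * entropy(g) for g in groups.values())
    return entropy([row[-1] for row in rows]) - remainder


rows = list(RAIN_ROWS.values())
rain_gains = {name: gain(rows, index) for name, index in COLUMNS.items()}
for name, value in rain_gains.items():
    print(f"Gain(Outlook = Rain | {name}) = {value:.3f}")
# Gain(Outlook = Rain | Temperature) = 0.020
# Gain(Outlook = Rain | Humidity) = 0.020
# Gain(Outlook = Rain | Wind) = 0.971
```

Wind separates the Rain rows into two pure groups, which is why its gain equals the full entropy of the Rain subset.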

![Slide 20: Accomplishment Using ID3](https://image.slidesharecdn.com/decisiontreeinartificialintelligence-200307042210/85/Decision-tree-in-artificial-intelligence-20-320.jpg)

- Outlook
  - Sunny → Humidity
    - High → No [1, 2, 8]
    - Normal → Yes [9, 11]
  - Overcast → Yes [3, 7, 12, 13]
  - Rain → Wind
    - Weak → Yes [4, 5, 10]
    - Strong → No [6, 14]
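Once built, the tree can be used to classify a new day by following one branch per attribute. The sketch below encodes the final tree from this slide as nested dicts; the names `TREE`, `predict`, and `day_14`, and the dict representation itself, are assumptions chosen for illustration.

```python
# Final tree from the slide: {attribute: {value: subtree_or_label}}.
TREE = {
    "Outlook": {
        "Sunny": {"Humidity": {"High": "No", "Normal": "Yes"}},
        "Overcast": "Yes",
        "Rain": {"Wind": {"Weak": "Yes", "Strong": "No"}},
    }
}


def predict(tree, sample):
    """Walk the tree until a leaf label (a plain string) is reached."""
    while isinstance(tree, dict):
        attribute = next(iter(tree))  # each node tests exactly one attribute
        tree = tree[attribute][sample[attribute]]
    return tree


# Day 14 from the Rain table: Mild, High humidity, Strong wind.
day_14 = {"Outlook": "Rain", "Temperature": "Mild", "Humidity": "High", "Wind": "Strong"}
print(predict(TREE, day_14))  # No
```

Temperature never appears in the tree, so `predict` ignores it; a value that was not seen during training would raise a `KeyError` in this sketch.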