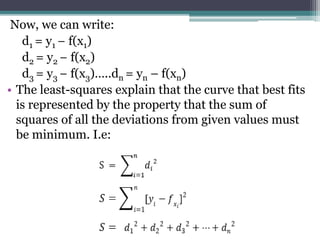

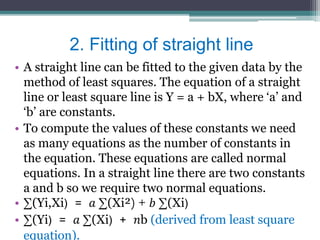

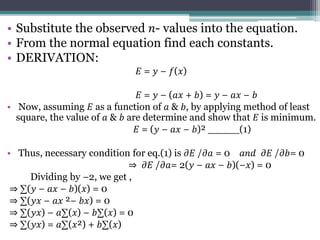

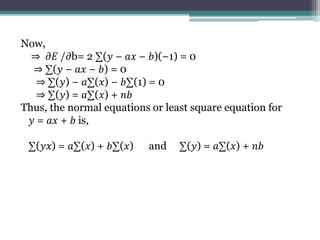

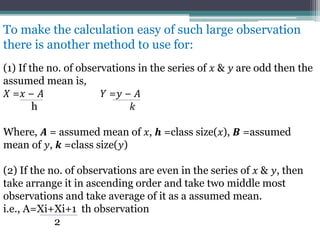

The document discusses curve fitting and the principle of least squares. It describes curve fitting as the construction of a mathematical function that best fits a series of data points. The principle of least squares states that, among candidate curves, the best fit is the one that minimizes the sum of the squared residuals, where a residual is the difference between an observed y-value and the value predicted by the curve. In particular, the document covers fitting a straight line y = a + bx to data, using the method of least squares to compute the constants a and b. The normal equations for a and b are obtained by setting the partial derivatives of this sum of squared errors with respect to a and b equal to zero.
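For n data points (x_i, y_i), write E for the sum of squared errors of the line y = a + bx. Setting both partial derivatives of E to zero yields the two normal equations:

$$
E = \sum_{i=1}^{n} \left(y_i - a - b x_i\right)^2
$$

$$
\frac{\partial E}{\partial a} = -2\sum_{i=1}^{n}\left(y_i - a - b x_i\right) = 0
\;\Longrightarrow\;
\sum_{i=1}^{n} y_i = n a + b \sum_{i=1}^{n} x_i
$$

$$
\frac{\partial E}{\partial b} = -2\sum_{i=1}^{n} x_i\left(y_i - a - b x_i\right) = 0
\;\Longrightarrow\;
\sum_{i=1}^{n} x_i y_i = a \sum_{i=1}^{n} x_i + b \sum_{i=1}^{n} x_i^2
$$

The following is a minimal Python sketch that forms these sums and solves the 2×2 system by Cramer's rule. It assumes the data arrive as two equal-length lists of numbers. The function name `fit_line` is hypothetical and does not come from the document.

```python
def fit_line(xs, ys):
    """Fit y = a + b*x by least squares, solving the two normal equations.

    Hypothetical helper for illustration. The normal equations are
        sum(y)   = n*a      + b*sum(x)
        sum(x*y) = a*sum(x) + b*sum(x*x)
    and are solved here with Cramer's rule.
    """
    if len(xs) != len(ys):
        raise ValueError("xs and ys must have the same length")
    n = len(xs)
    if n < 2:
        raise ValueError("need at least two points")

    # Sums that appear in the normal equations
    sx = sum(xs)
    sy = sum(ys)
    sxx = sum(x * x for x in xs)
    sxy = sum(x * y for x, y in zip(xs, ys))

    # Determinant of [[n, sx], [sx, sxx]]; zero when all x values are equal
    det = n * sxx - sx * sx
    if det == 0:
        raise ValueError("x values must not all be equal")

    a = (sy * sxx - sx * sxy) / det  # intercept
    b = (n * sxy - sx * sy) / det    # slope
    return a, b
```

For points that lie exactly on a line, the fit recovers it: `fit_line([1, 2, 3, 4], [3, 5, 7, 9])` returns `(1.0, 2.0)`, that is, y = 1 + 2x. For noisy data such as `fit_line([0, 1, 2], [1, 2, 2])`, the result is a = 7/6 and b = 1/2. The residuals of that fit sum to zero, which is exactly what the first normal equation requires.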