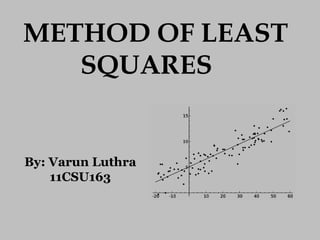

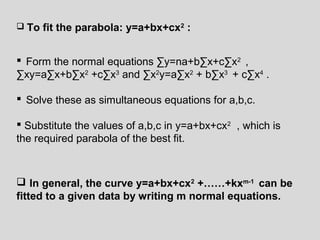

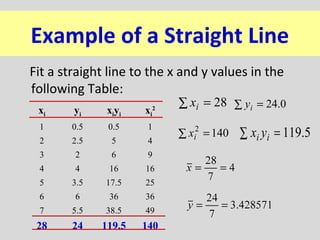

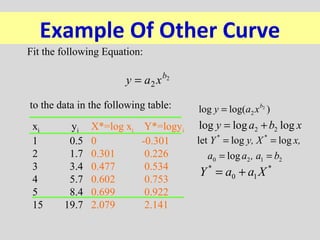

This document covers the method of least squares for fitting curves to data points. It opens with two applications of curve fitting, trend analysis and hypothesis testing, and then distinguishes two approaches to it: least squares regression and interpolation. The main part details the least squares method: forming the normal equations from the data points, solving them for the coefficients of the curve, and examples of fitting straight lines and parabolas to data. It concludes by noting that the method's key use is fitting data by minimizing the residuals, and that it has limitations when the independent variable is itself uncertain.
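The procedure summarized above (form the normal equations, solve for the coefficients, inspect the residuals) can be illustrated with a minimal Python sketch. It is not taken from the document: the function name `fit_polynomial`, the use of NumPy, and the sample data are assumptions chosen for illustration. For a polynomial of a given degree, the design matrix `A` has columns 1, x, x², and so on, and the normal equations are (AᵀA)c = Aᵀy.

```python
import numpy as np

def fit_polynomial(x, y, degree):
    """Least squares polynomial fit via the normal equations (A^T A) c = A^T y.

    Hypothetical helper for illustration; returns the coefficients in
    increasing order of power, together with the residuals y - A c.
    """
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    # Design matrix with columns 1, x, x^2, ..., x^degree
    A = np.vander(x, degree + 1, increasing=True)
    # Form the normal equations and solve them for the coefficients
    coeffs = np.linalg.solve(A.T @ A, A.T @ y)
    residuals = y - A @ coeffs
    return coeffs, residuals

# Made-up sample data lying exactly on y = 1 + x + x^2
x = [0, 1, 2, 3, 4]
y = [1, 3, 7, 13, 21]

line, line_res = fit_polynomial(x, y, 1)    # straight line: a0 + a1*x
parab, parab_res = fit_polynomial(x, y, 2)  # parabola: a0 + a1*x + a2*x^2

print("line coefficients:    ", line)
print("parabola coefficients:", parab)
print("sum of squared residuals (line, parabola):",
      np.sum(line_res**2), np.sum(parab_res**2))
```

Because the sample points lie on a parabola, the degree-2 fit should drive the residuals to essentially zero, while the straight line leaves a nonzero sum of squared residuals. The sketch treats `x` as exact and attributes all error to `y`, which matches the limitation the summary mentions. Solving the normal equations directly can become ill-conditioned for higher degrees; `np.linalg.lstsq` is a common alternative in that case.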