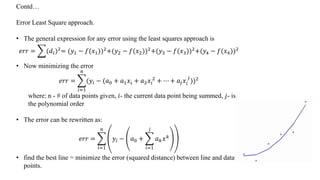

Curve fitting is a statistical technique used to create mathematical functions that closely approximate a set of data, allowing for the modeling of relationships between variables. The process includes methods like least squares regression and interpolation, which help in estimating parameters for mechanistic models that describe various phenomena. Accurate curve fitting is essential for analyzing experimental data, but care must be taken to avoid overfitting or underfitting the model to the data.

![Interpolation

• The simplest type of interpolation is linear interpolation, which connects each pair of adjacent data points with a straight line.

• The polynomial that links the data points together is of first degree, i.e., a straight line.

• Given the data points $(a, f(a))$ and $(c, f(c))$, where $c > a$, we wish to estimate $f(b)$ for $b \in [a, c]$ using linear interpolation.](https://image.slidesharecdn.com/am17m022sachinkumar-181112120828/85/Curve-Fitting-5-320.jpg)

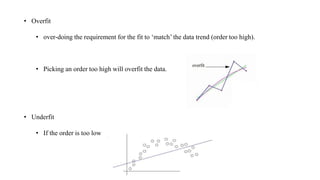

![Contd…

• The linear interpolation function for values between a and c can be found using similar triangles or by solving a system of two equations in two unknowns.

• The slope-intercept form of a line is:

$$y = f(x) = \alpha x + \beta, \quad x \in [a, c]$$

As boundary conditions, this line must pass through the points $(a, f(a))$ and $(c, f(c))$.

Solving these two conditions for $\alpha$ and $\beta$ and substituting them back gives the estimate at $b$:

$$f(b) = f(a) + \frac{b - a}{c - a}\,\bigl(f(c) - f(a)\bigr)$$](https://image.slidesharecdn.com/am17m022sachinkumar-181112120828/85/Curve-Fitting-6-320.jpg)
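The two-equation approach from the slide can be sketched in a few lines of Python. This is a minimal illustration, not code from the deck: the function name `linear_interpolate` and the sample values are assumptions chosen for the example. It solves for the slope α and intercept β from the two boundary points and then evaluates the line at b.

```python
def linear_interpolate(a, fa, c, fc, b):
    """Estimate f(b) on the straight line through (a, fa) and (c, fc)."""
    if c == a:
        raise ValueError("a and c must be distinct points")
    # Solve alpha*a + beta = fa and alpha*c + beta = fc for the two unknowns.
    alpha = (fc - fa) / (c - a)  # slope of the line
    beta = fa - alpha * a        # intercept of the line
    return alpha * b + beta


# Hypothetical sample values, used only for illustration.
estimate = linear_interpolate(a=1.0, fa=2.0, c=3.0, fc=6.0, b=2.0)
print(estimate)
```

Evaluating α·b + β is algebraically the same as f(a) + (b − a)/(c − a) · (f(c) − f(a)); the slope-intercept form is used here only because it mirrors the derivation on the slide.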

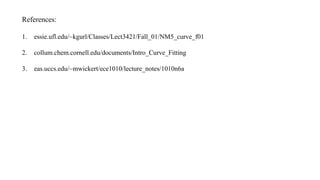

![Contd…

• Least-squares regression.

In linear regression, a linear equation is chosen to fit the data points such that the sum of the squared errors between the data points and the line is minimized.

The squared distance is measured along the y-axis, i.e., vertically.

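Given a set of data points

$$(x_k, y_k), \quad k = 1, \dots, N$$

the mean squared error (mse) is defined as

$$\mathrm{mse} = \frac{1}{N} \sum_{k=1}^{N} \left[ y_k - \hat{y}_k \right]^2 = \frac{1}{N} \sum_{k=1}^{N} \left[ y_k - (m x_k + b) \right]^2$$

where $\hat{y}_k = m x_k + b$ is the value predicted by the line.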

The minimum mse is obtained for particular values of m and b. Using calculus, we compute the partial derivatives of the mse with respect to m and b.

1. derivative describes the slope

2. slope = zero is a minimum ==> take the derivative of the](https://image.slidesharecdn.com/am17m022sachinkumar-181112120828/85/Curve-Fitting-9-320.jpg)
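As a rough sketch of the least-squares idea described on the slide, the Python below computes the mse for a candidate line and then fits m and b. The closed-form expressions used in `fit_line` are the standard result of setting both partial derivatives of the mse to zero; they are not shown in the slide text itself. The function names and sample data are assumptions made for illustration.

```python
def mse(xs, ys, m, b):
    """Mean squared vertical error between the points and the line y = m*x + b."""
    n = len(xs)
    return sum((y - (m * x + b)) ** 2 for x, y in zip(xs, ys)) / n


def fit_line(xs, ys):
    """Return (m, b) minimizing the mse, using the standard closed-form solution."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need at least two (x, y) pairs of equal length")
    x_mean = sum(xs) / n
    y_mean = sum(ys) / n
    sxx = sum((x - x_mean) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("all x values are identical; the slope is undefined")
    sxy = sum((x - x_mean) * (y - y_mean) for x, y in zip(xs, ys))
    m = sxy / sxx            # slope from d(mse)/dm = 0
    b = y_mean - m * x_mean  # intercept from d(mse)/db = 0
    return m, b


# Hypothetical data lying exactly on y = 2x + 1, used only for illustration.
xs = [0.0, 1.0, 2.0, 3.0]
ys = [1.0, 3.0, 5.0, 7.0]
m, b = fit_line(xs, ys)
print(m, b, mse(xs, ys, m, b))
```

Because the sample points lie exactly on a line, the fitted line reproduces them and the mse is zero; with noisy data the same functions return the line with the smallest mse and a positive residual error.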