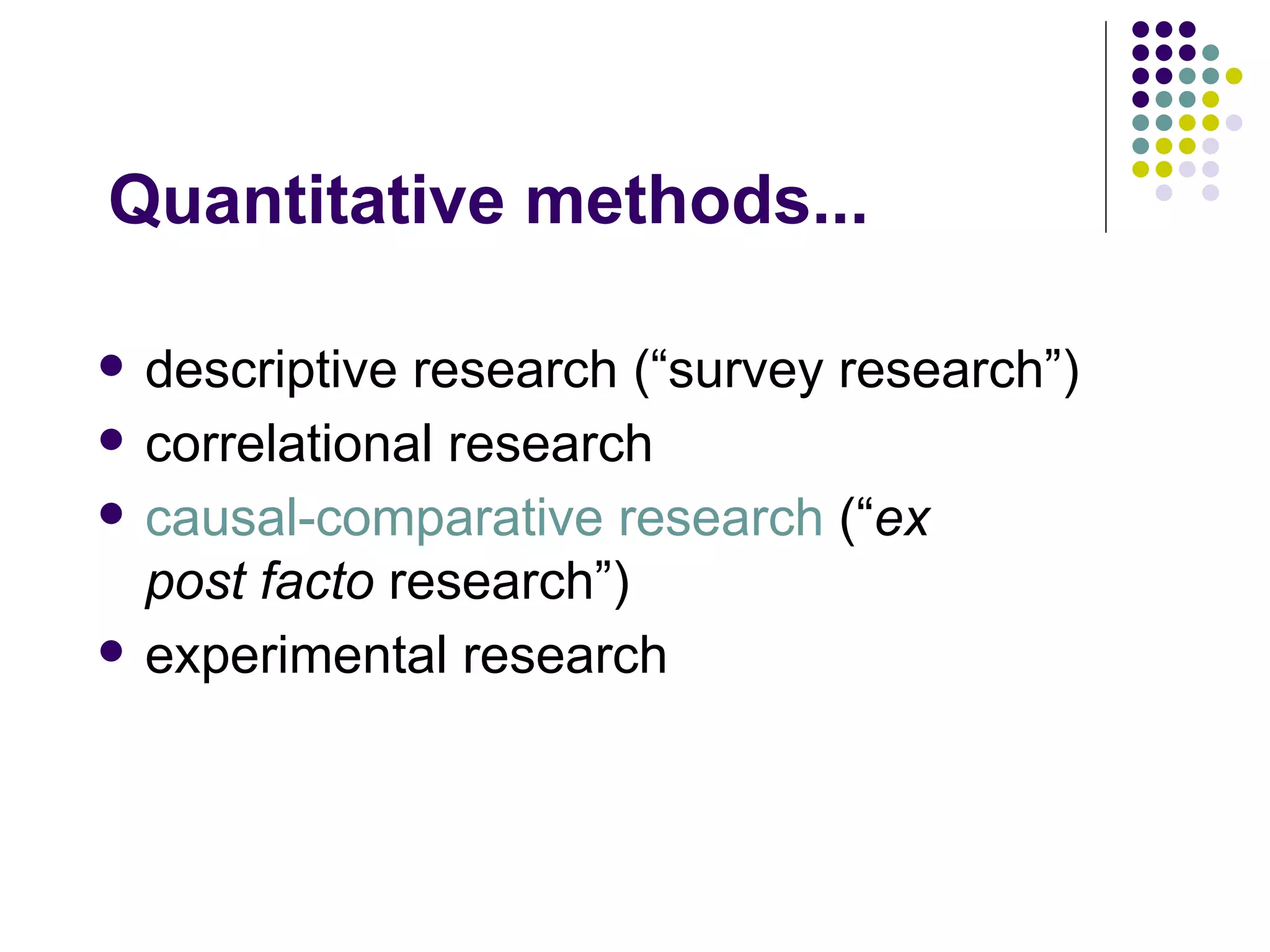

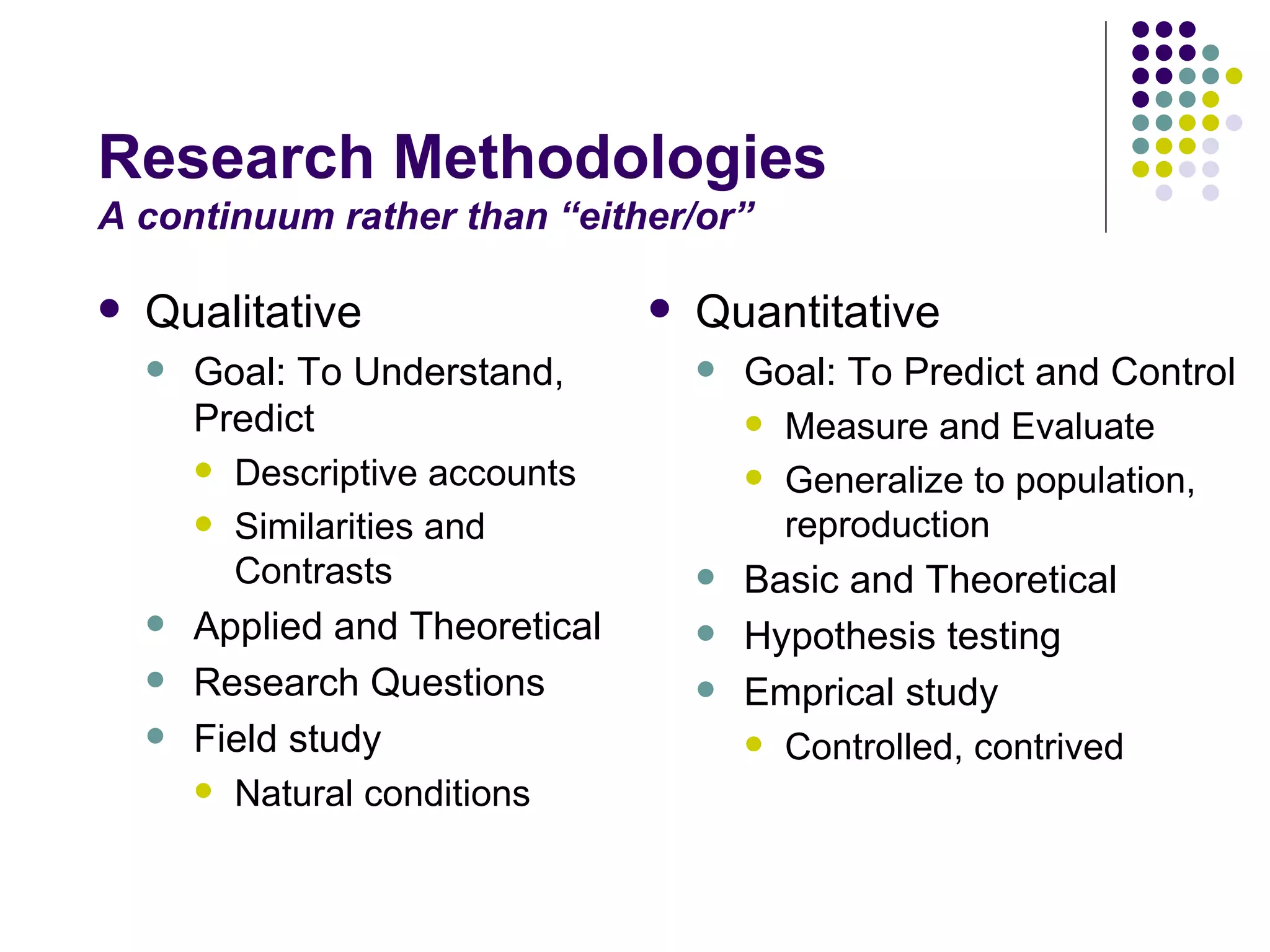

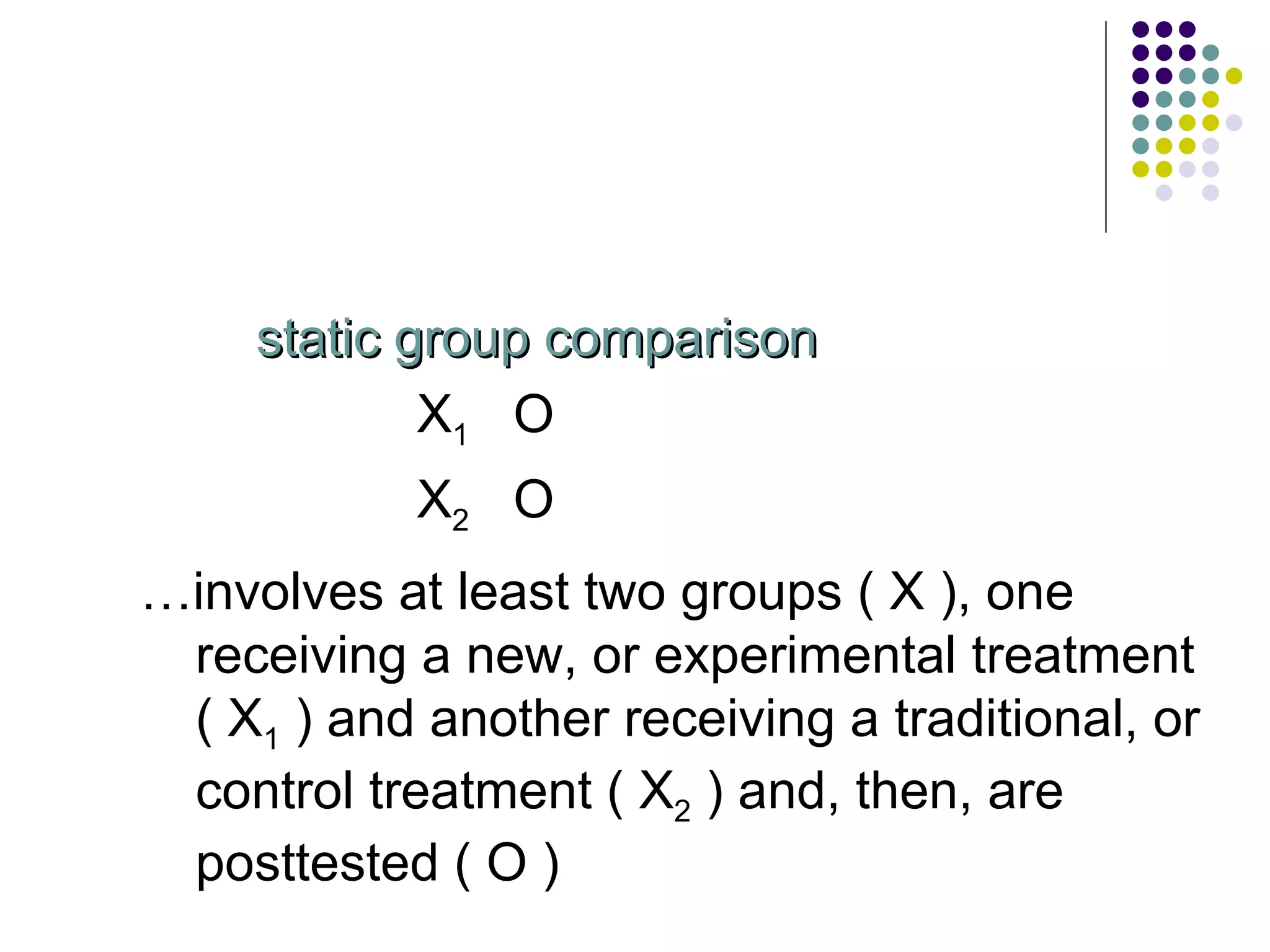

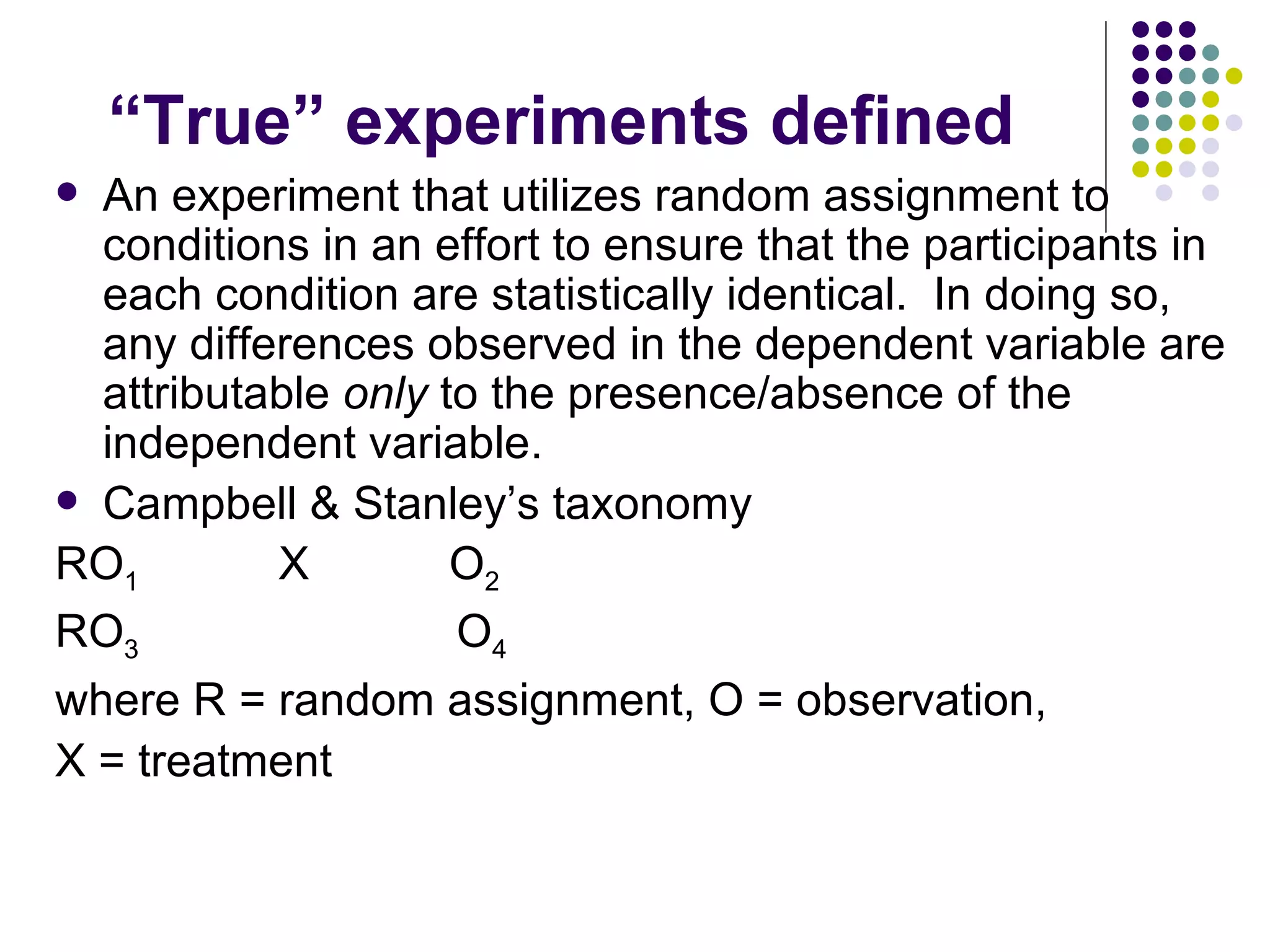

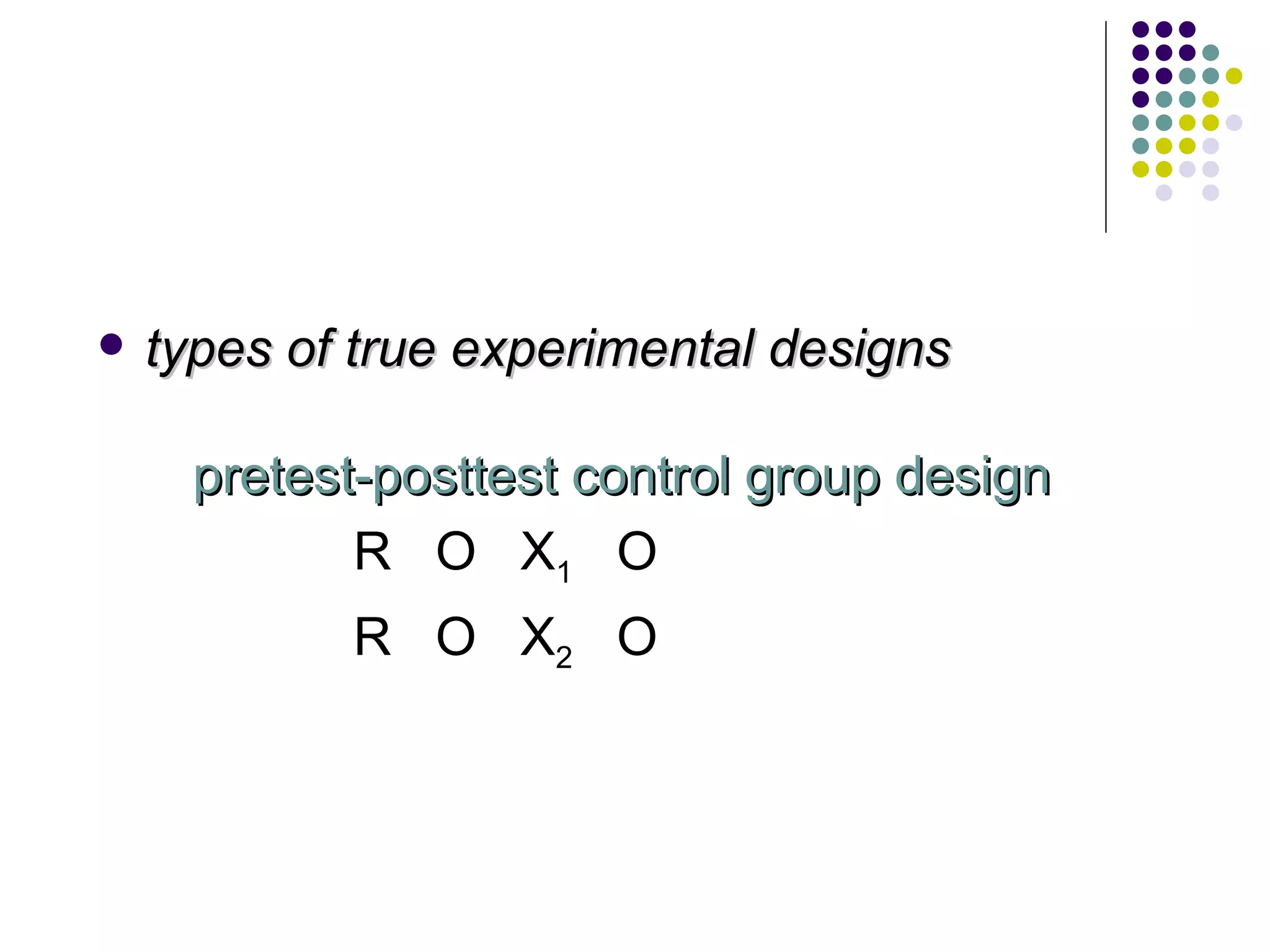

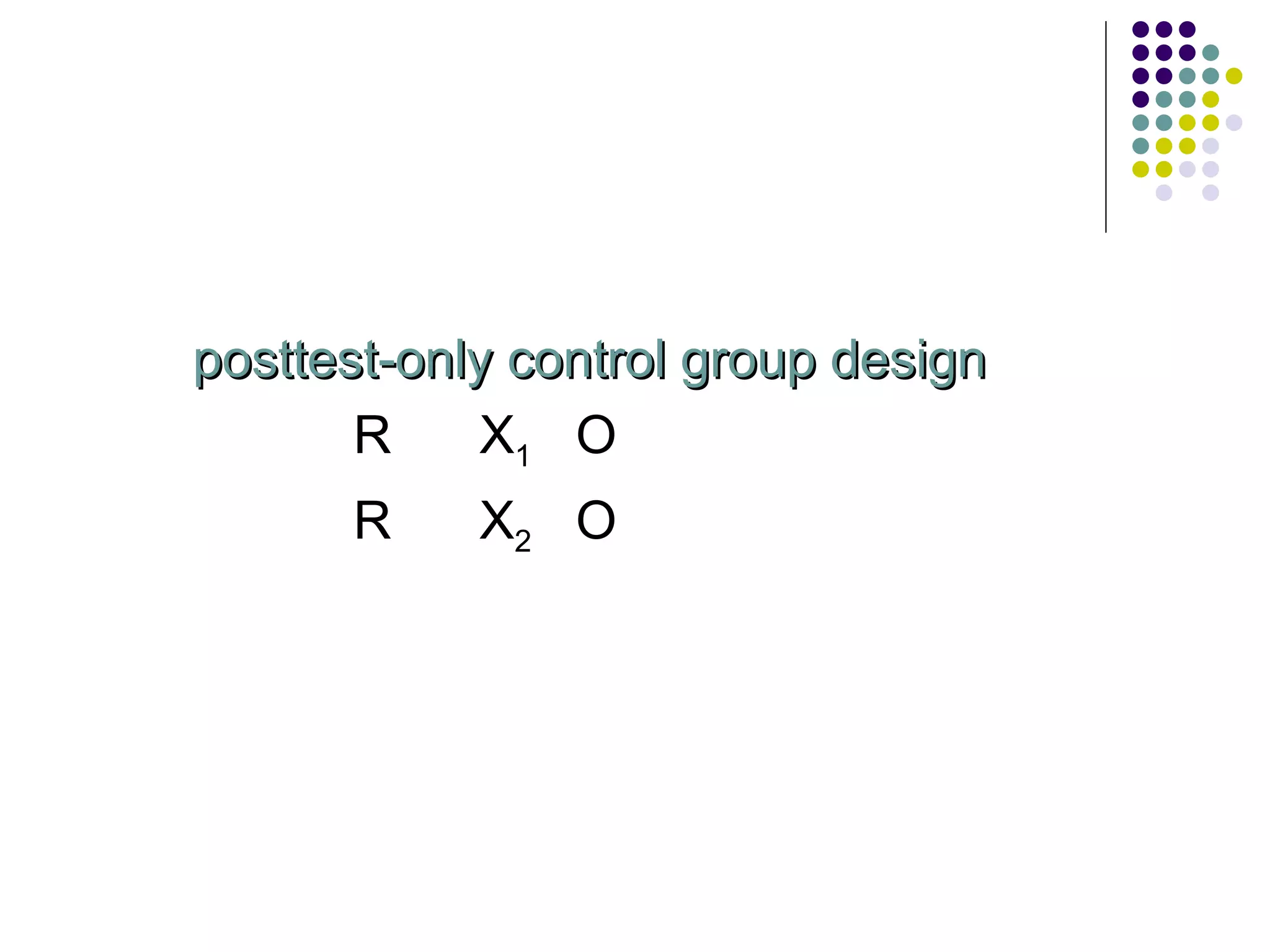

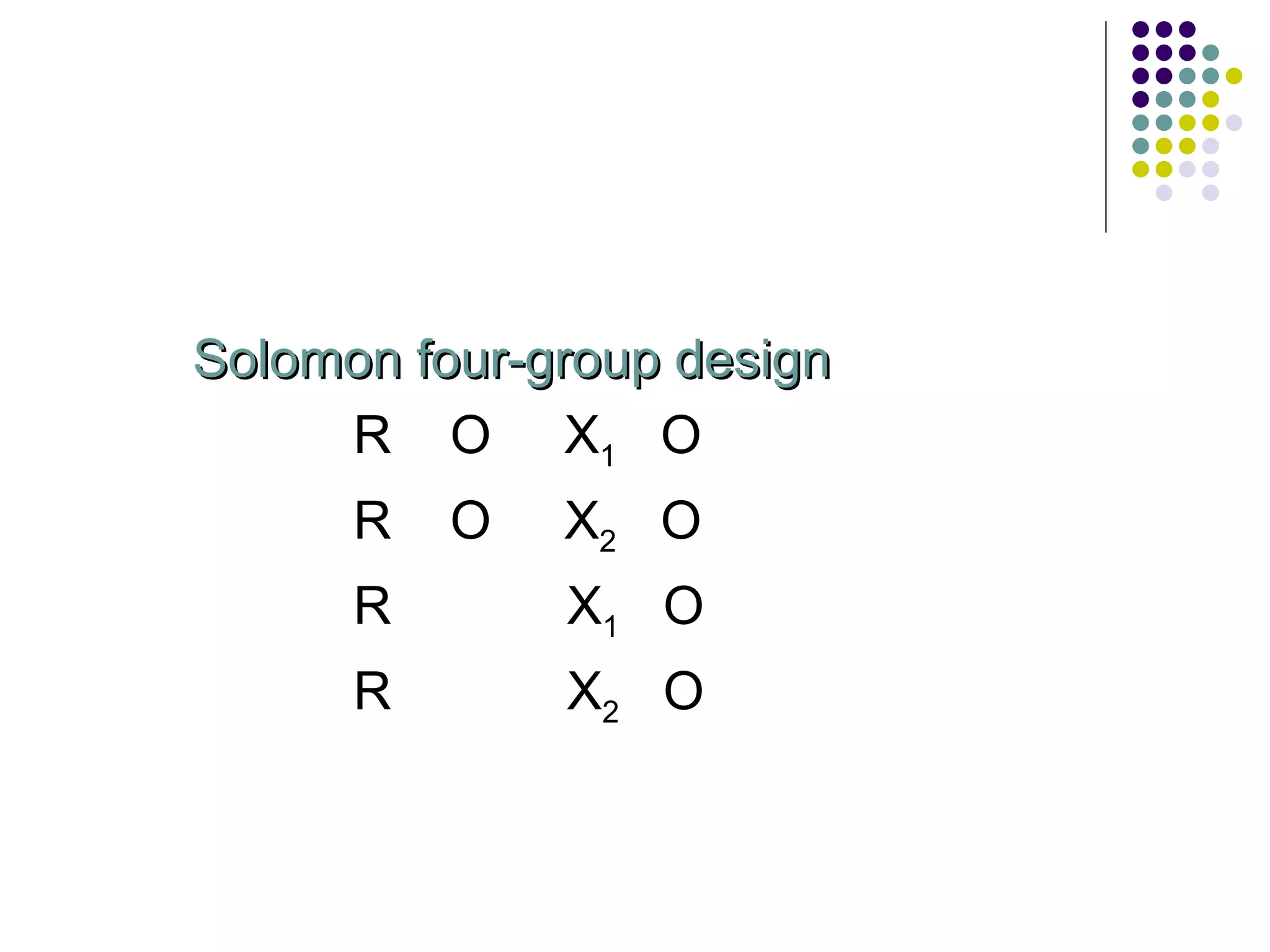

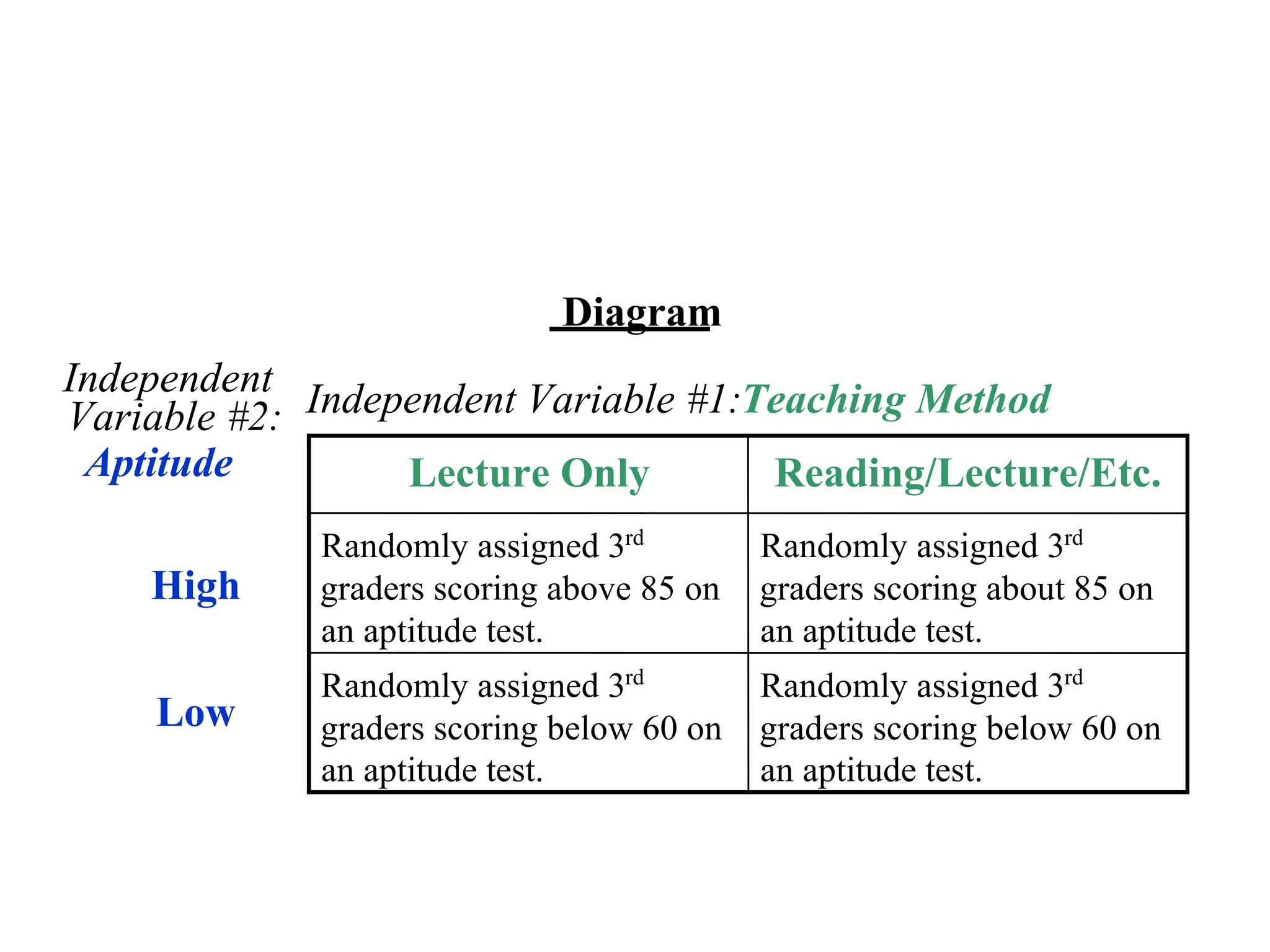

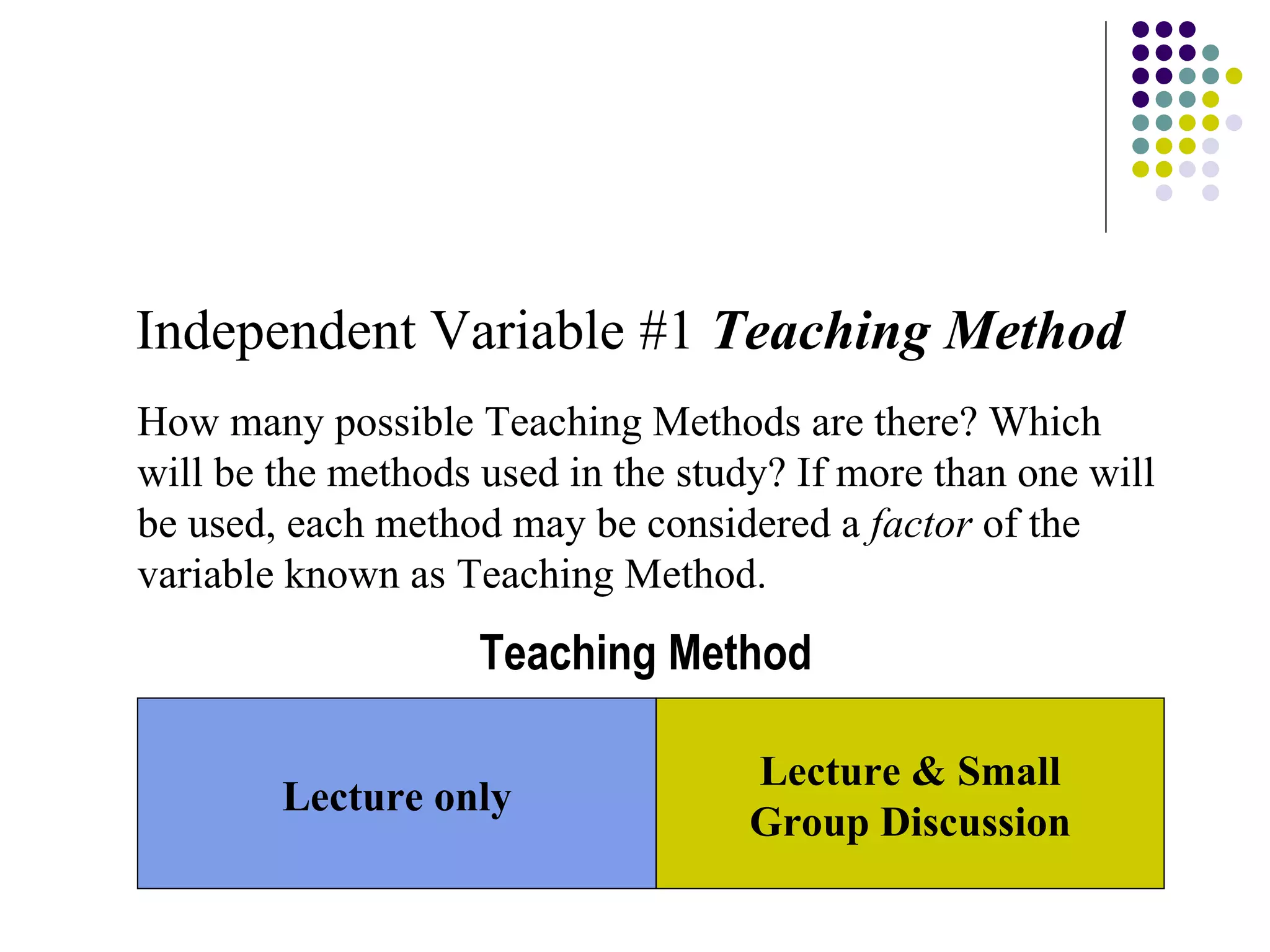

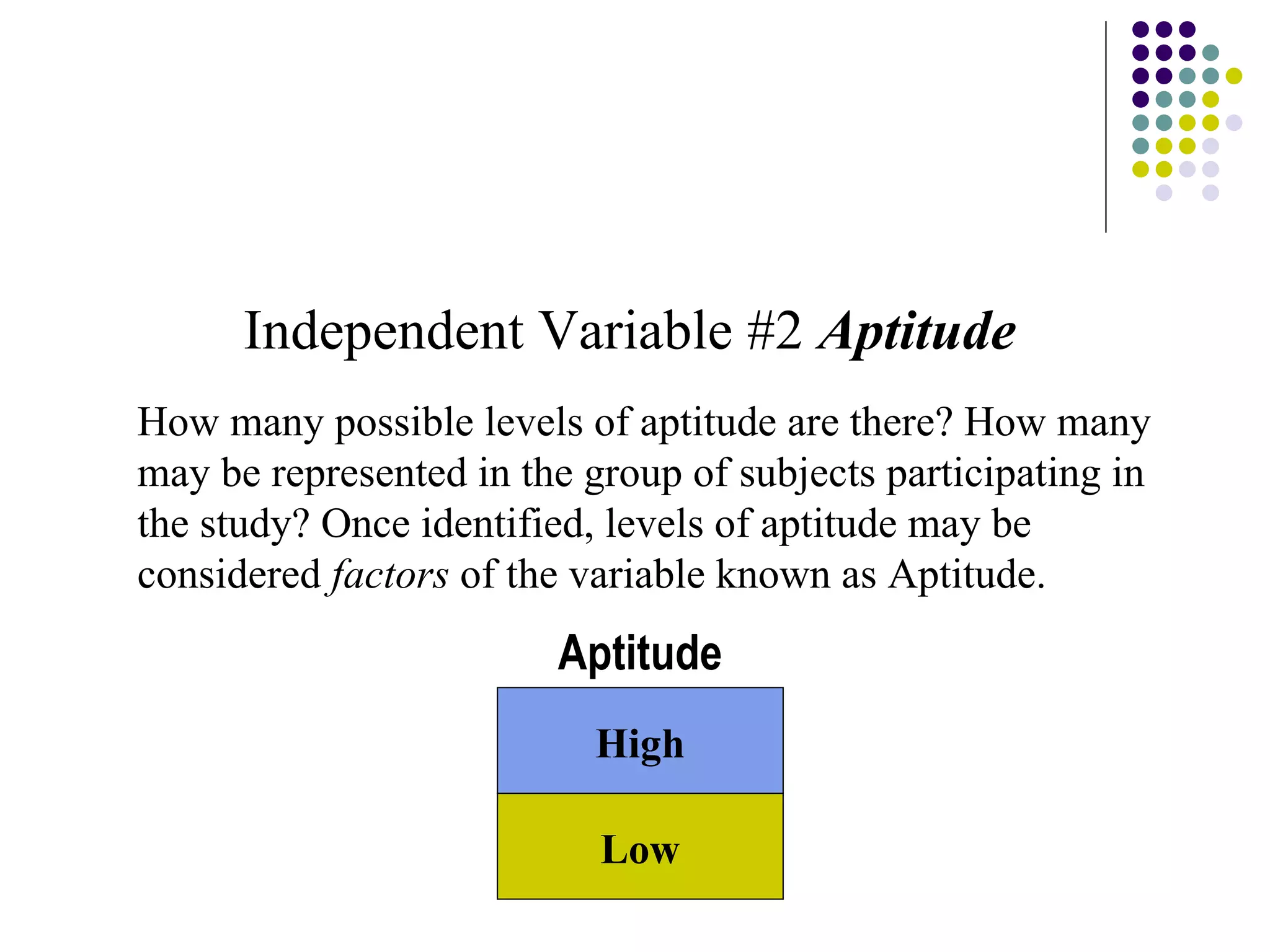

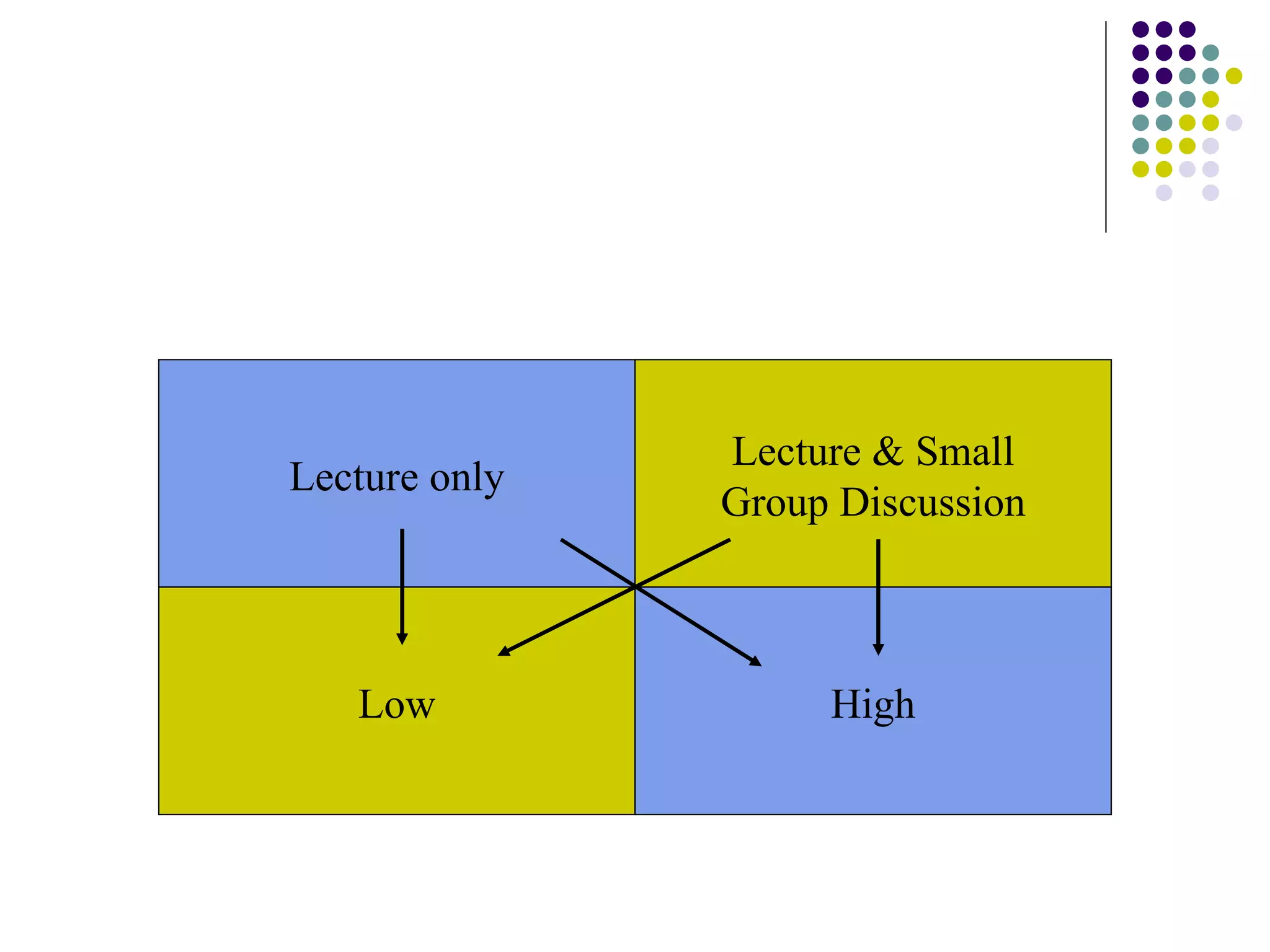

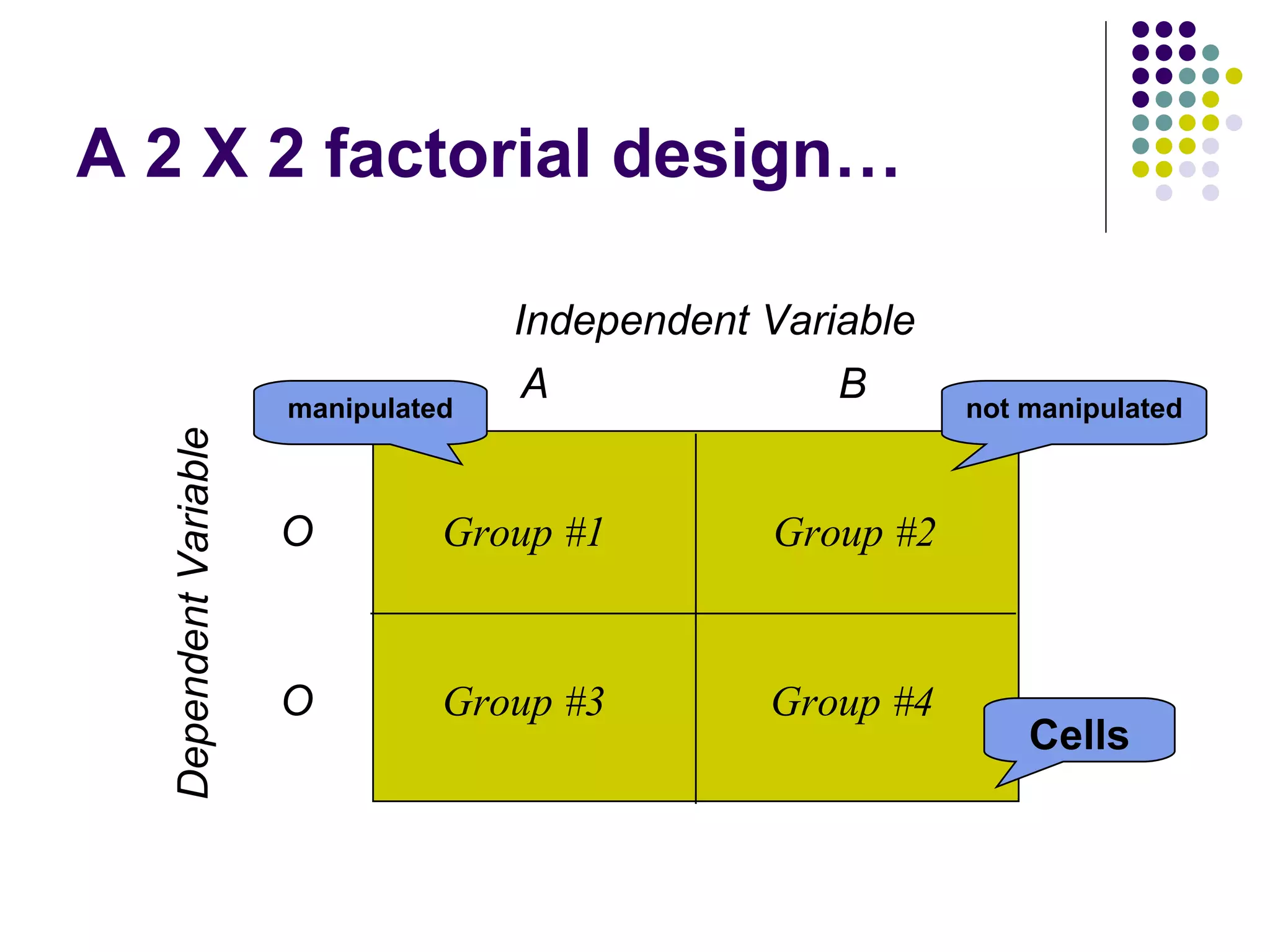

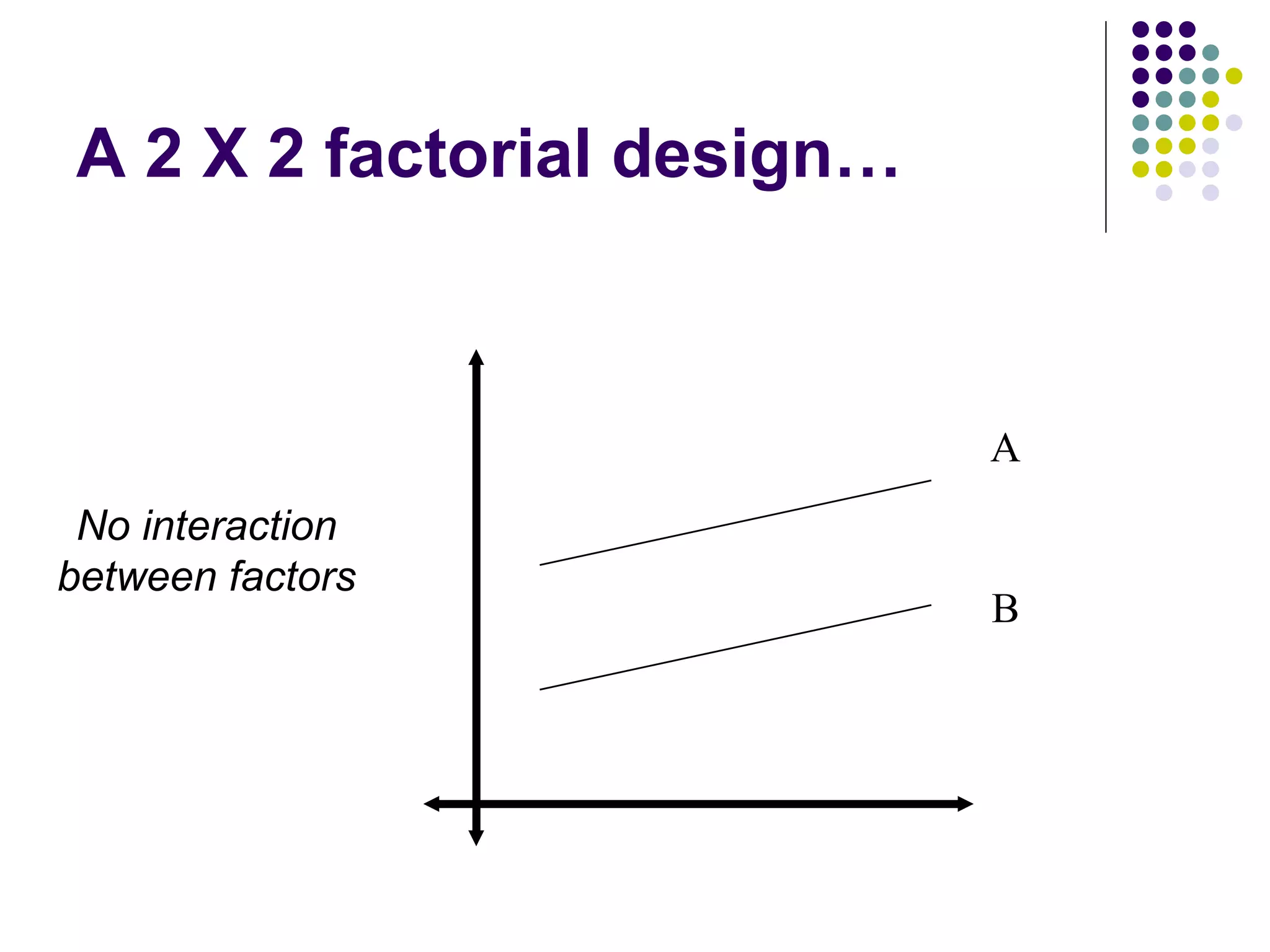

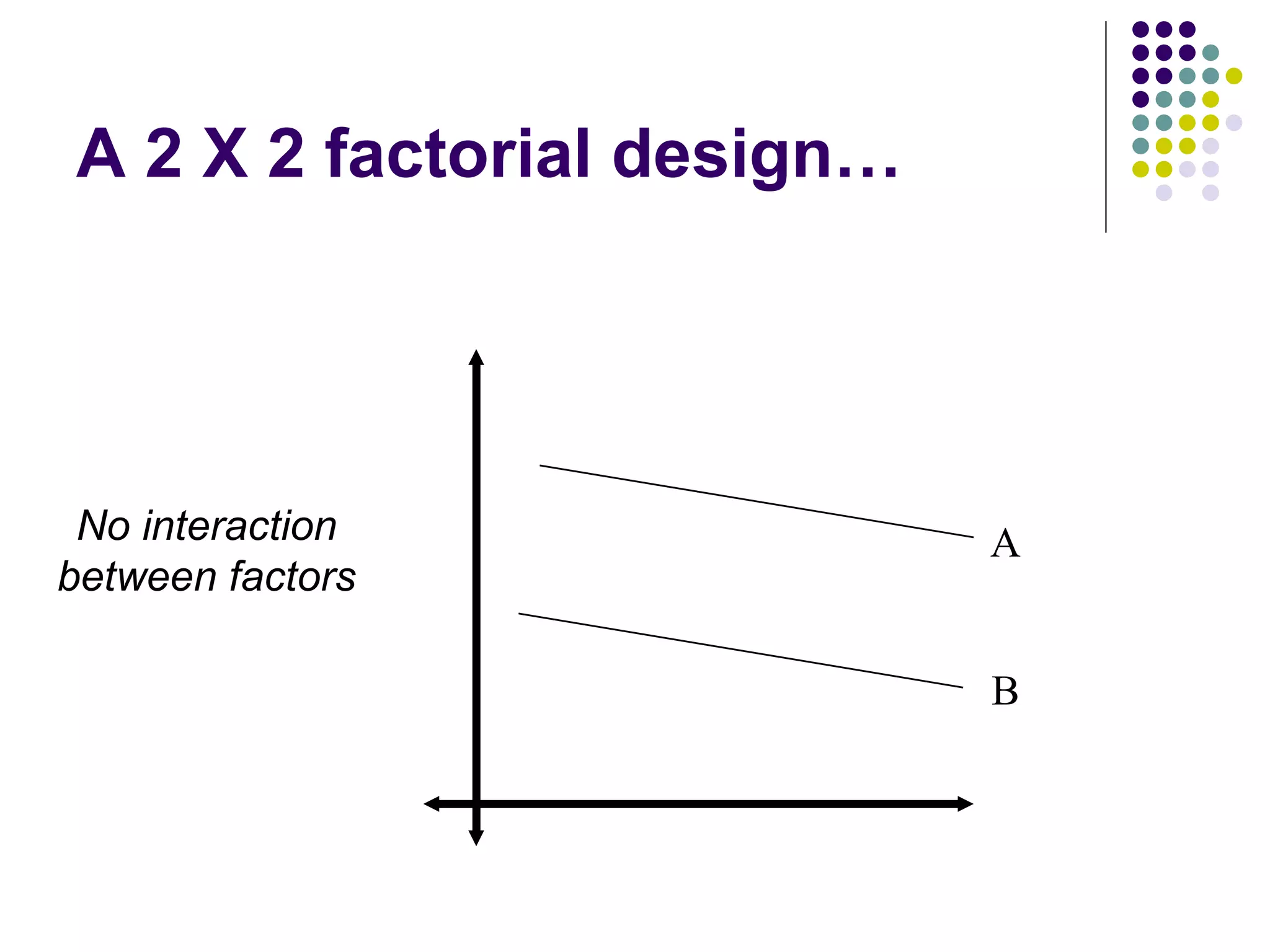

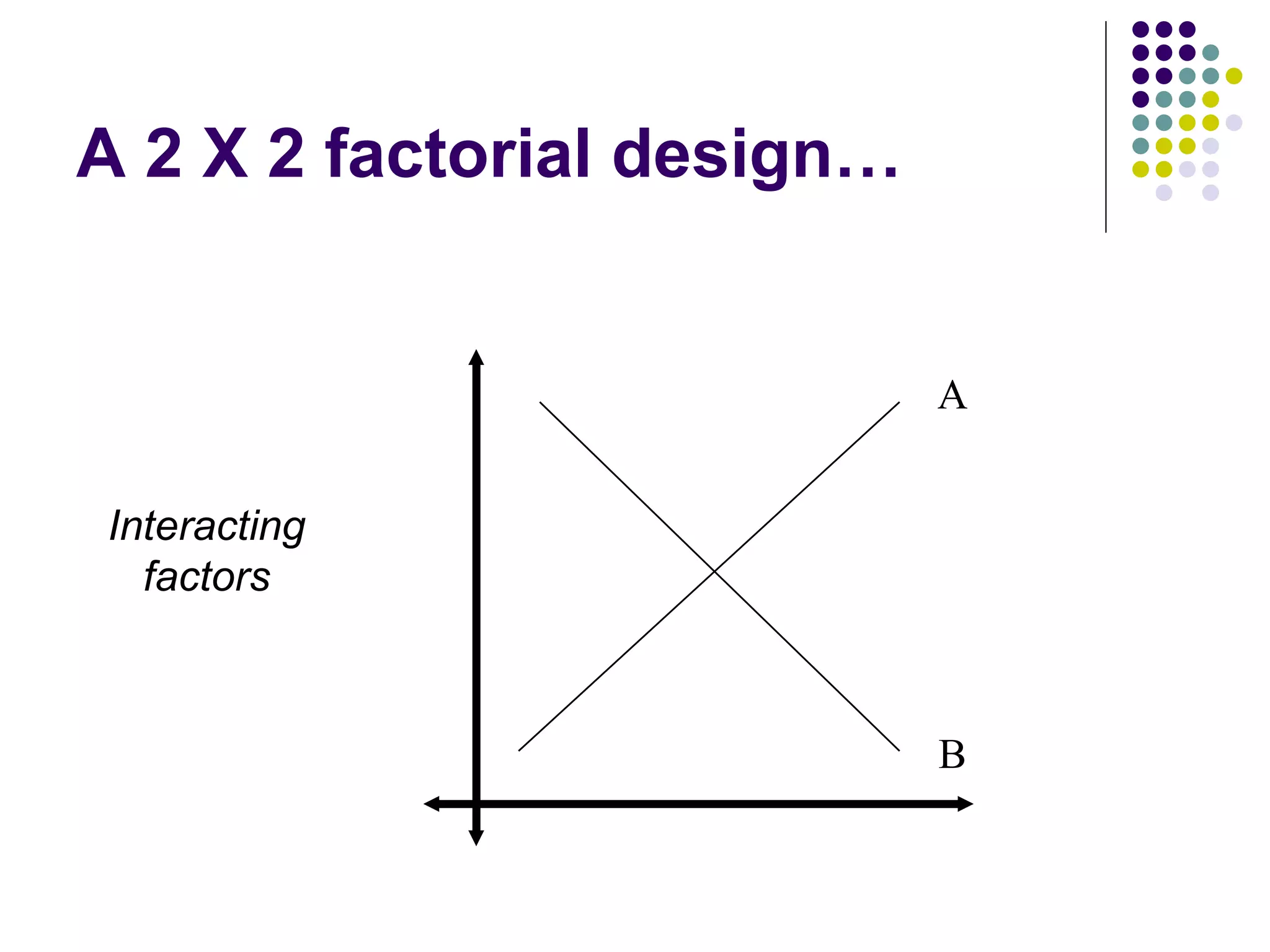

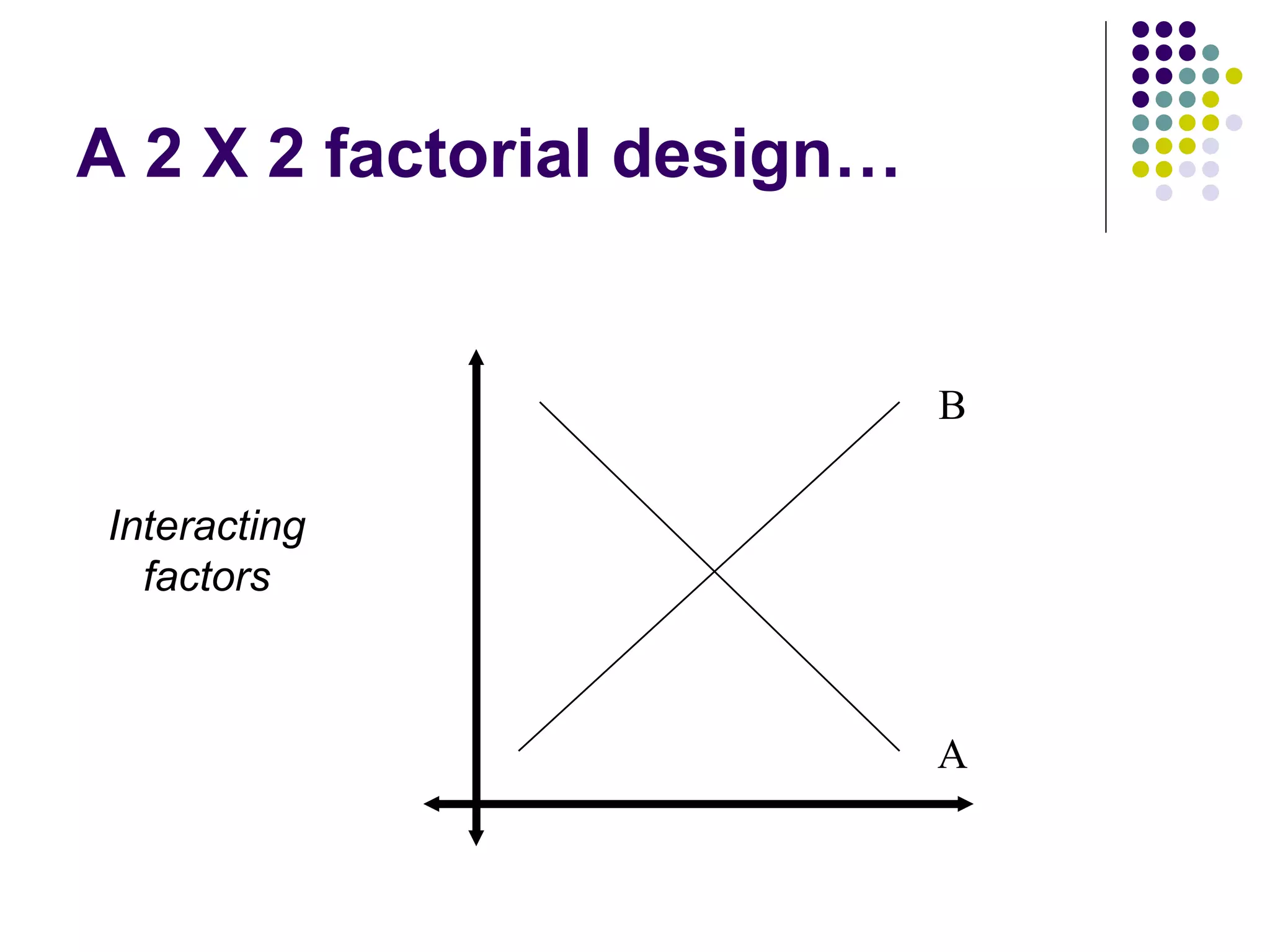

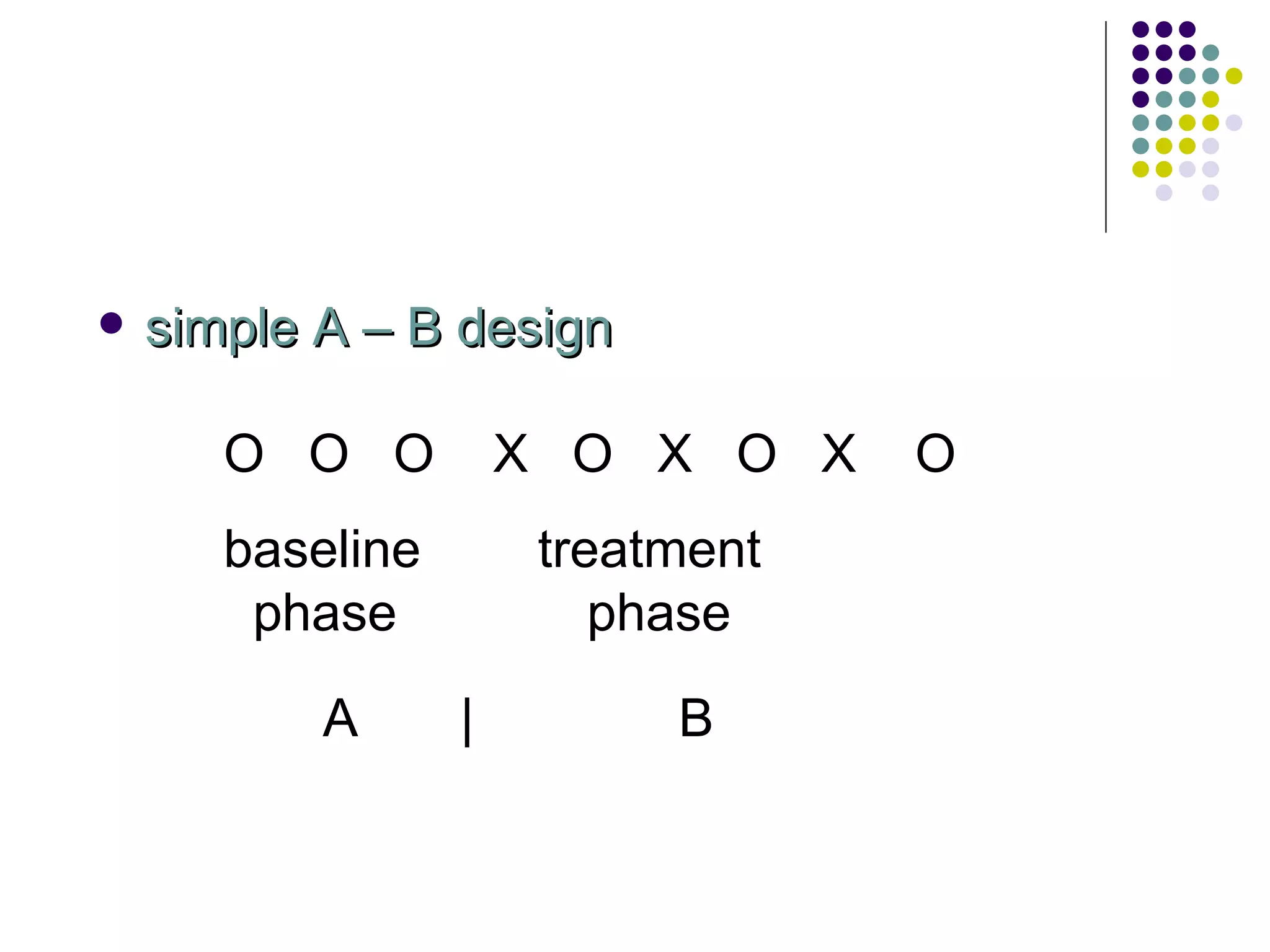

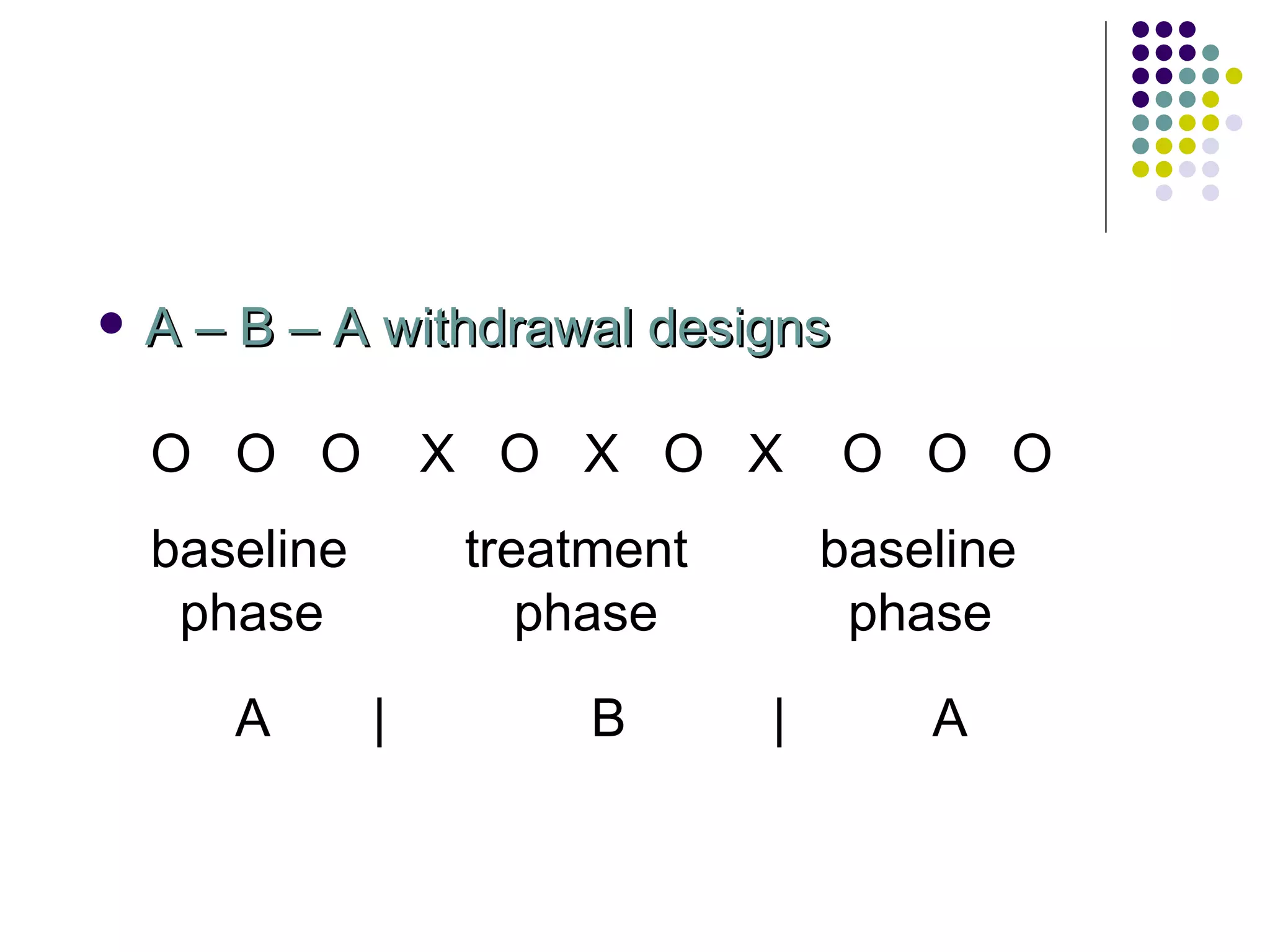

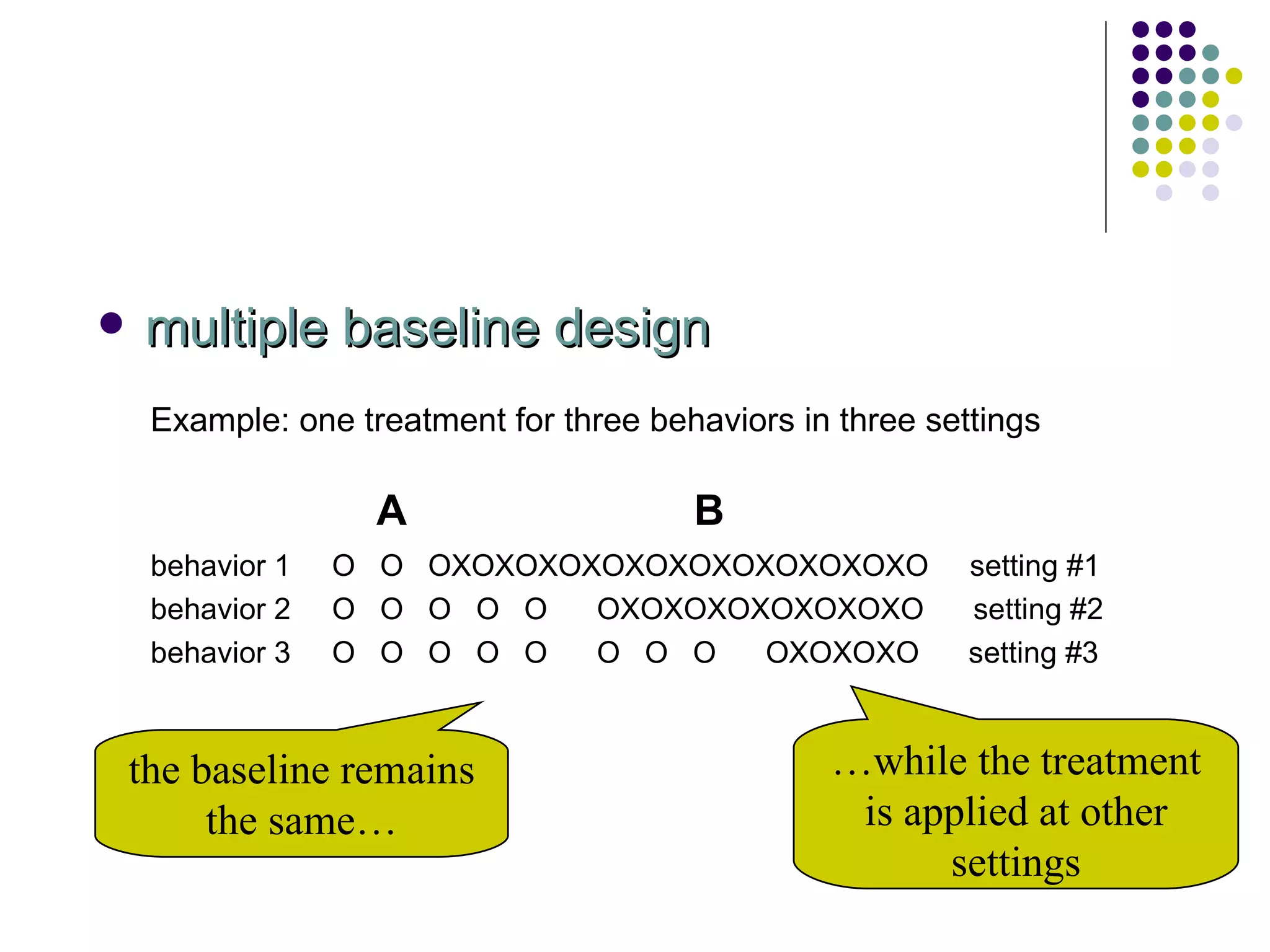

The document discusses several research methods and designs used in educational research, including causal-comparative, experimental, qualitative, and quantitative approaches. Causal-comparative research compares at least two groups on a dependent variable because the independent variable cannot be manipulated. Experimental designs let researchers control extraneous variables and deliberately manipulate one or more independent variables. True experiments also randomly assign participants to groups. Factorial designs manipulate two or more independent variables, each at two or more levels. Single-subject designs measure the performance of an individual or a small group repeatedly over time, using approaches such as withdrawal (for example, A-B-A) or multiple-baseline designs.
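Random assignment and factorial designs combine naturally. Each combination of independent-variable levels forms one cell, and participants are randomly allocated across those cells. The snippet below is a minimal sketch of that idea, not a procedure taken from the document. The function `assign_factorial`, the factor names `method` and `feedback`, their levels, and the participant IDs are all hypothetical placeholders for a 2 x 2 design.

```python
import itertools
import random


def assign_factorial(participants, factors, seed=None):
    """Randomly assign participants to the cells of a factorial design.

    factors: dict mapping each (hypothetical) independent variable to its levels.
    Returns a dict mapping each cell (a tuple of levels) to its participants.
    """
    rng = random.Random(seed)
    # Every combination of levels is one cell; 2 levels x 2 levels = 4 cells.
    cells = list(itertools.product(*factors.values()))
    shuffled = list(participants)
    rng.shuffle(shuffled)  # random assignment: position no longer depends on the participant
    groups = {cell: [] for cell in cells}
    # Deal the shuffled list round-robin so cell sizes differ by at most one.
    for i, person in enumerate(shuffled):
        groups[cells[i % len(cells)]].append(person)
    return groups


# Hypothetical 2 x 2 design: instructional method crossed with feedback timing.
factors = {
    "method": ["lecture", "discussion"],
    "feedback": ["immediate", "delayed"],
}
participants = [f"S{n:02d}" for n in range(1, 21)]
groups = assign_factorial(participants, factors, seed=1)
for cell, members in groups.items():
    print(cell, len(members))
```

With 20 participants and four cells, each cell receives five participants. Which participants land in which cell depends only on the shuffle, so the groups differ systematically only in the manipulated factors. That is the property random assignment is meant to provide.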