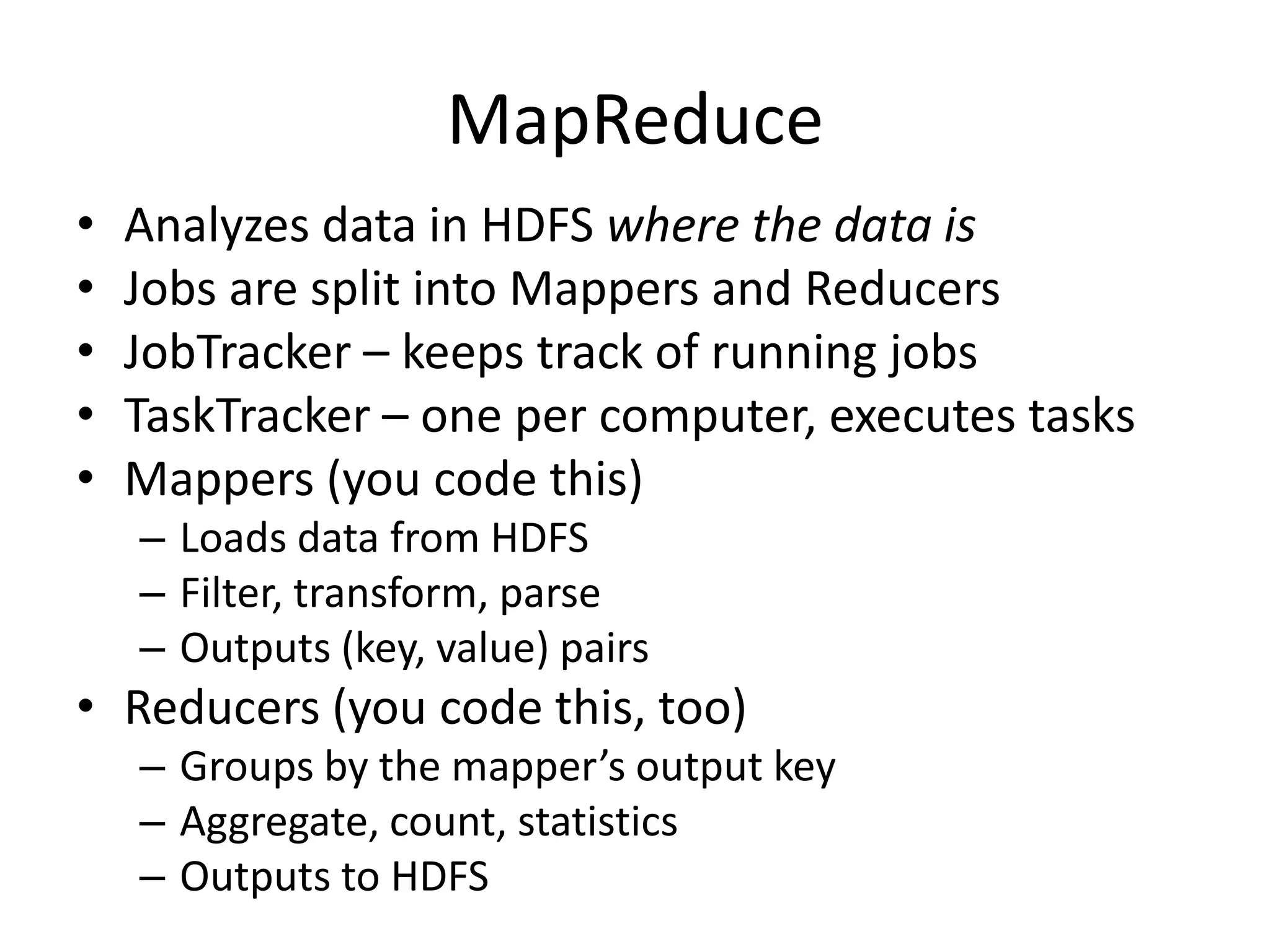

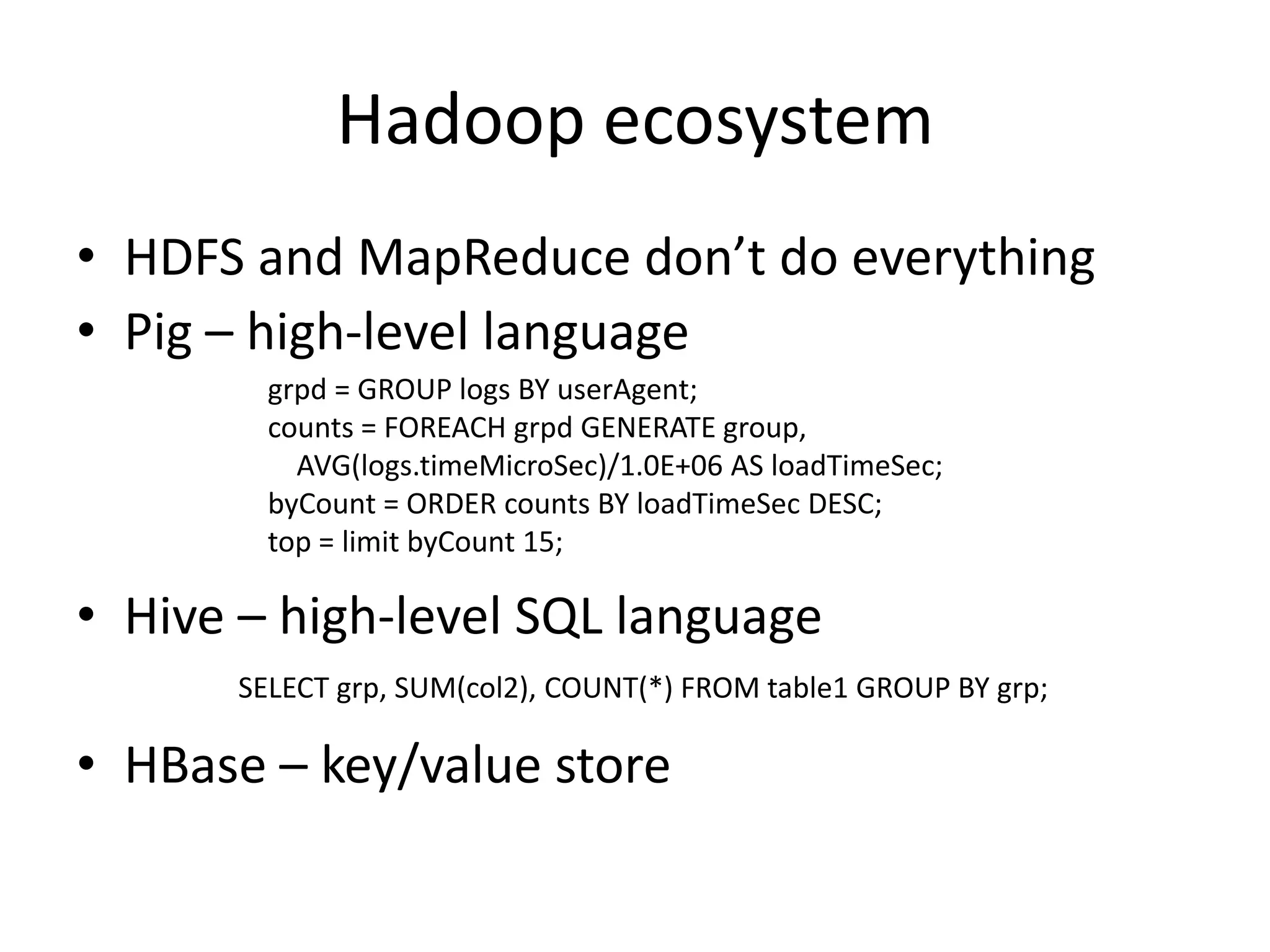

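Hadoop is an open-source distributed platform for storing and processing large volumes of data on clusters of commodity hardware. Much of its early development took place at Yahoo!. Its core components include the Hadoop Distributed File System (HDFS), which stores data across the machines in a cluster, and MapReduce, a framework for analyzing that data in parallel. Around this core sits an ecosystem of tools for other data processing tasks. Examples include HBase (a column-oriented database built on HDFS), Pig (a high-level dataflow scripting language), and Hive (SQL-like querying through HiveQL). Hadoop excels at batch processing of both structured and unstructured data. However, it is poorly suited to real-time updates and is not known for its ease of use.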
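To make the MapReduce model more concrete, here is a minimal single-process Python sketch of the classic word-count job. It is only an in-memory imitation of the map, shuffle, and reduce phases. It does not use Hadoop's Java API or HDFS, and the function names (`map_phase`, `shuffle`, `reduce_phase`, `word_count`) are hypothetical and chosen for illustration.

```python
from collections import defaultdict
from typing import Iterable, Iterator


def map_phase(line: str) -> Iterator[tuple[str, int]]:
    # Mapper (hypothetical name): emit (word, 1) for every whitespace-separated token.
    for word in line.lower().split():
        yield word, 1


def shuffle(pairs: Iterable[tuple[str, int]]) -> dict[str, list[int]]:
    # Shuffle/sort: group every value emitted for the same key, ordered by key.
    groups: dict[str, list[int]] = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return dict(sorted(groups.items()))


def reduce_phase(key: str, values: list[int]) -> tuple[str, int]:
    # Reducer: combine all grouped values for a single key.
    return key, sum(values)


def word_count(lines: Iterable[str]) -> dict[str, int]:
    # Chain the three phases: map each input line, group by key, then reduce each group.
    mapped = (pair for line in lines for pair in map_phase(line))
    grouped = shuffle(mapped)
    return dict(reduce_phase(key, values) for key, values in grouped.items())


if __name__ == "__main__":
    docs = ["HDFS stores blocks", "MapReduce processes blocks"]
    print(word_count(docs))
    # → {'blocks': 2, 'hdfs': 1, 'mapreduce': 1, 'processes': 1, 'stores': 1}
```

On a real cluster, the phases are distributed rather than run in one process. Mappers run in parallel, typically close to the HDFS blocks that hold their input, and the shuffle moves intermediate key/value pairs across the network to the reducers. This batch-oriented, multi-stage design is part of why Hadoop suits large offline jobs better than real-time updates.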