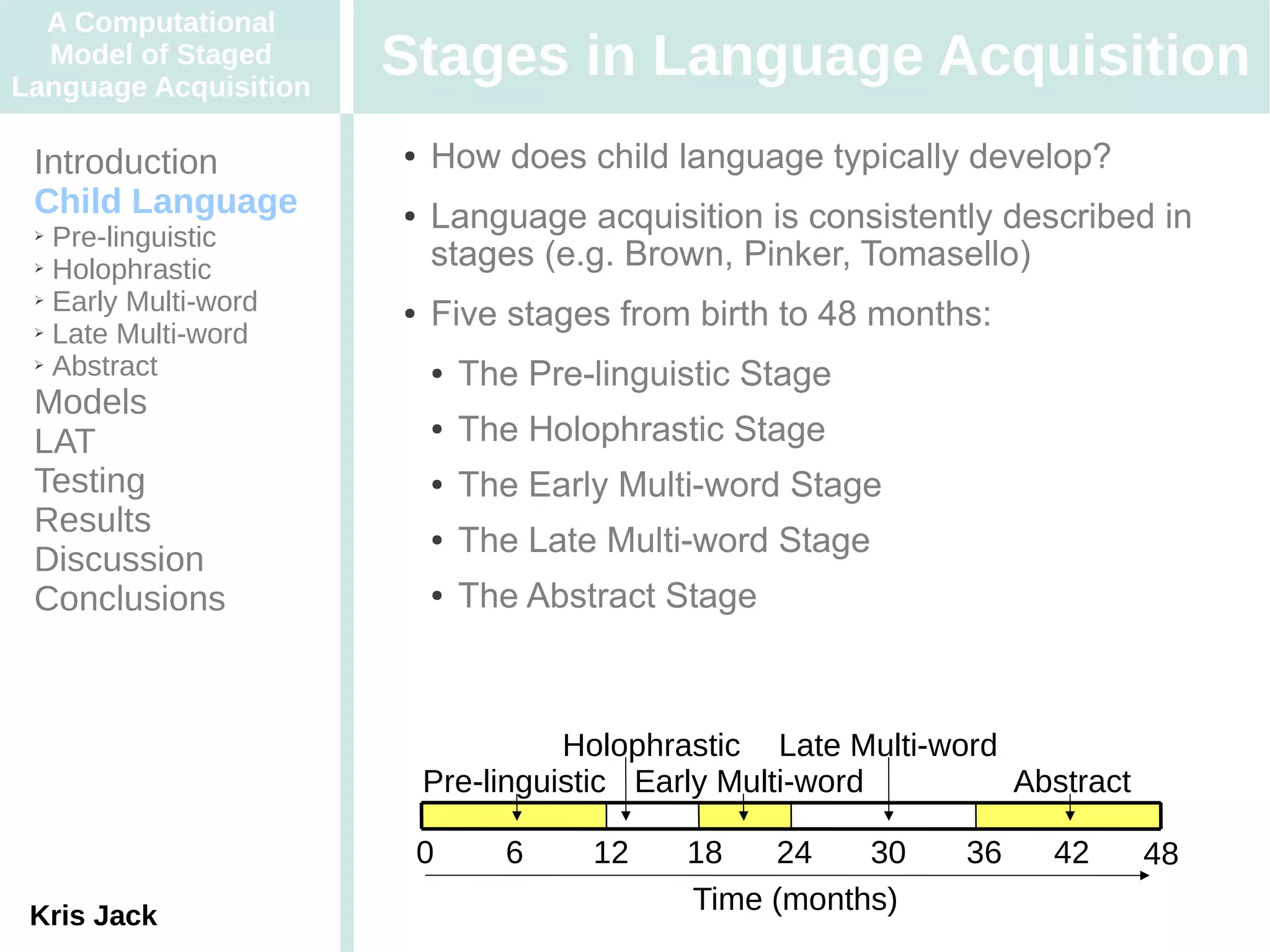

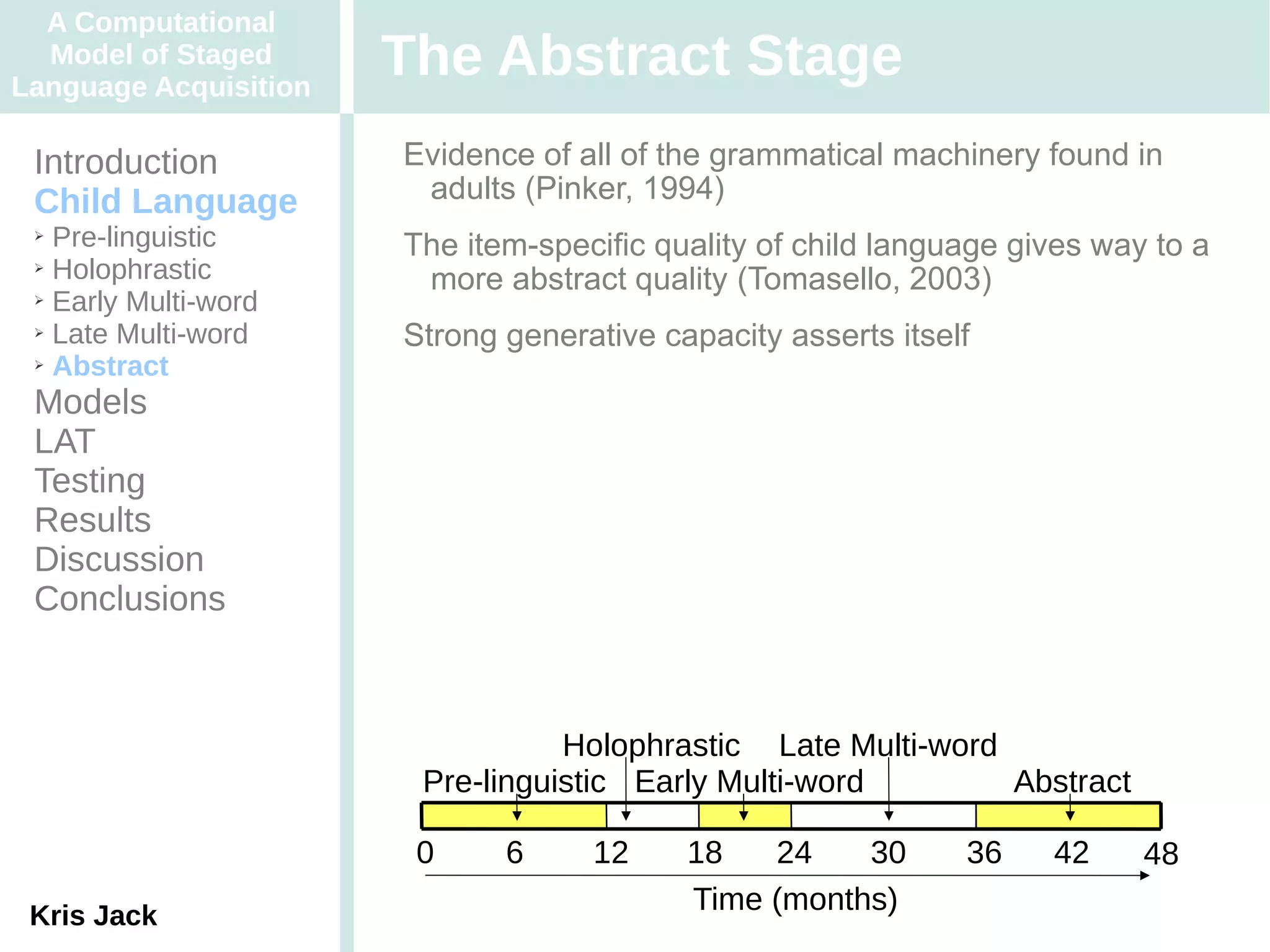

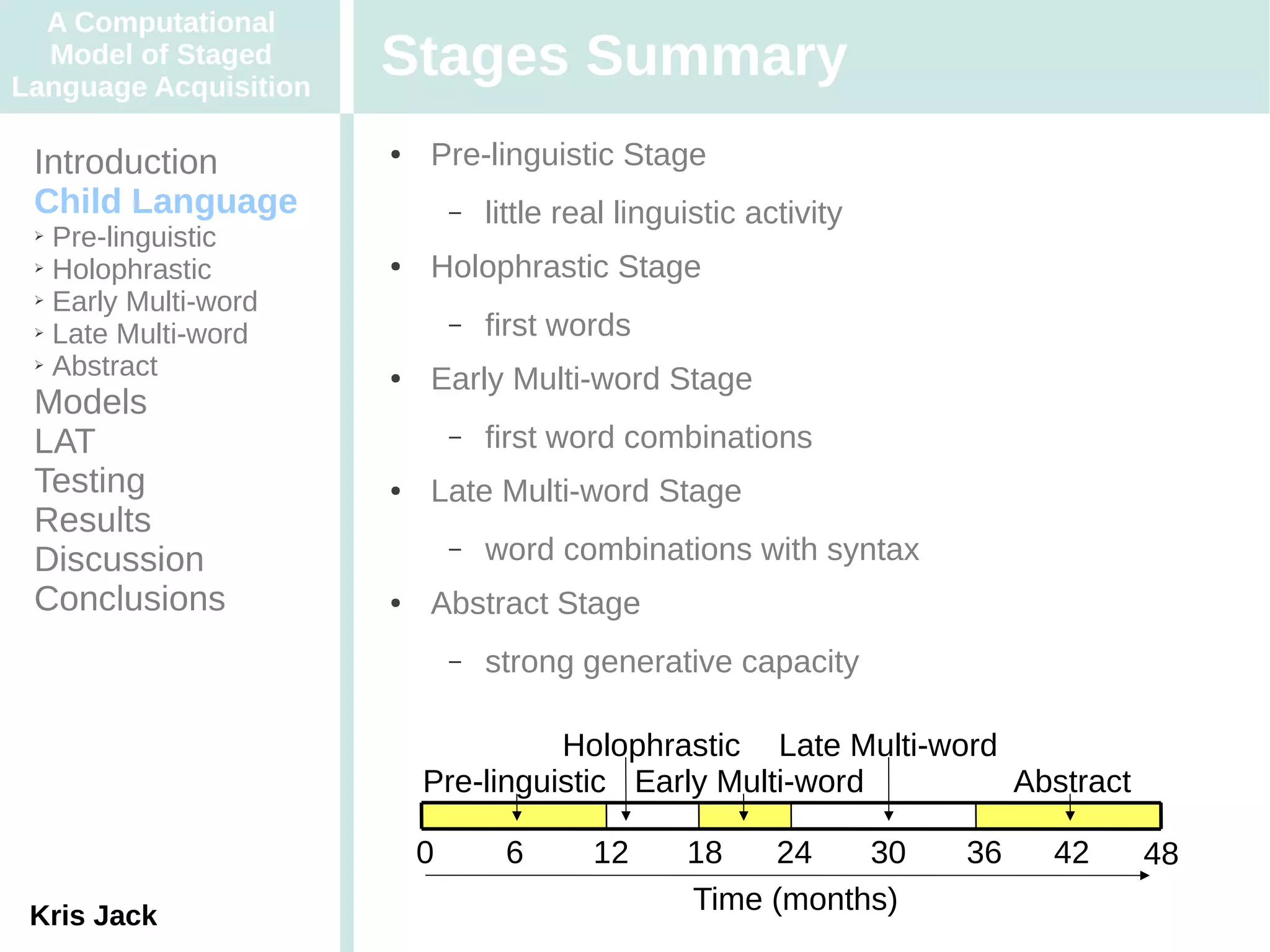

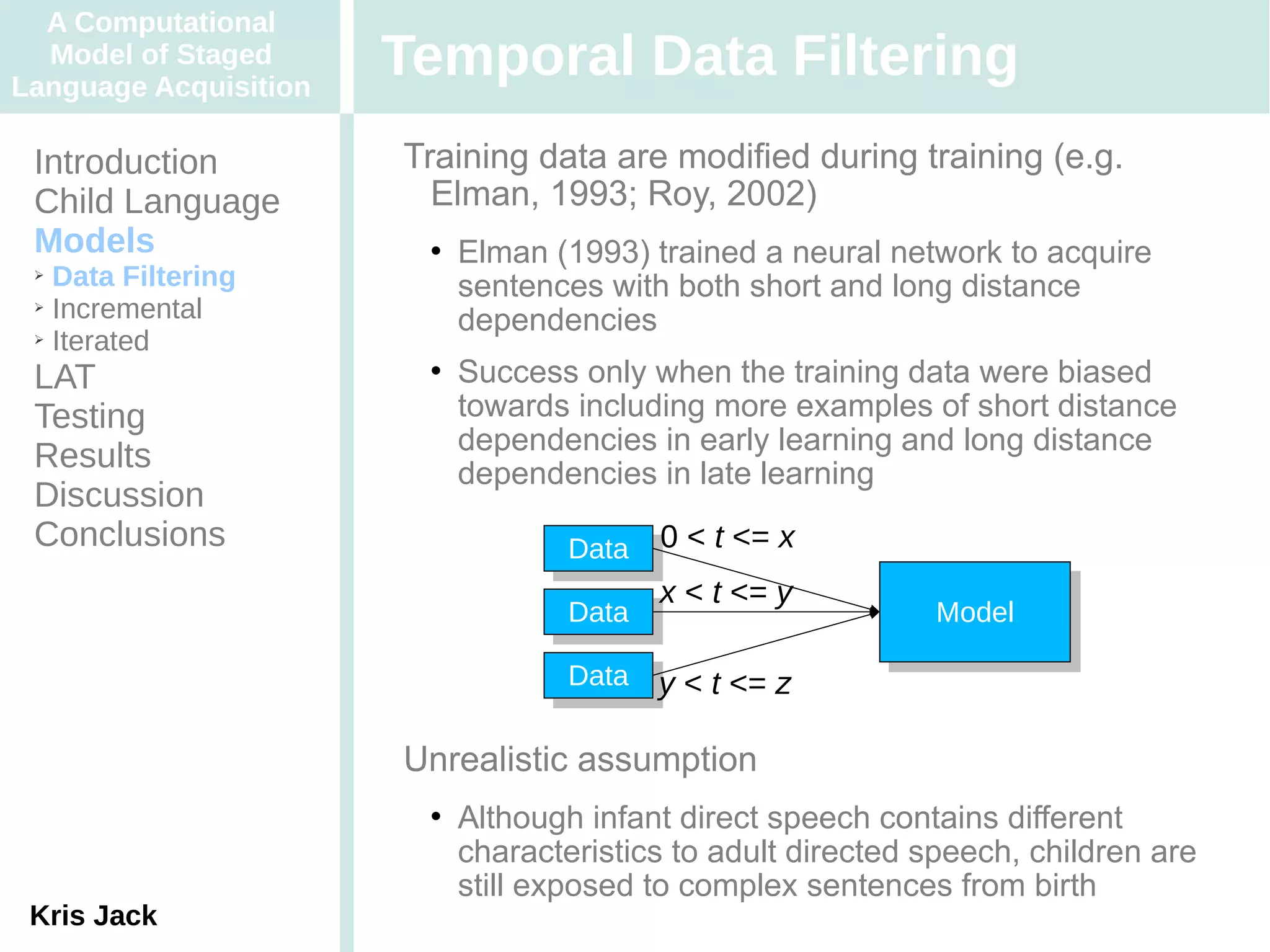

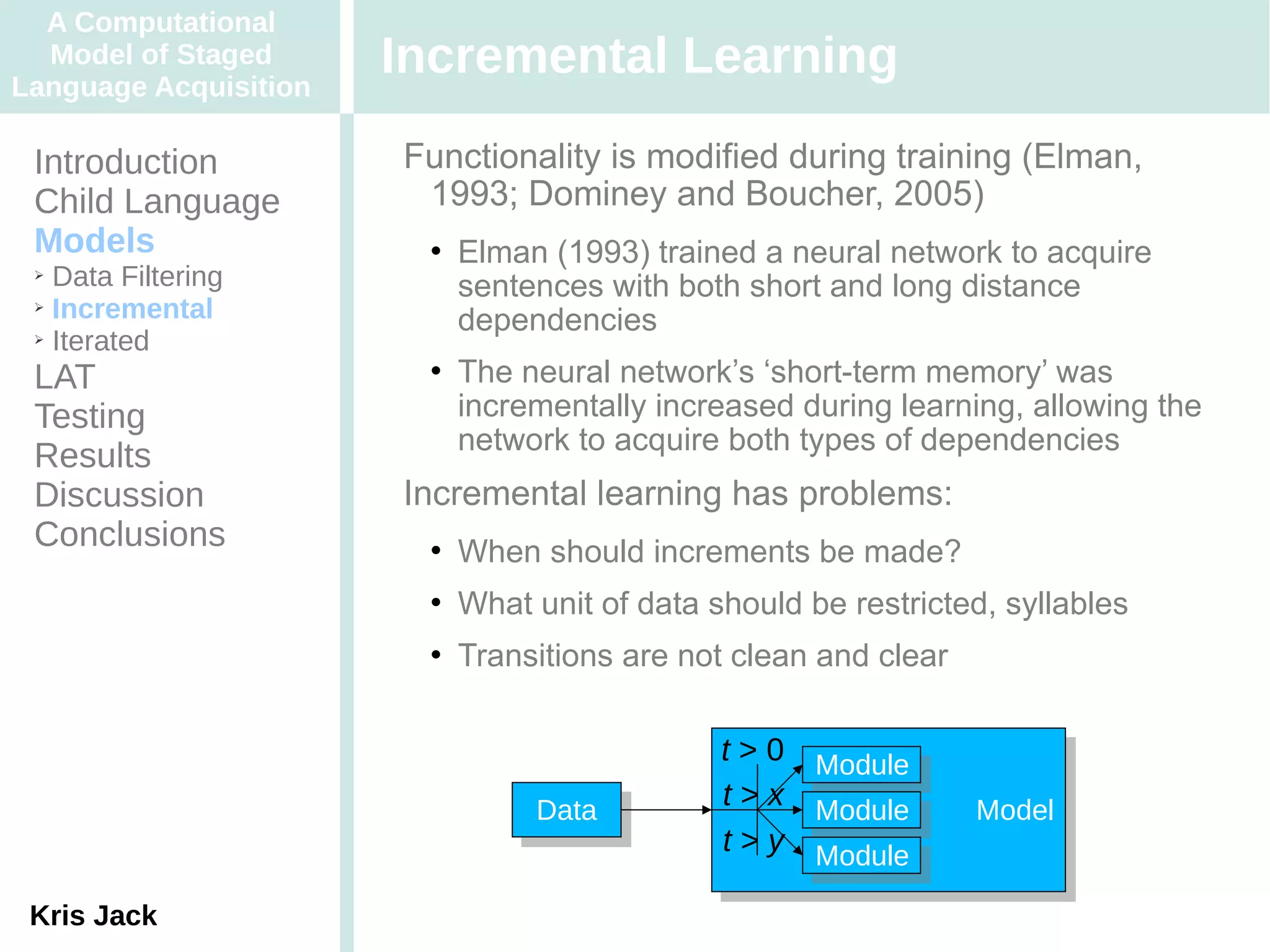

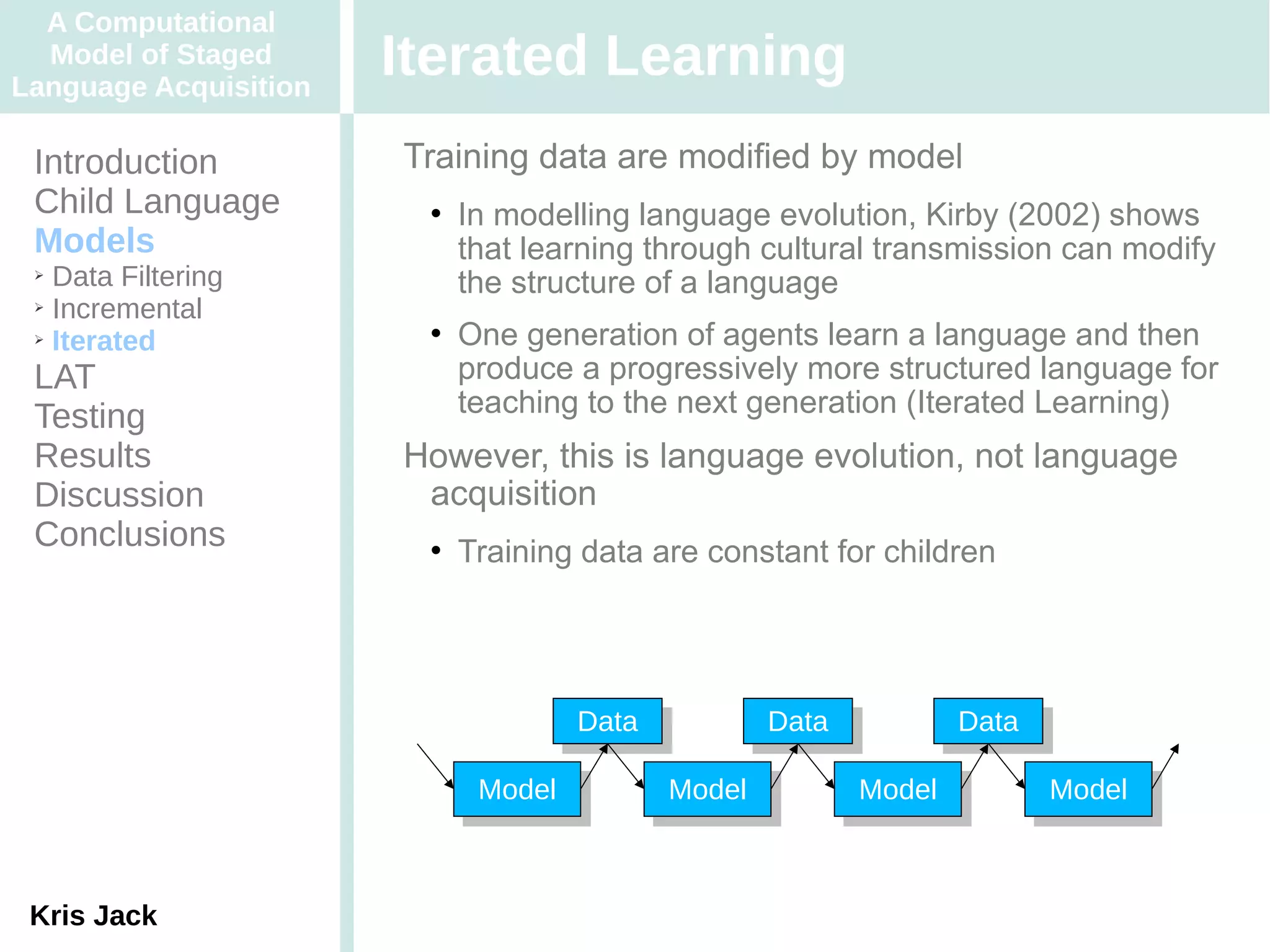

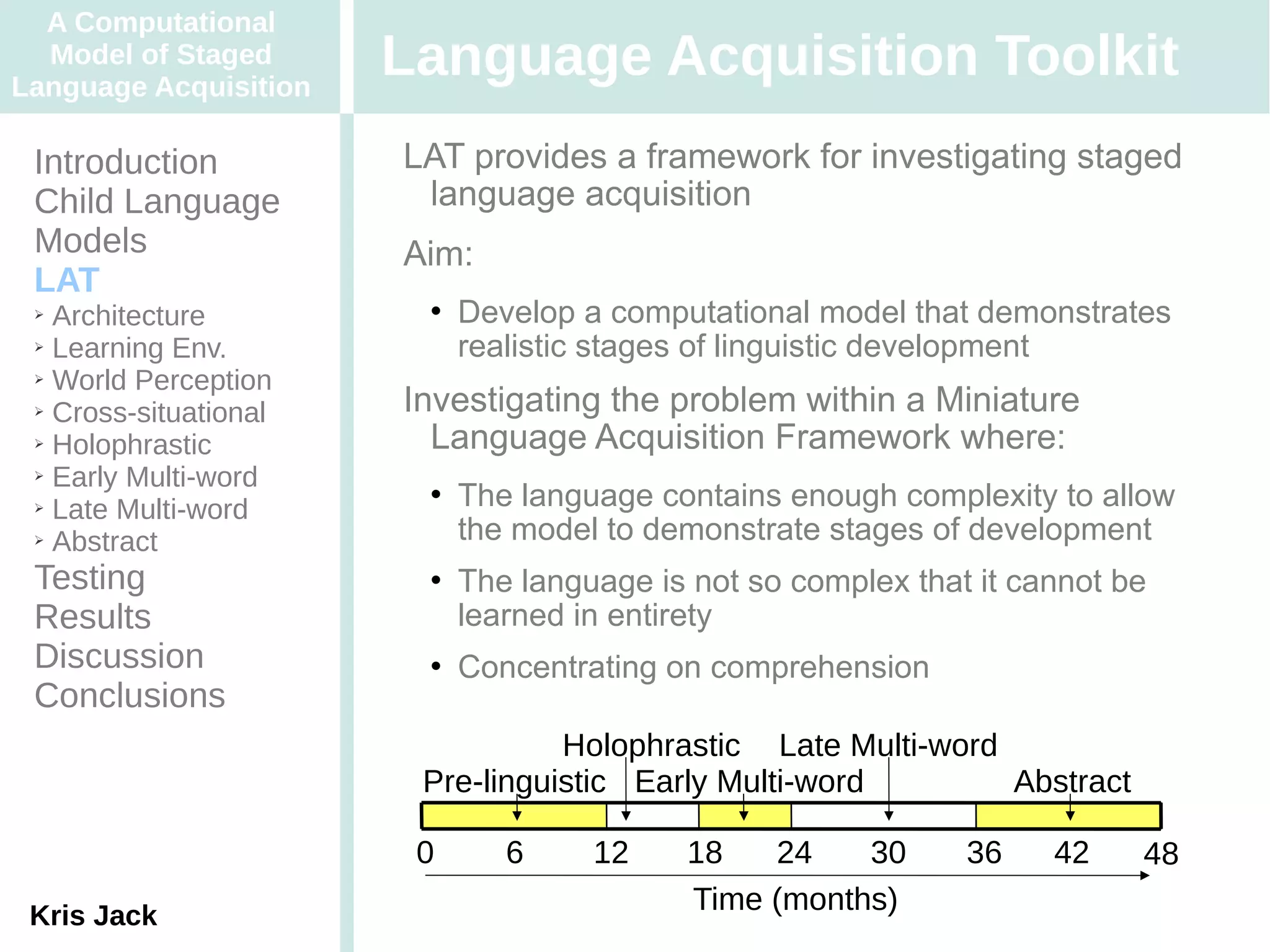

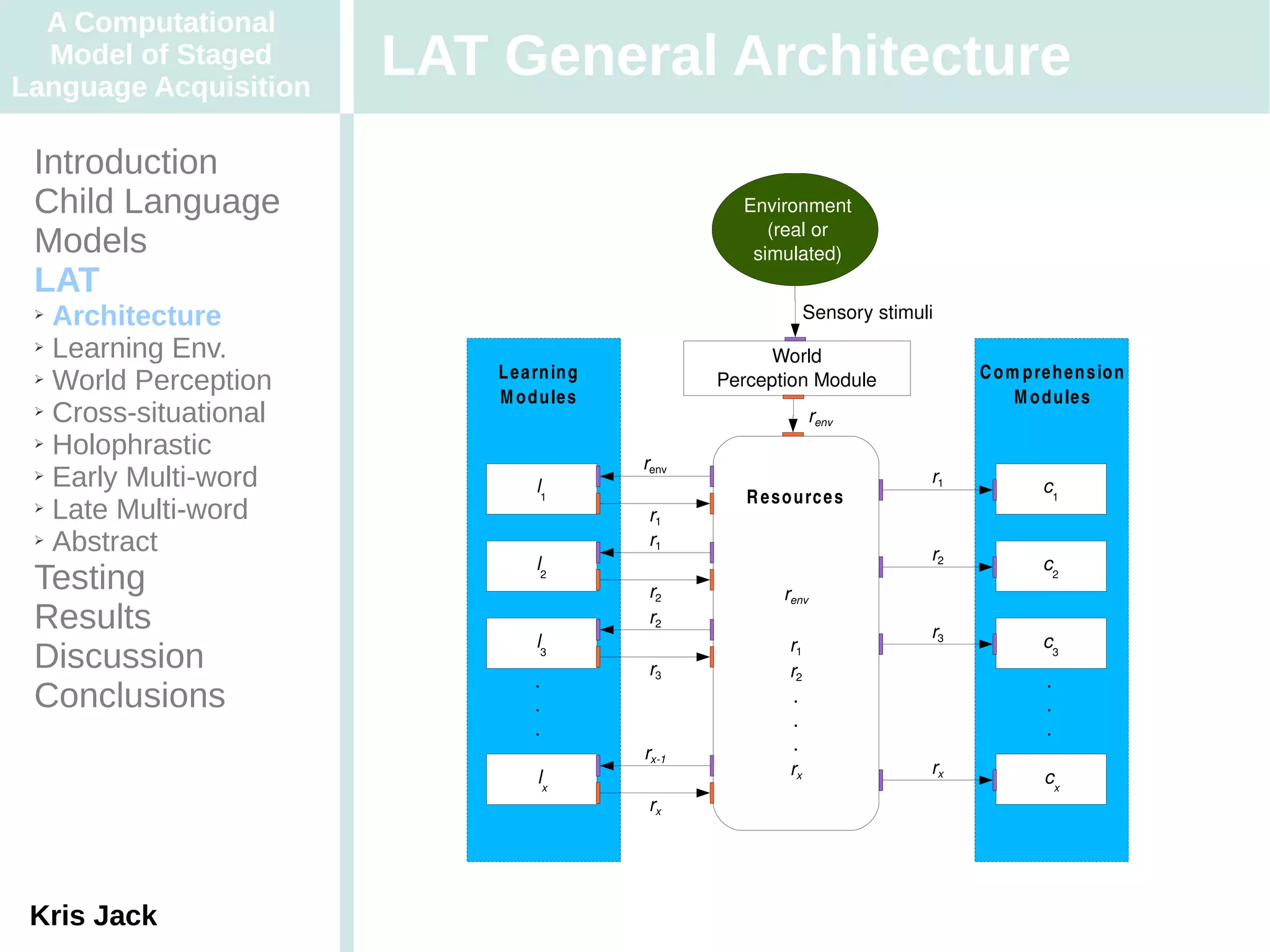

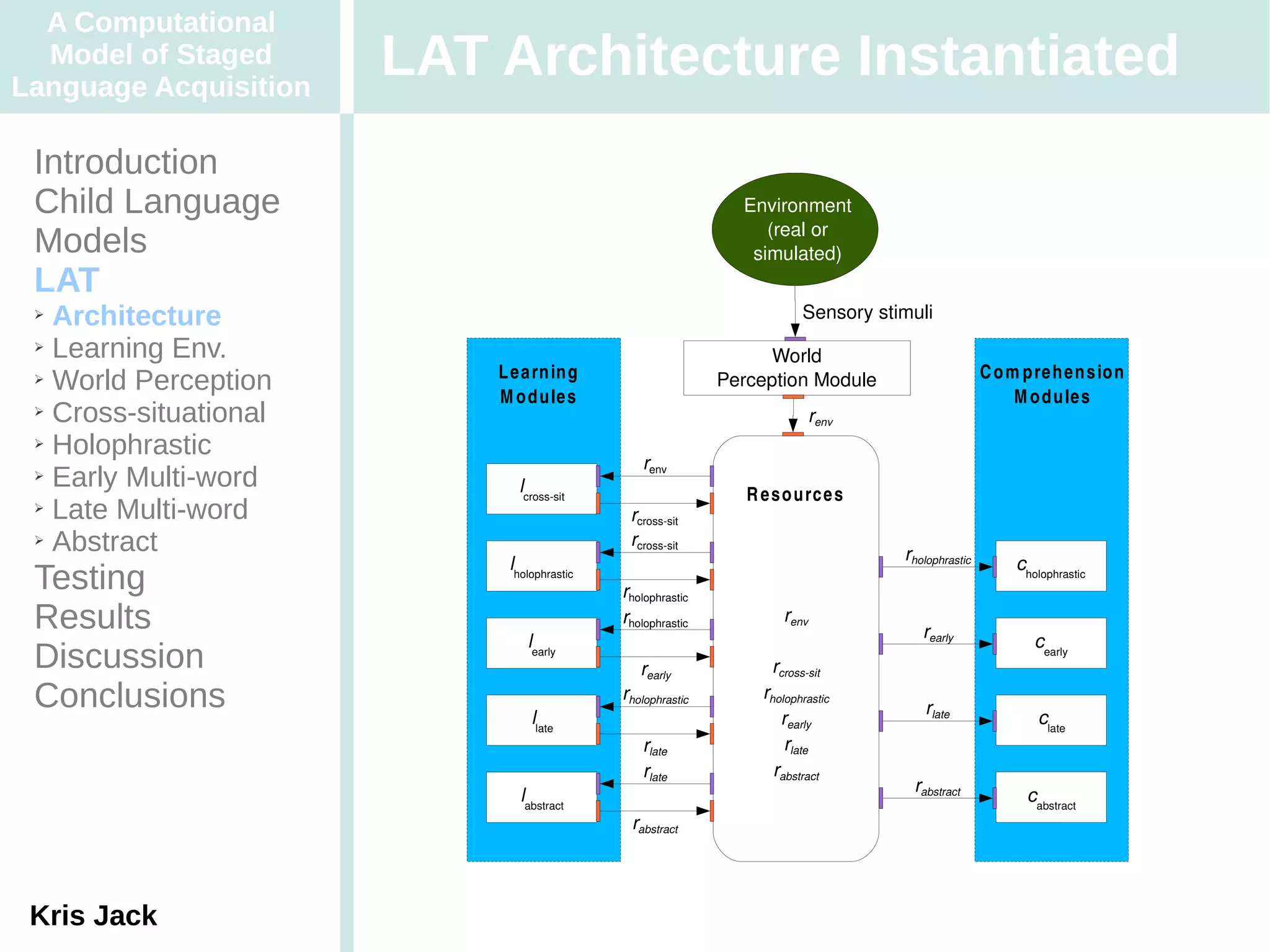

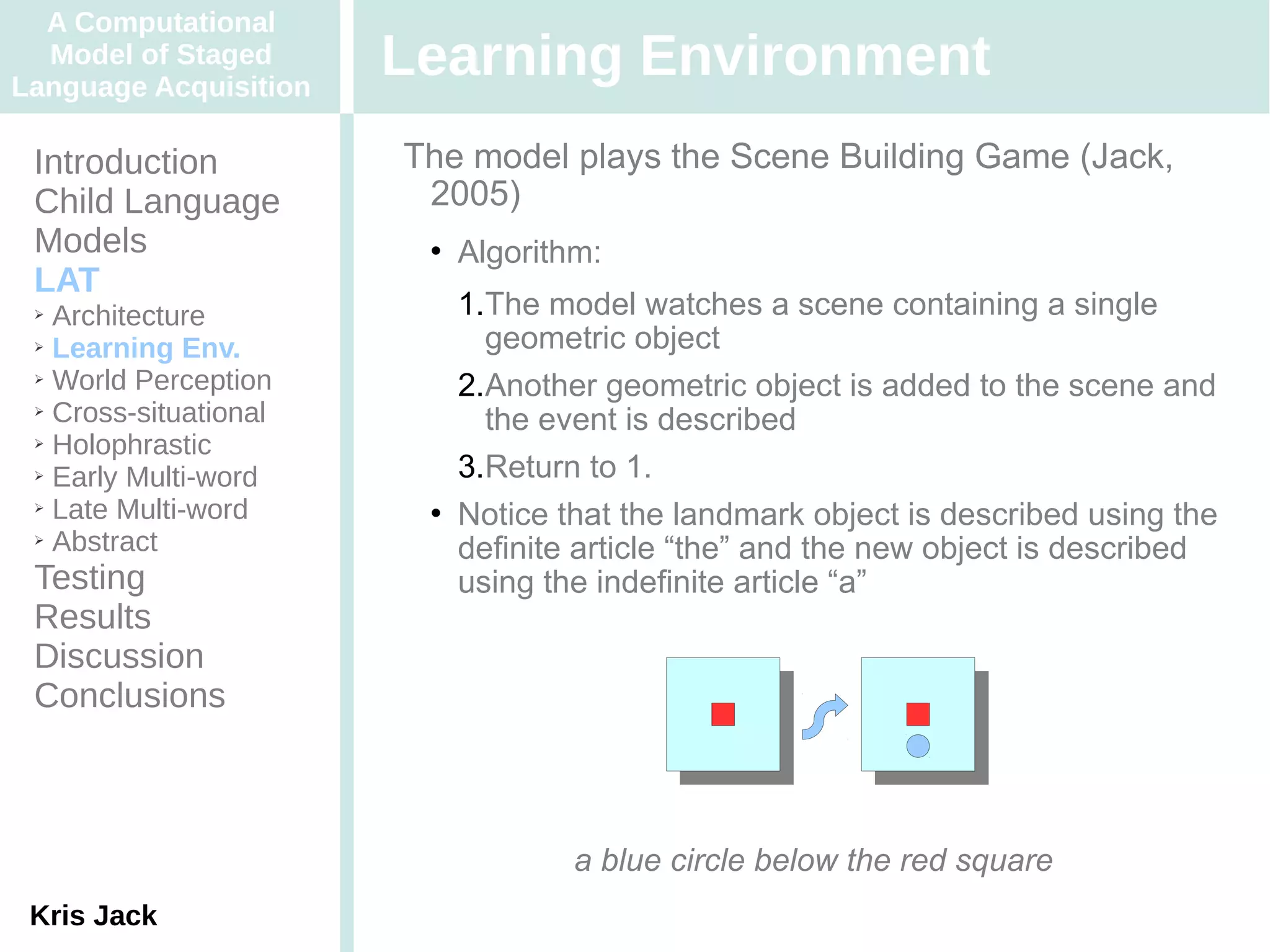

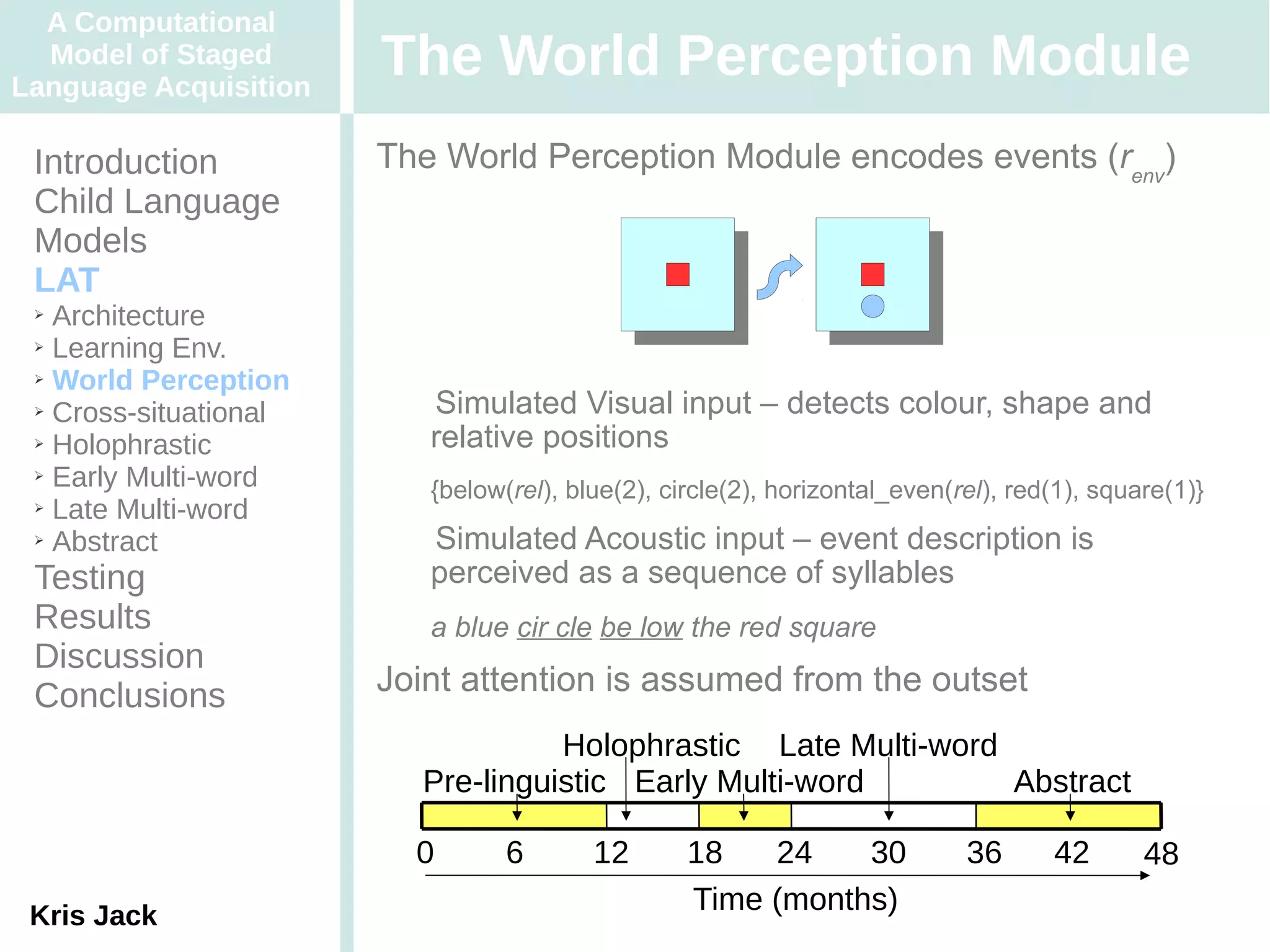

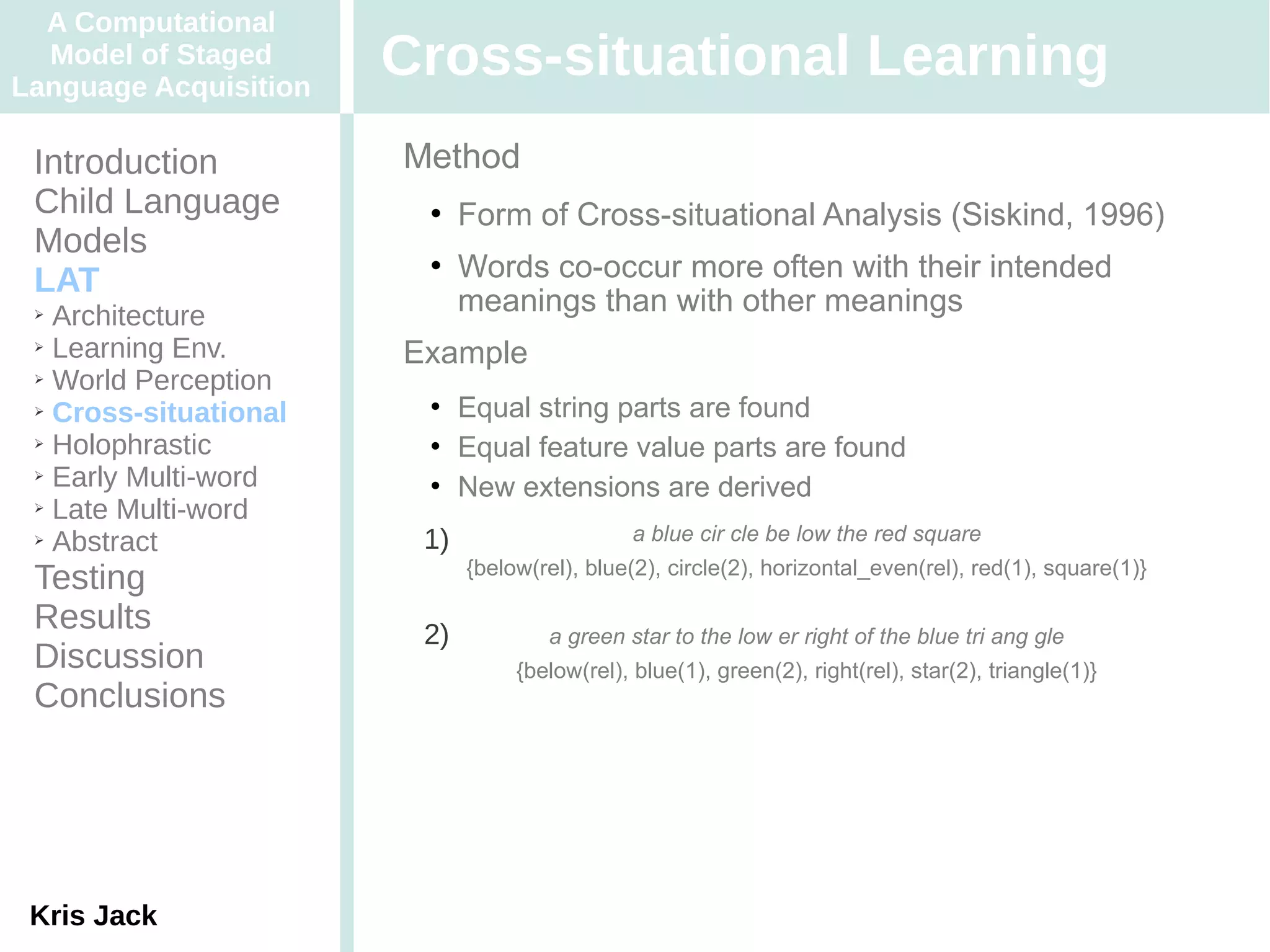

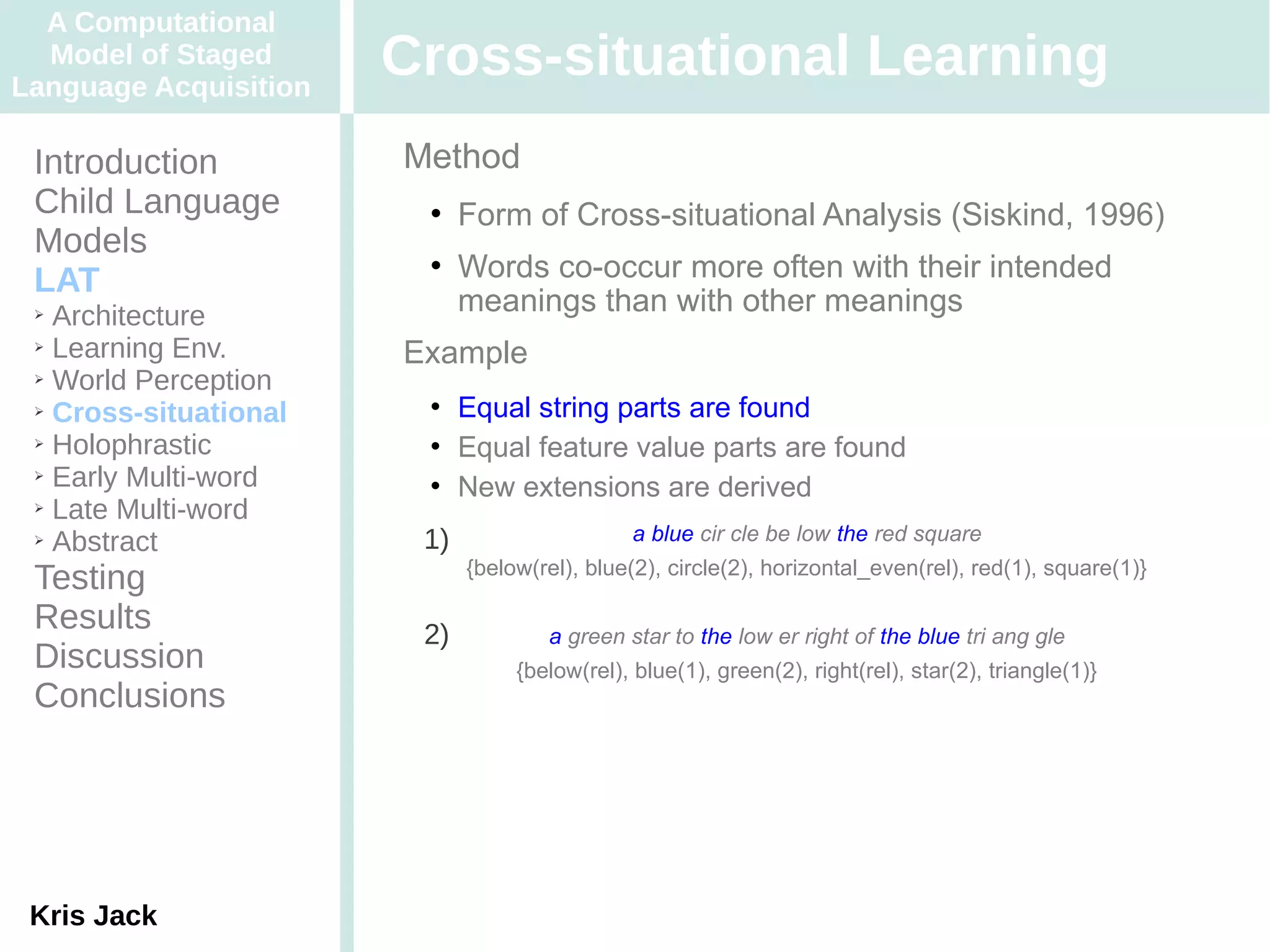

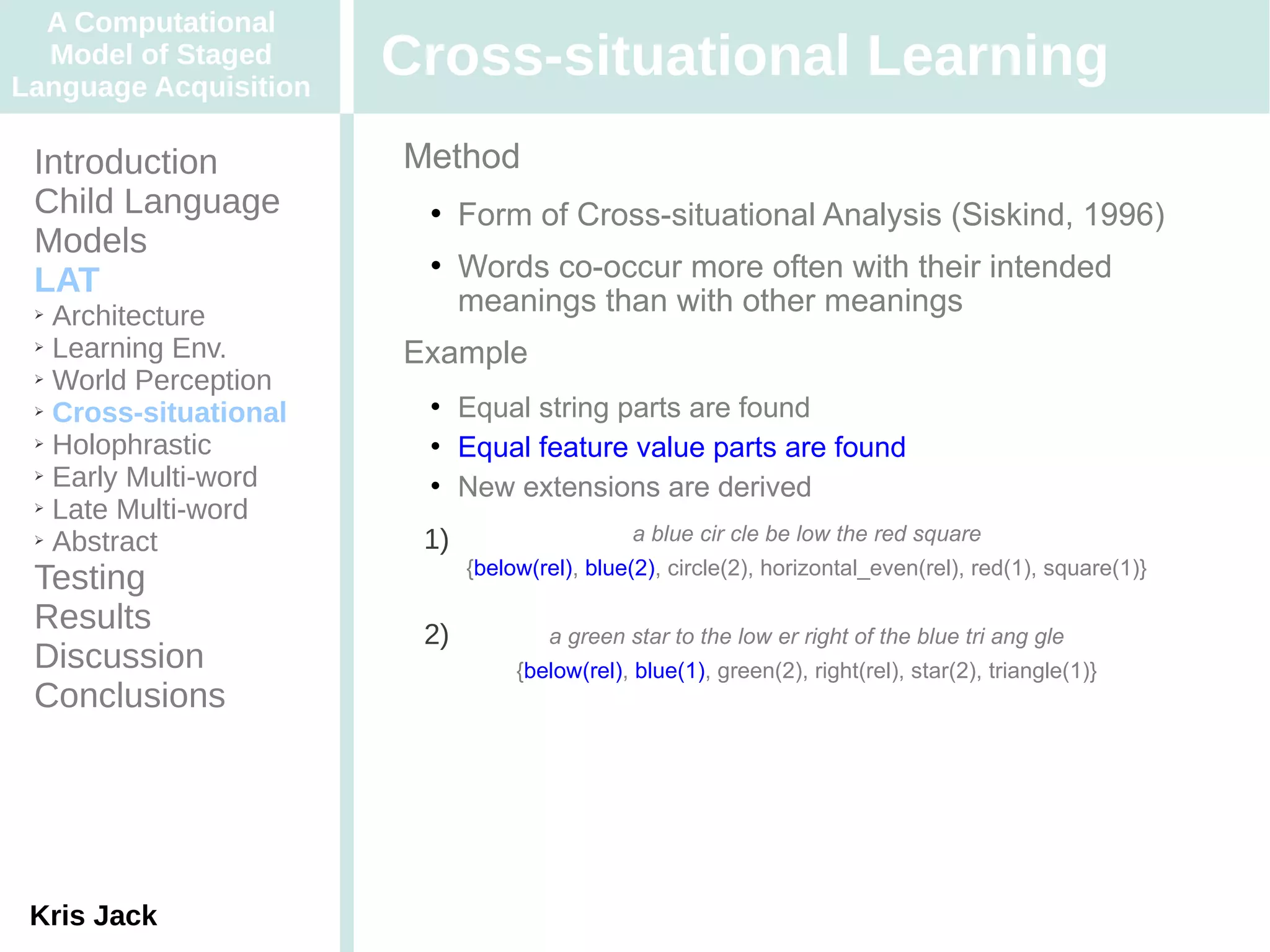

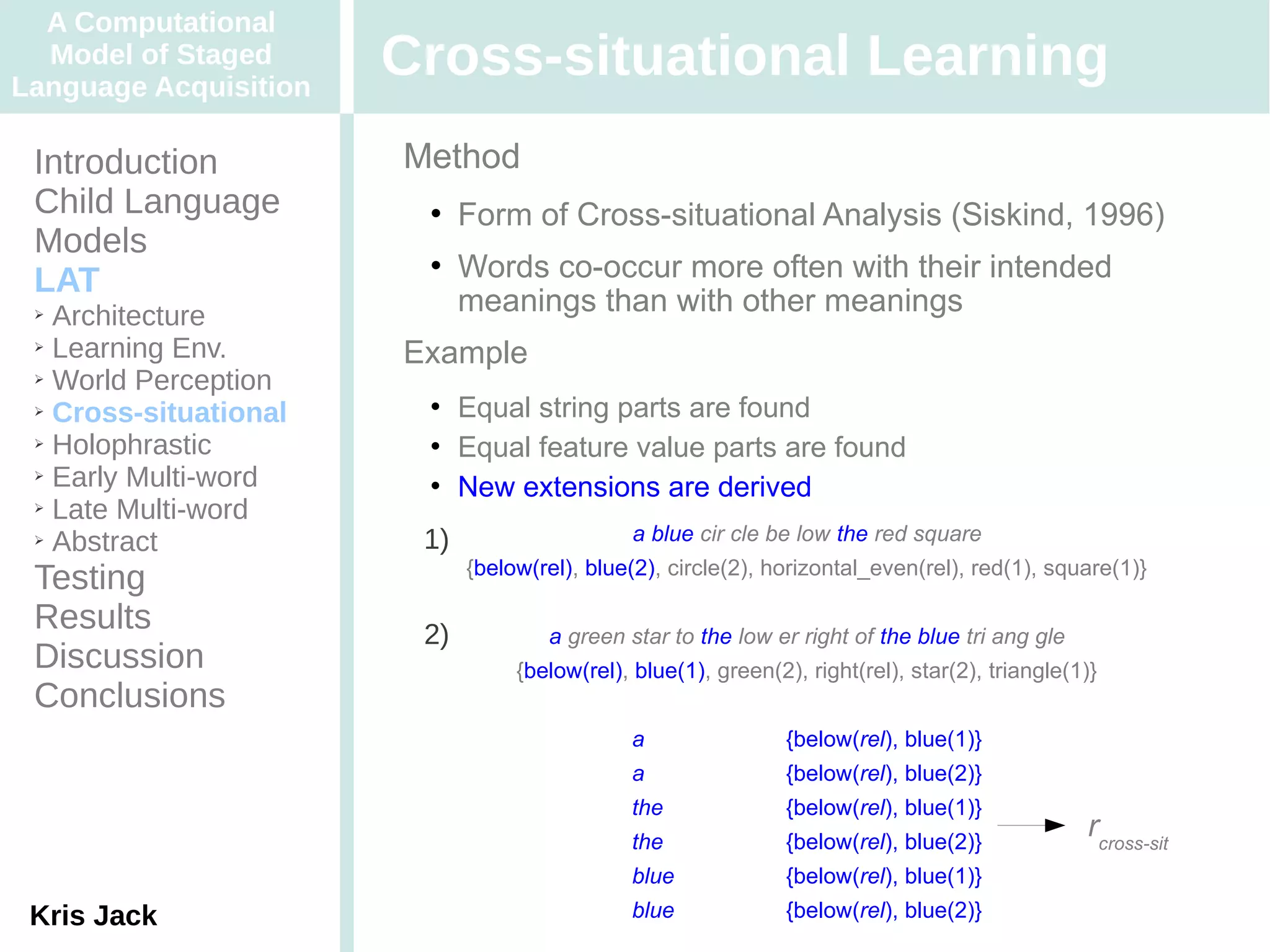

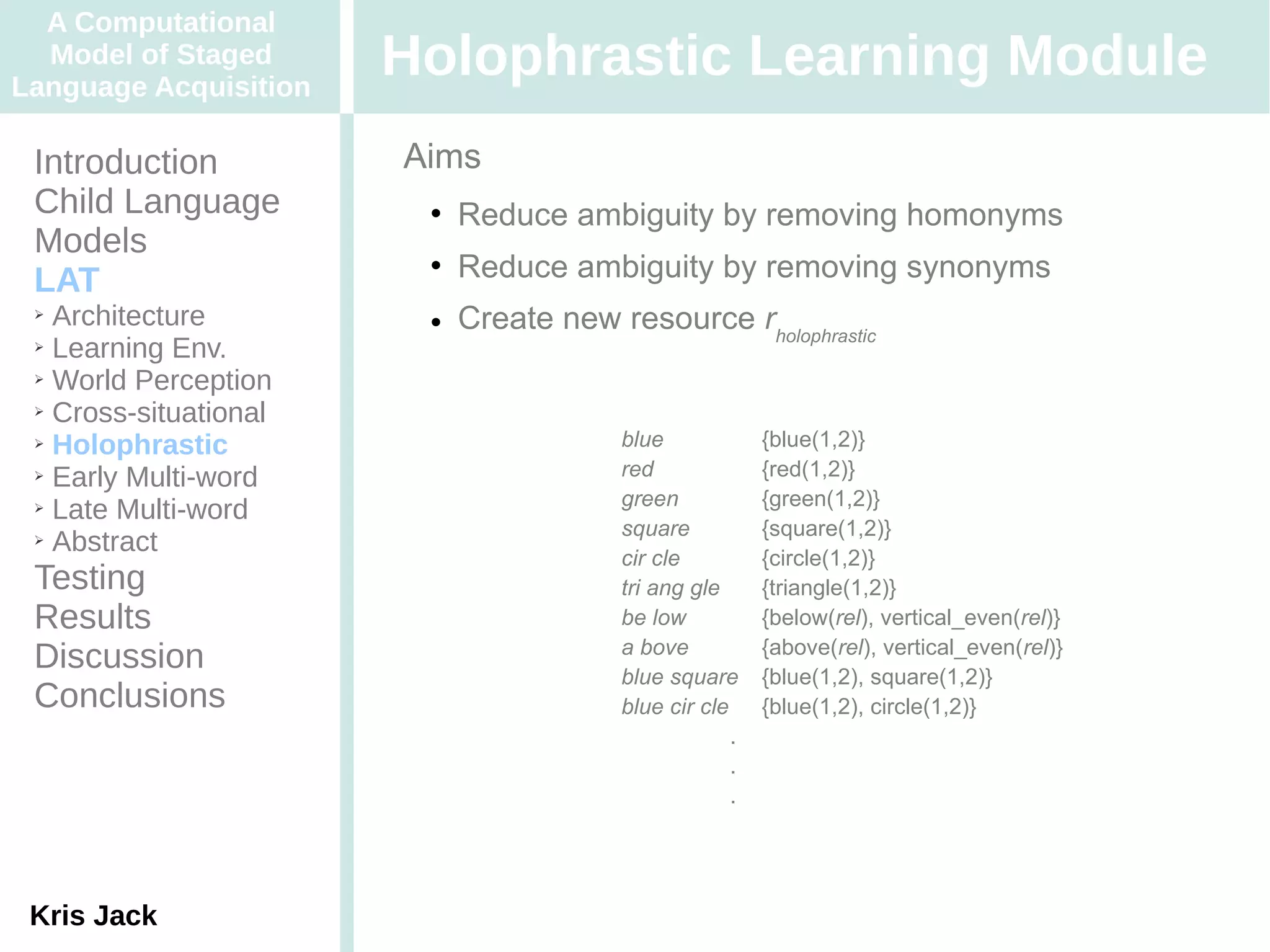

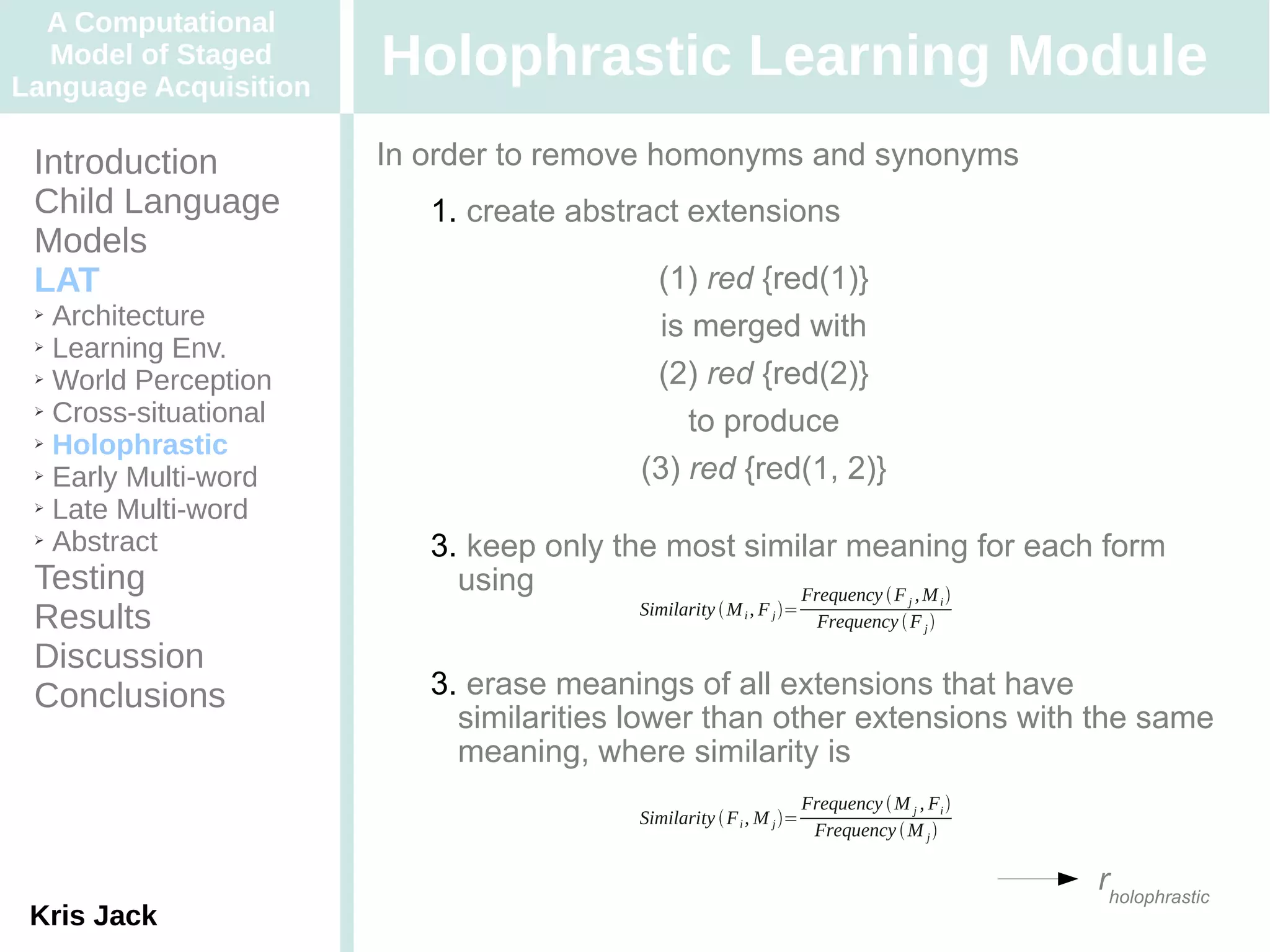

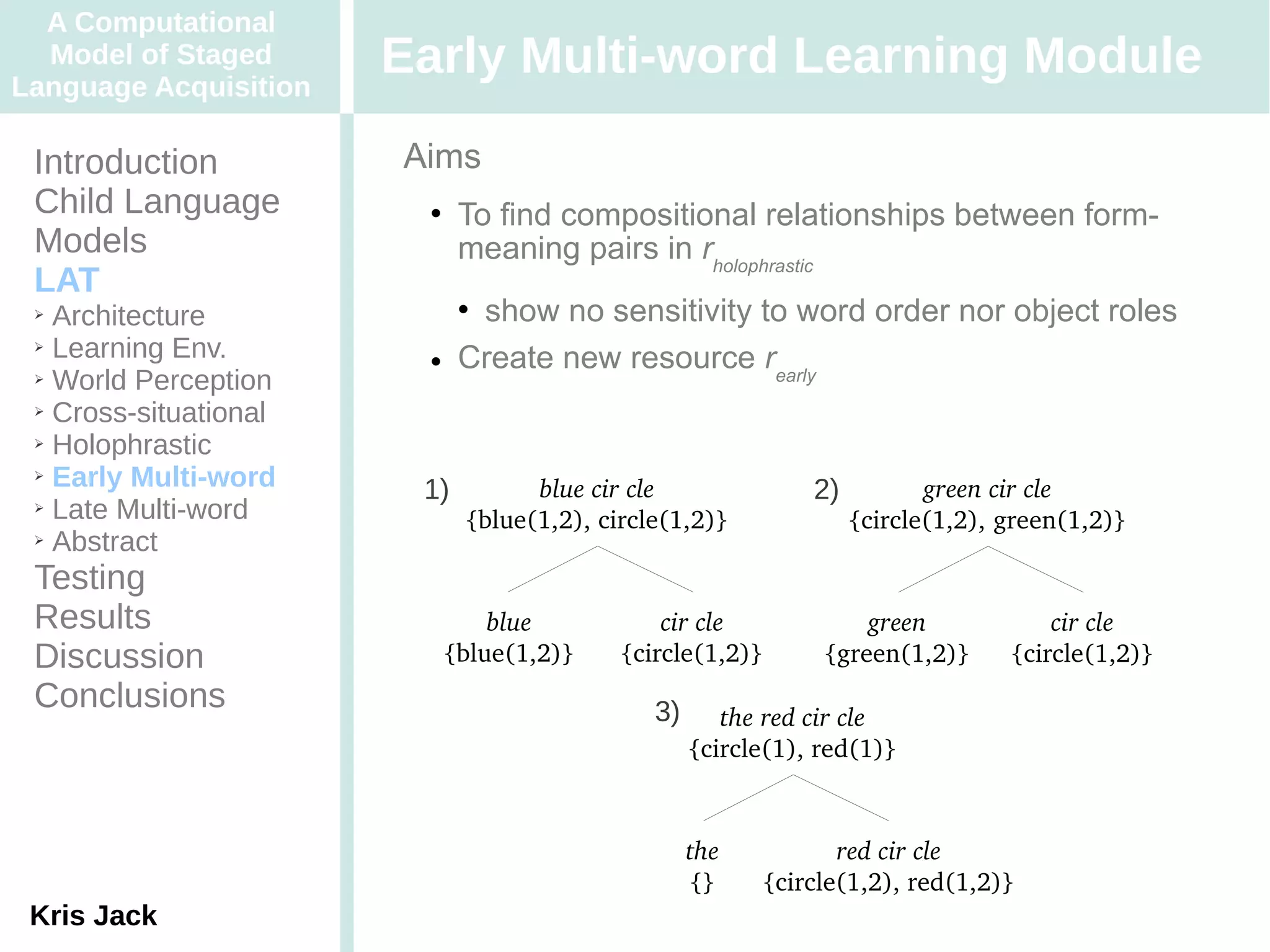

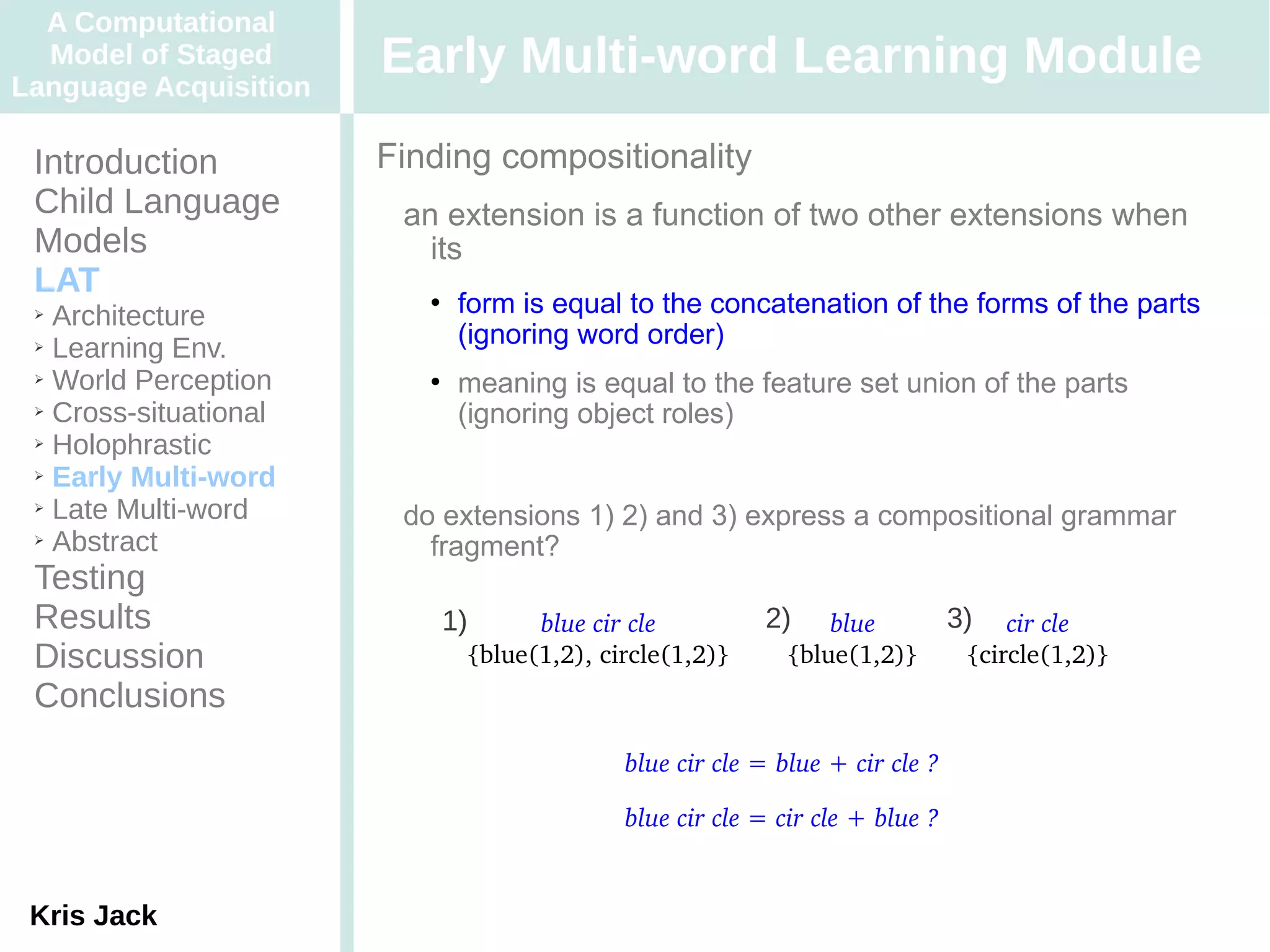

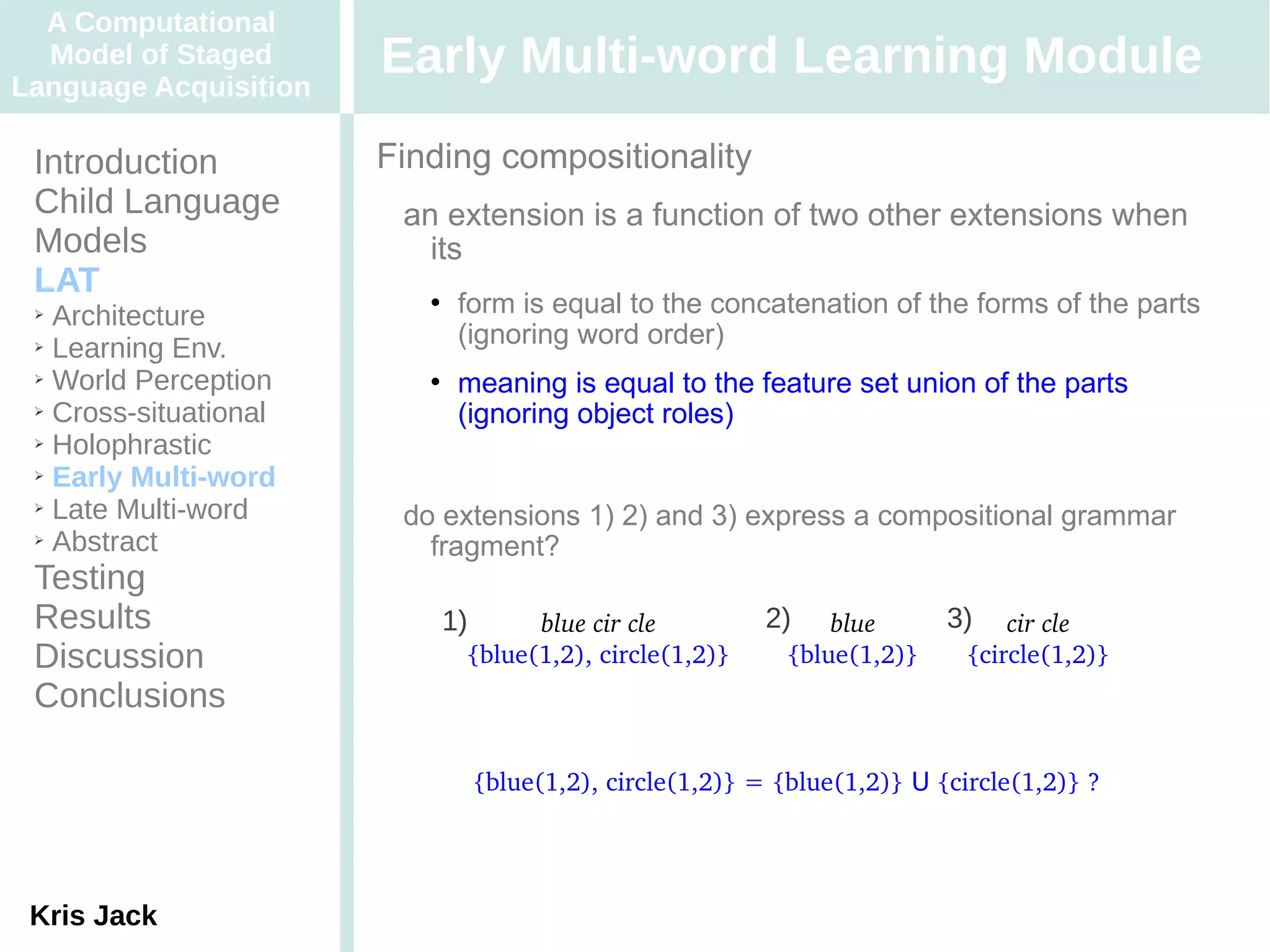

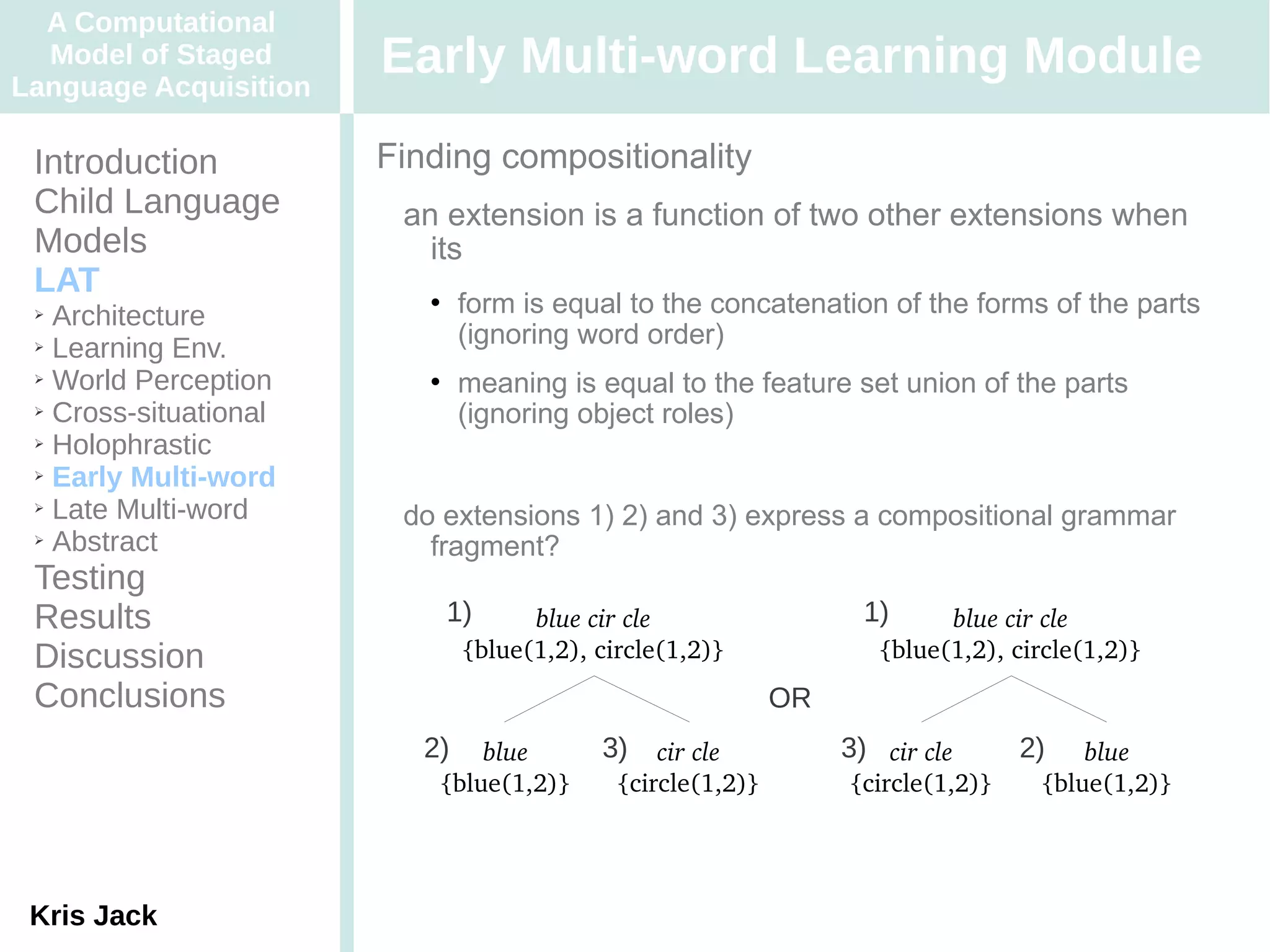

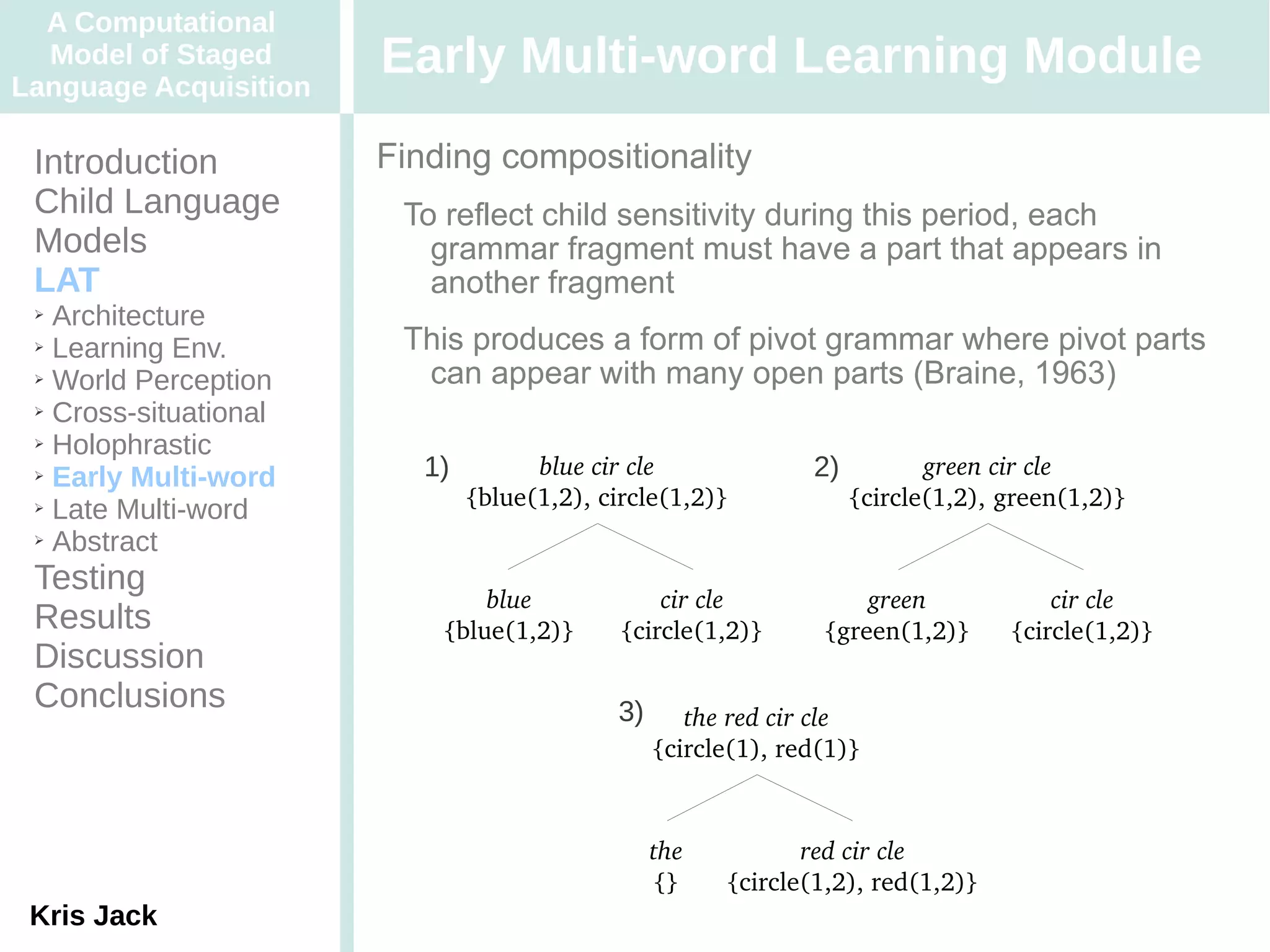

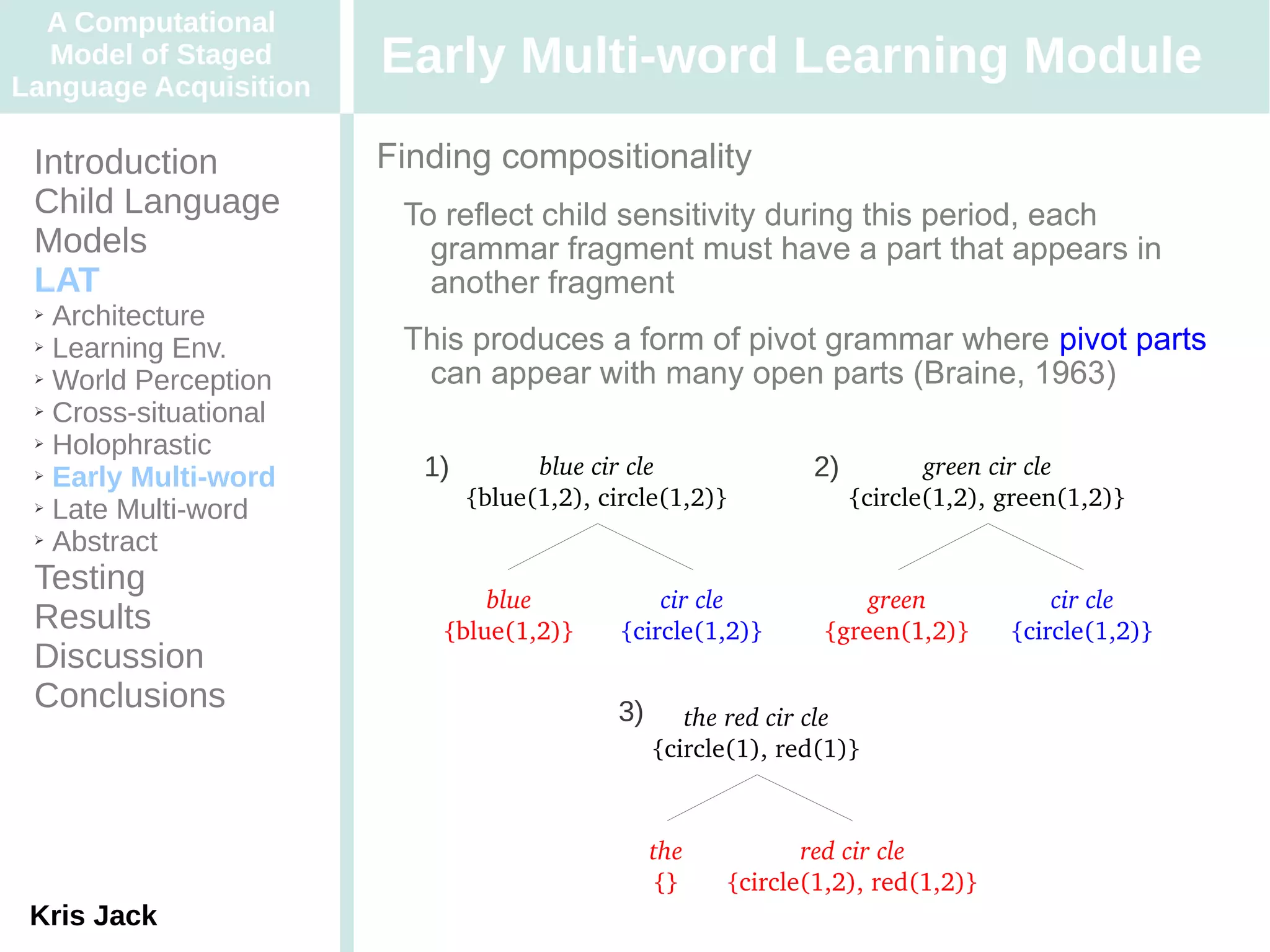

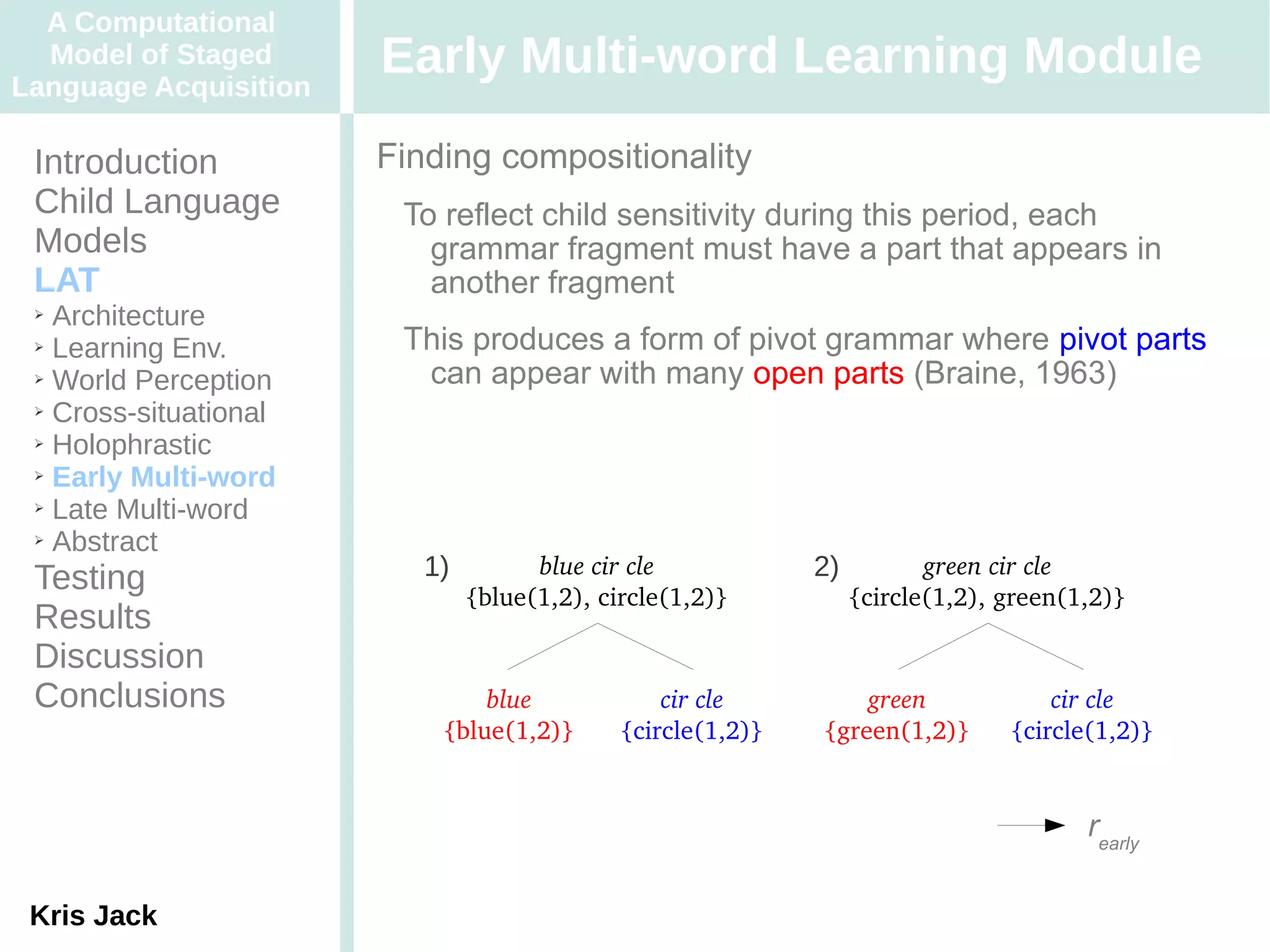

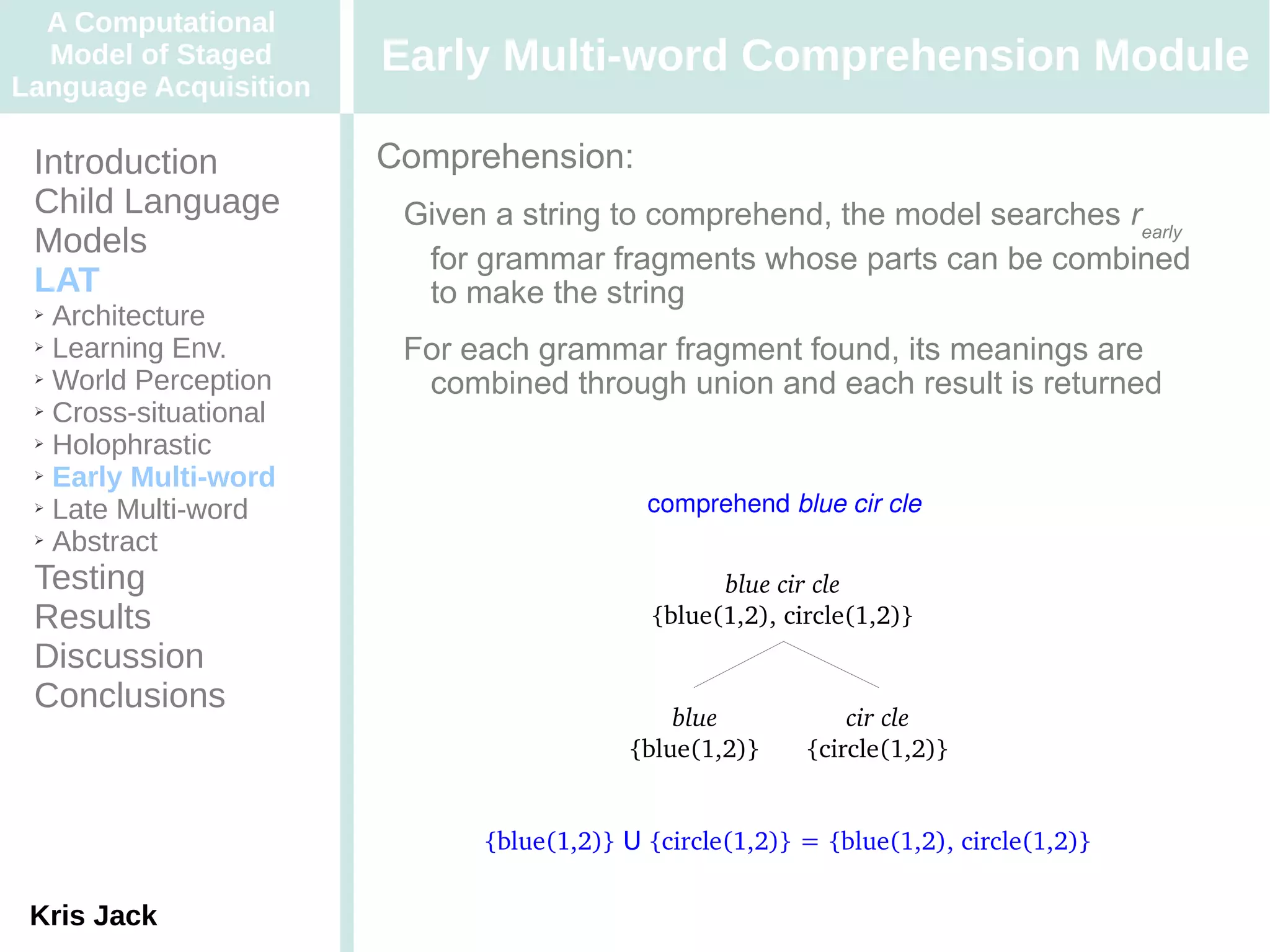

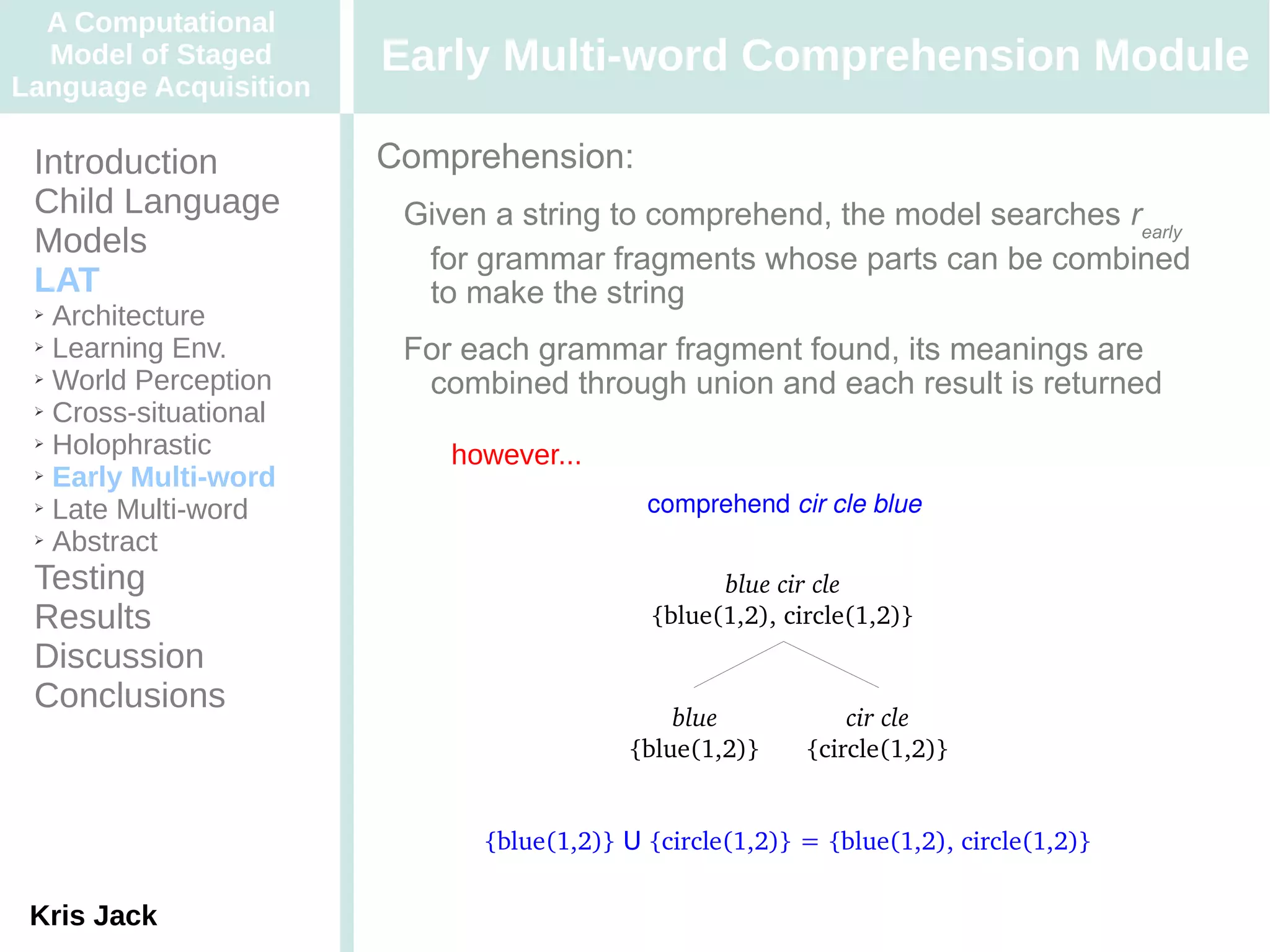

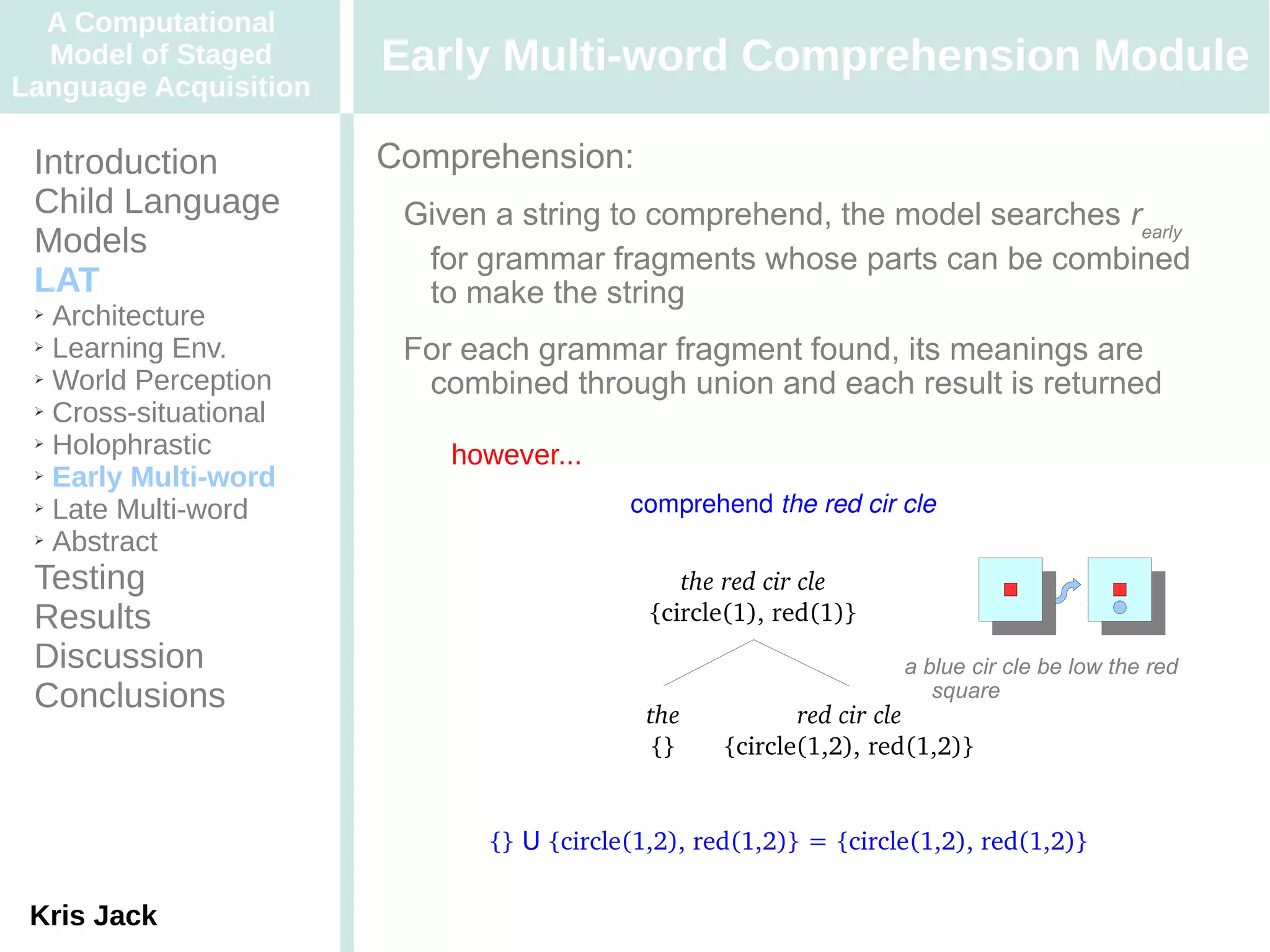

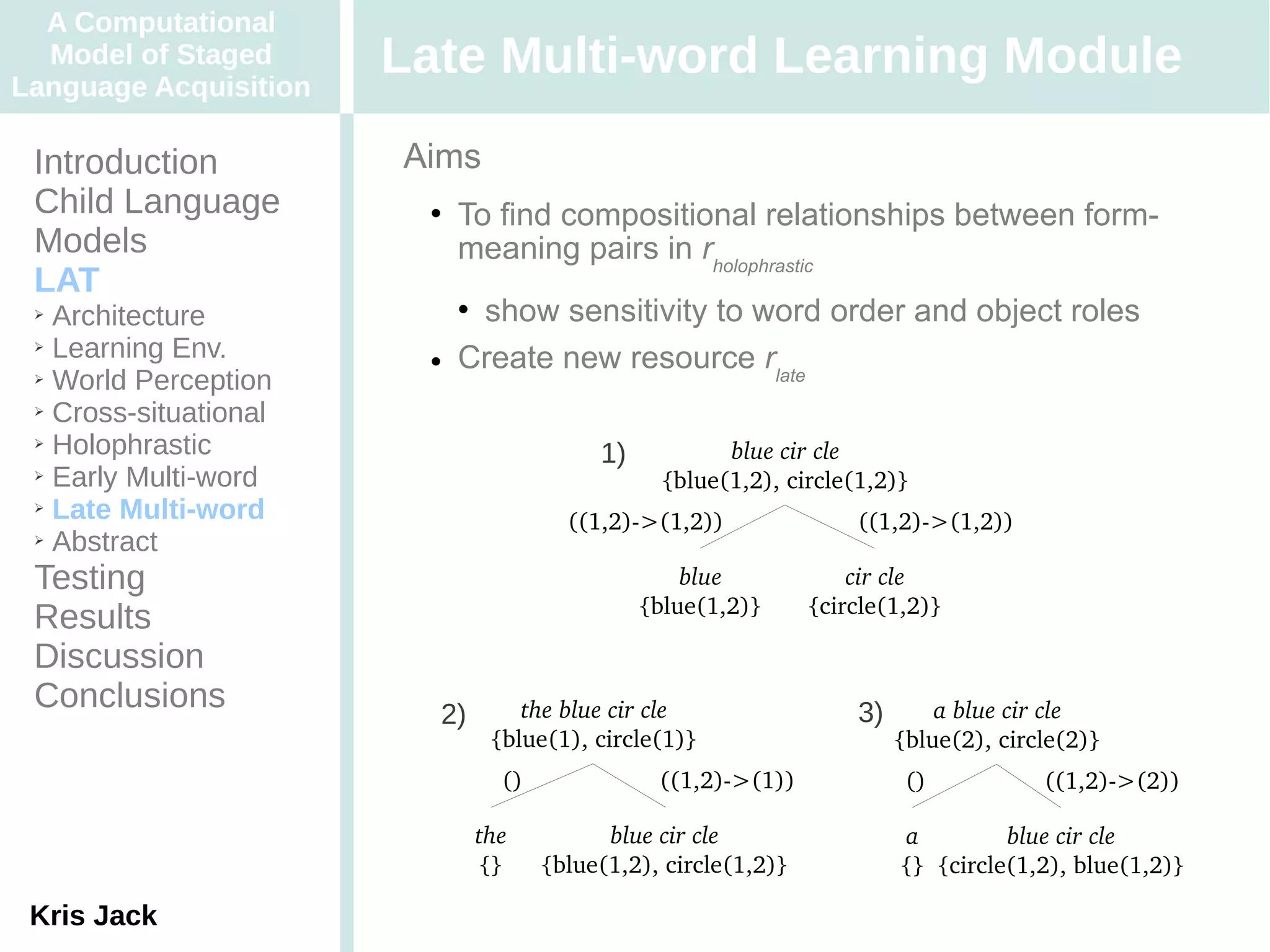

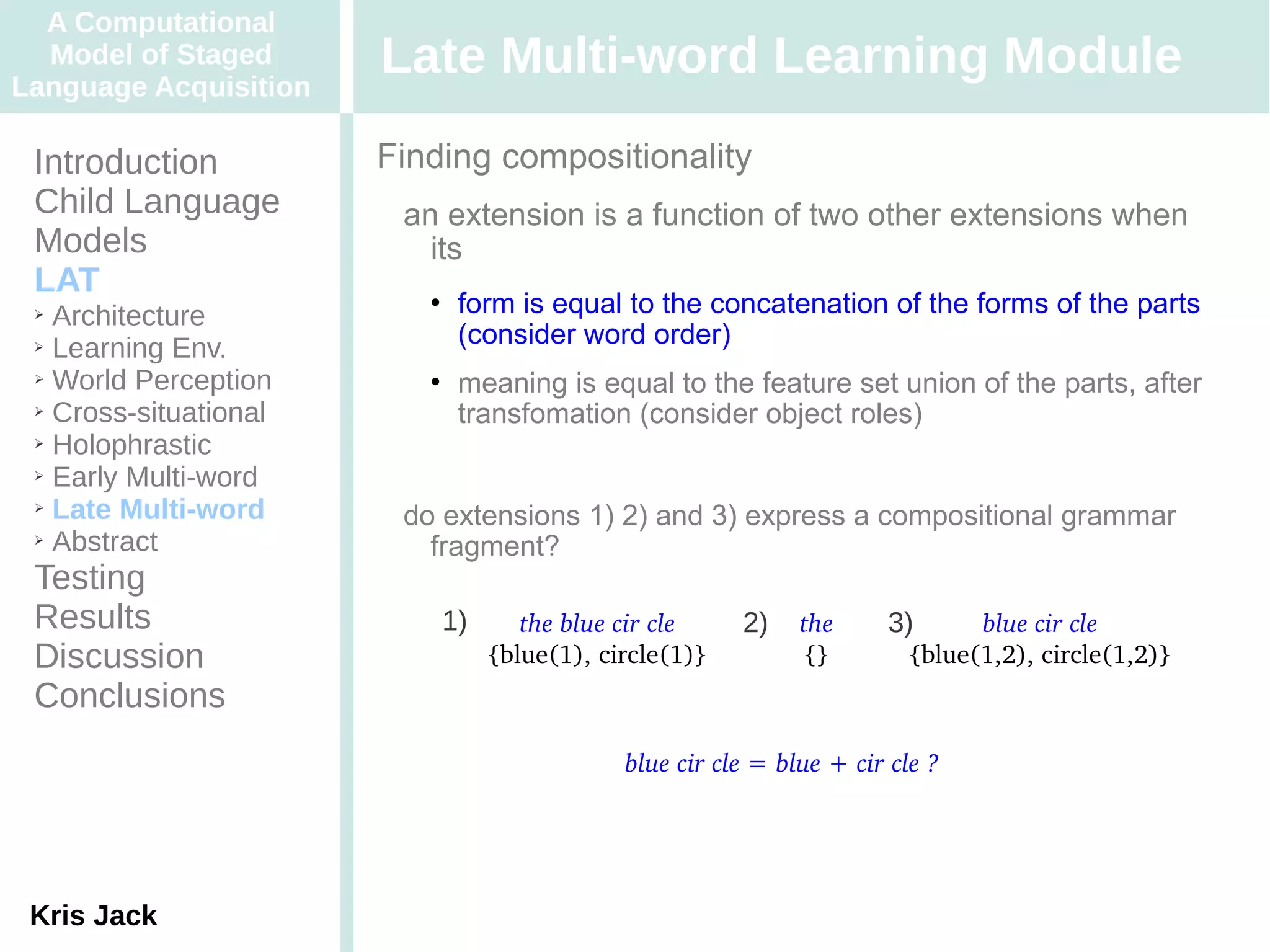

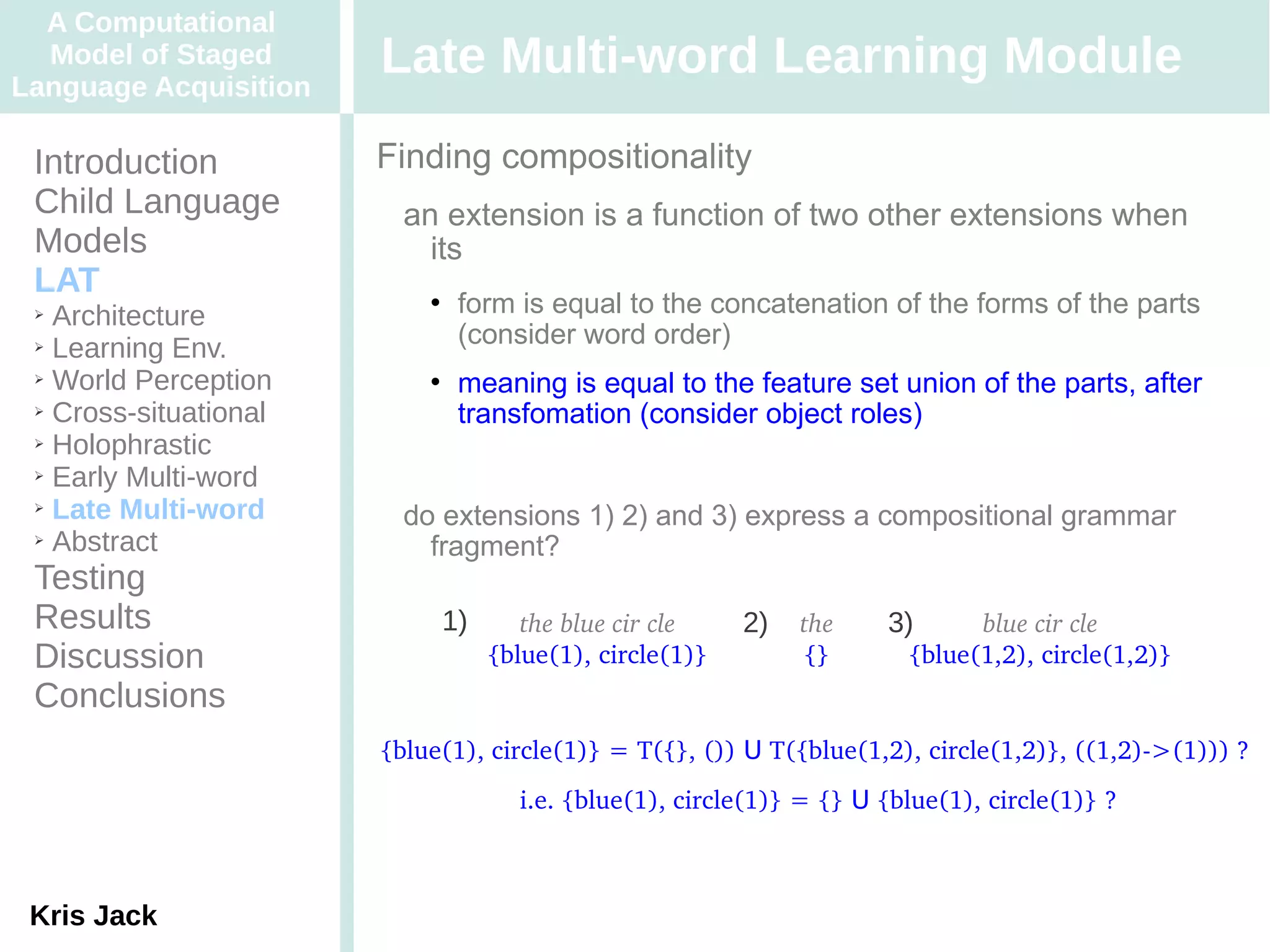

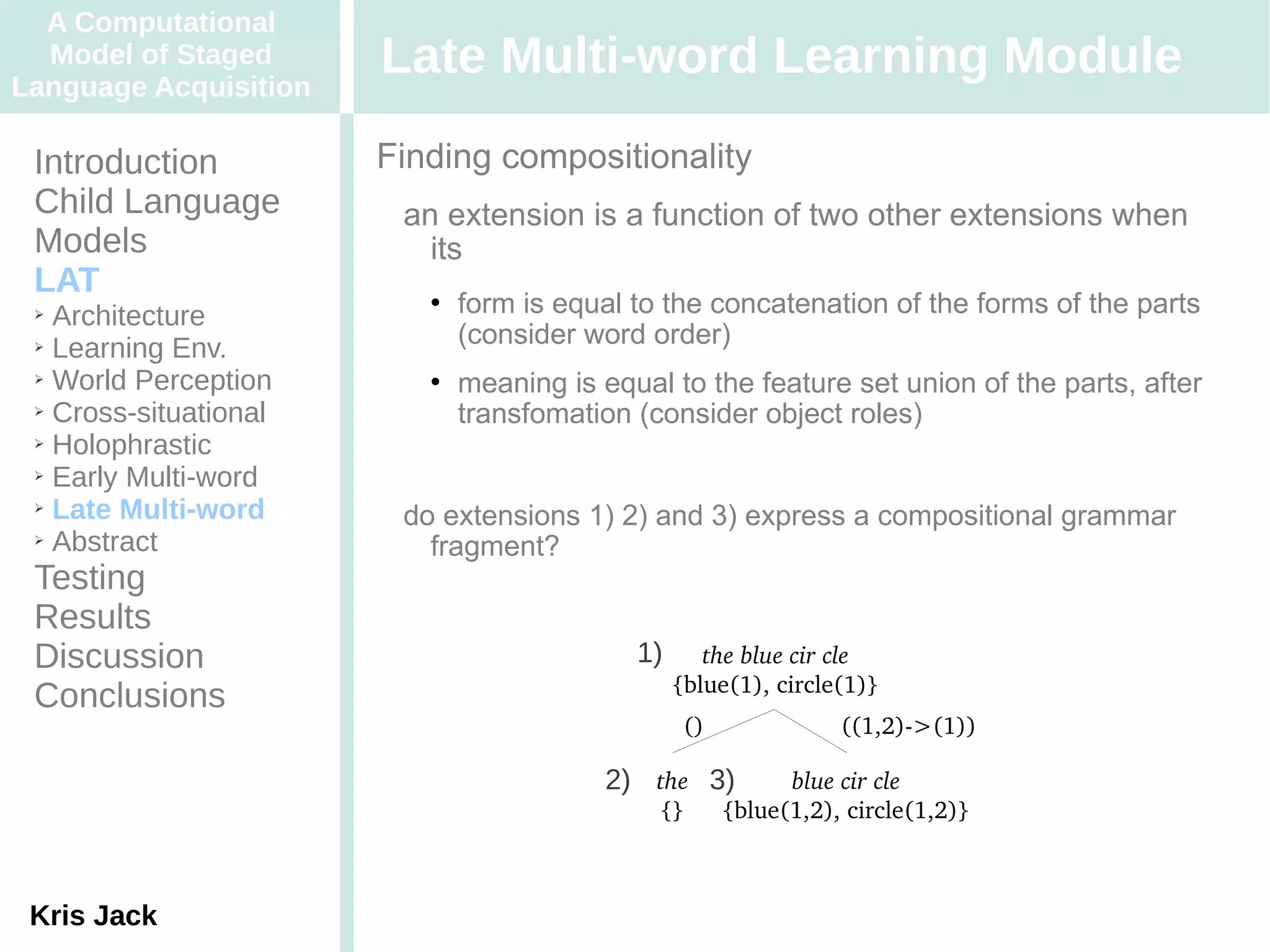

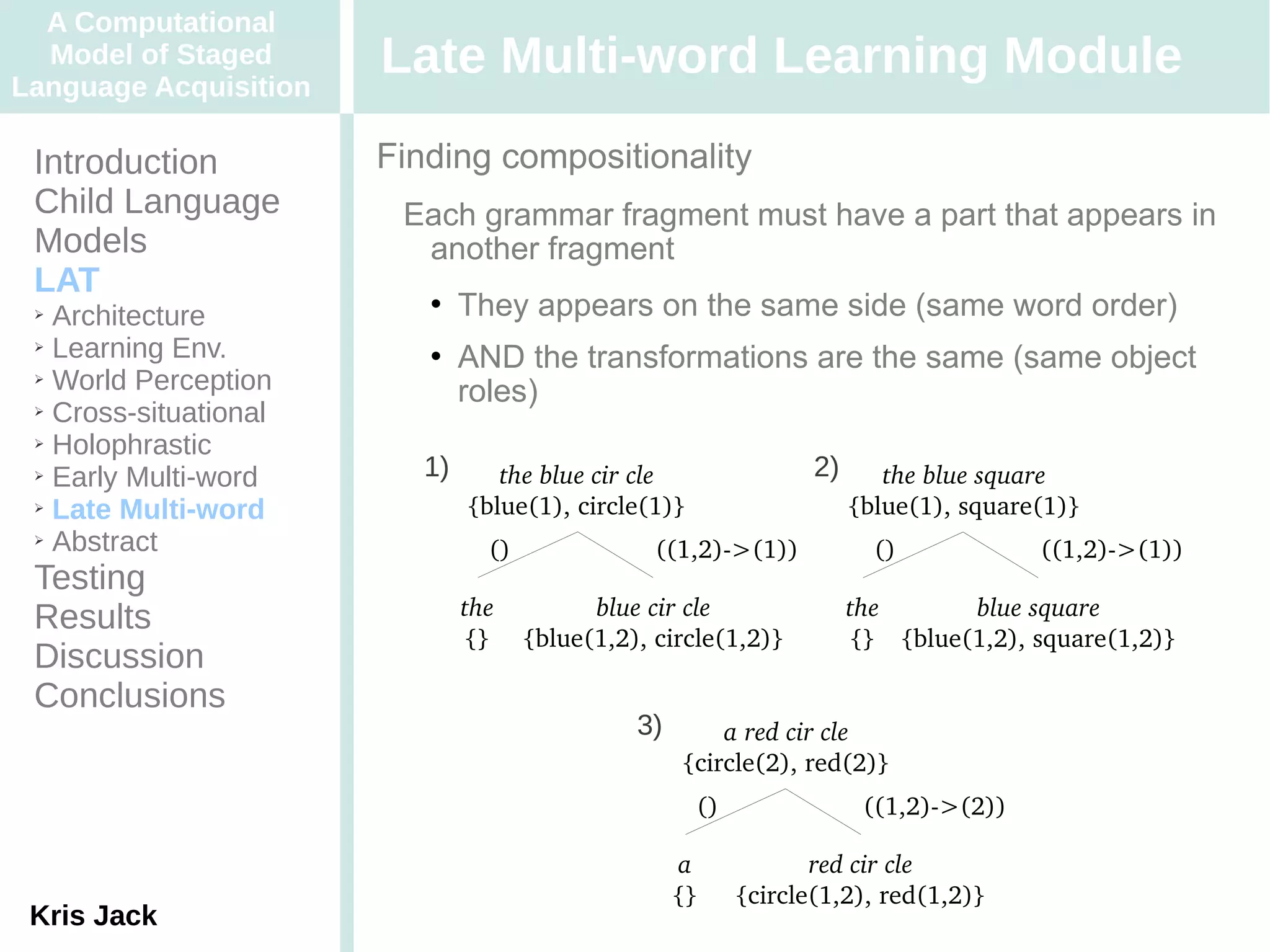

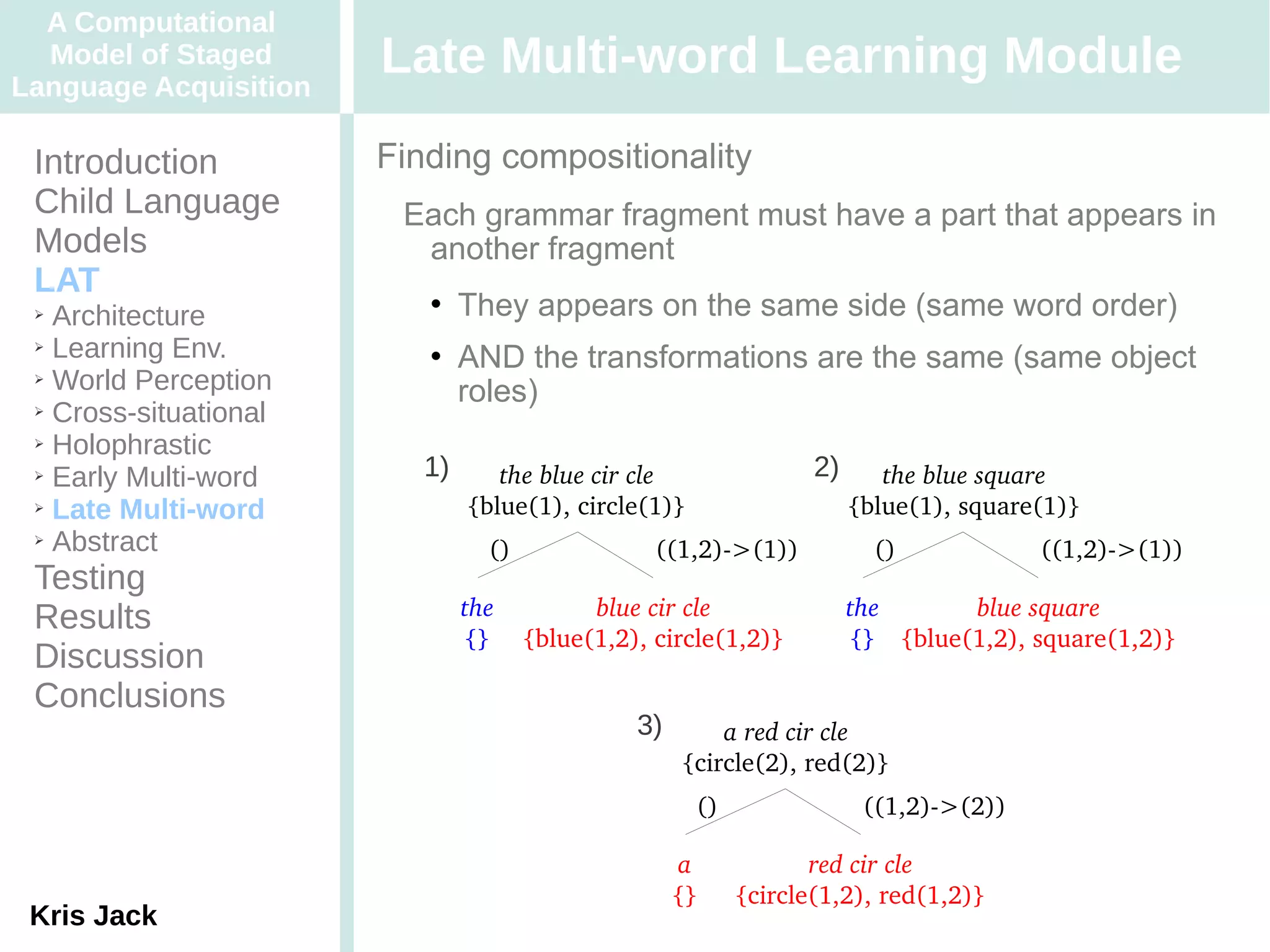

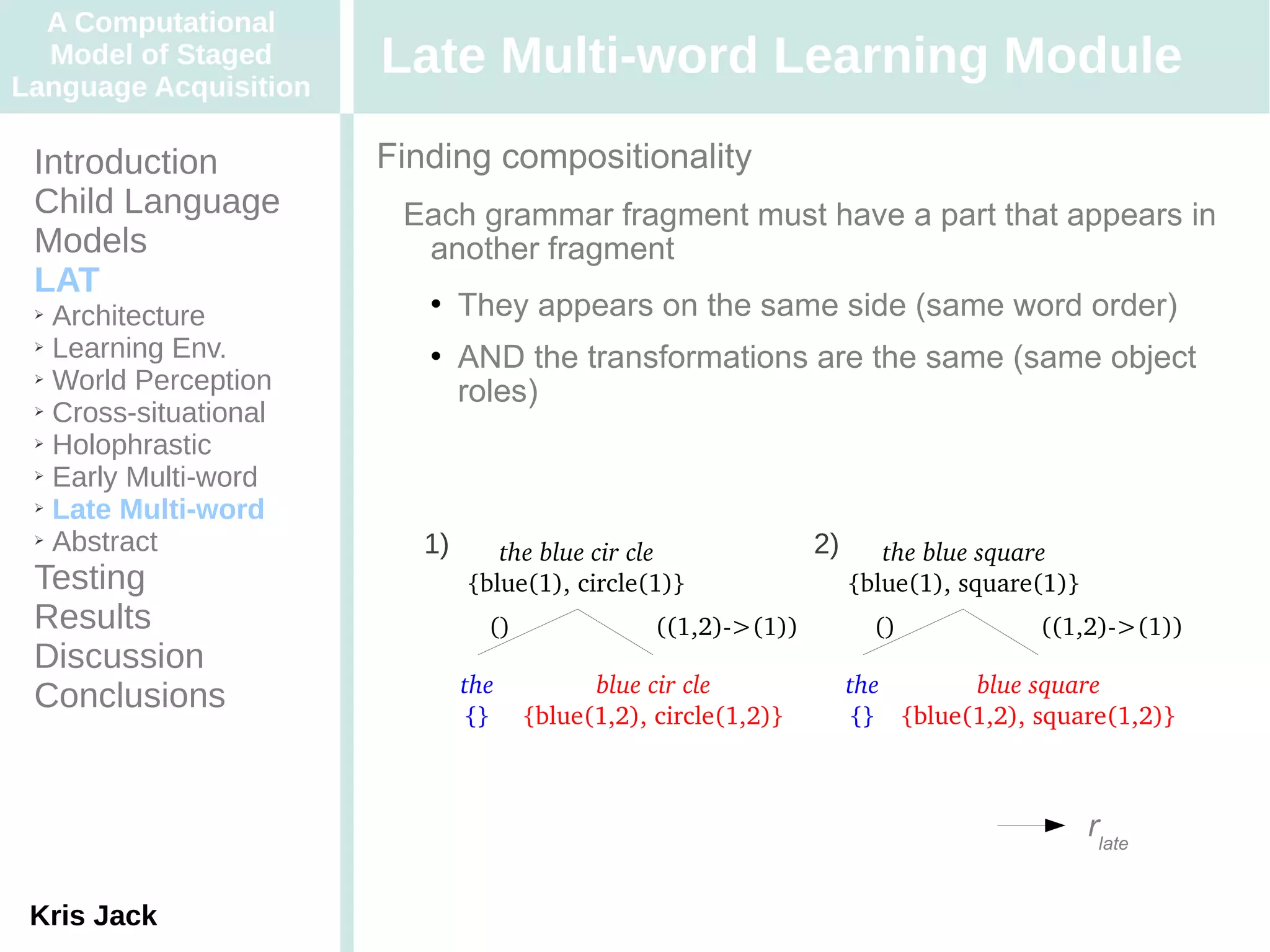

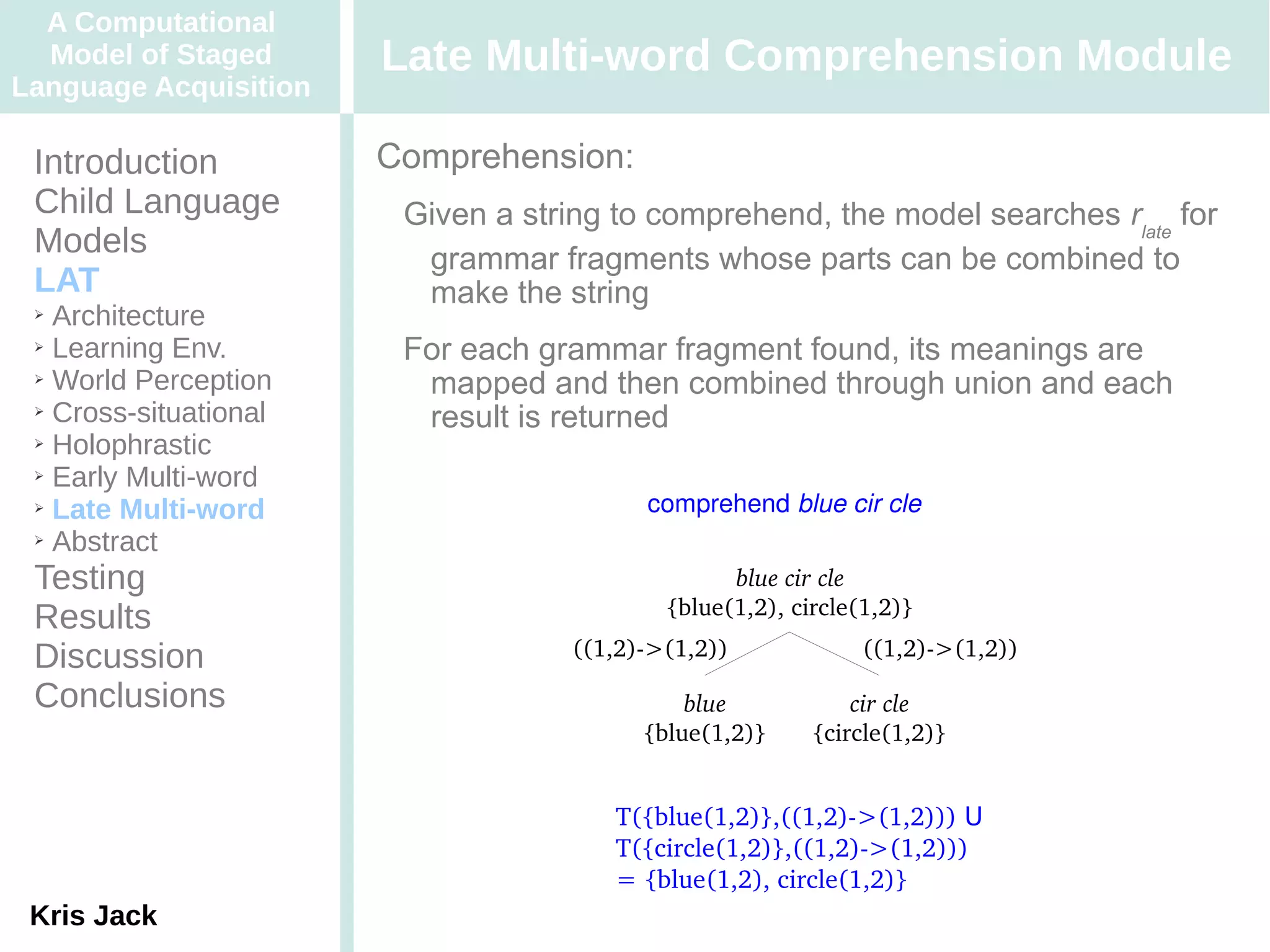

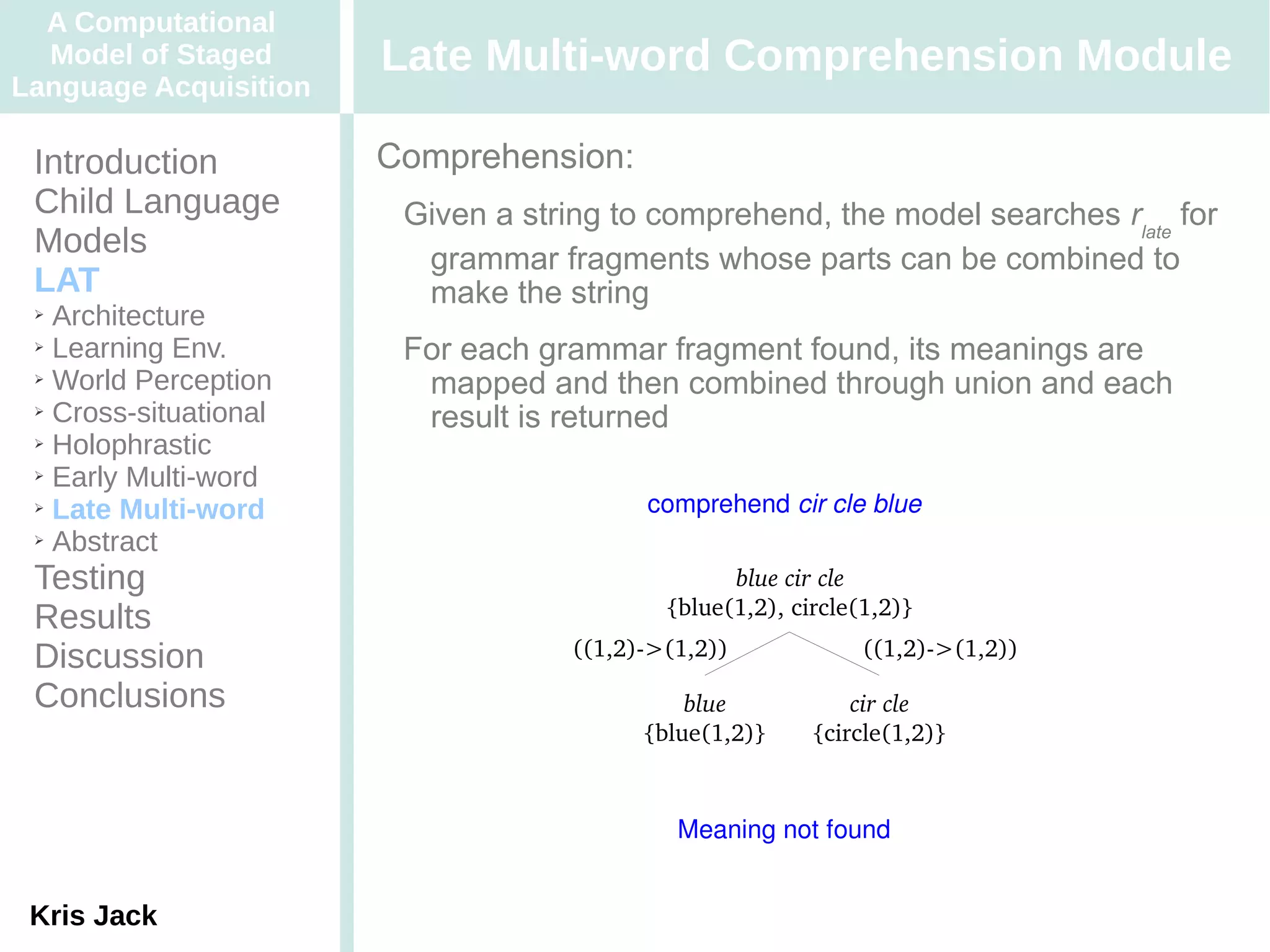

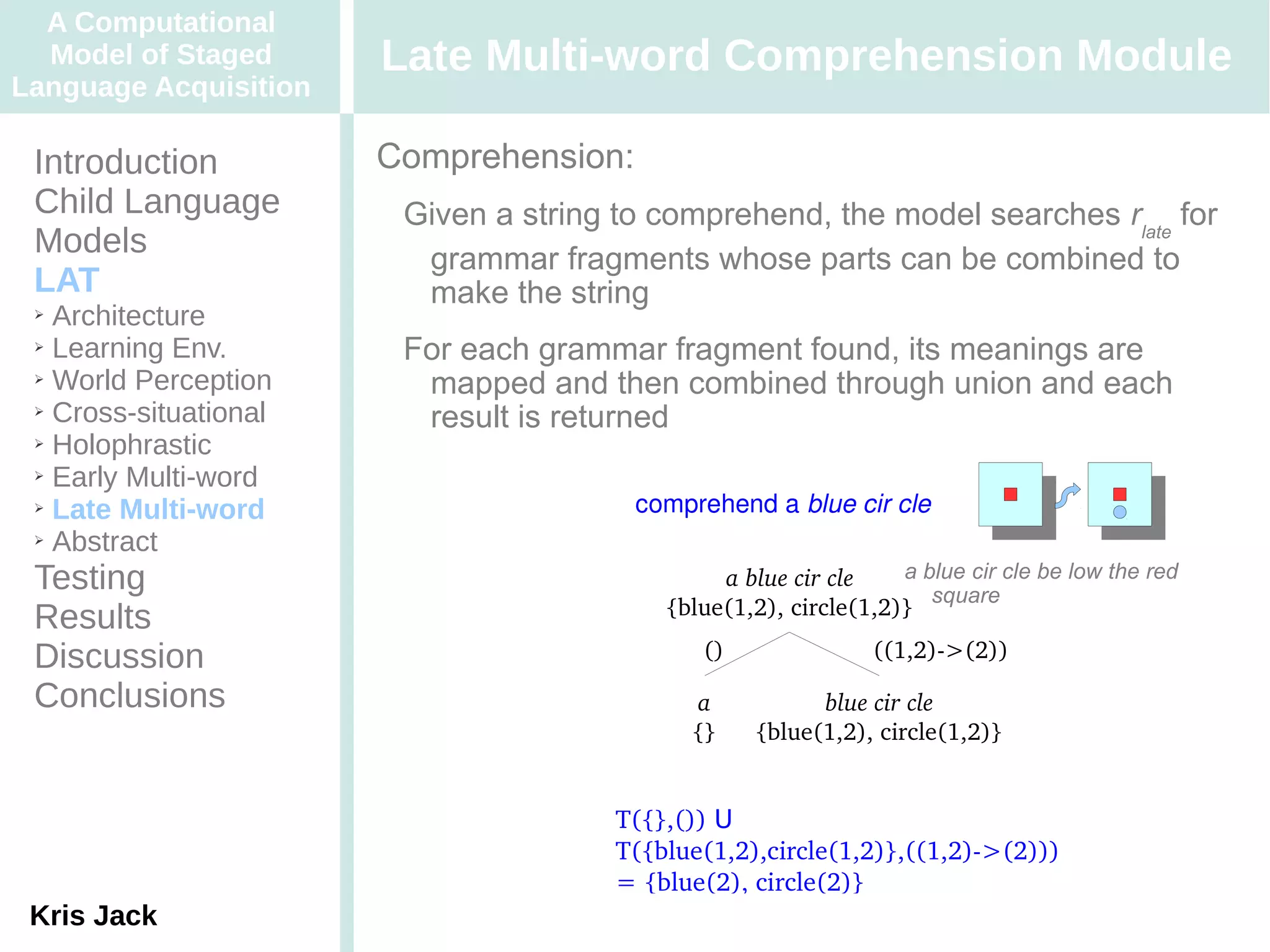

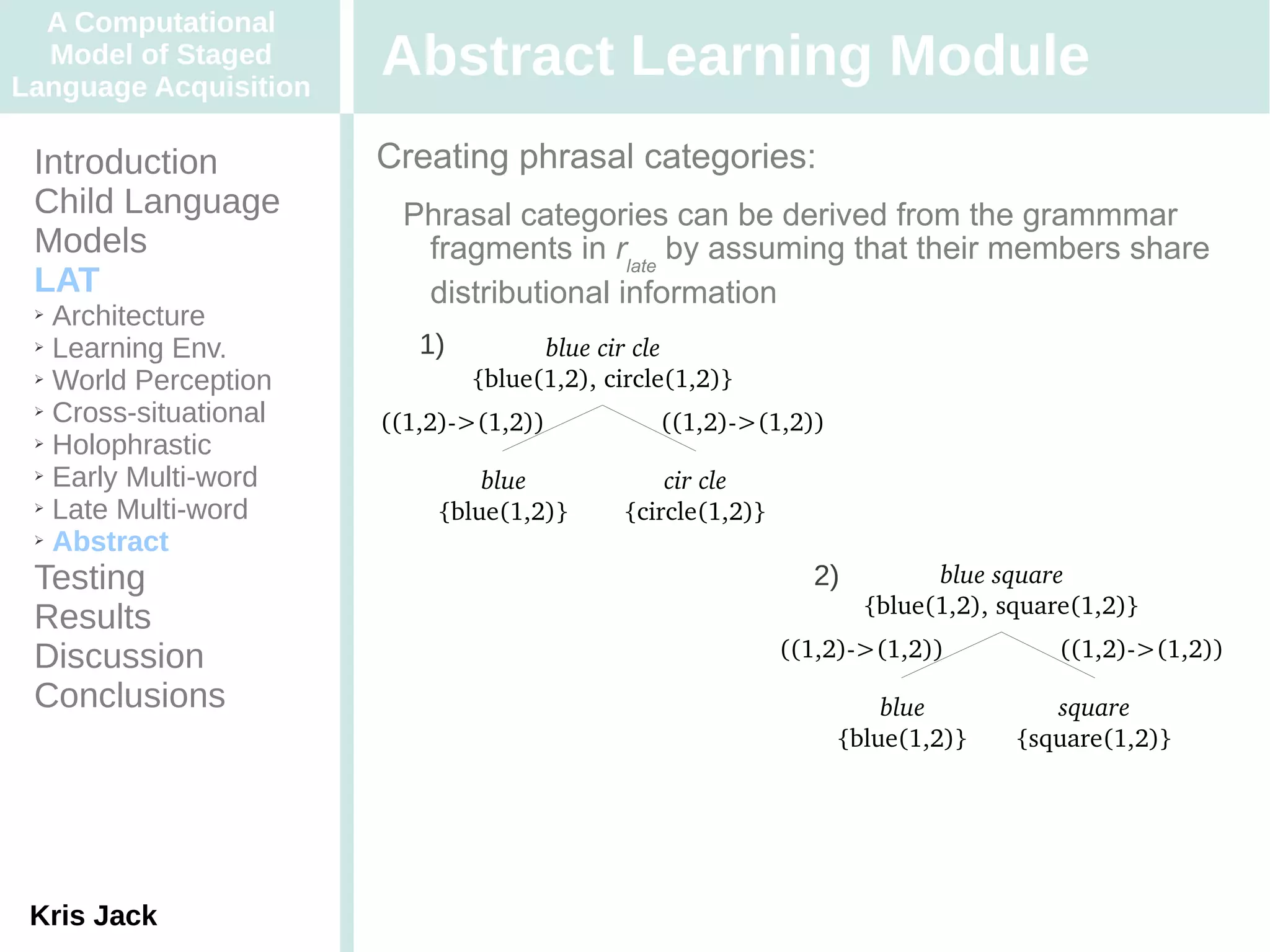

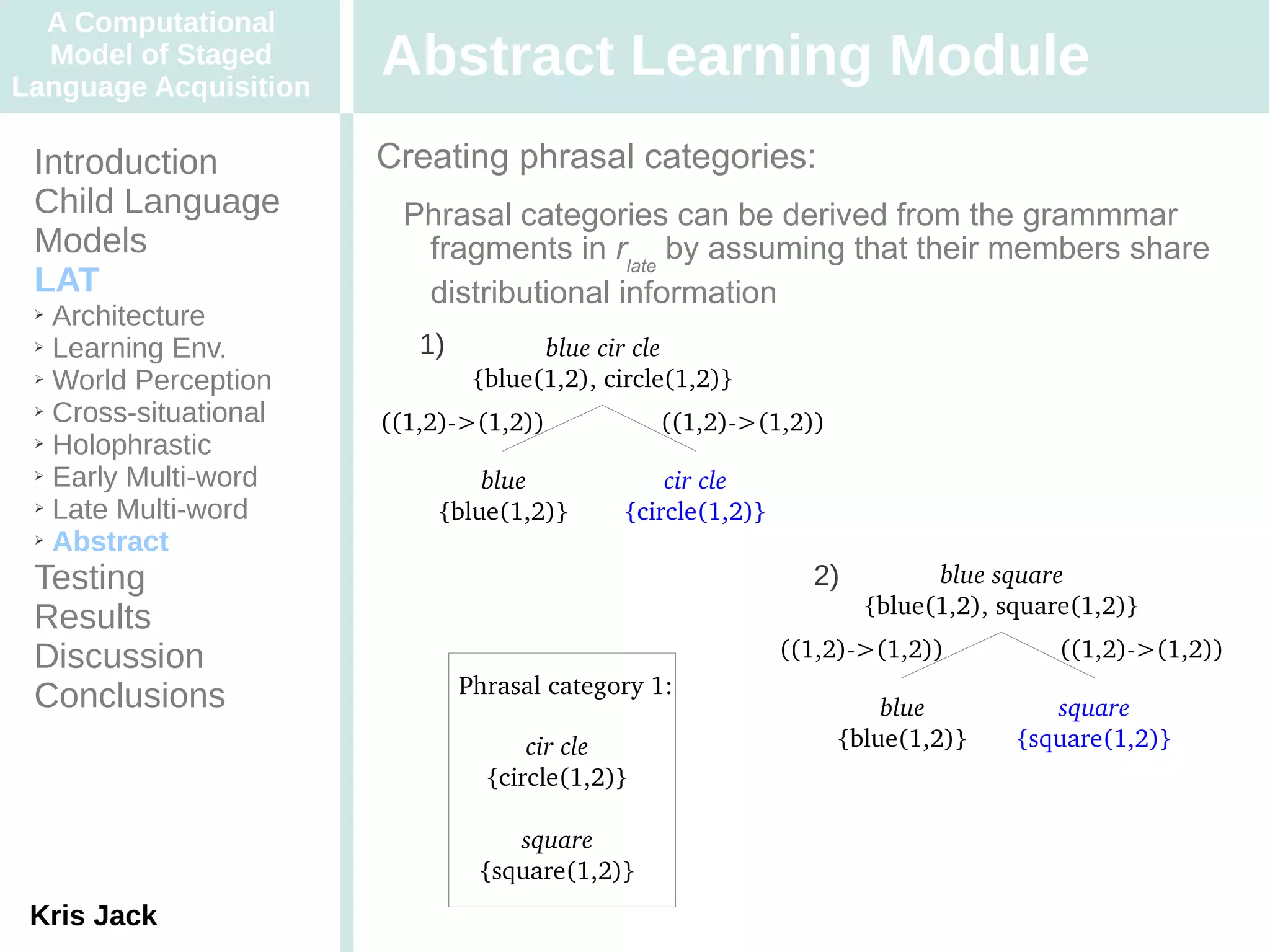

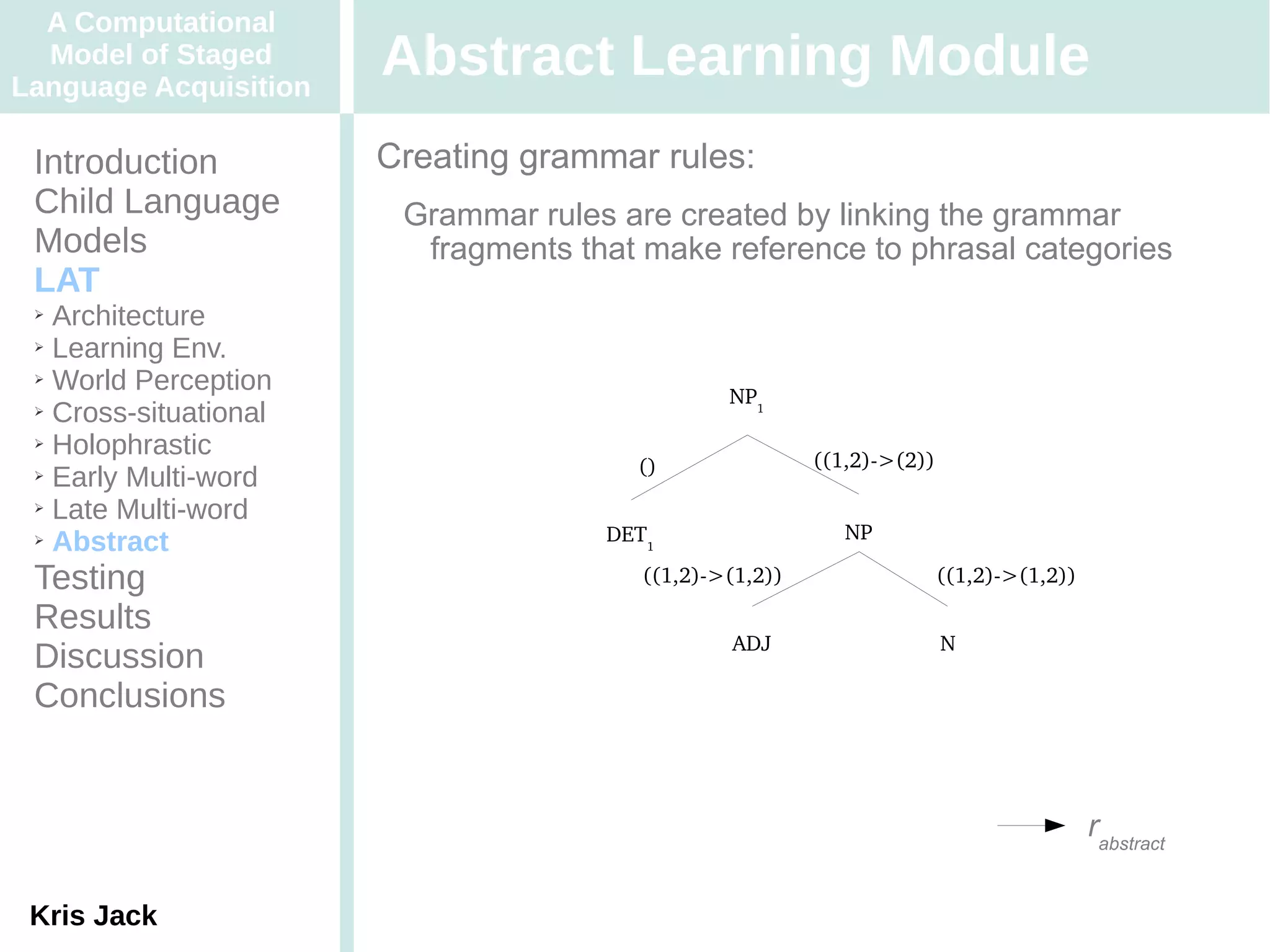

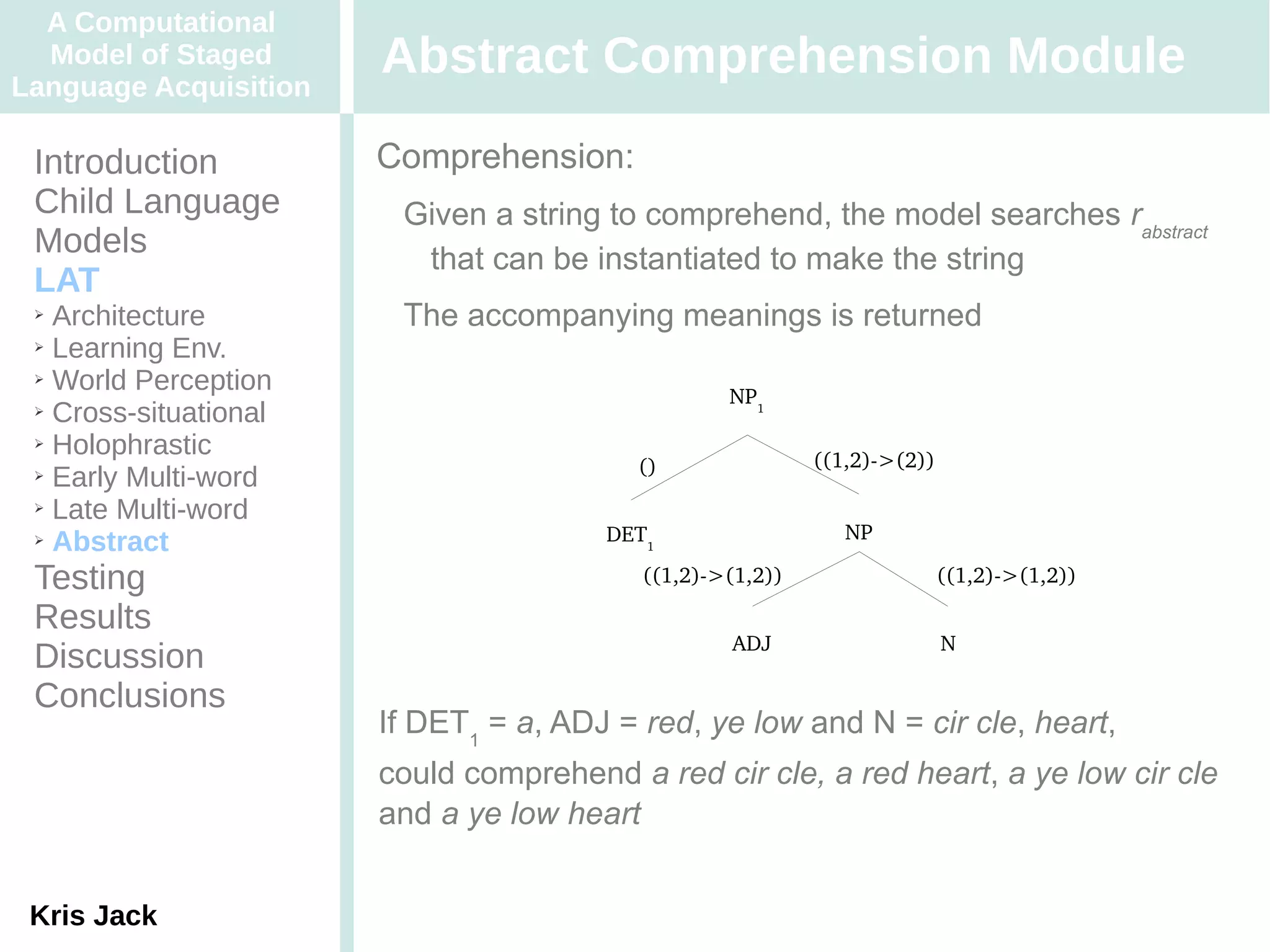

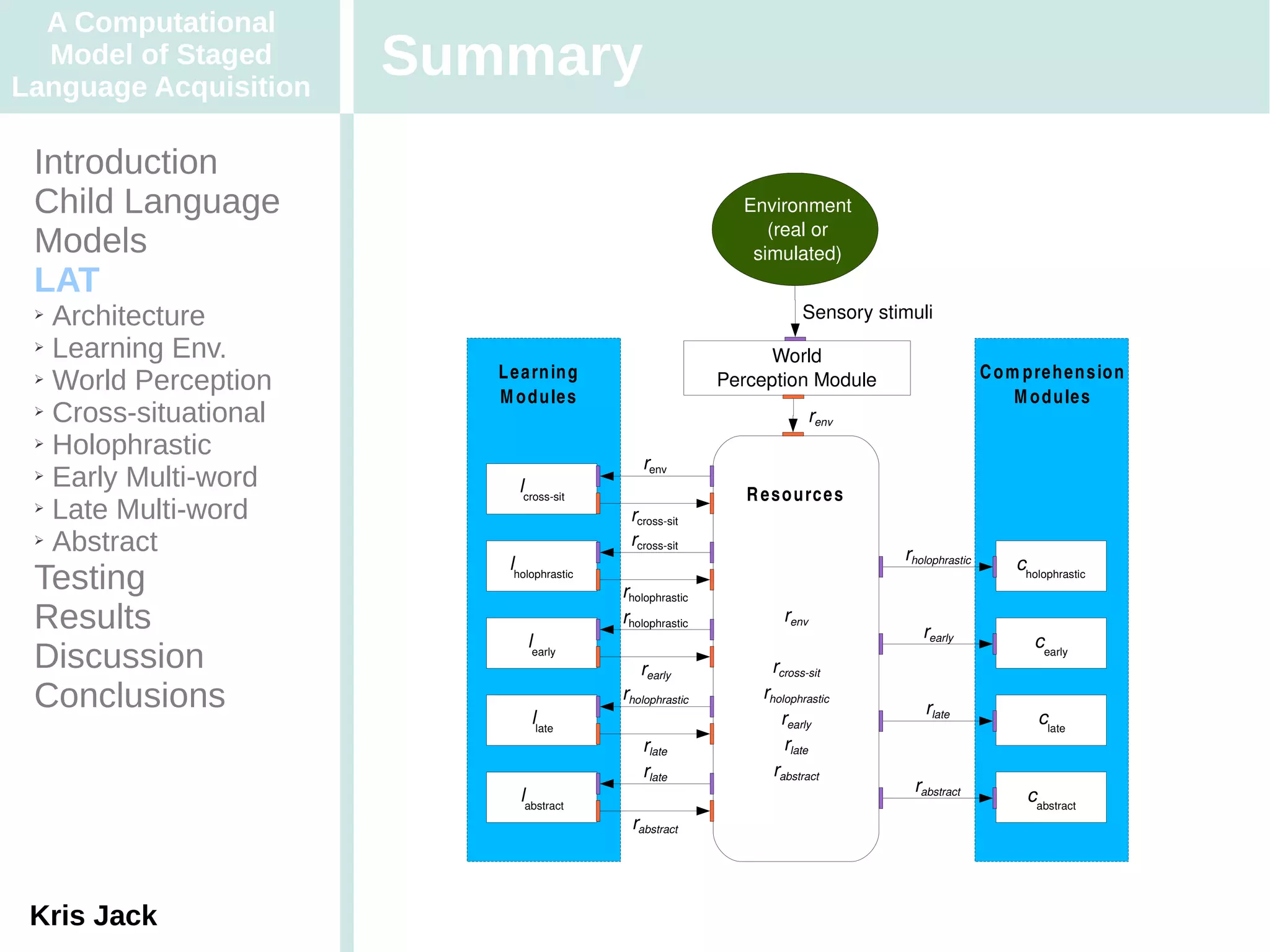

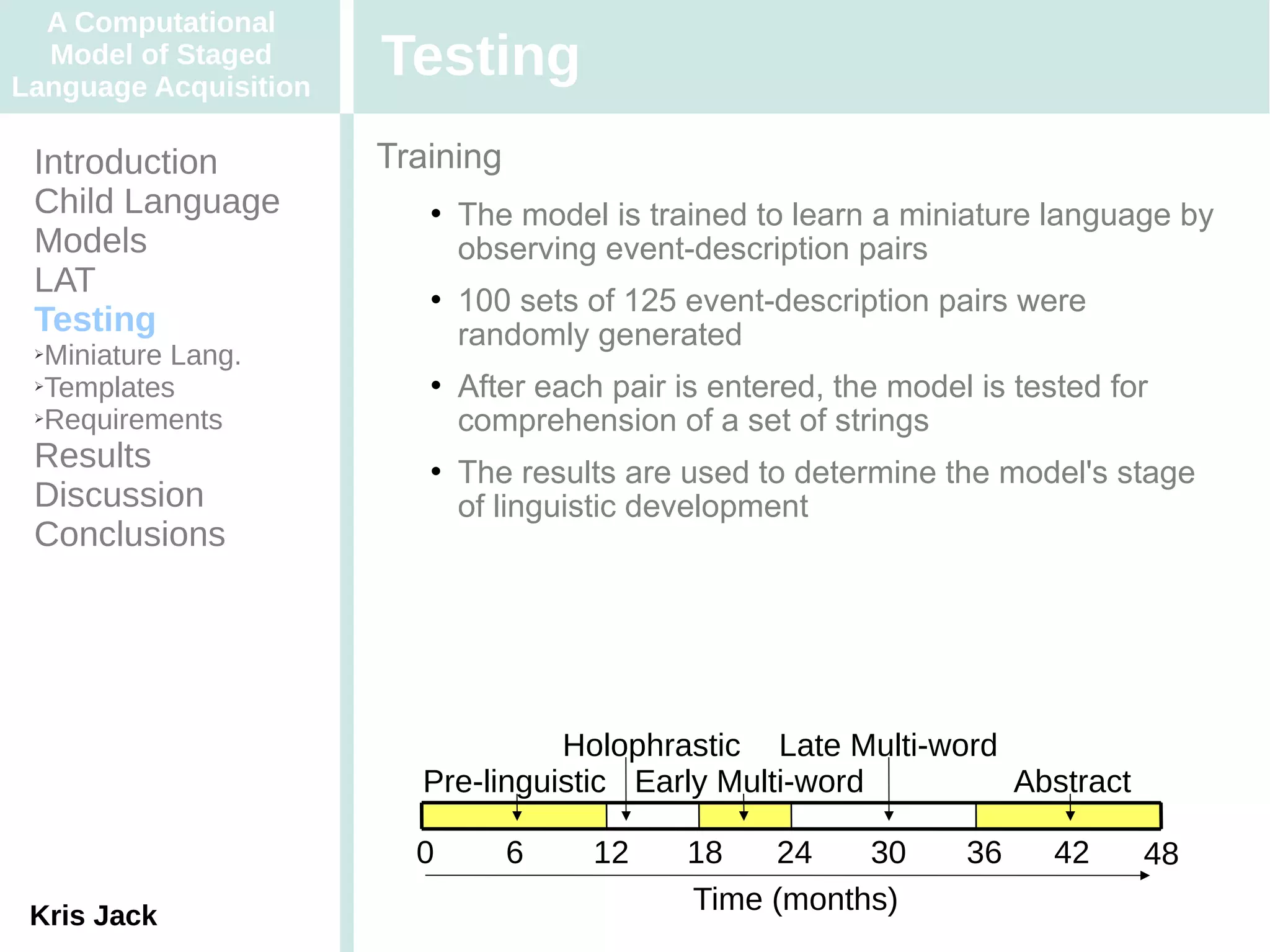

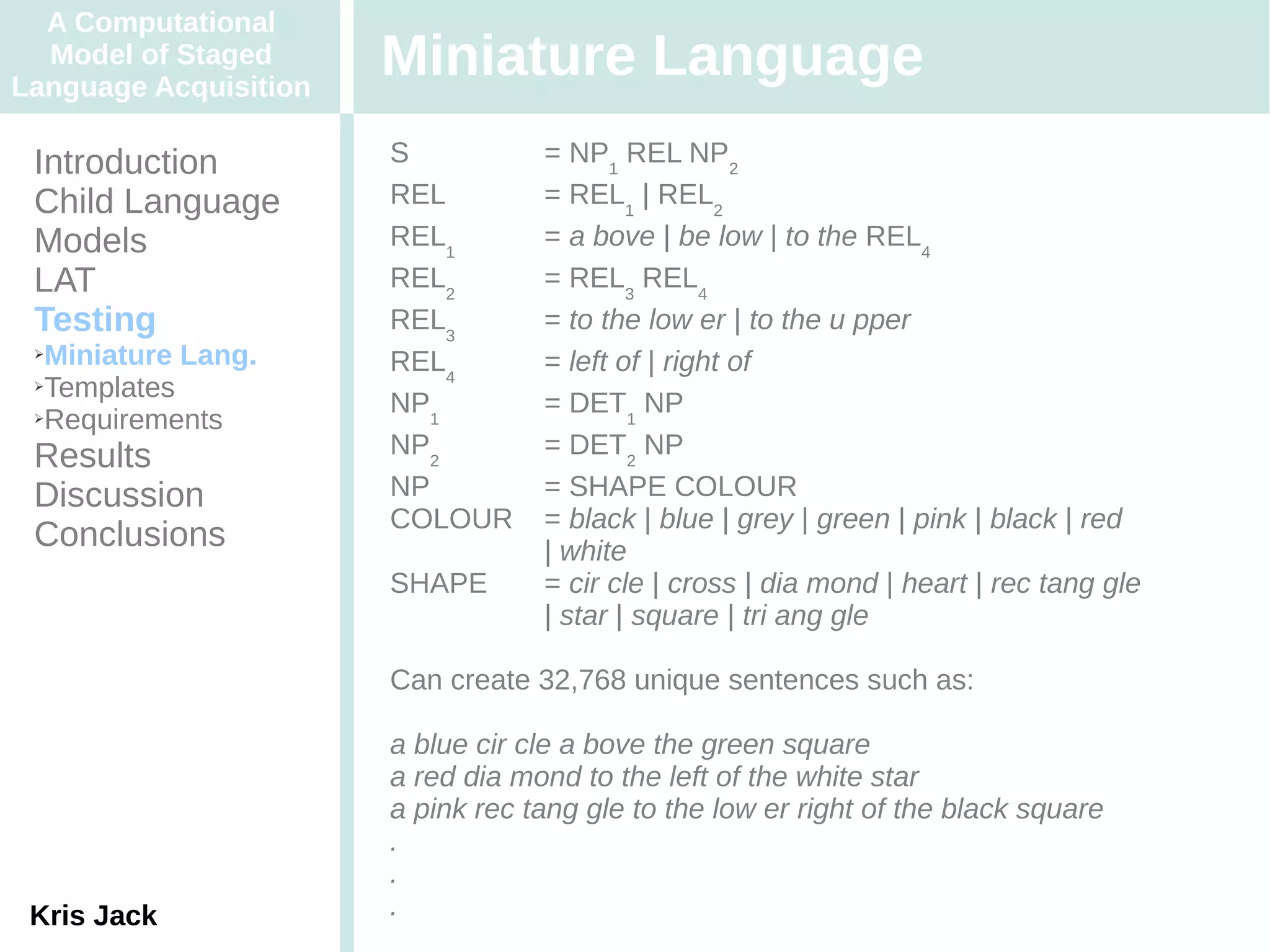

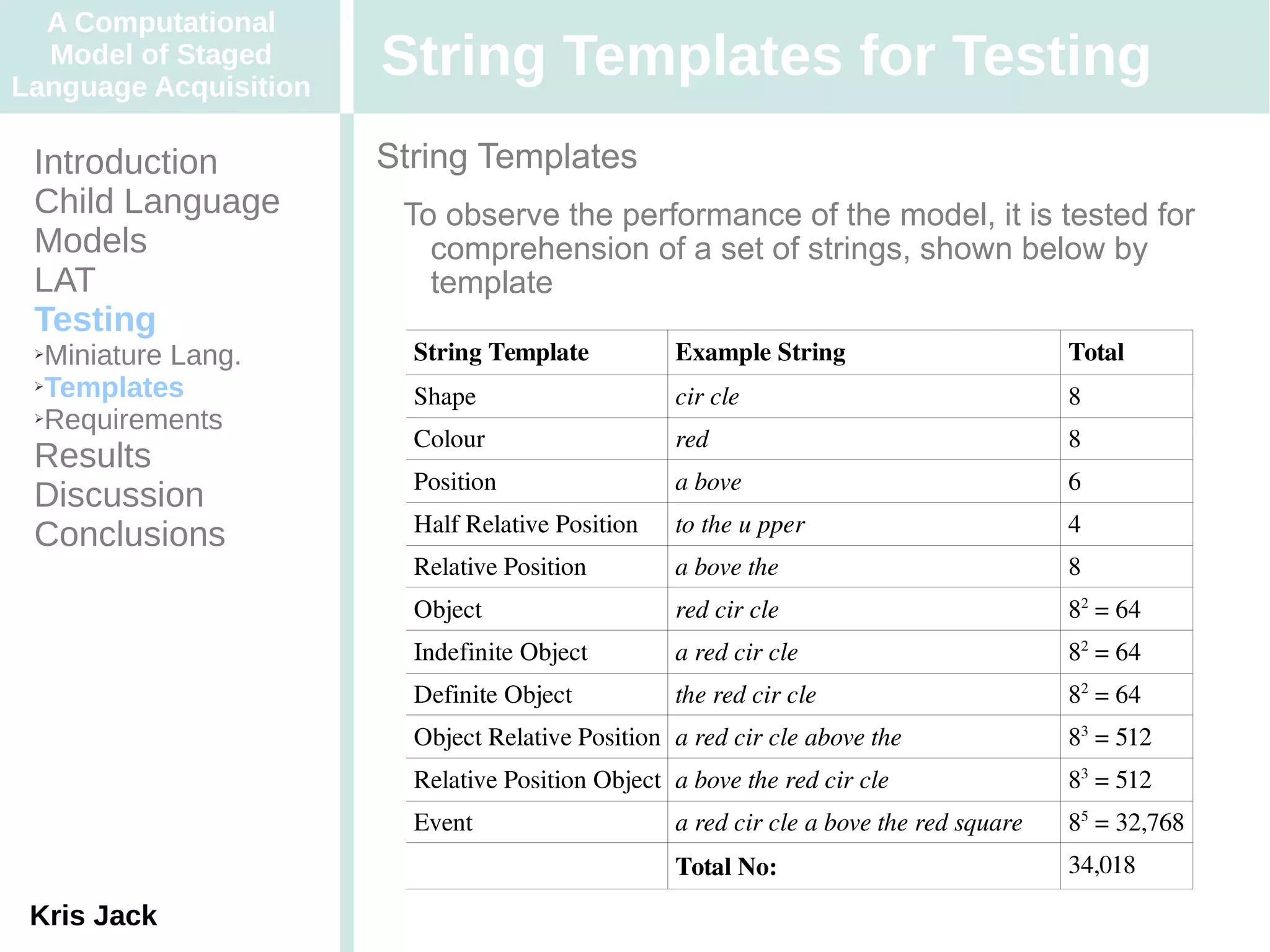

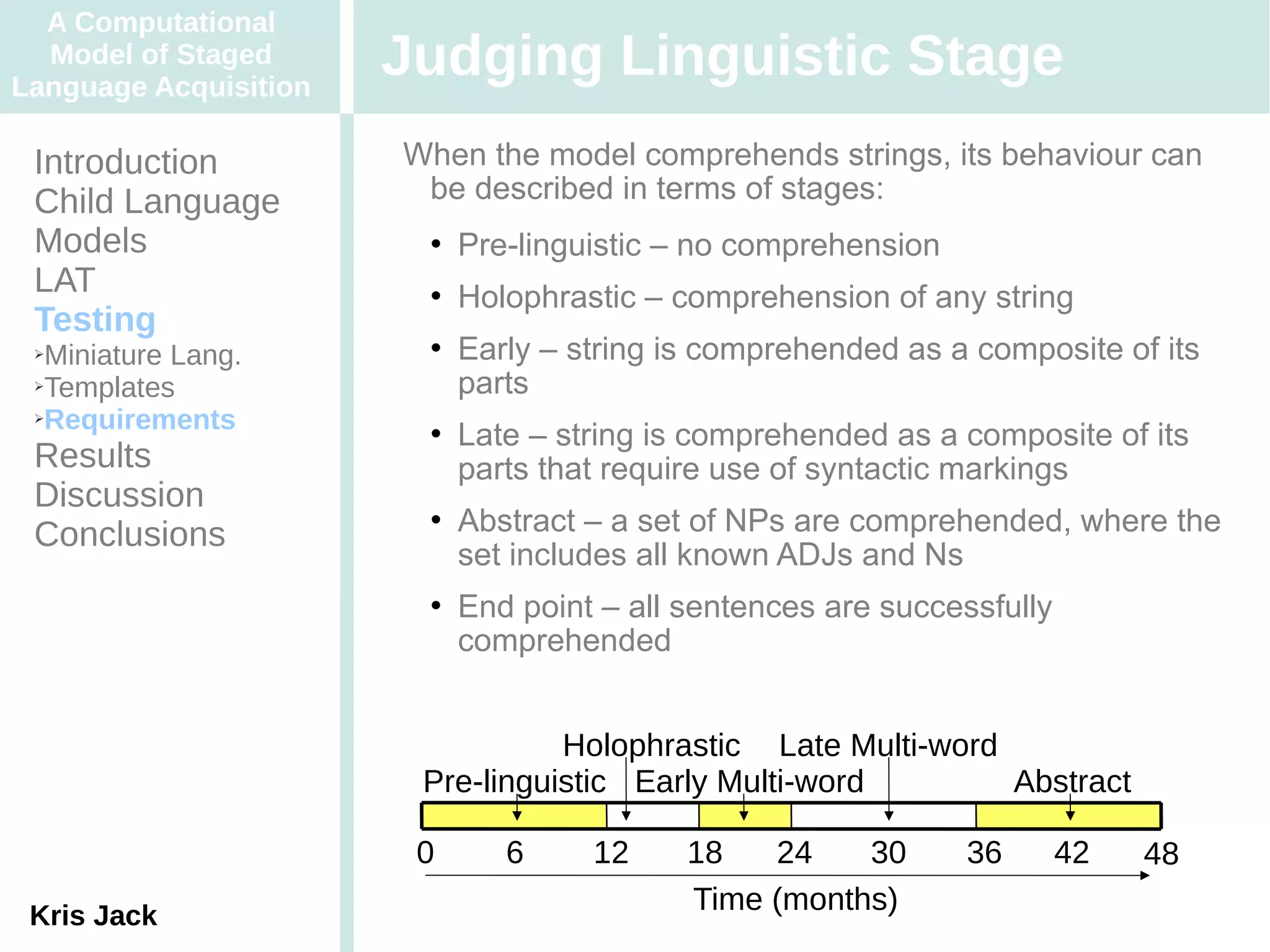

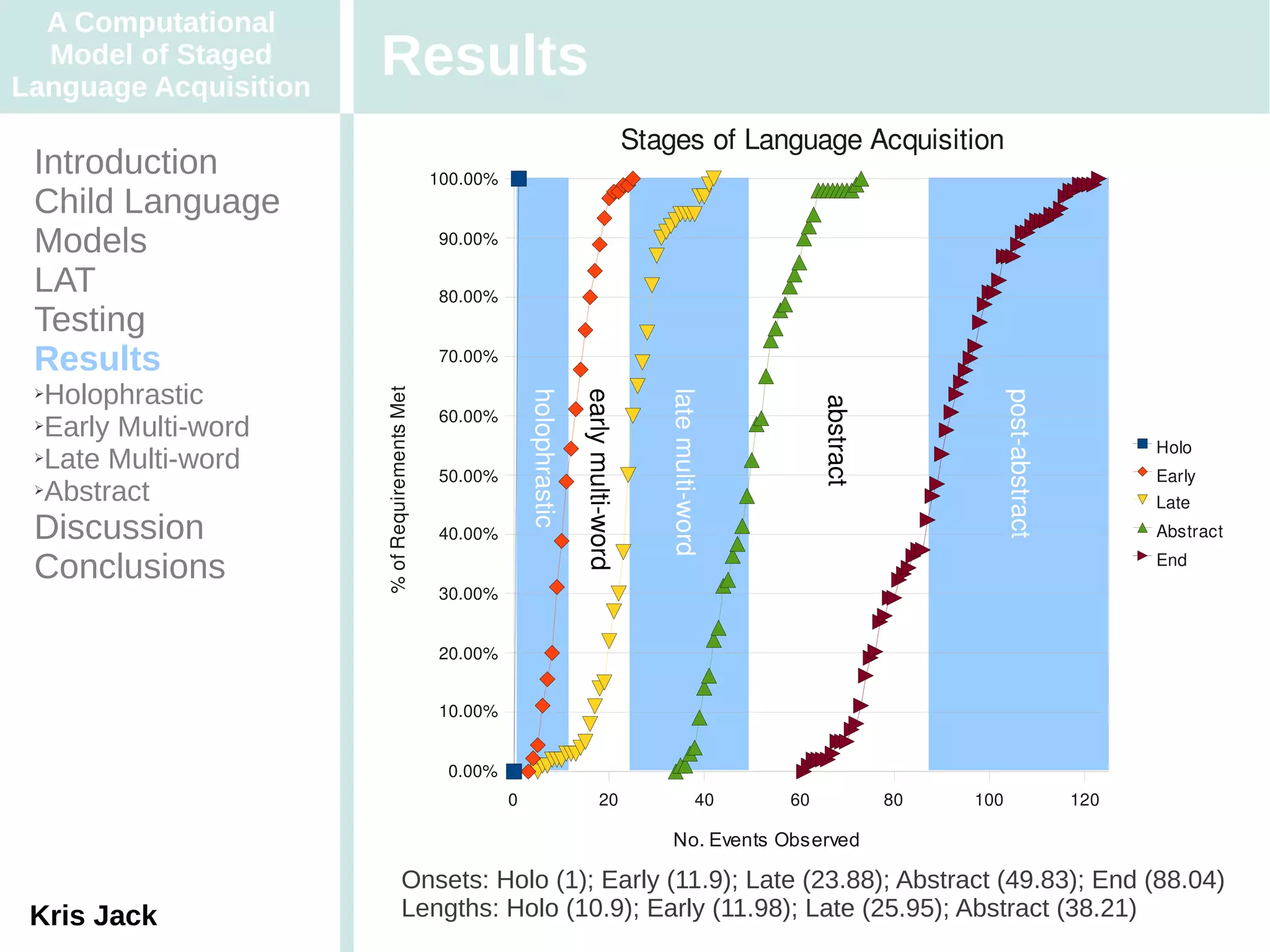

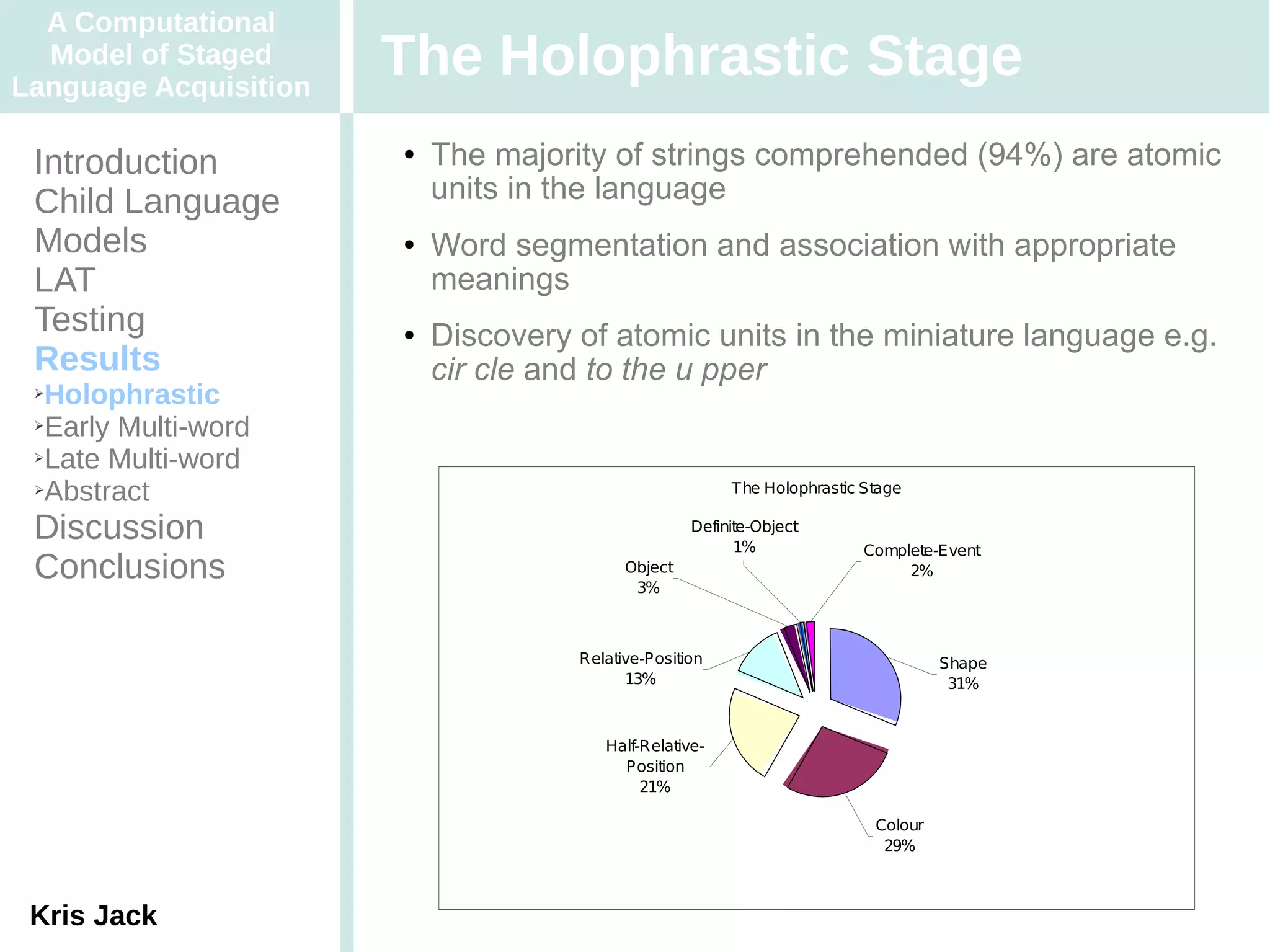

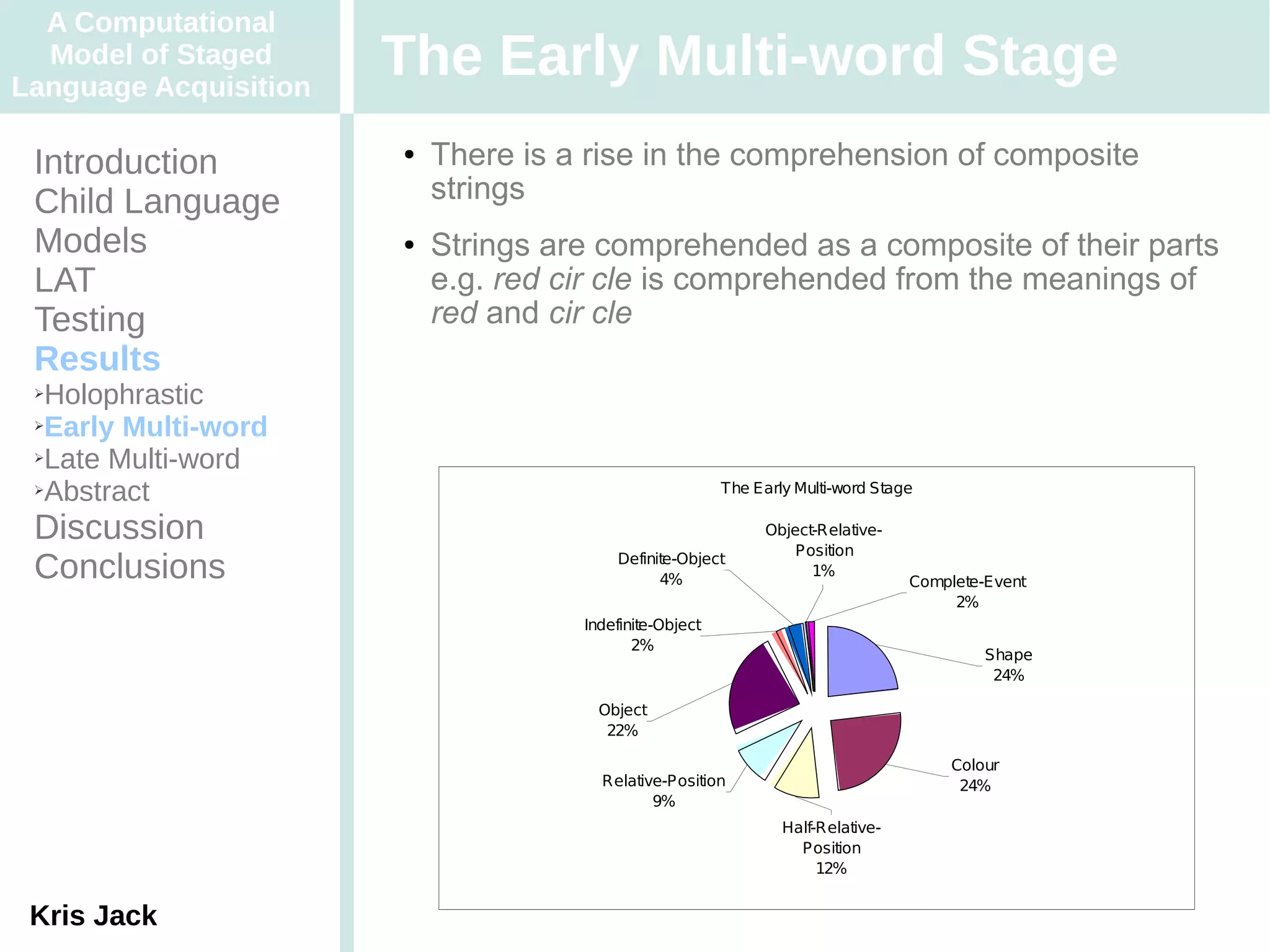

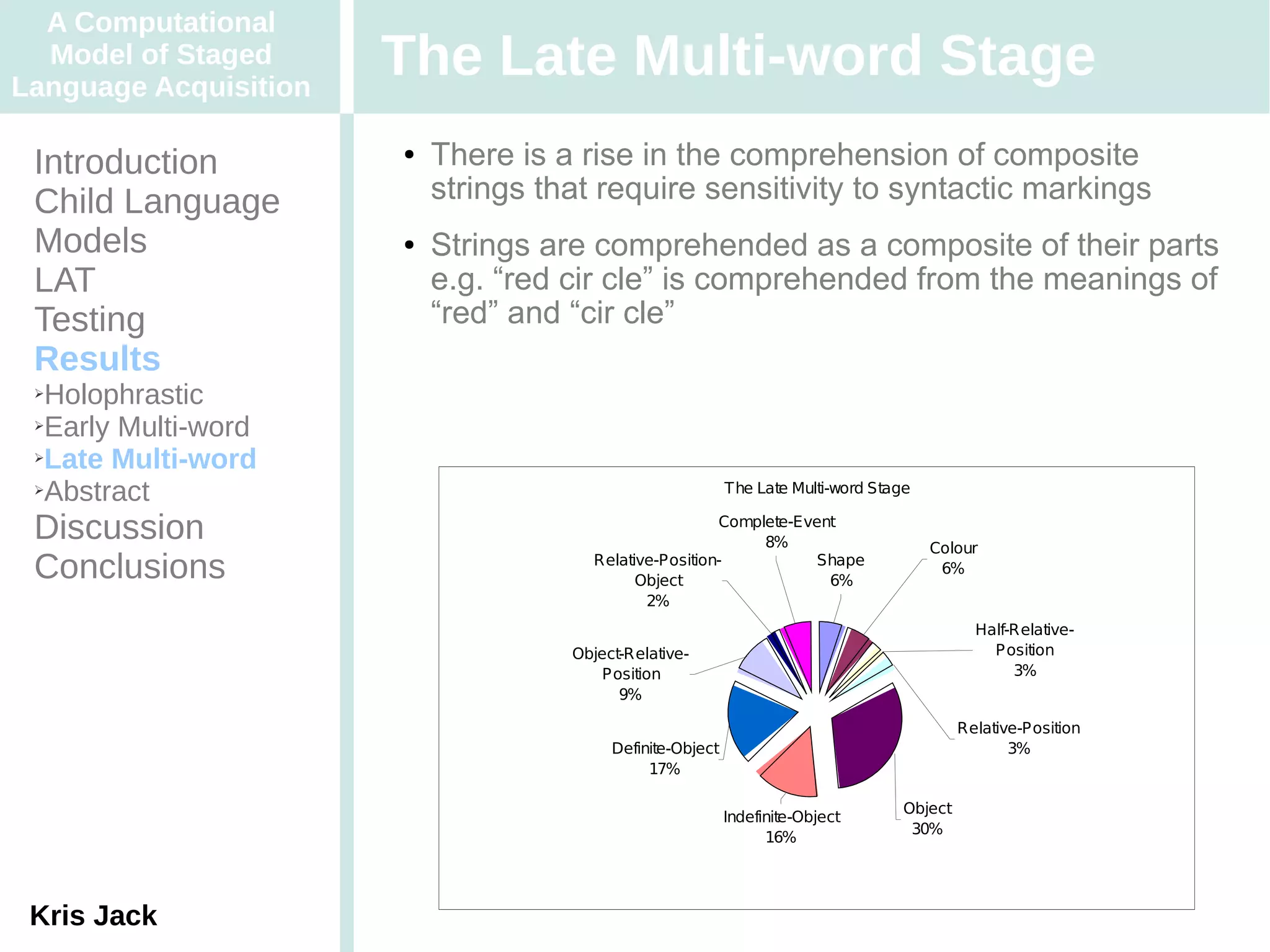

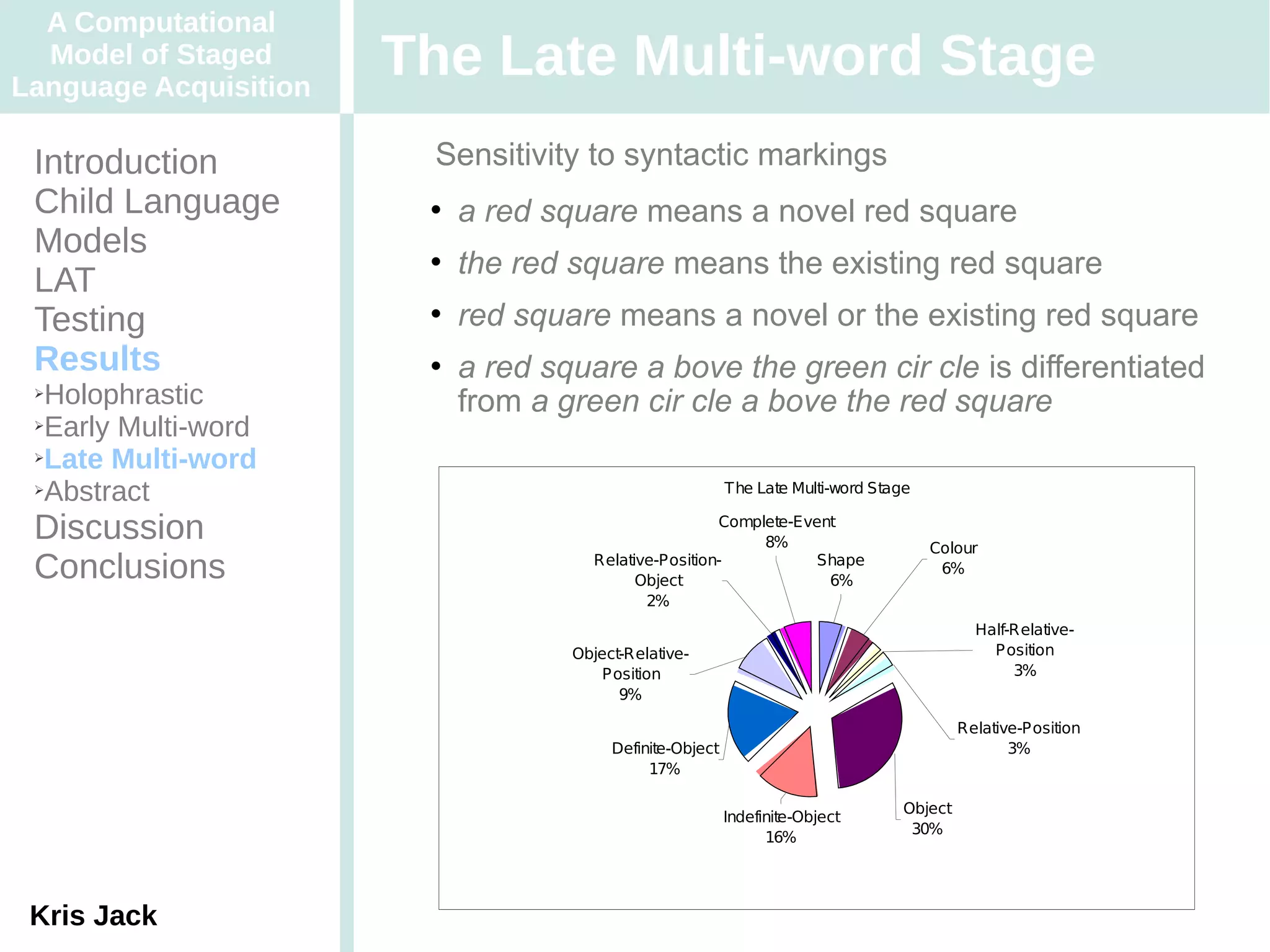

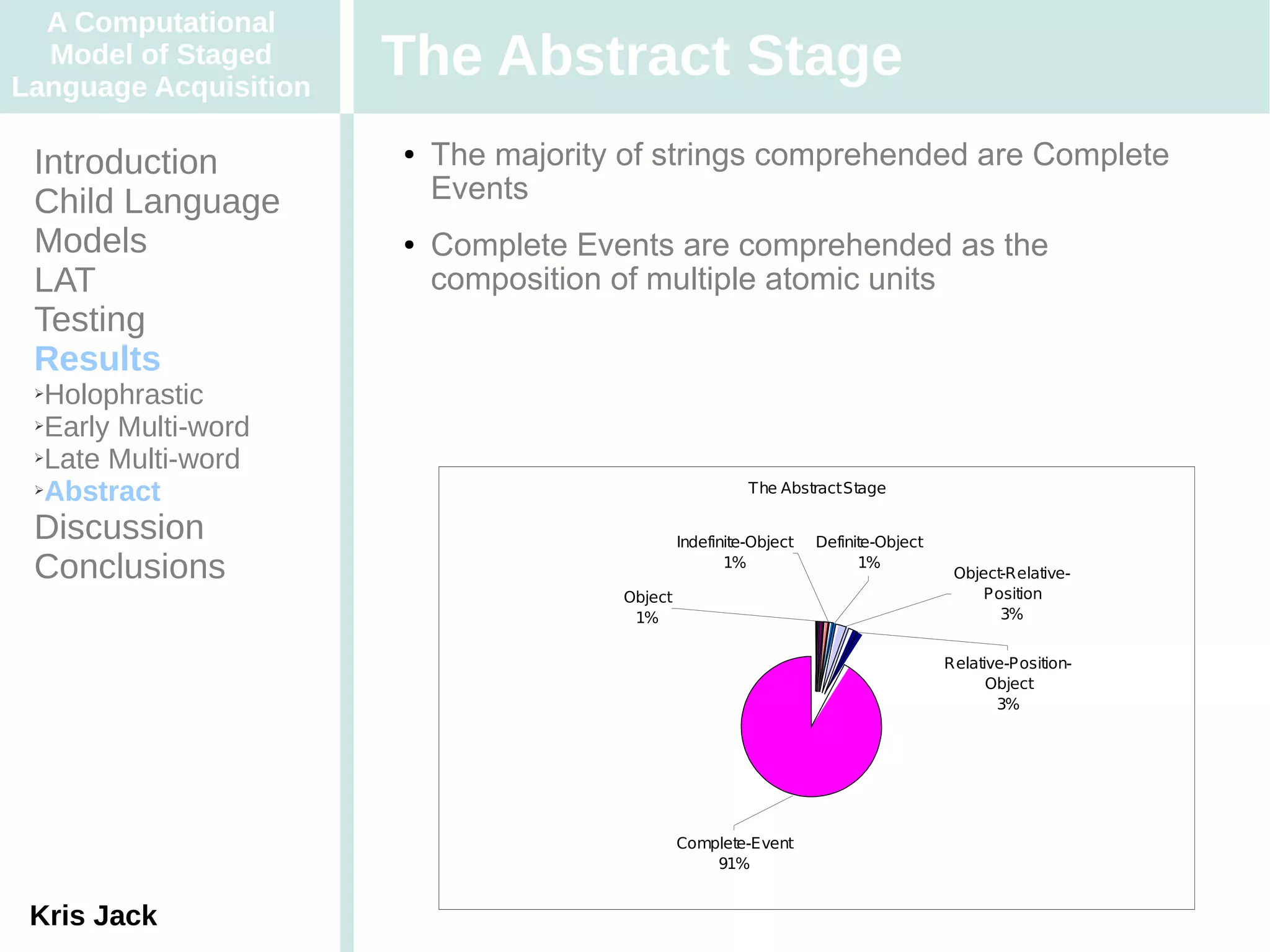

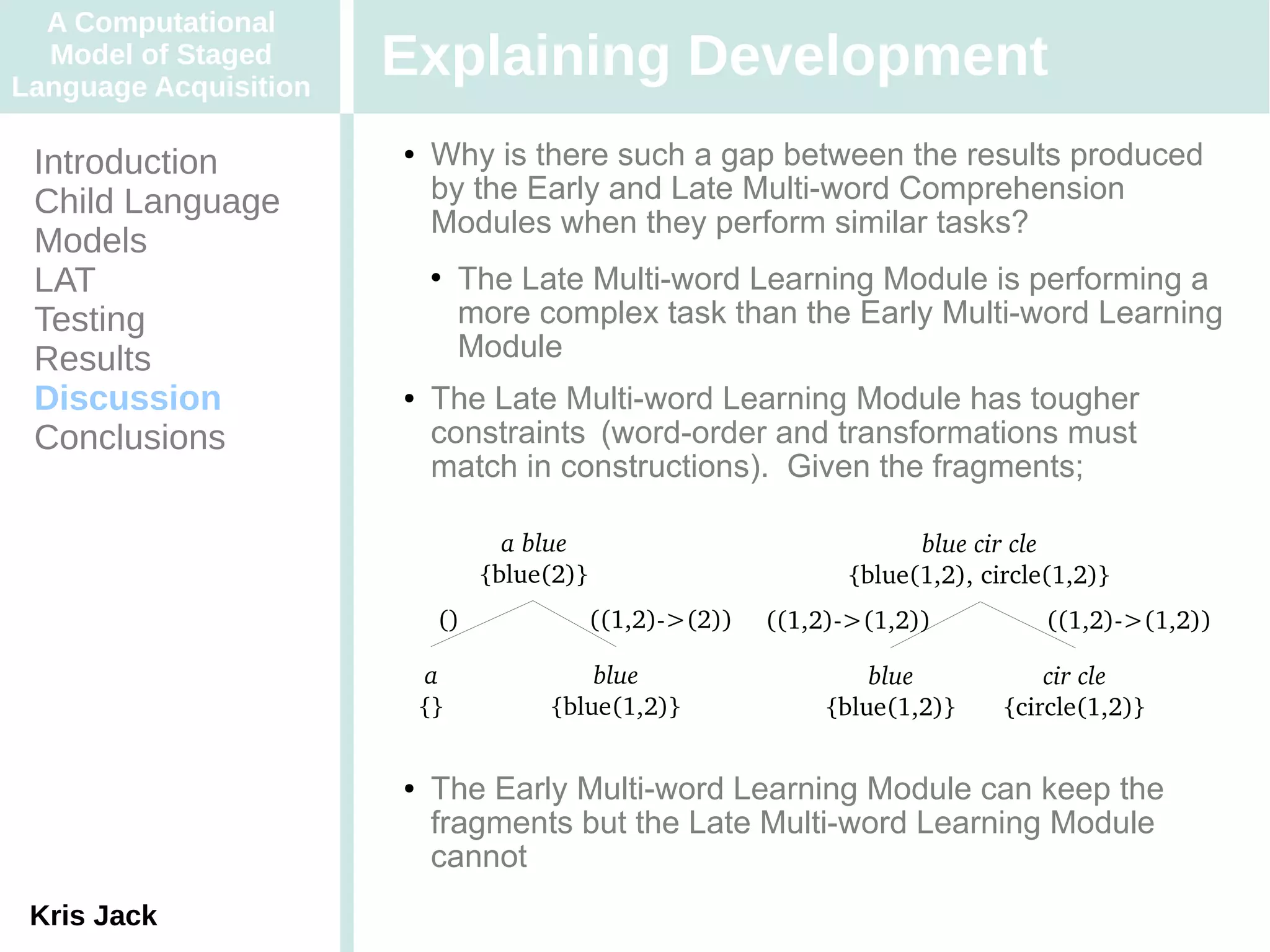

The document presents a computational model of staged language acquisition. It outlines the stages children typically progress through: pre-linguistic, holophrastic, early multi-word, late multi-word, and abstract. It discusses the role of computational modeling in understanding language development and notes that the existing literature lacks a comprehensive model. The model aims to provide insight into the linguistic tasks children face and proposes methodologies for simulating language acquisition.