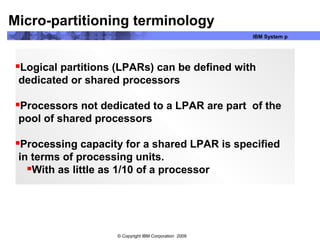

Virtualization allows workloads to be consolidated onto fewer physical servers, improving efficiency. IBM's PowerVM divides one physical server into multiple logical partitions (LPARs), each able to run its own operating system. Key PowerVM technologies include Micro-Partitioning, which allocates fractions of physical processors to LPARs; dynamic LPAR (DLPAR), which moves resources between active partitions; and Virtual I/O Servers, which let partitions share physical network and storage adapters. Together, these technologies improve utilization, flexibility, and availability compared with running each workload on a separate physical server.
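To make the idea of dividing processors among partitions concrete, here is a minimal toy model in Python. It is only a sketch: the `Pool` class, its methods, and the LPAR names are hypothetical and do not correspond to any PowerVM or HMC interface. It assumes that each LPAR holds a fractional processor entitlement, that the entitlements may not exceed the physical cores in the pool, and that a dynamic move shifts entitlement from one running partition to another.

```python
from dataclasses import dataclass, field


@dataclass
class Pool:
    """Toy model of a shared processor pool (hypothetical, not a PowerVM API)."""
    physical_cores: float
    # LPAR name -> entitled processing units (fractions of a core allowed)
    entitlements: dict = field(default_factory=dict)

    def free(self) -> float:
        """Processing units not yet assigned to any LPAR."""
        return round(self.physical_cores - sum(self.entitlements.values()), 2)

    def create_lpar(self, name: str, units: float) -> None:
        """Assign a fractional entitlement, refusing to over-commit the pool."""
        if units <= 0:
            raise ValueError("entitlement must be positive")
        if units > self.free():
            raise ValueError(f"only {self.free()} units free, {units} requested")
        self.entitlements[name] = units

    def move(self, src: str, dst: str, units: float) -> None:
        """Shift entitlement between two existing LPARs (DLPAR-style move)."""
        if units <= 0 or units >= self.entitlements[src]:
            raise ValueError("source LPAR must keep some entitlement")
        self.entitlements[src] = round(self.entitlements[src] - units, 2)
        self.entitlements[dst] = round(self.entitlements[dst] + units, 2)


# Four physical cores shared by three partitions (names are illustrative).
pool = Pool(physical_cores=4.0)
pool.create_lpar("web", 1.5)
pool.create_lpar("db", 2.0)
pool.create_lpar("test", 0.5)

# Rebalance while both partitions stay defined: db gives 0.5 units to web.
pool.move("db", "web", 0.5)
print(pool.entitlements)
```

The model deliberately ignores memory, I/O adapters, and uncapped or shared-pool scheduling; it only illustrates why fractional allocation and live rebalancing can raise utilization compared with dedicating whole servers to each workload.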