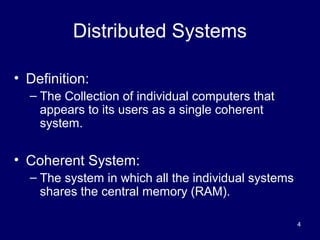

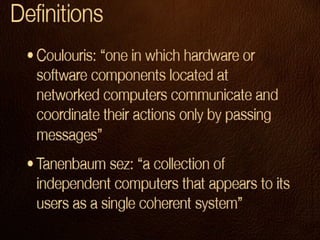

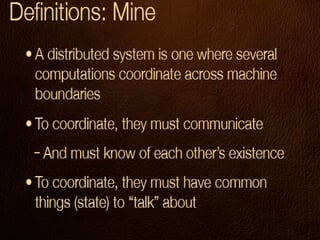

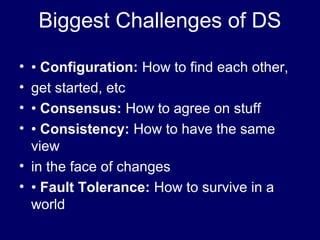

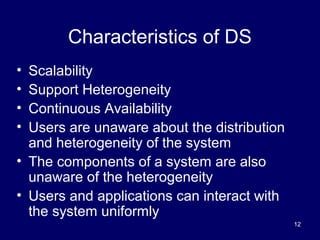

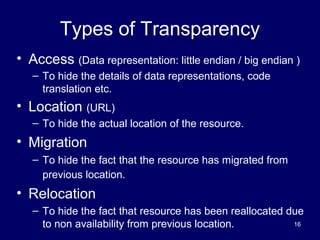

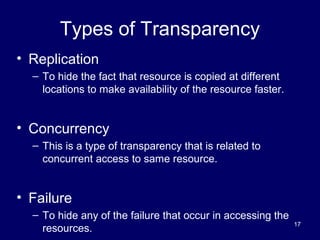

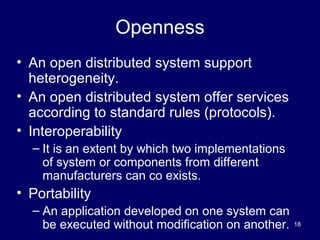

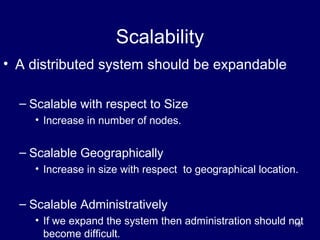

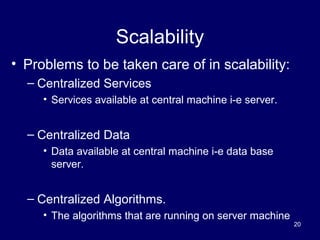

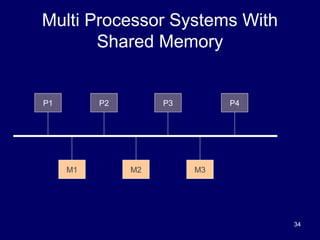

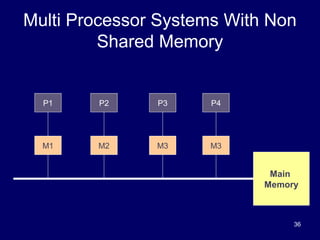

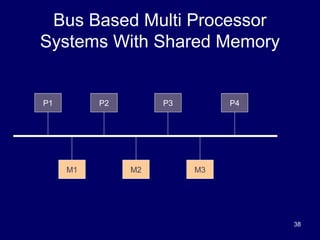

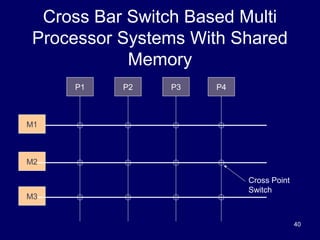

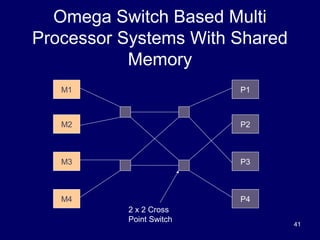

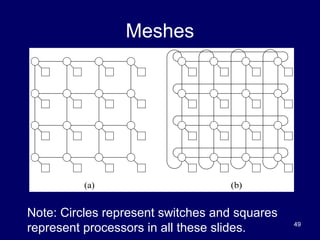

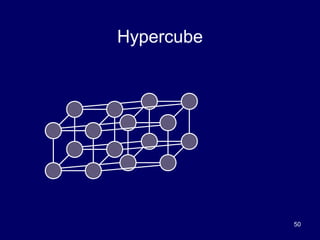

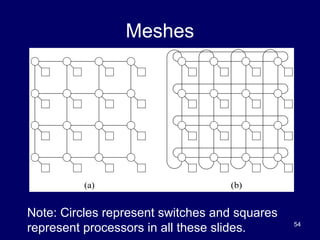

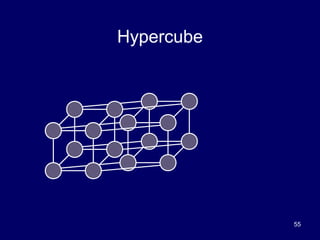

This document provides an overview of distributed systems and distributed computing. It defines a distributed system as a collection of independent computers that appears to its users as a single coherent system. It discusses the advantages and goals of distributed systems: connecting users and resources, transparency, openness, and scalability. It also covers hardware concepts, distinguishing multiprocessor systems, in which the processors share memory, from multicomputer systems, in which each computer has its own private memory and which can be homogeneous or heterogeneous.