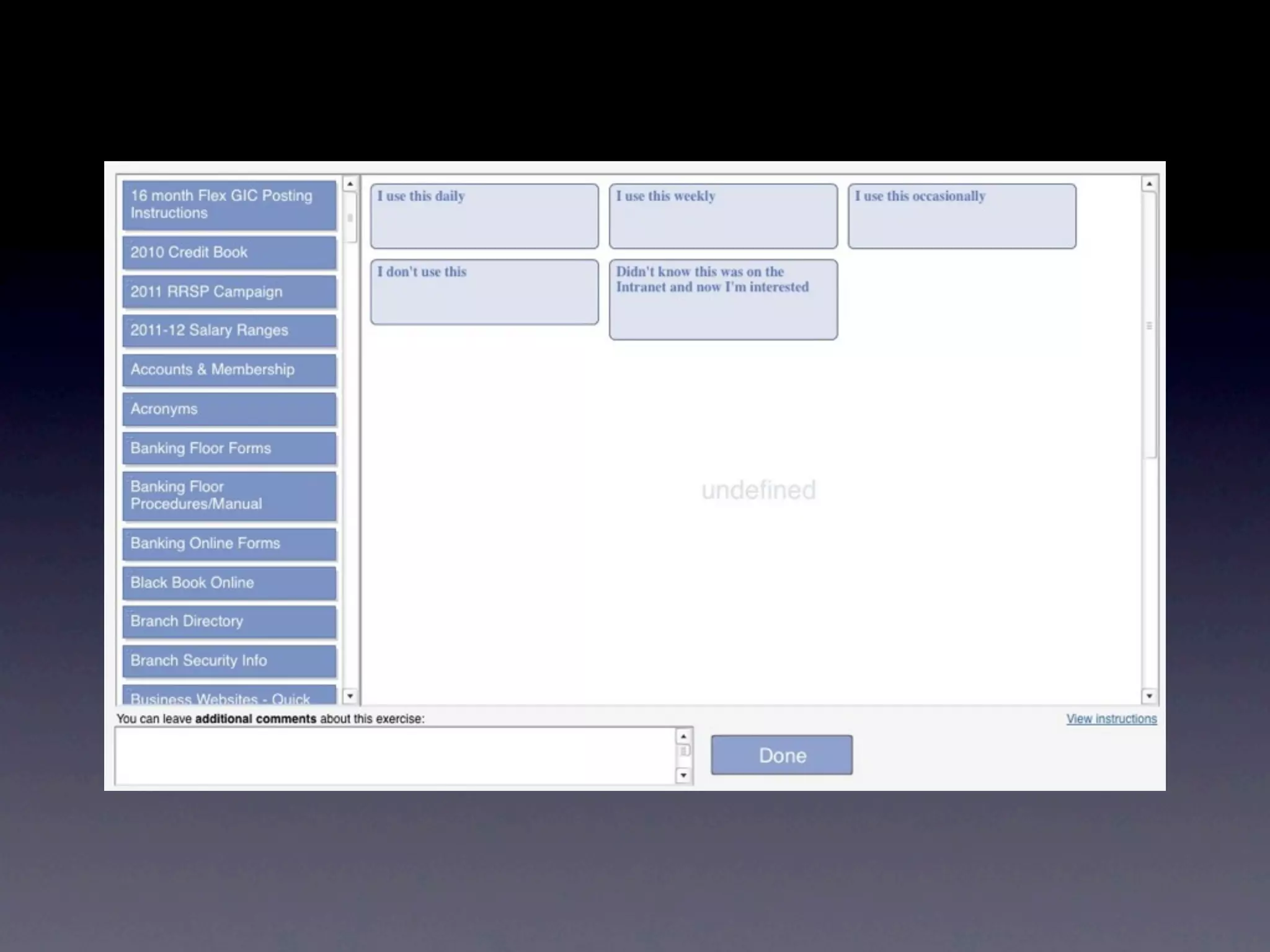

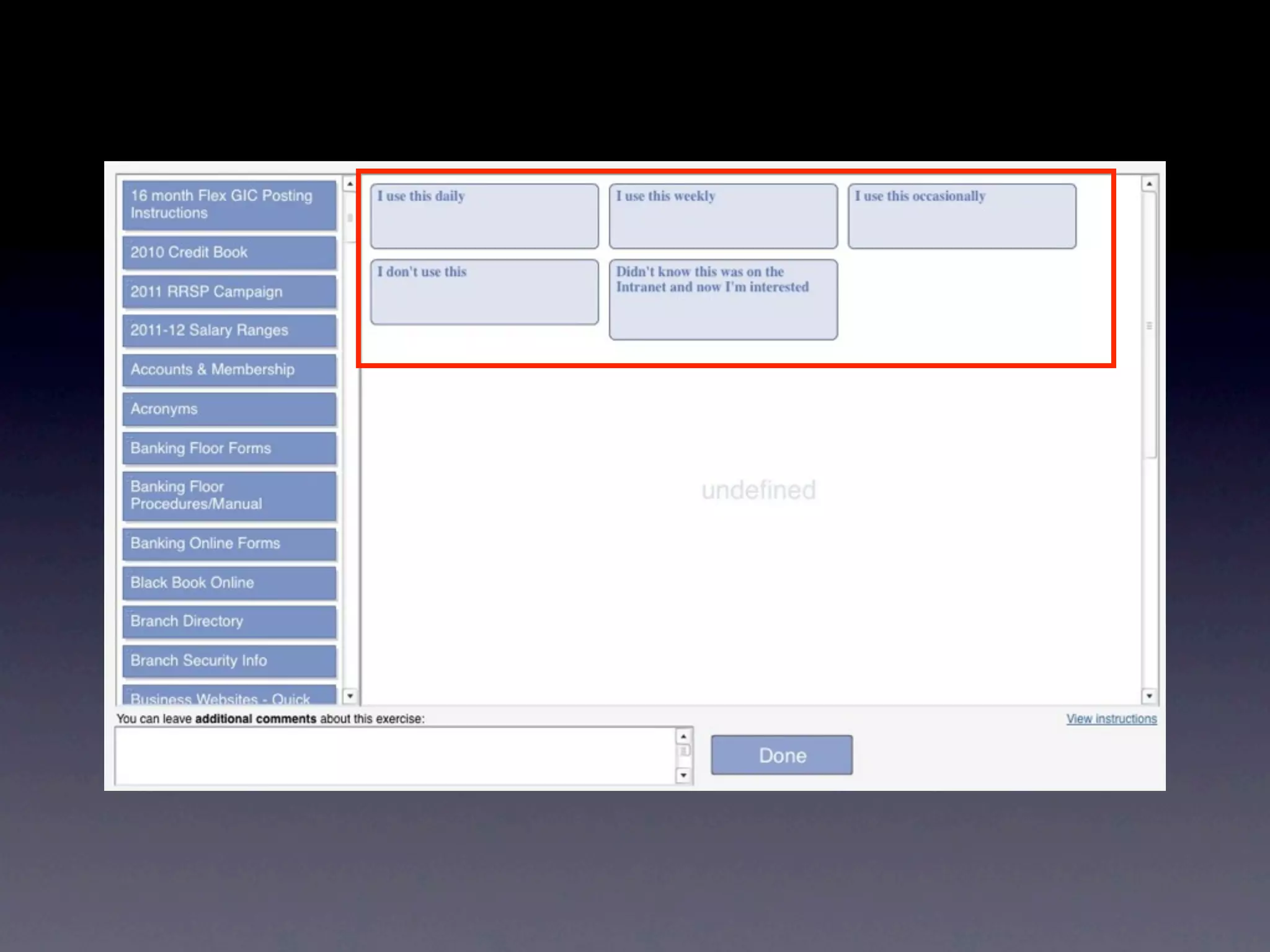

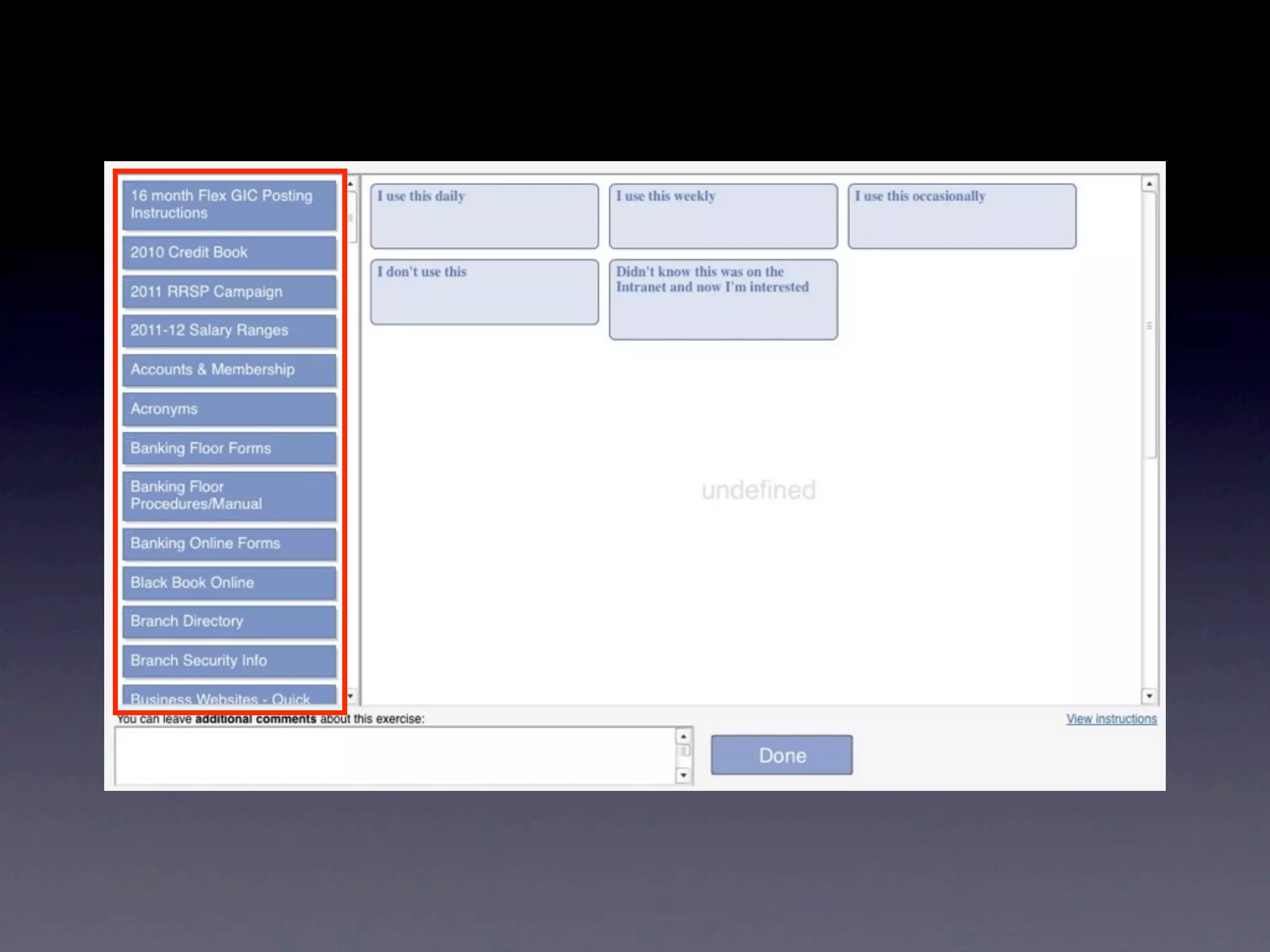

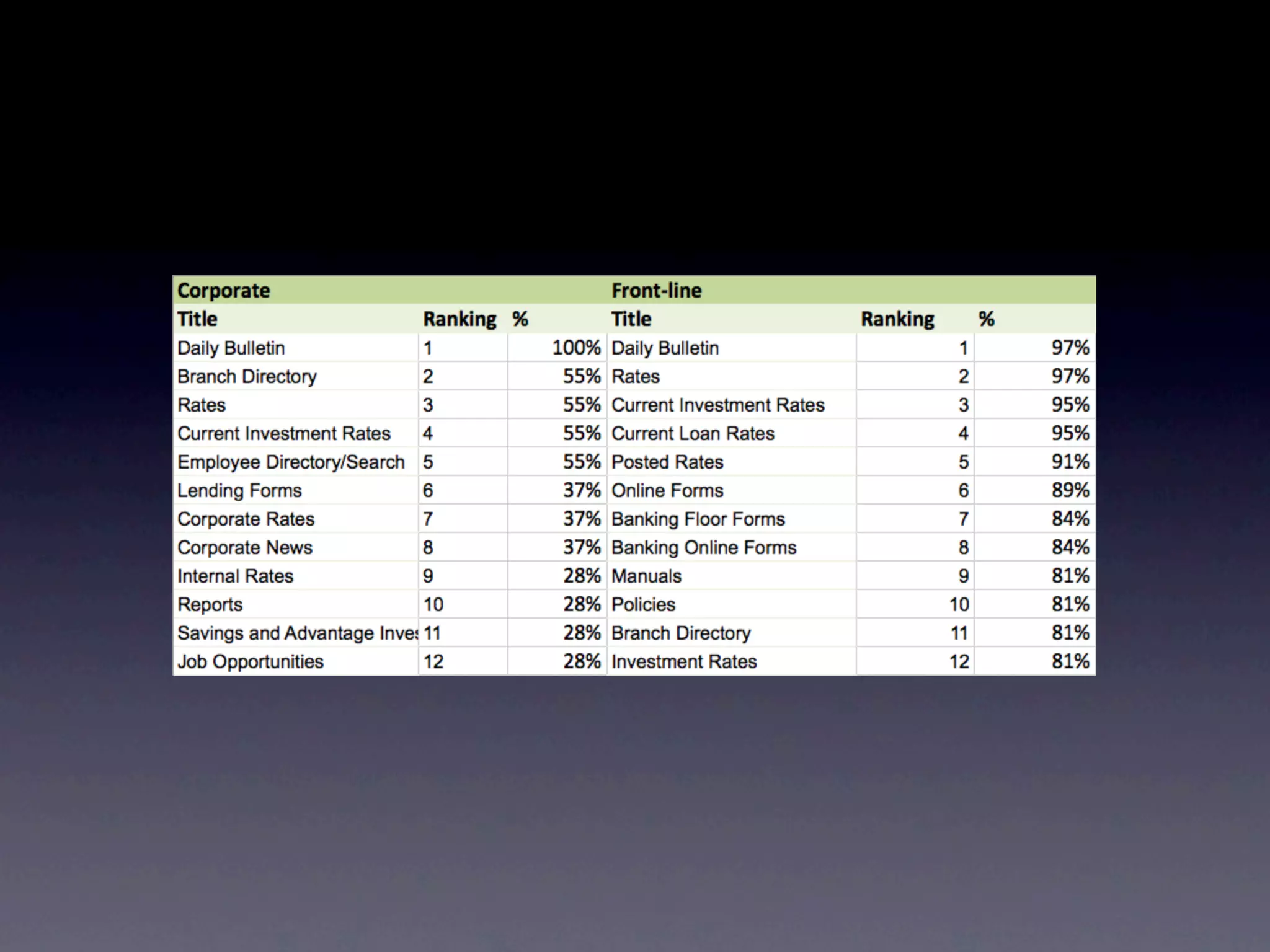

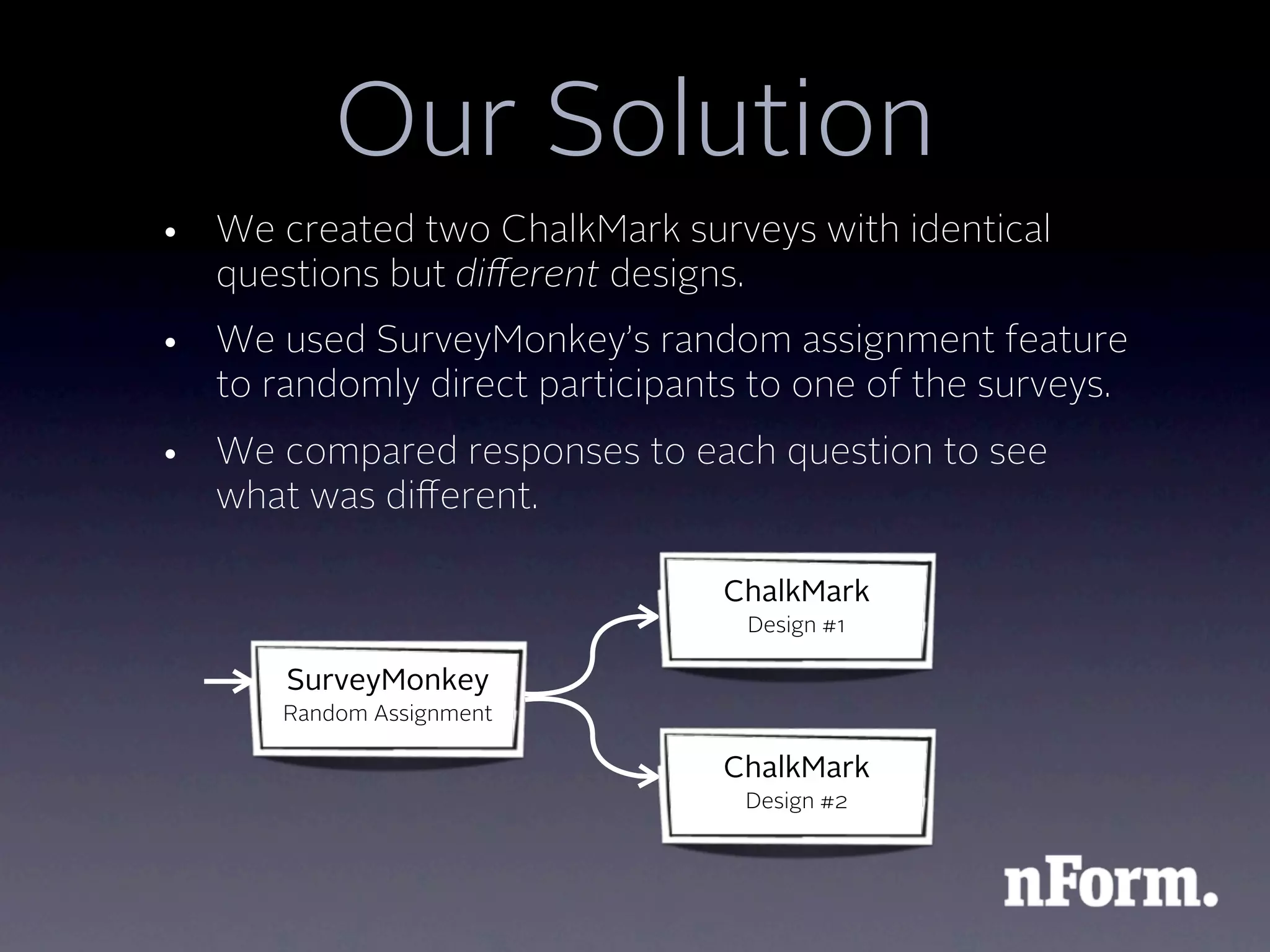

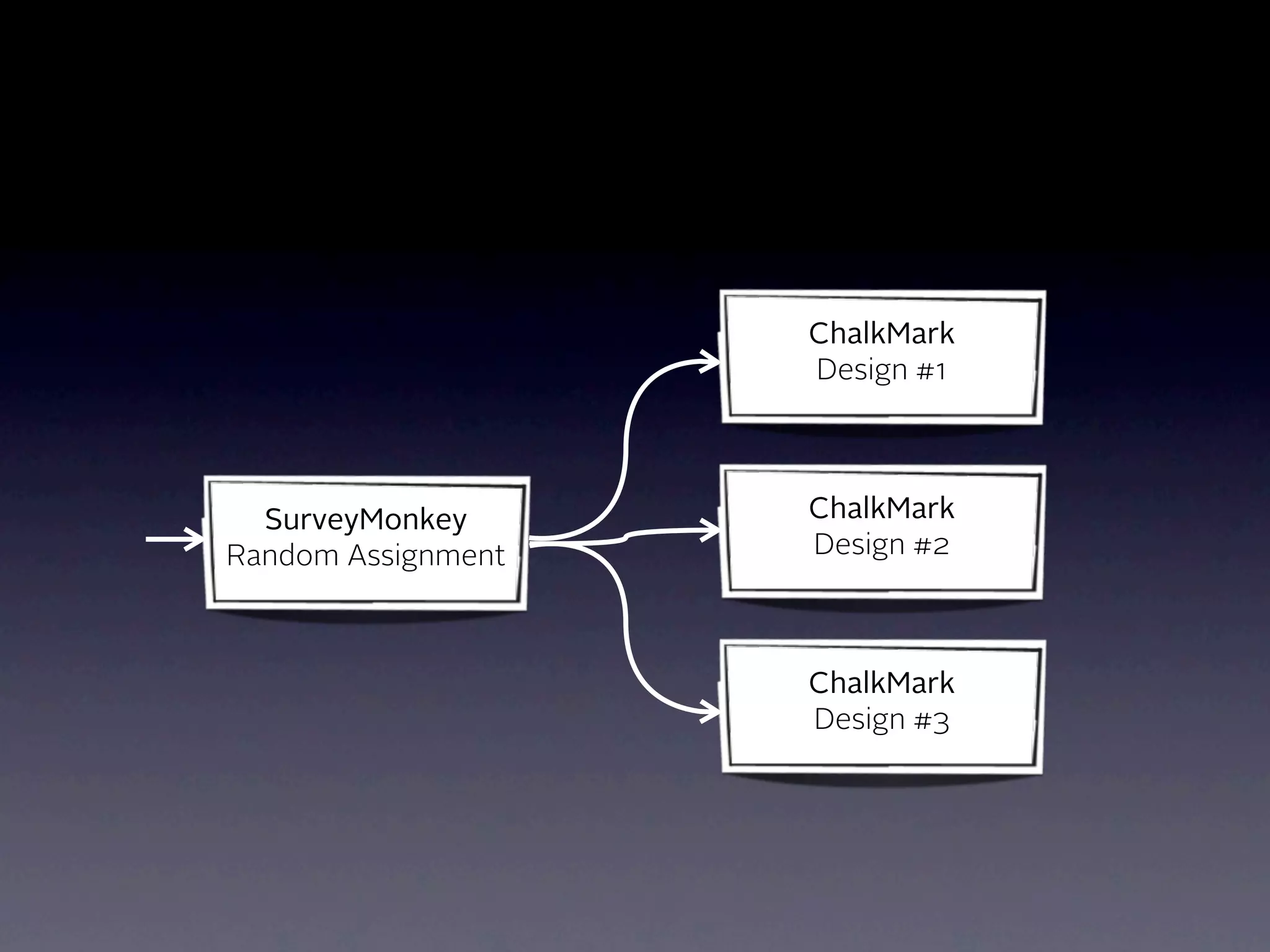

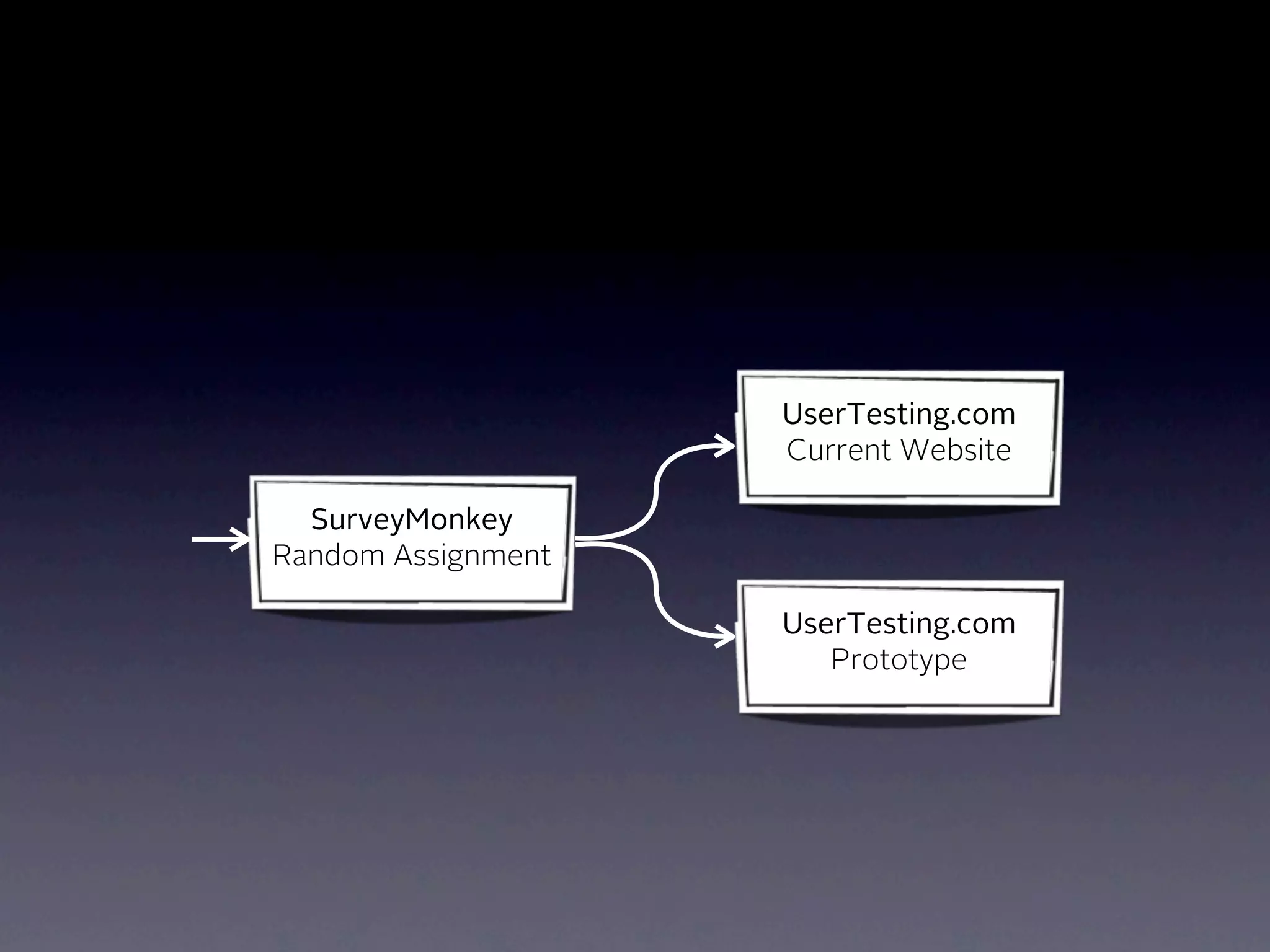

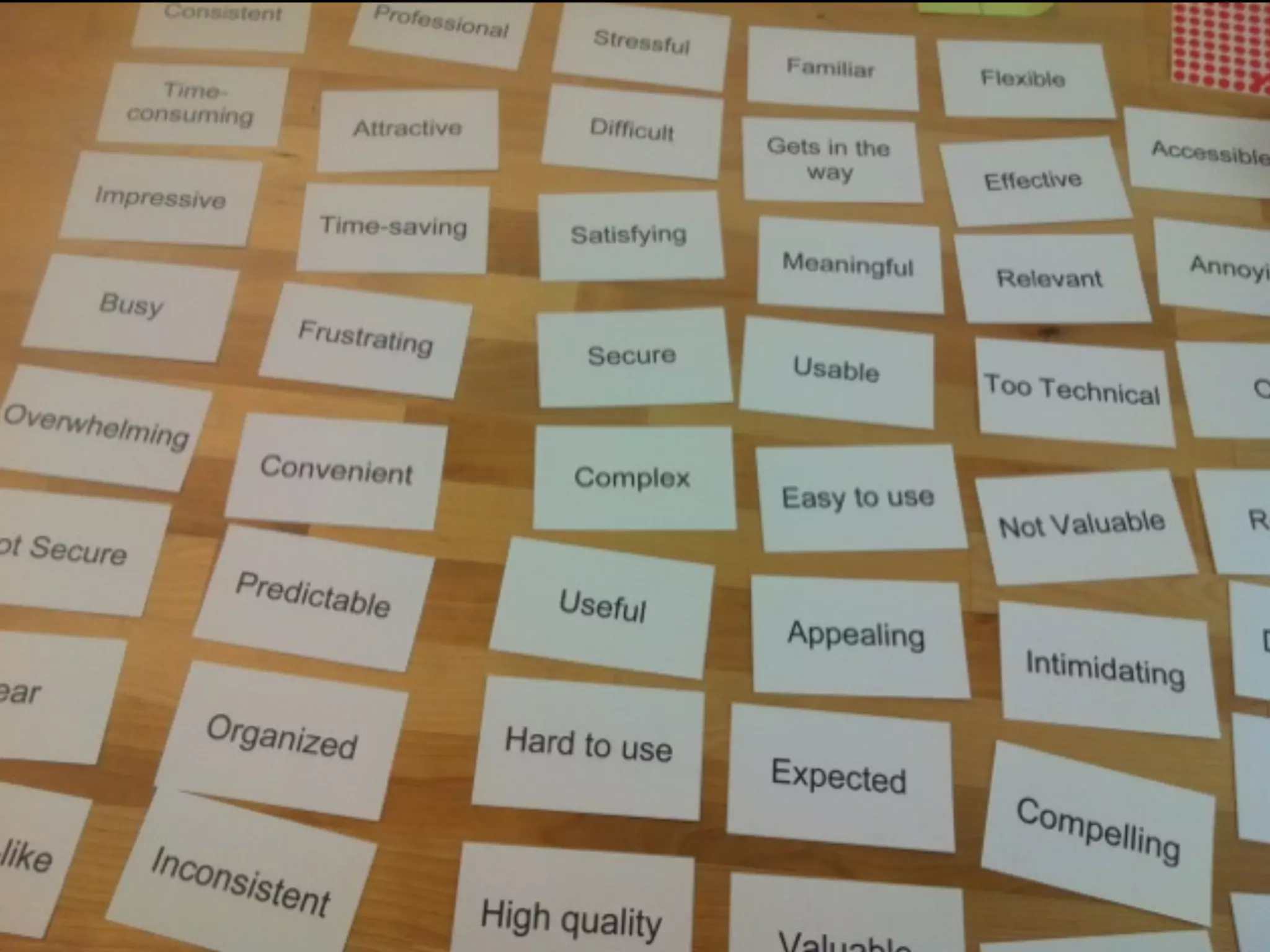

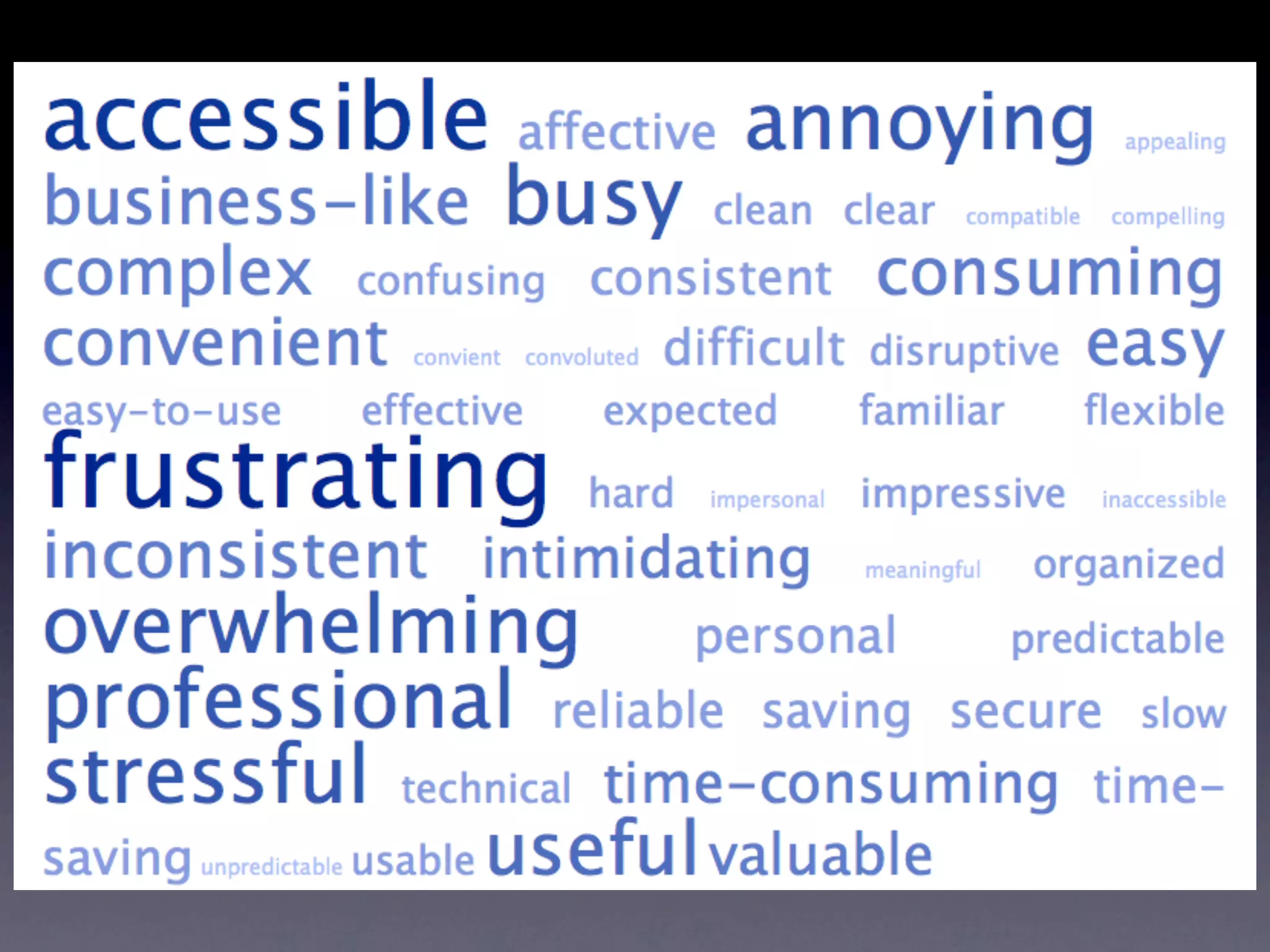

This document summarizes three user research hacks presented by Gene Smith at UX Lisbon in 2012. The hacks are kludgy but effective ways to conduct user research when clients have small budgets, limited time, or little executive support. The first hack uses a content prioritization card sort to understand users' information needs. The second uses A/B testing in SurveyMonkey to evaluate website mockups. The third draws out richer interview responses by having participants select emotion cards to describe their experiences. The goal is to offer low-cost, high-impact ideas for user research projects.