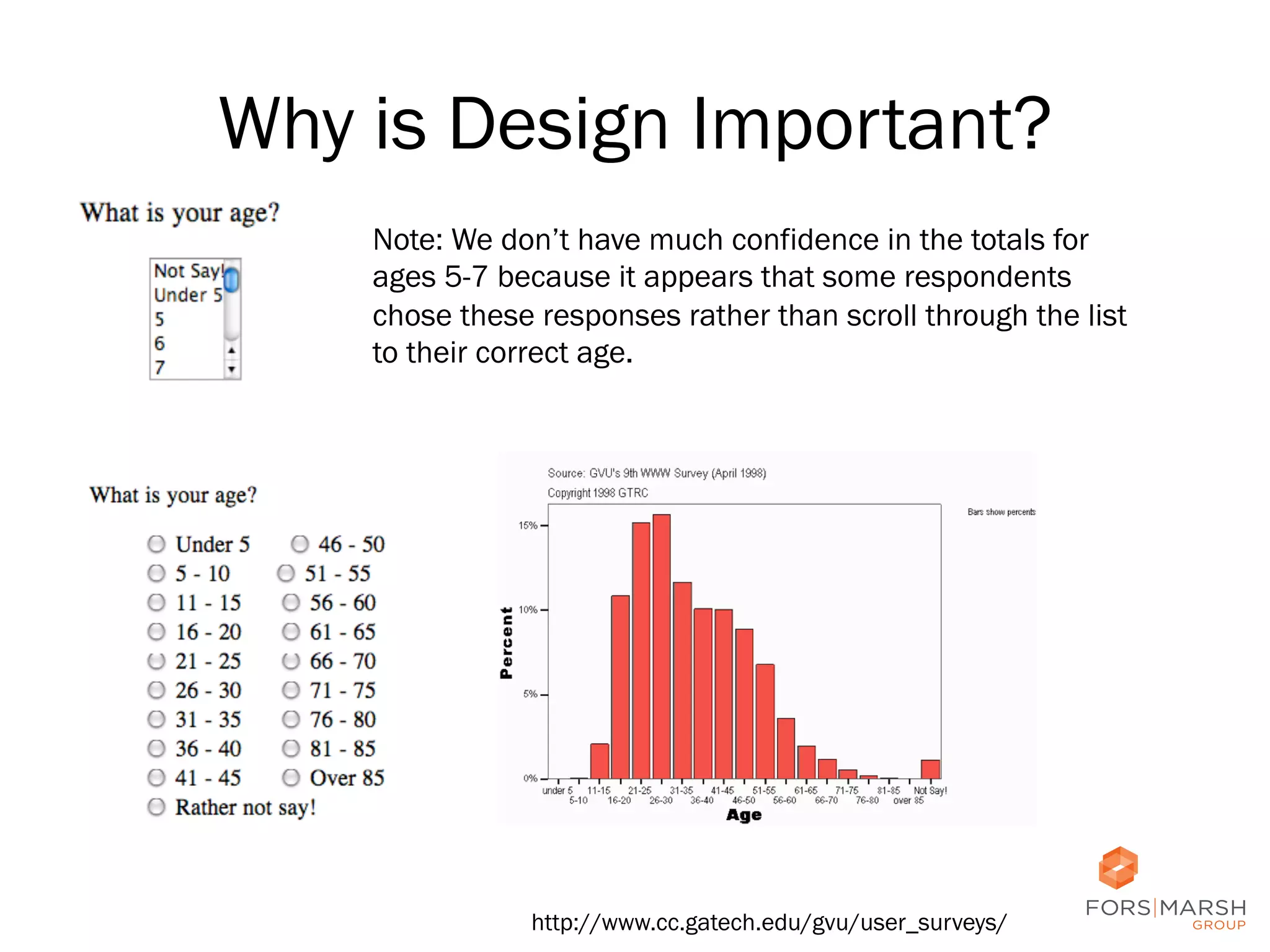

This document discusses best practices for designing usable government forms and surveys. It covers usability testing methods, navigation patterns, input field design, and the placement of instructions. Specifically, it recommends:

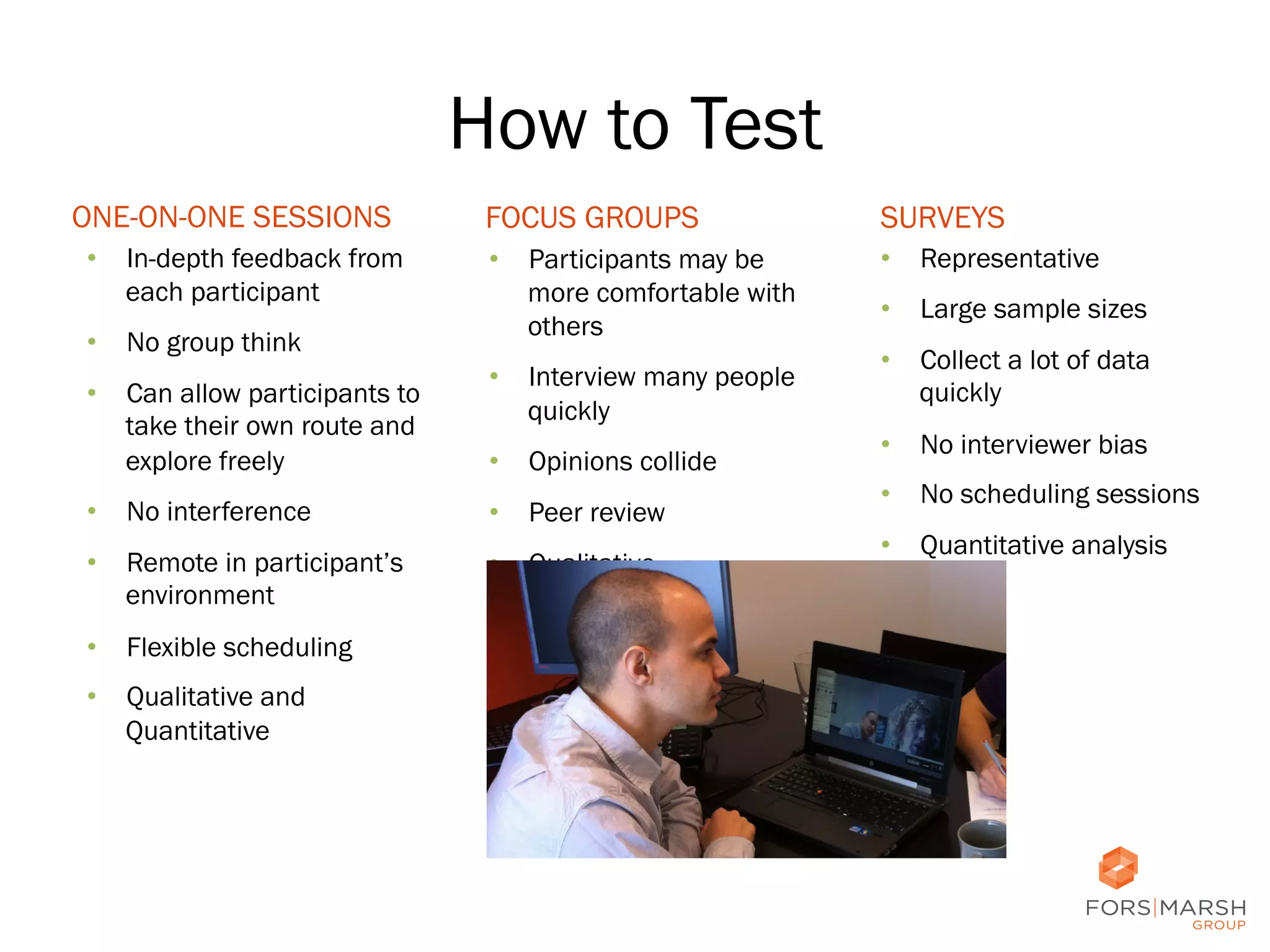

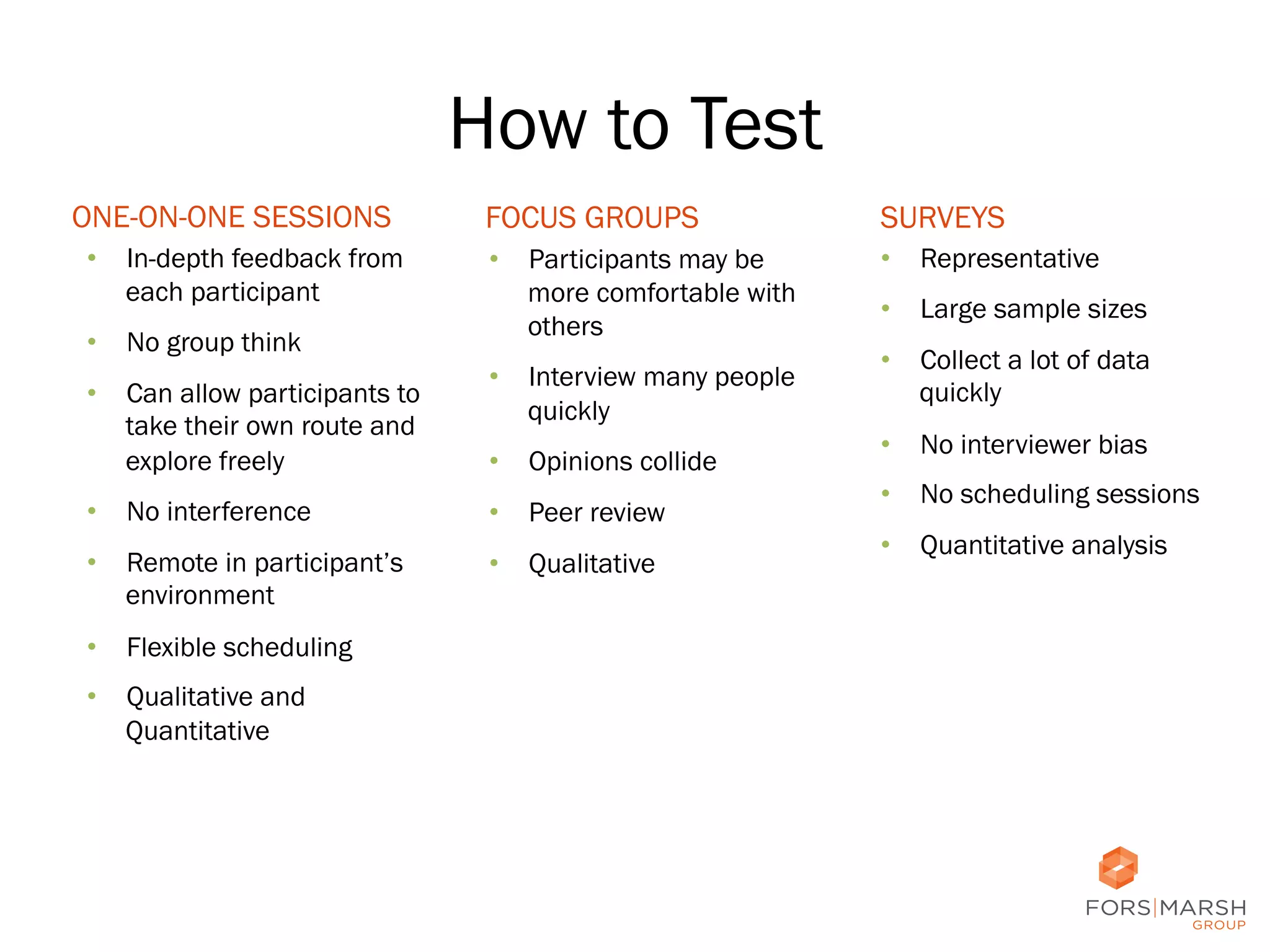

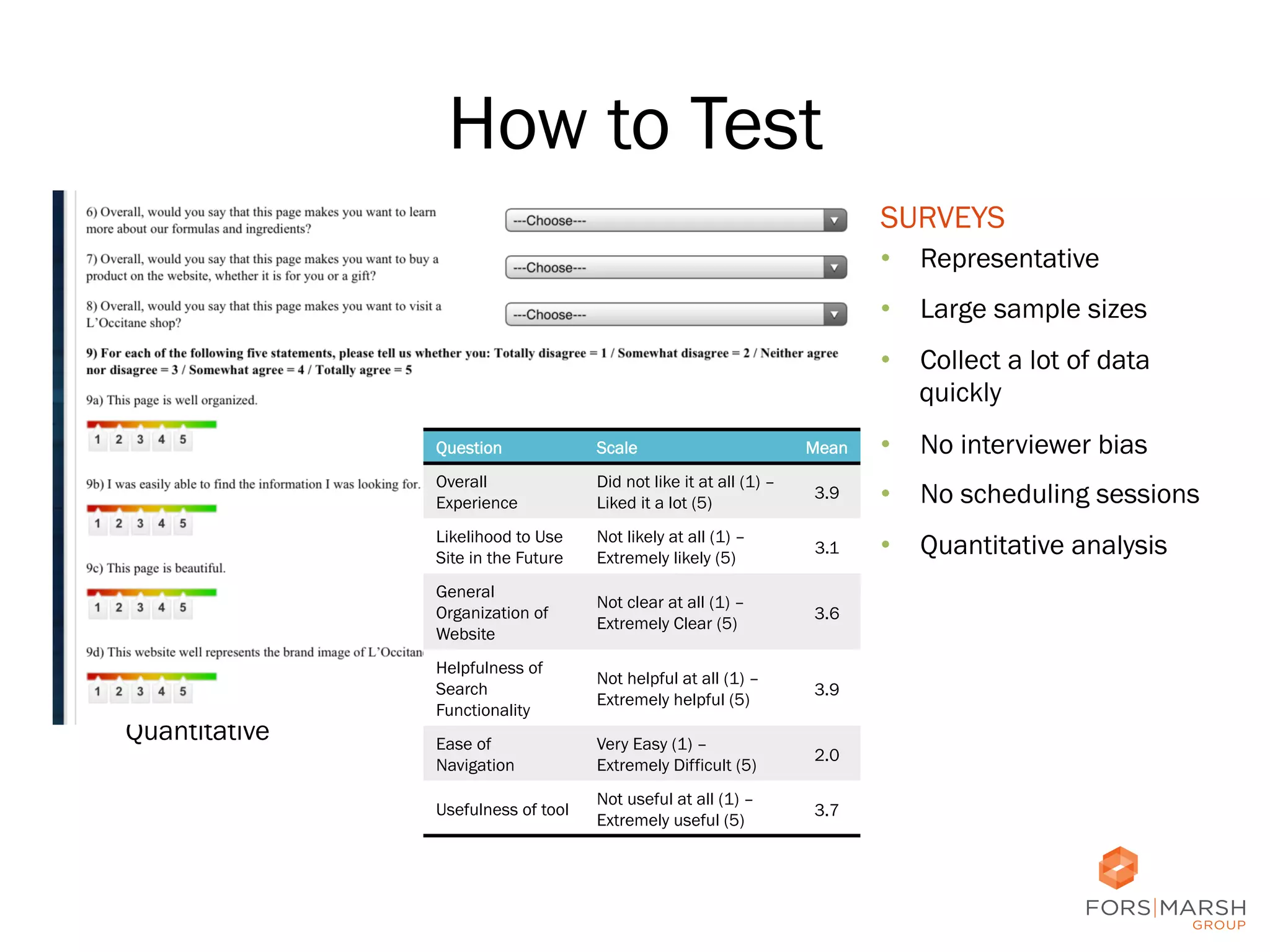

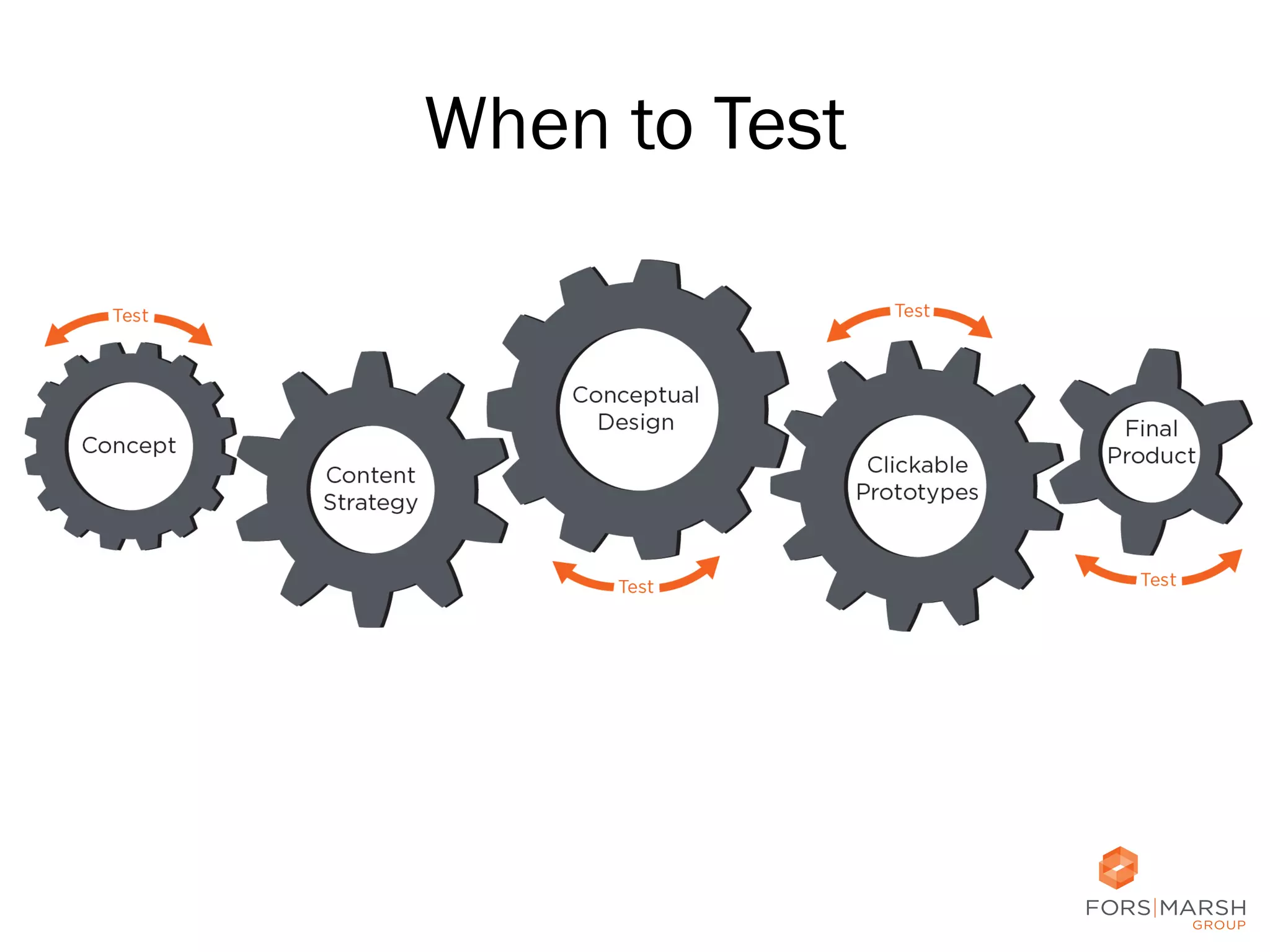

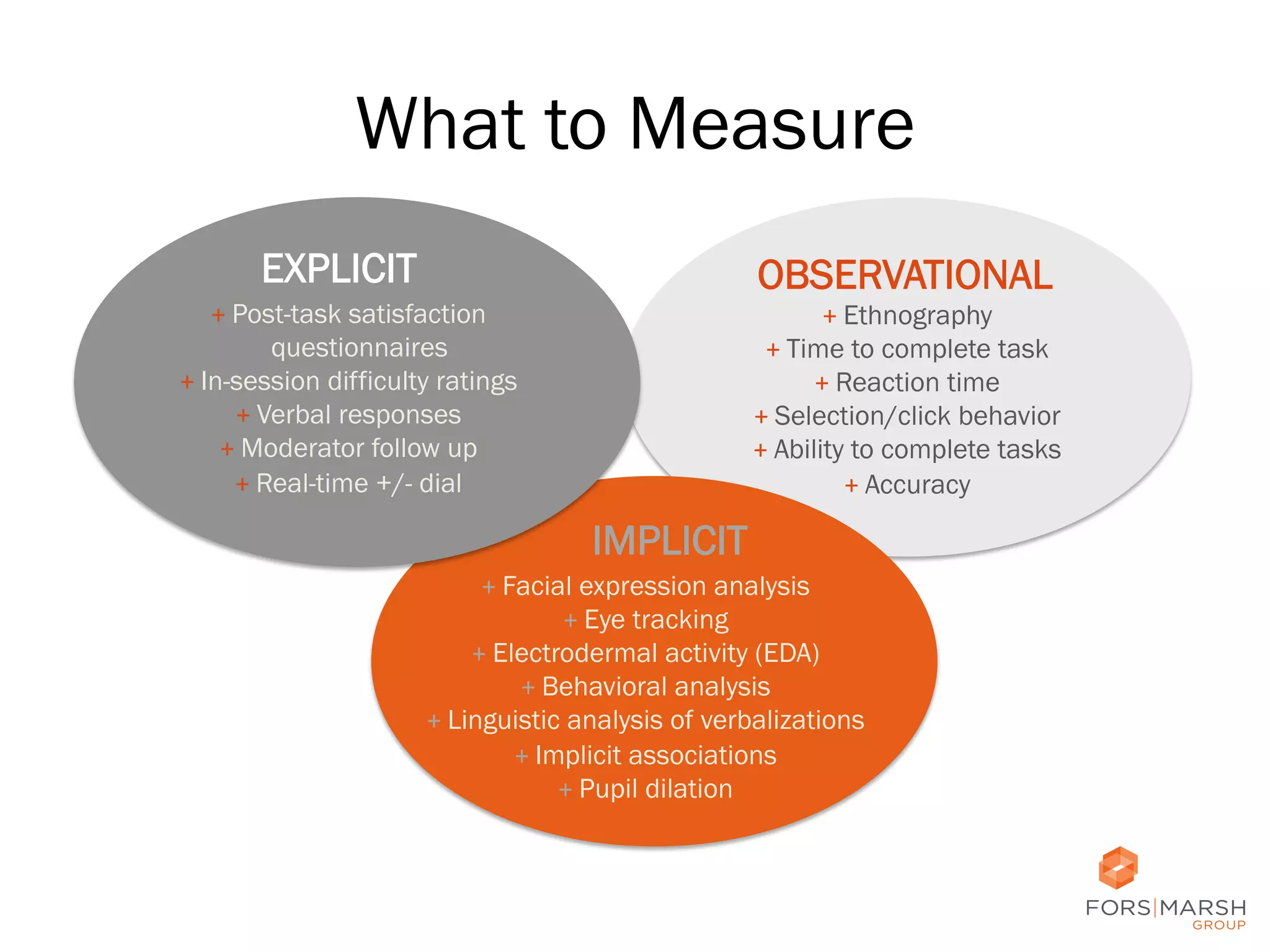

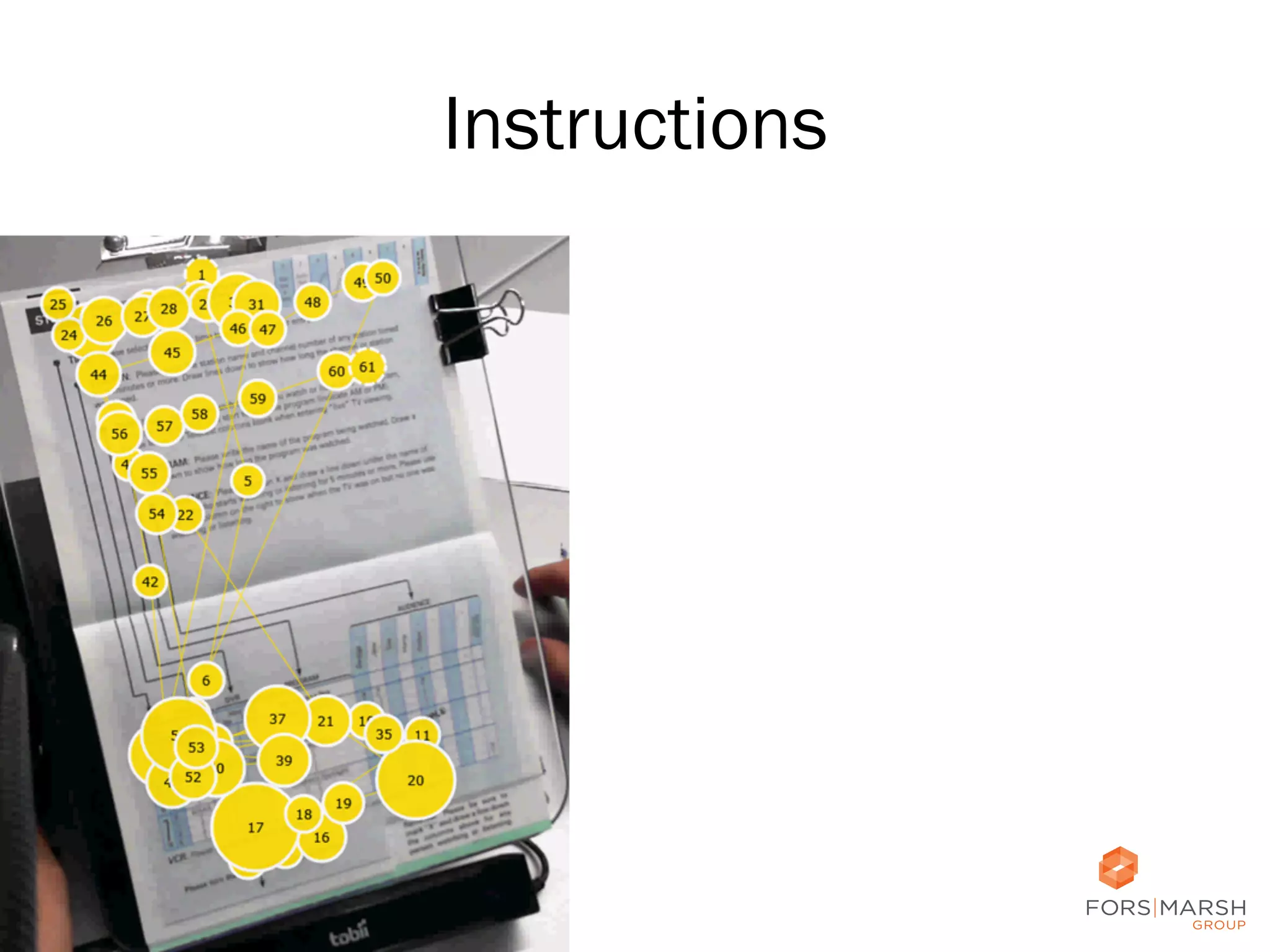

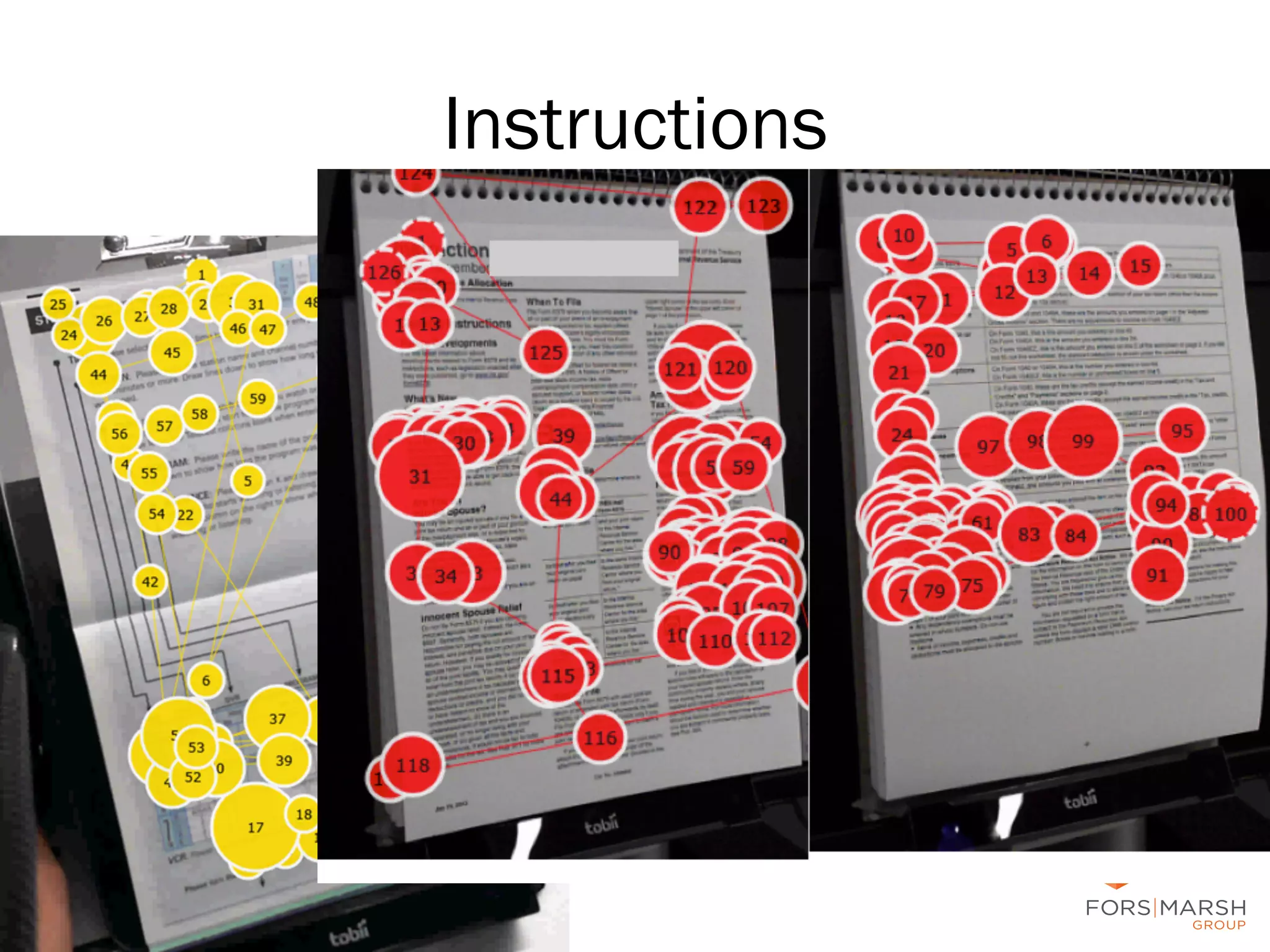

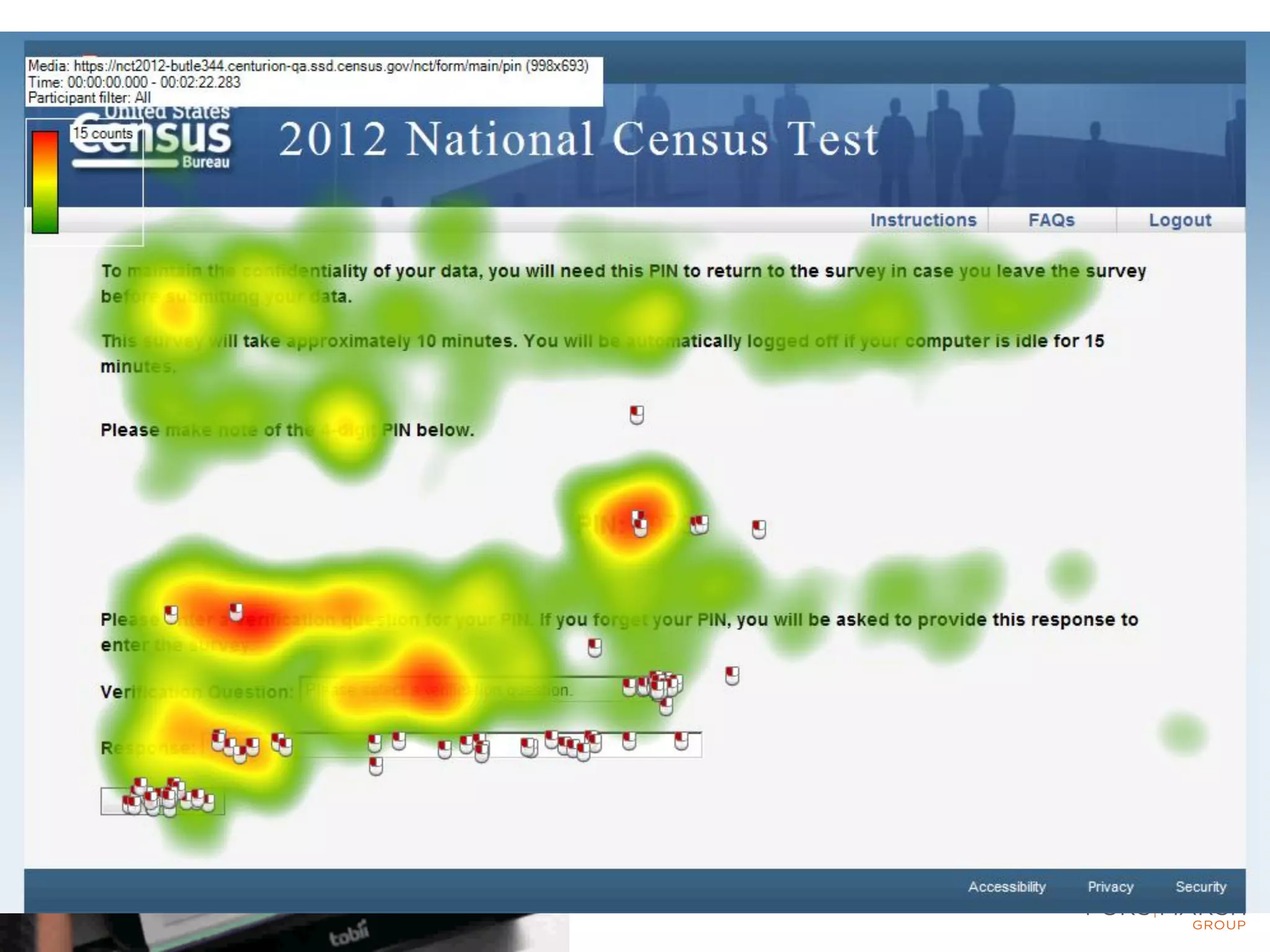

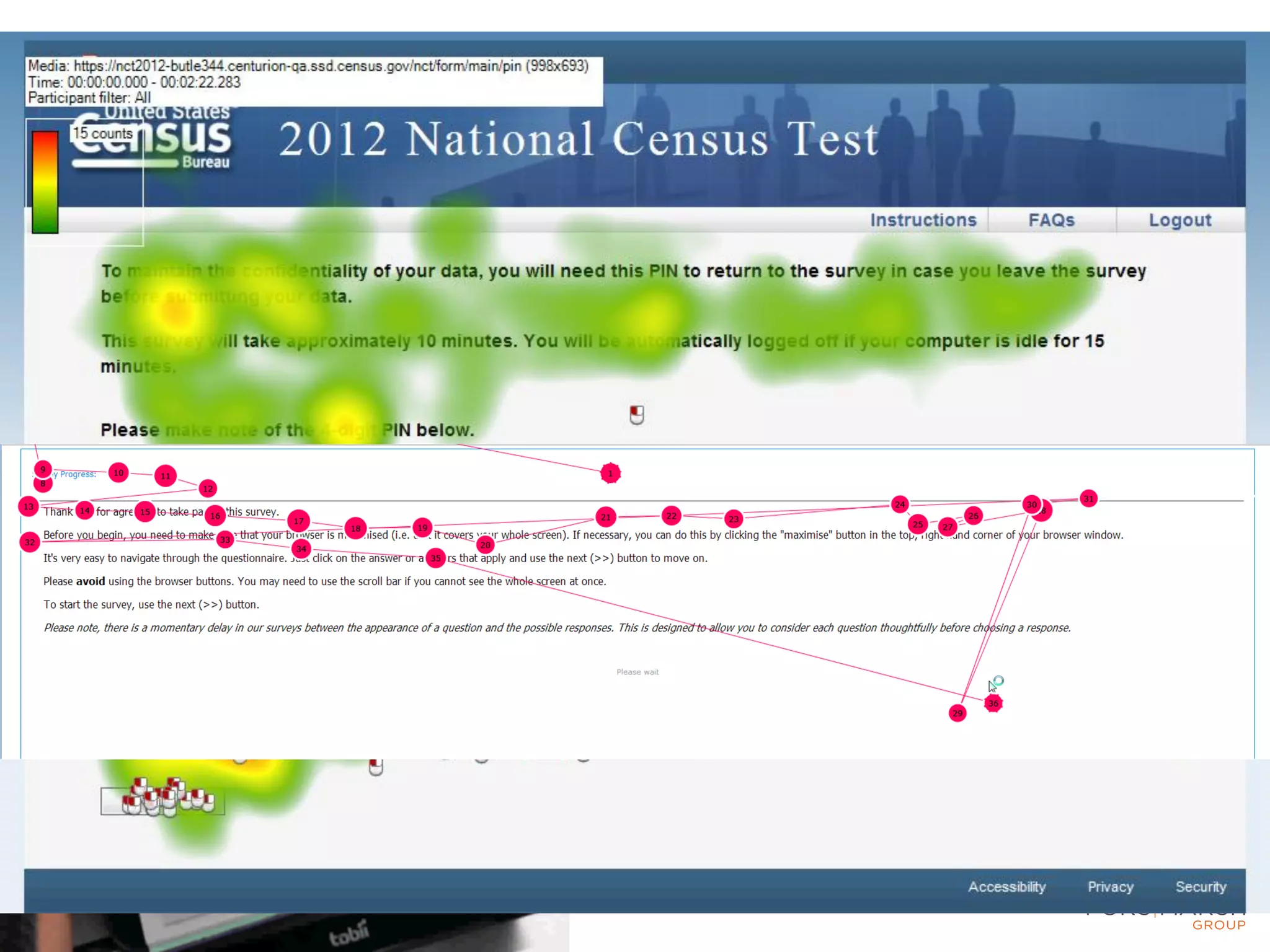

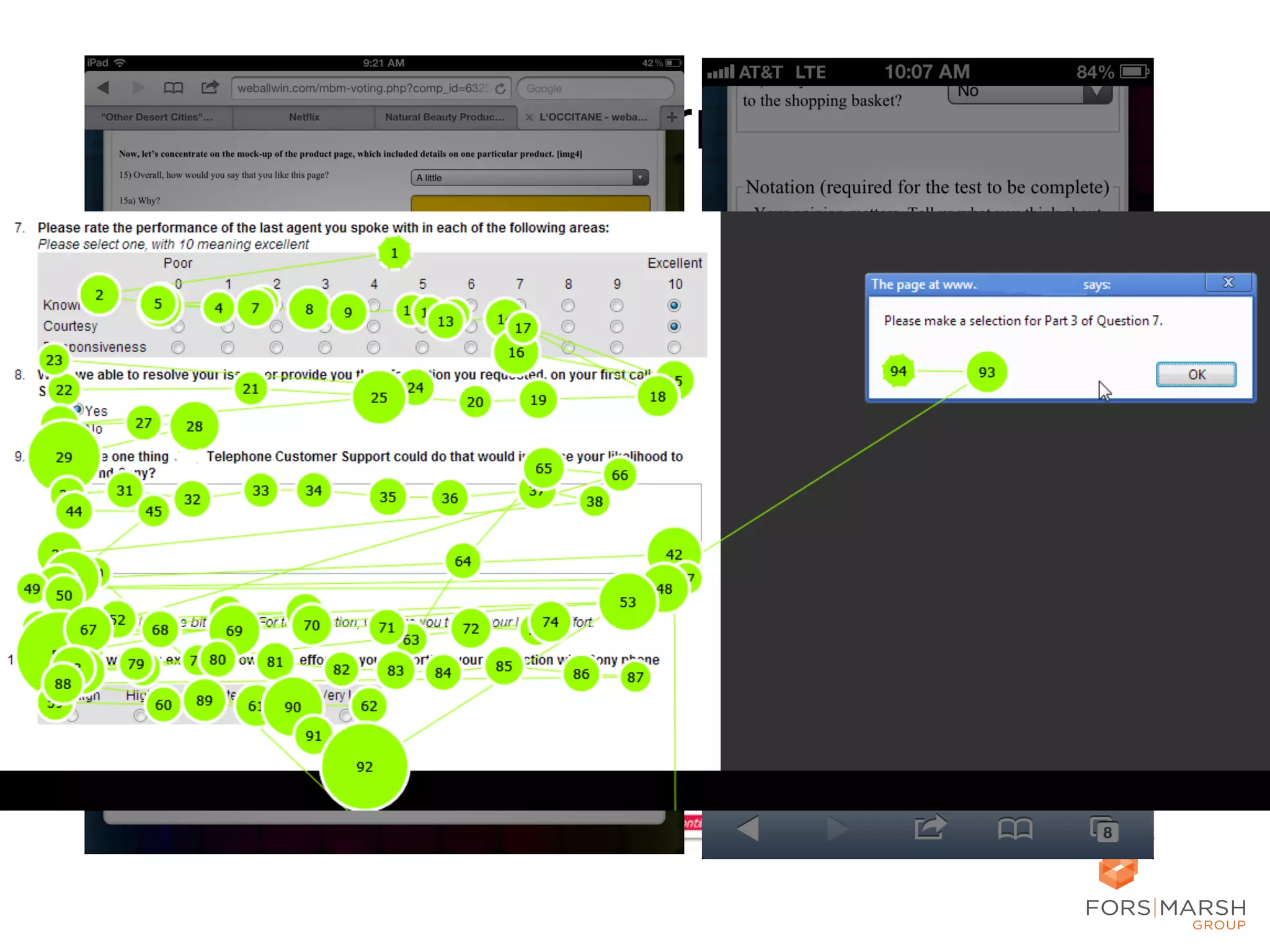

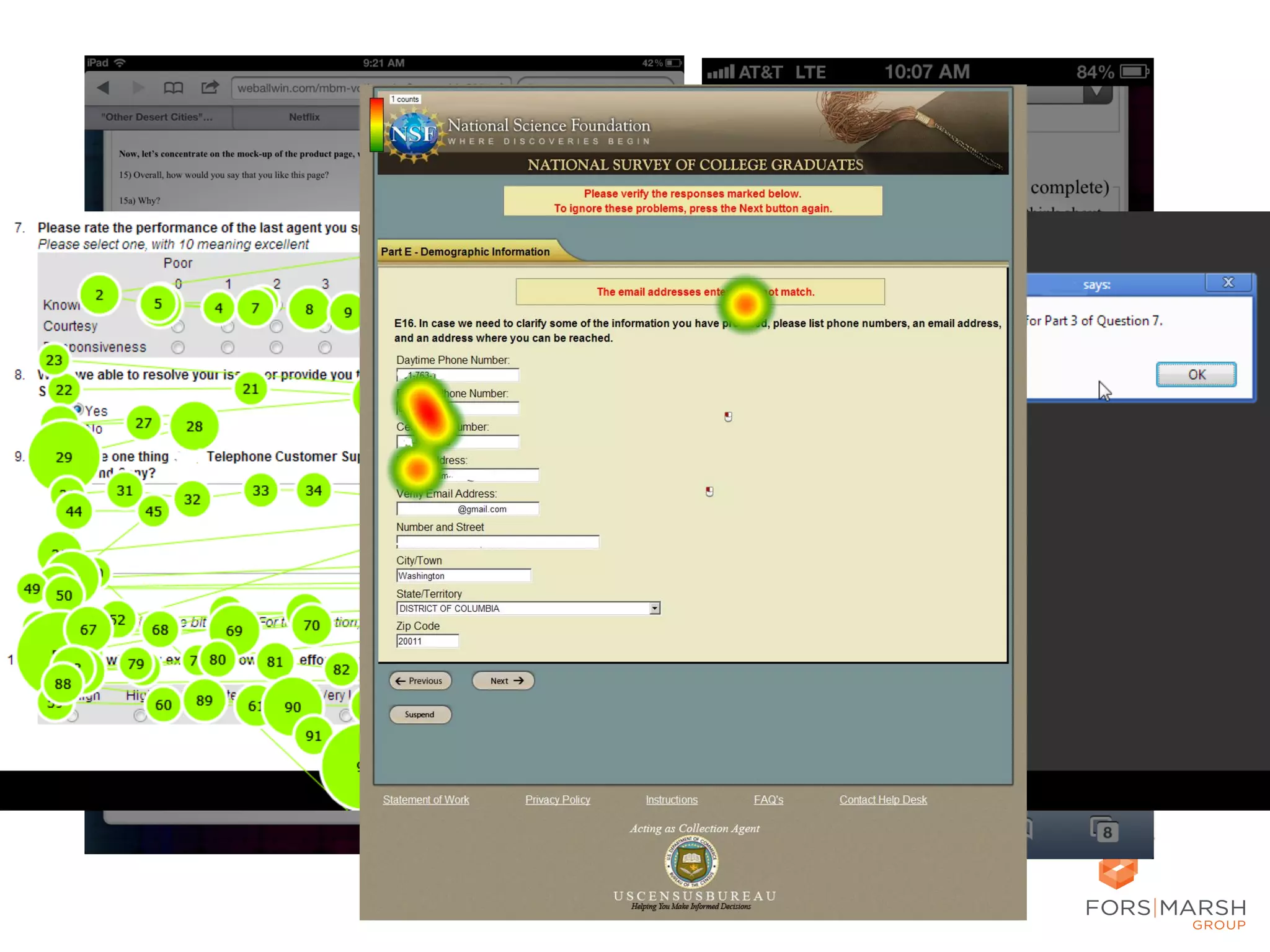

- Conducting one-on-one sessions, focus groups, and surveys to test designs

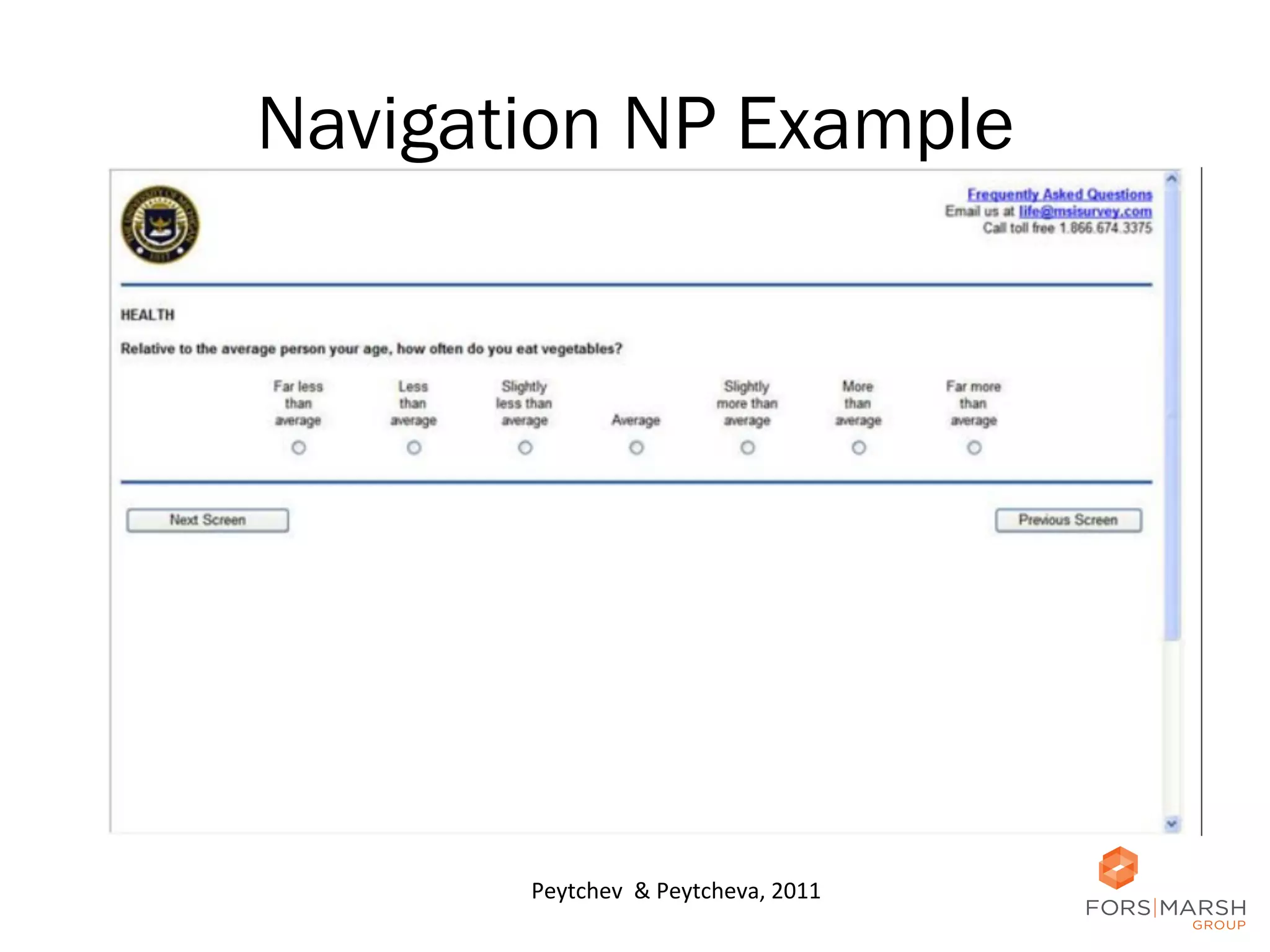

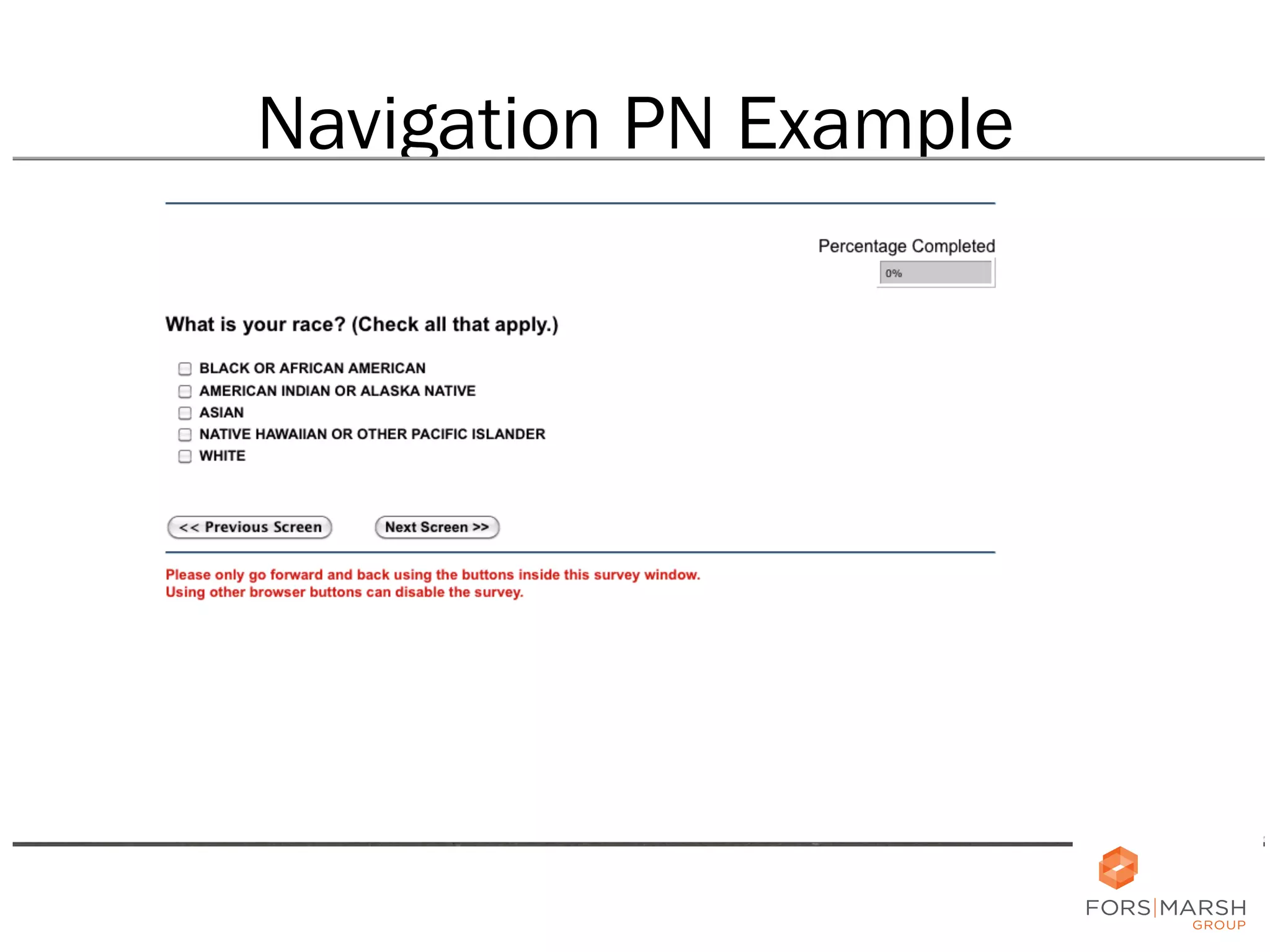

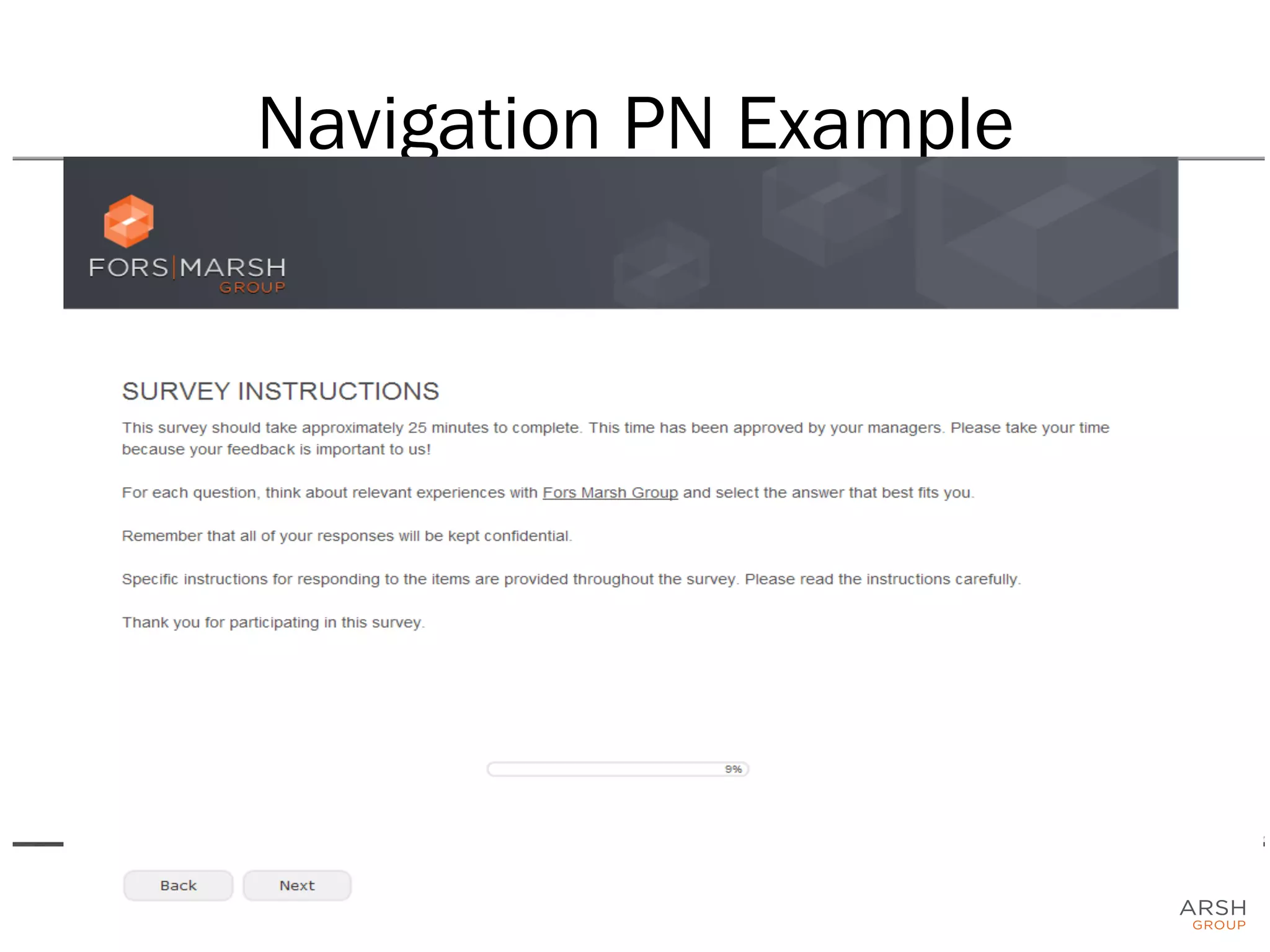

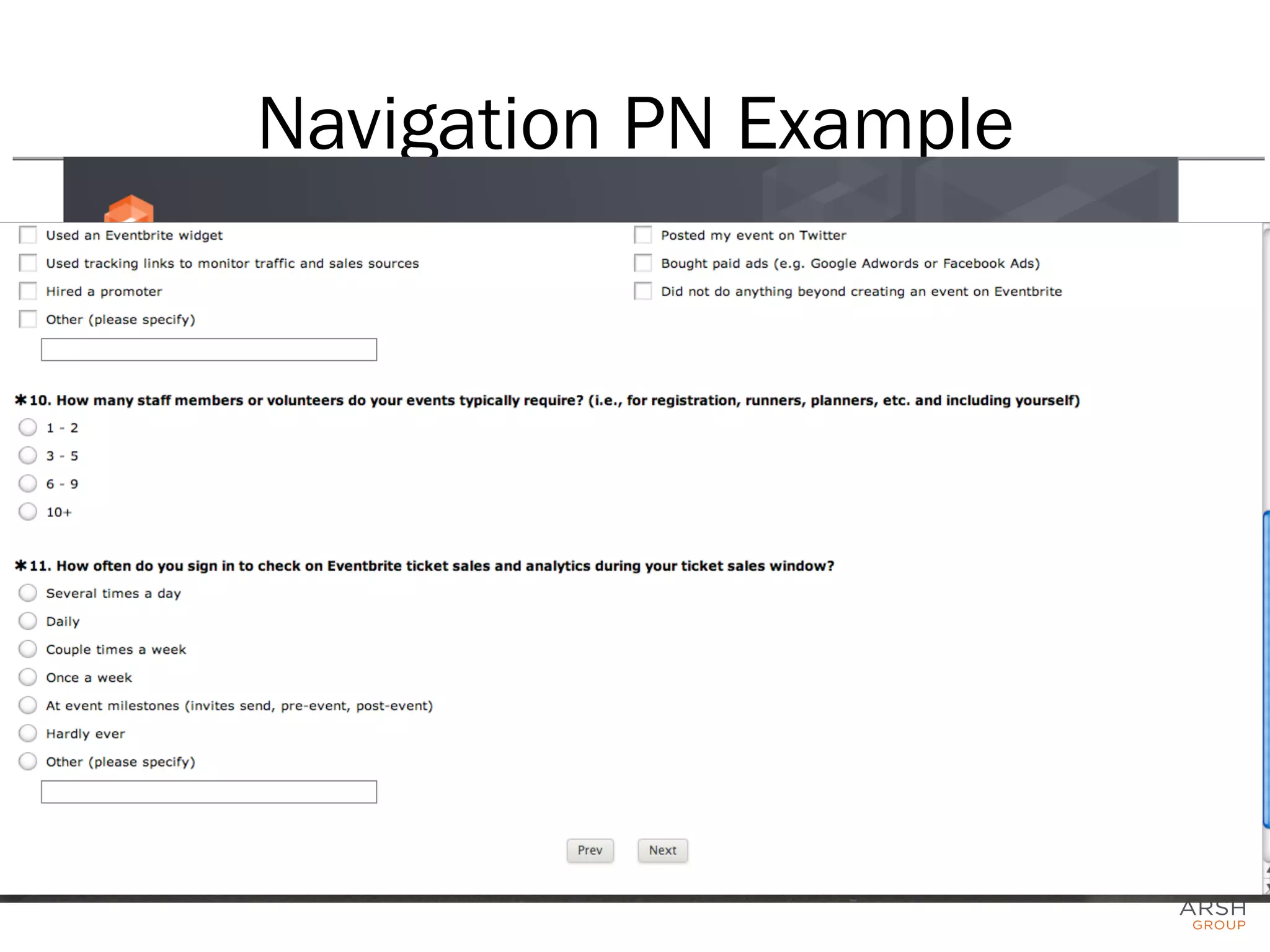

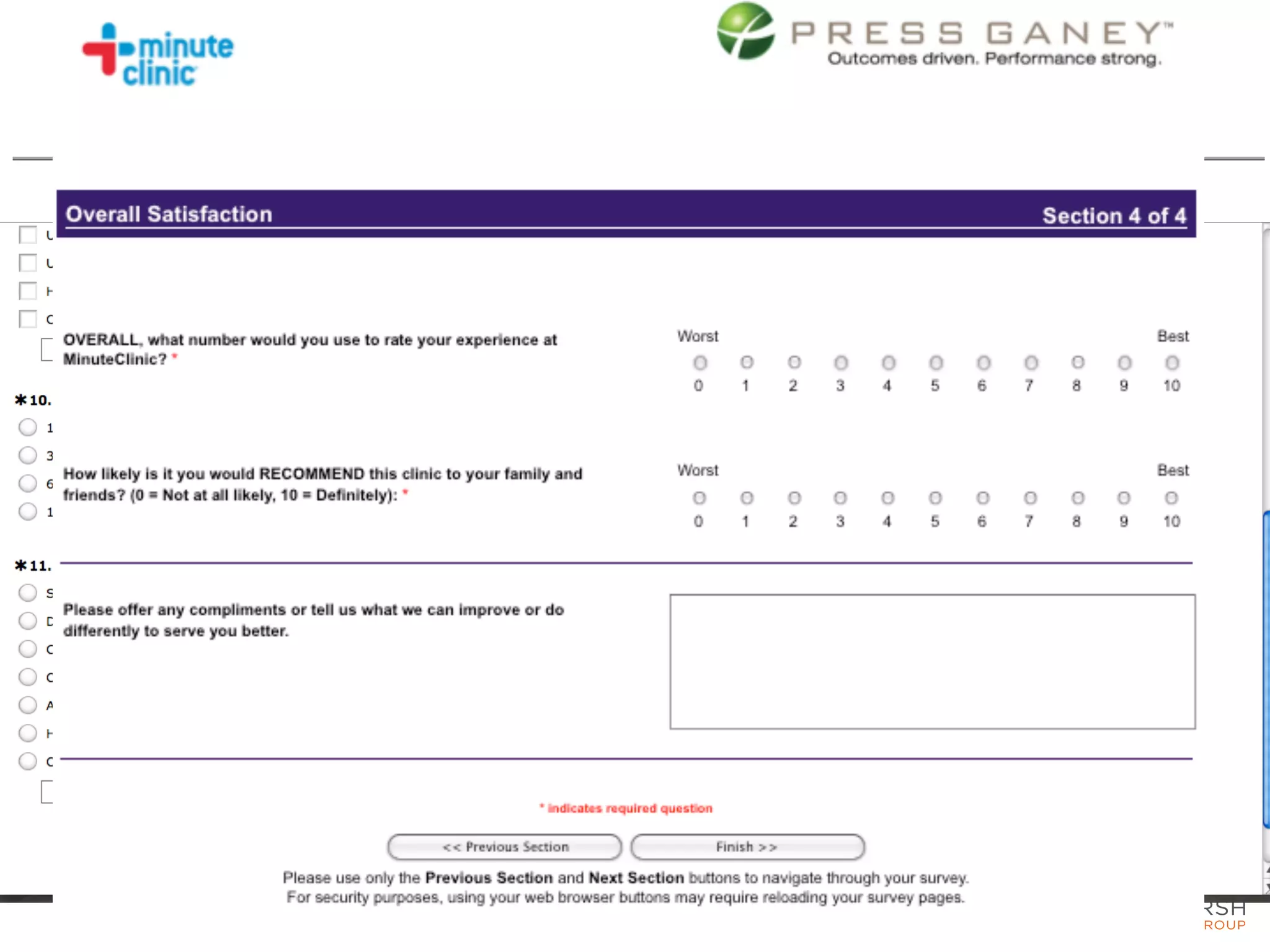

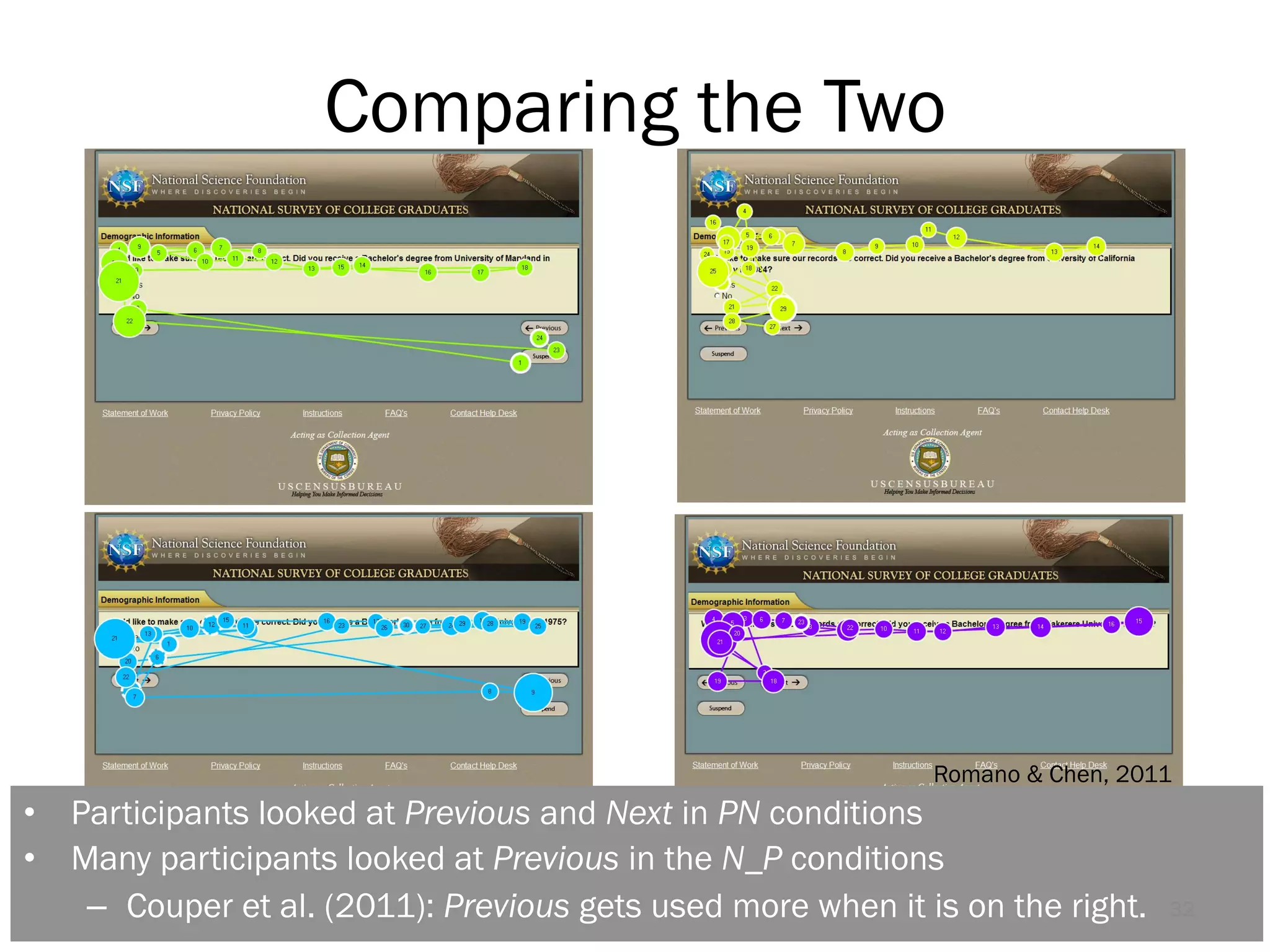

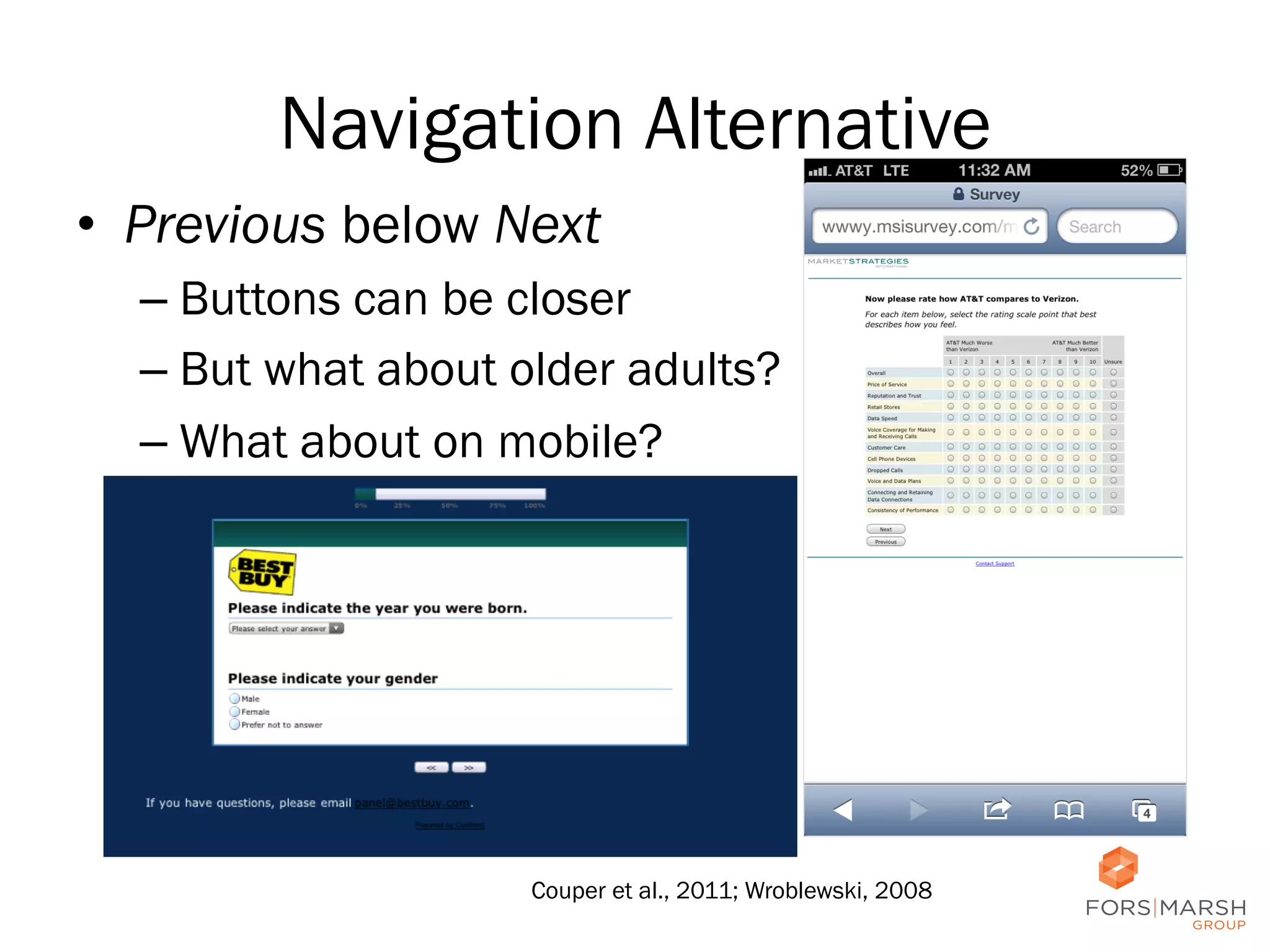

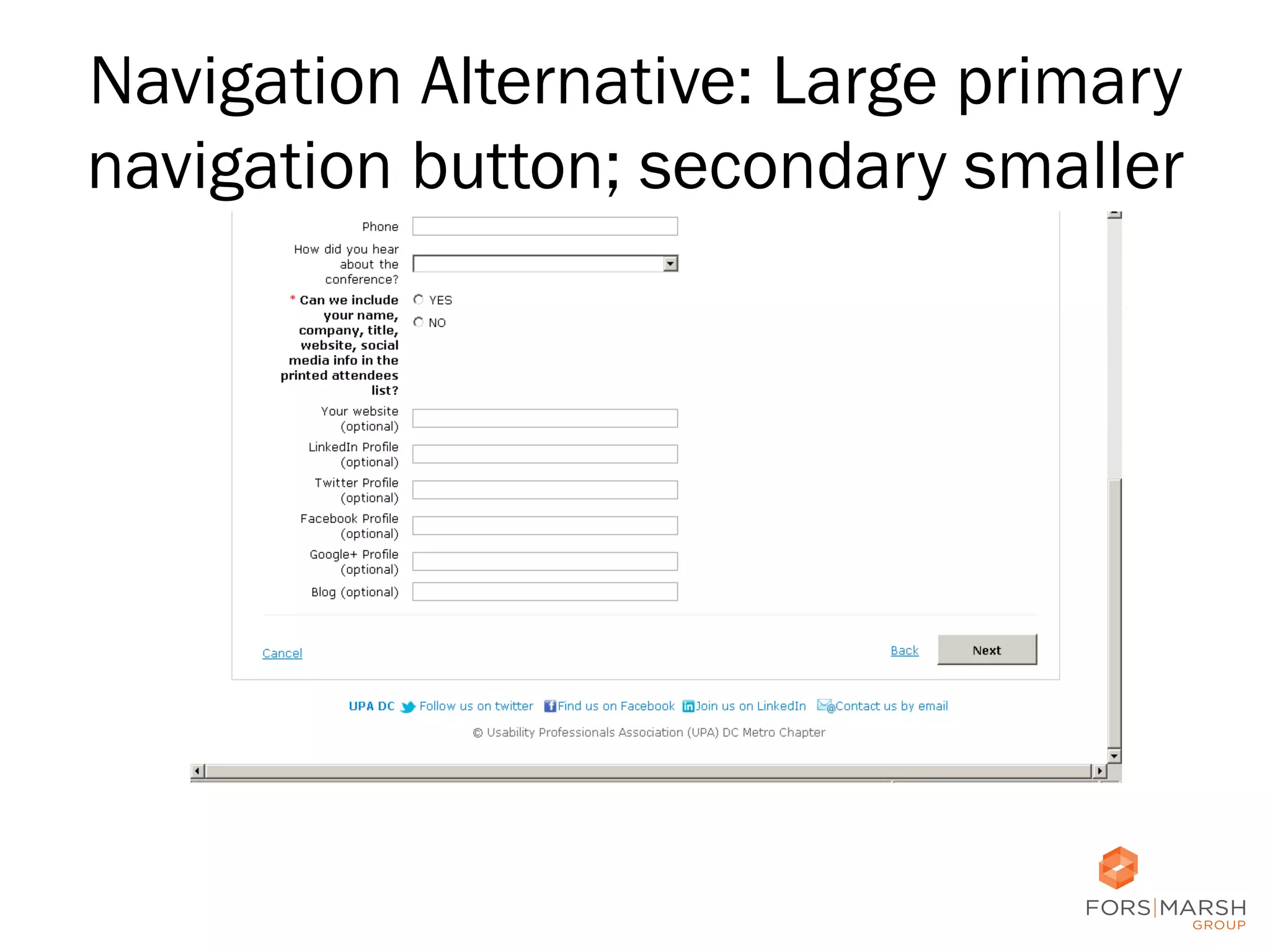

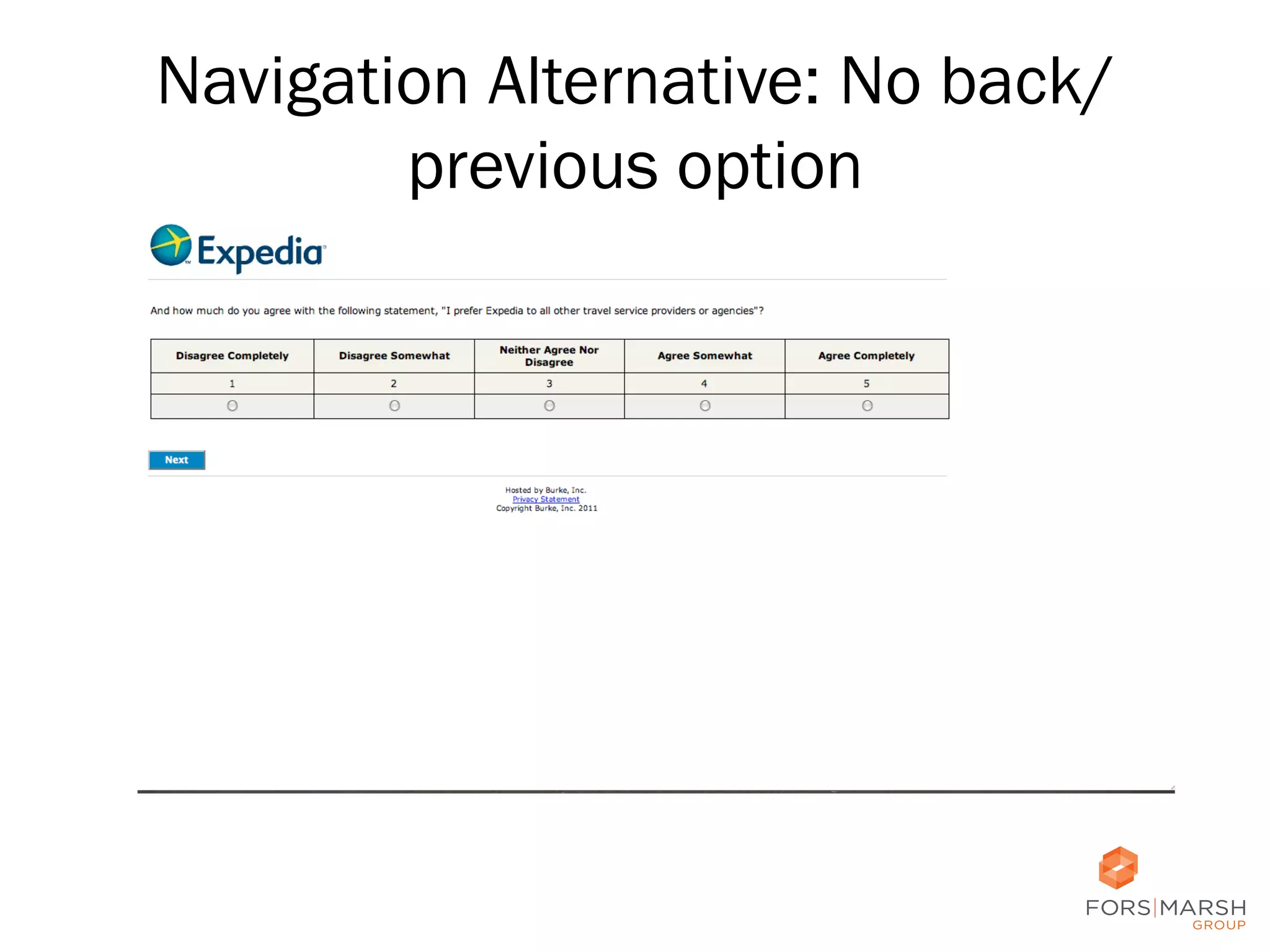

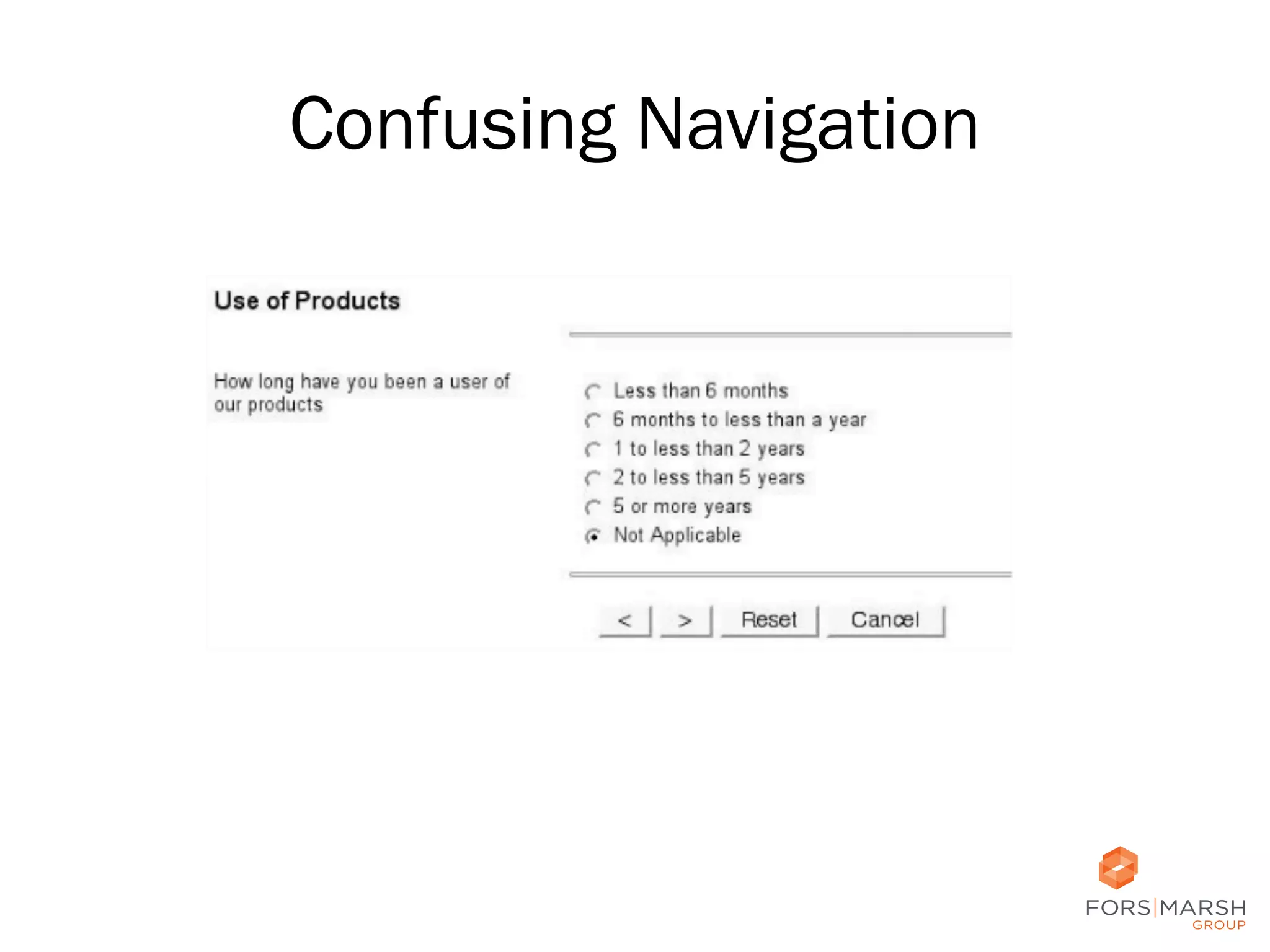

- Placing the "Next" button on the left for primary navigation

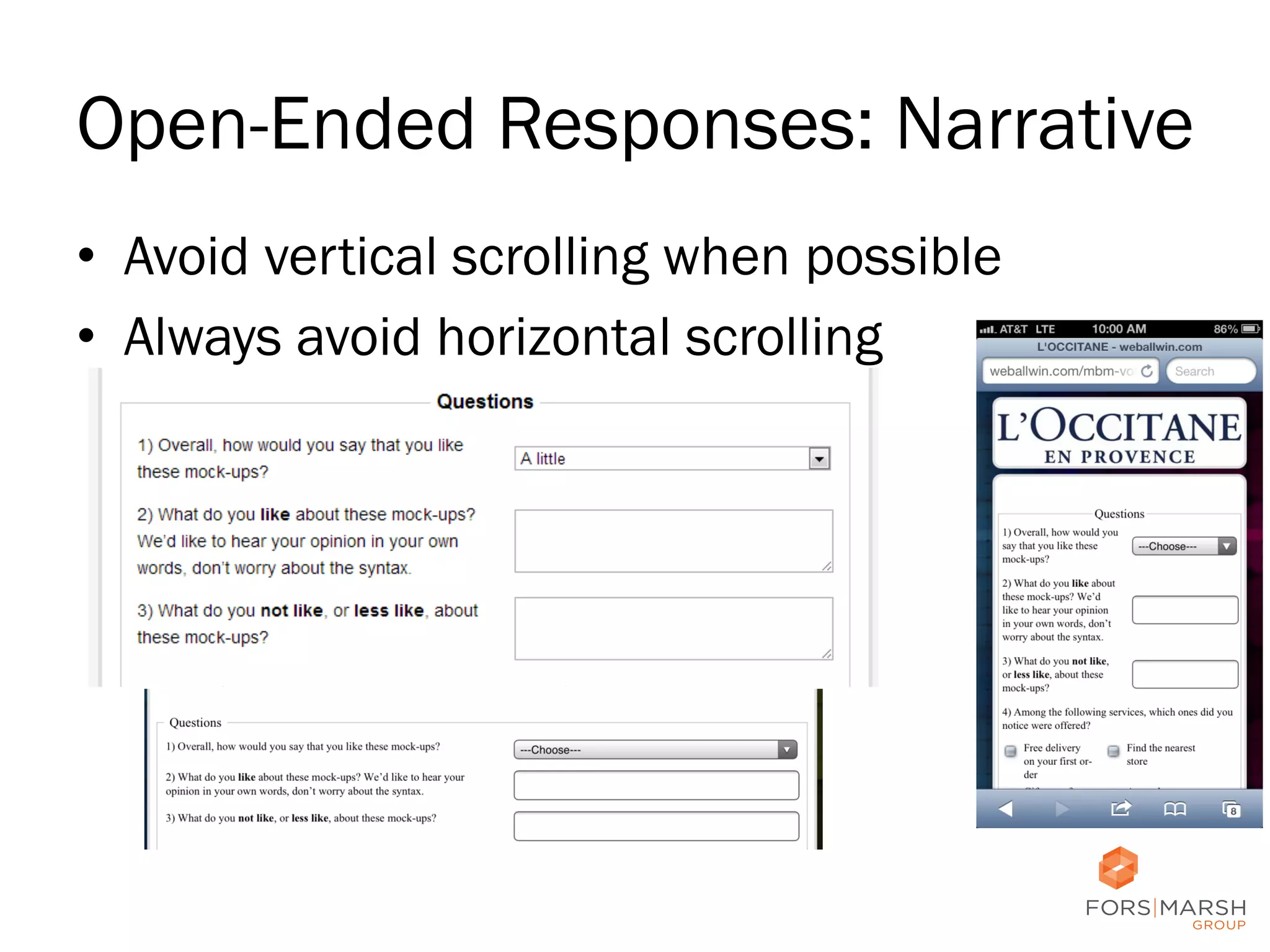

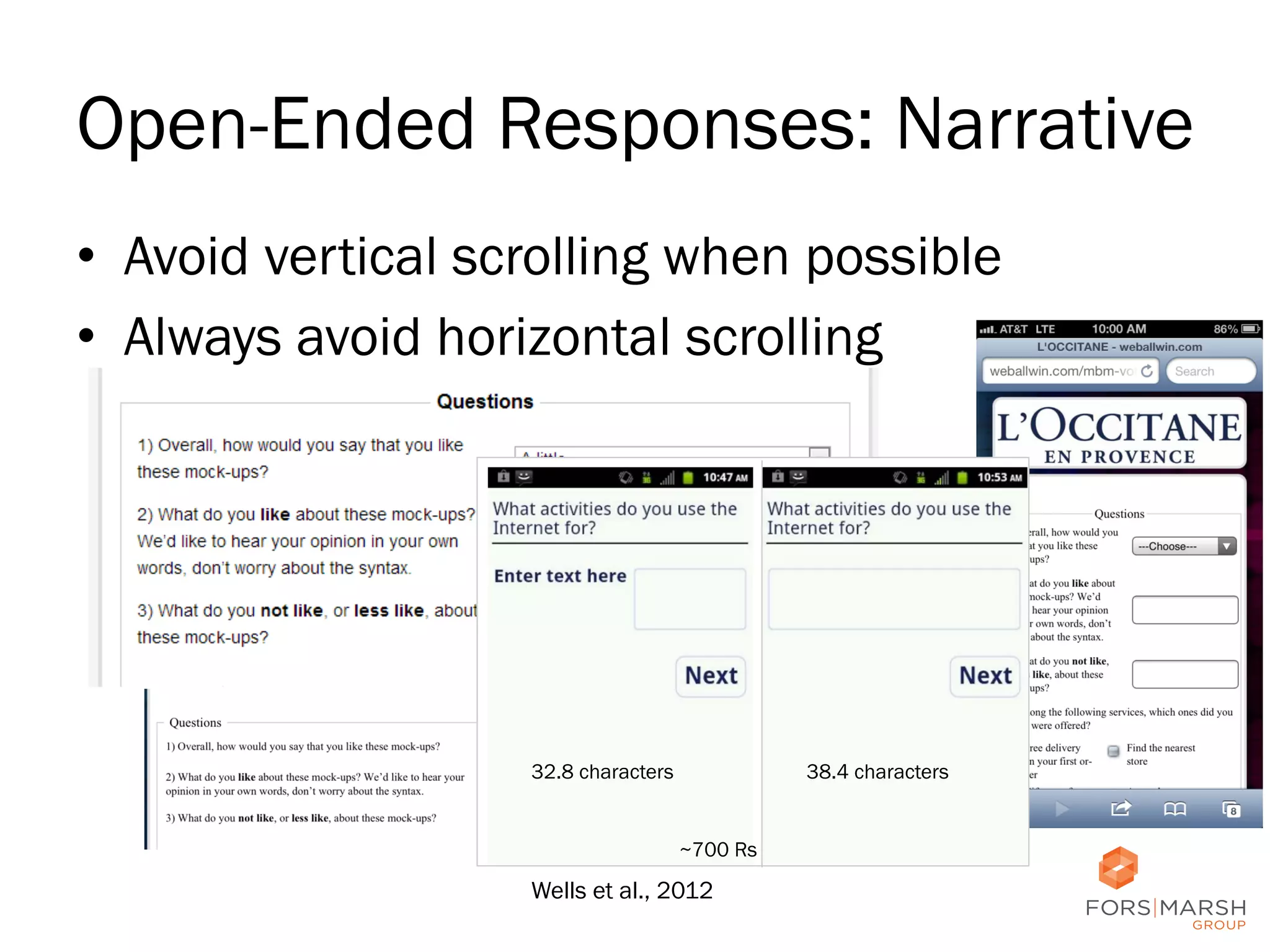

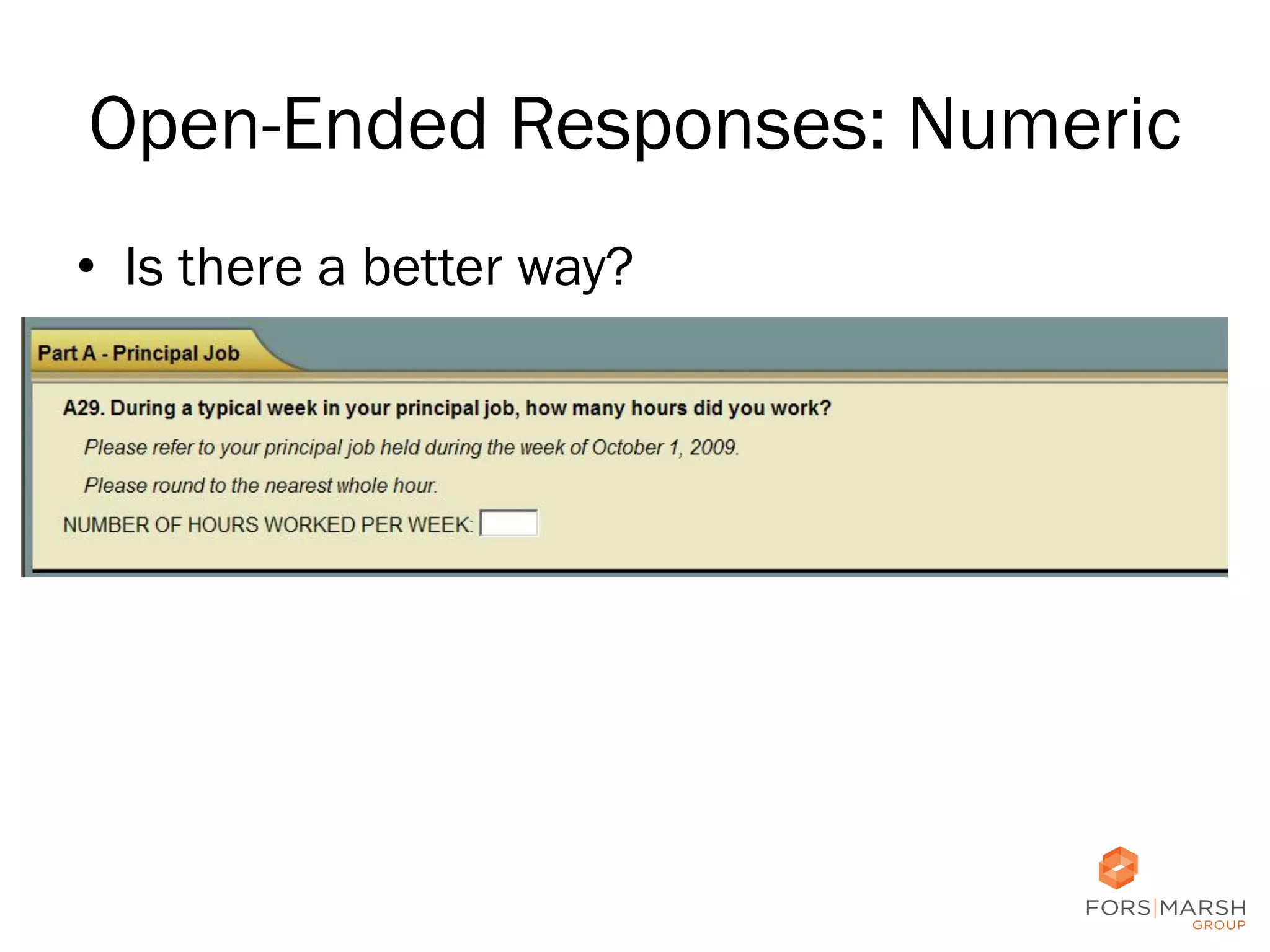

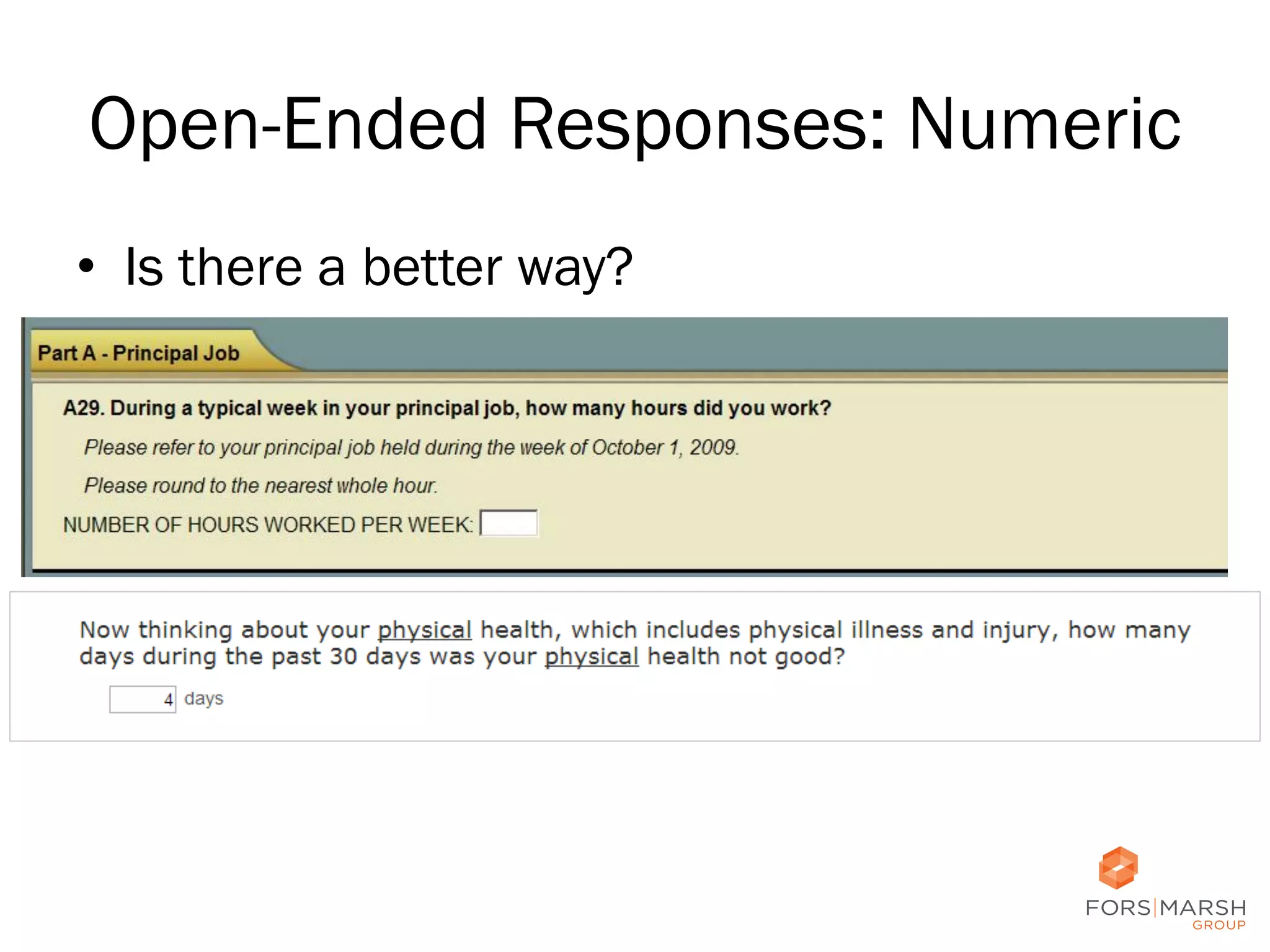

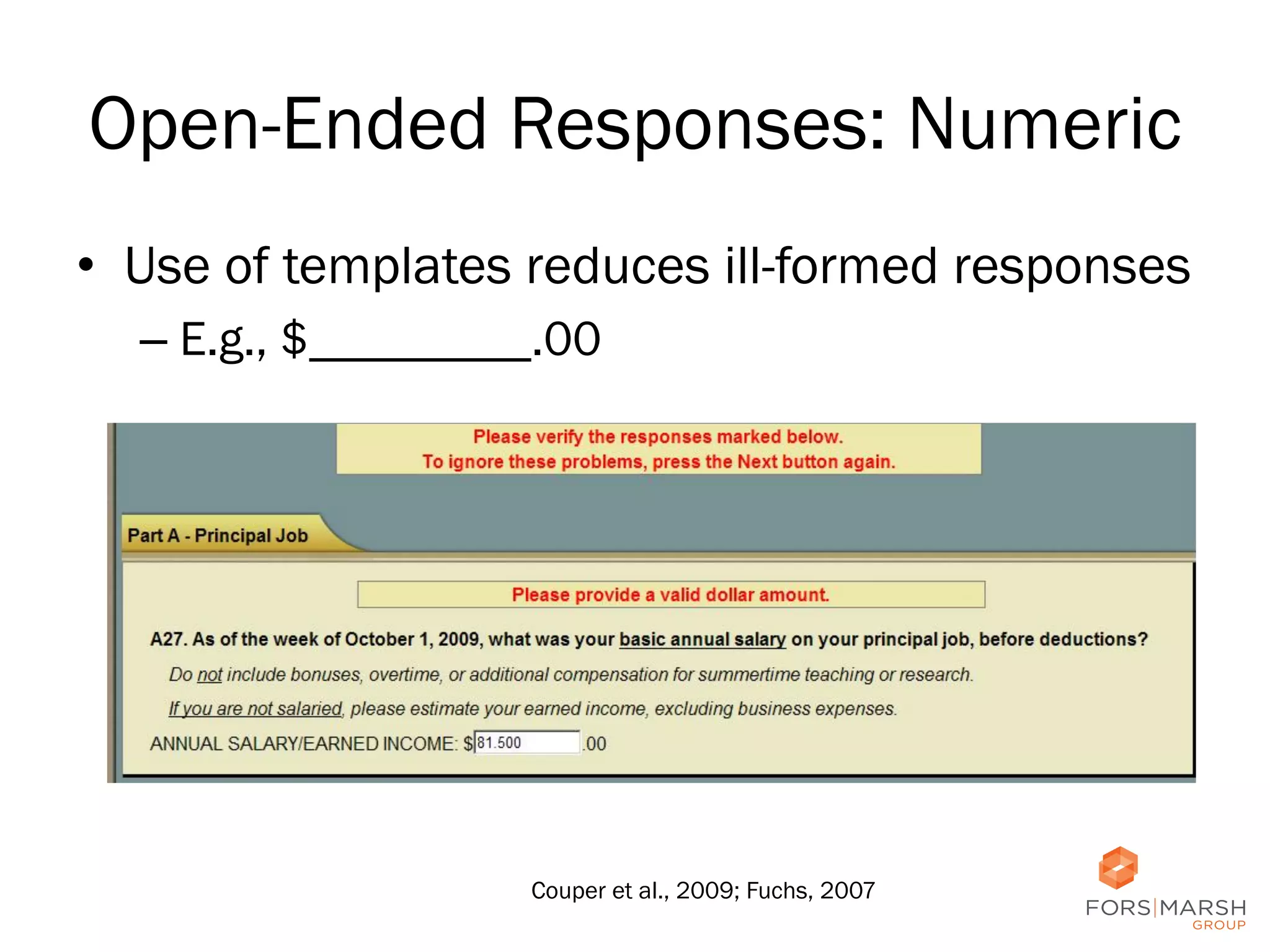

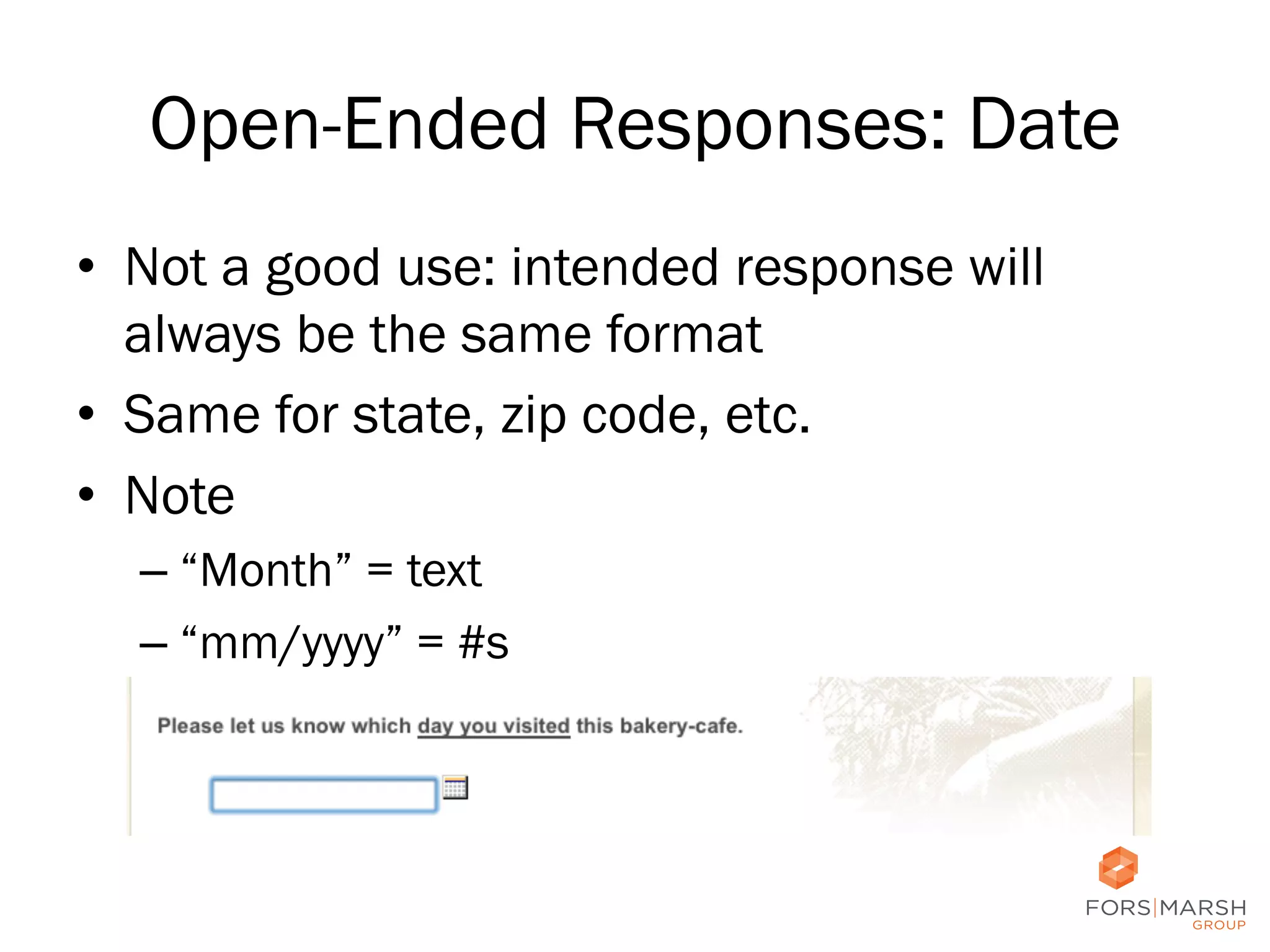

- Avoiding vertical and horizontal scrolling for open-ended responses

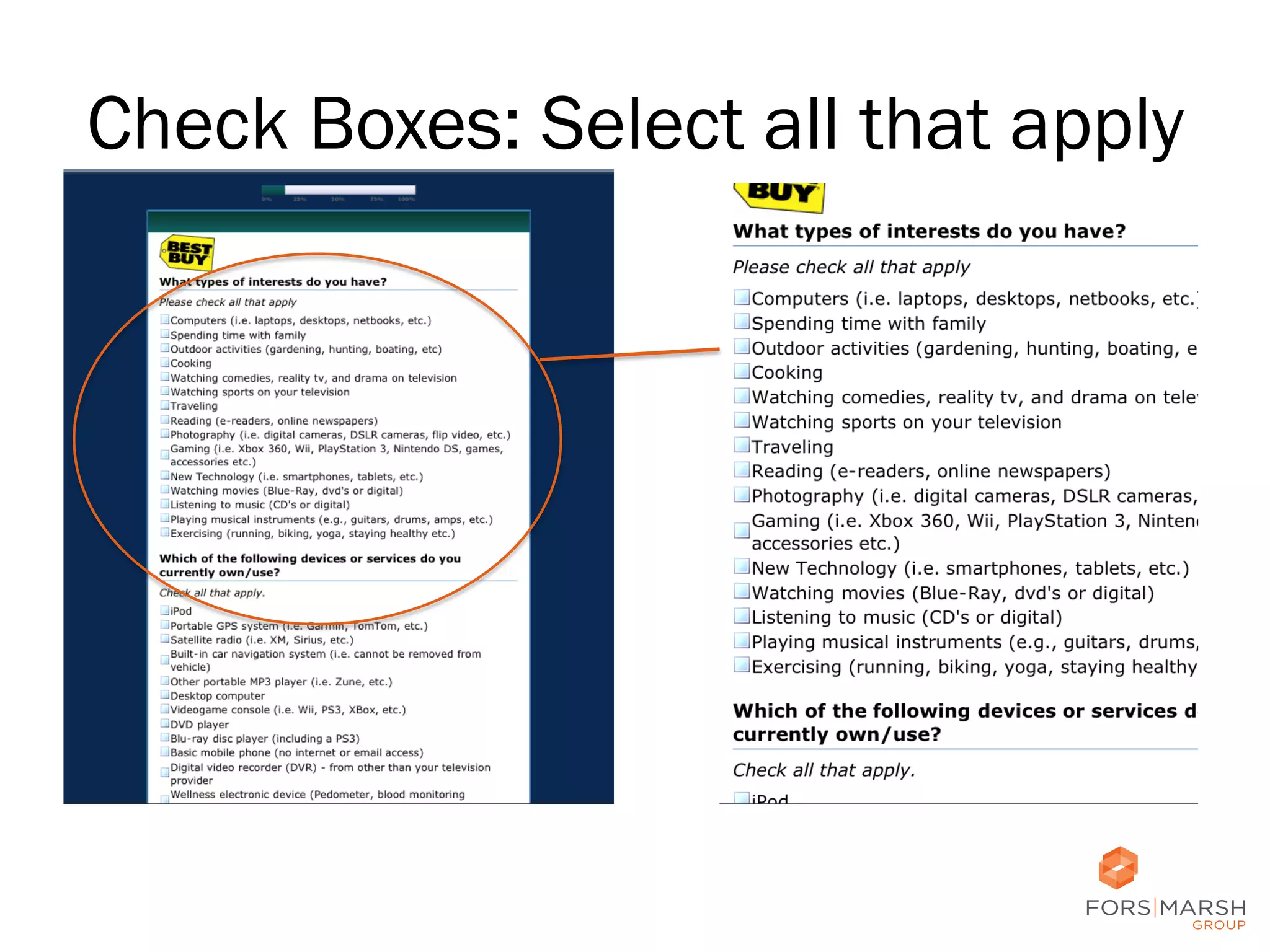

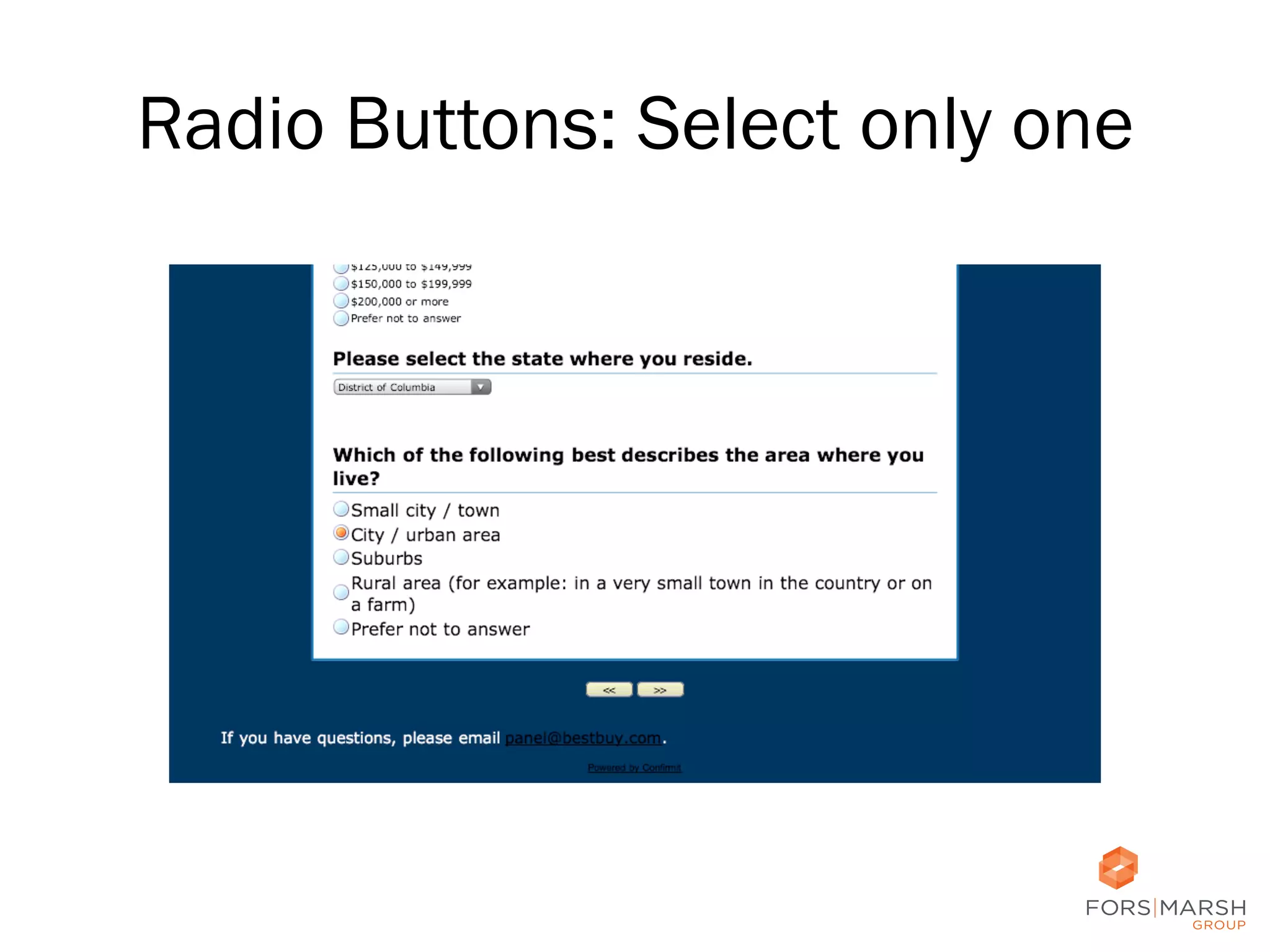

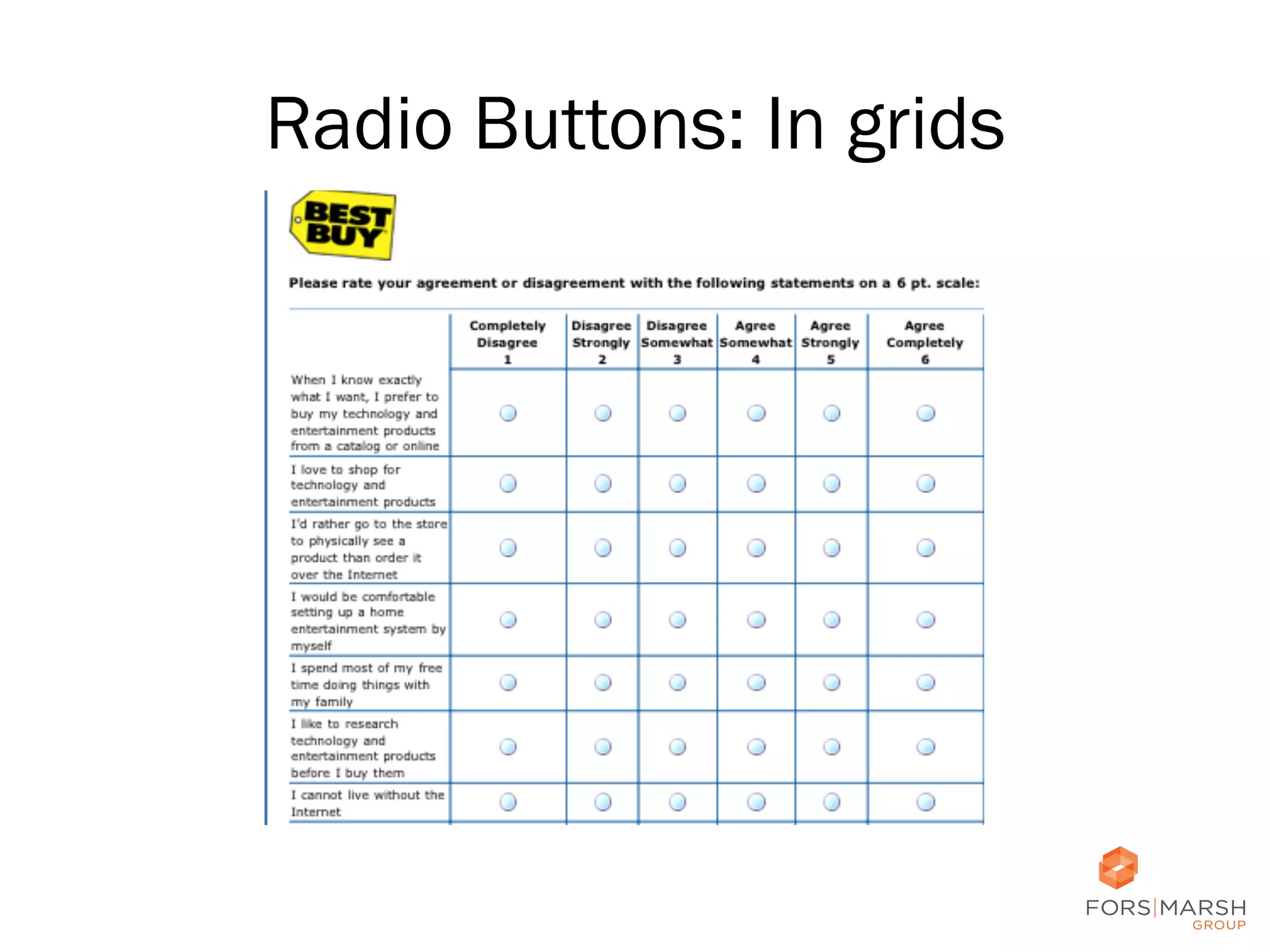

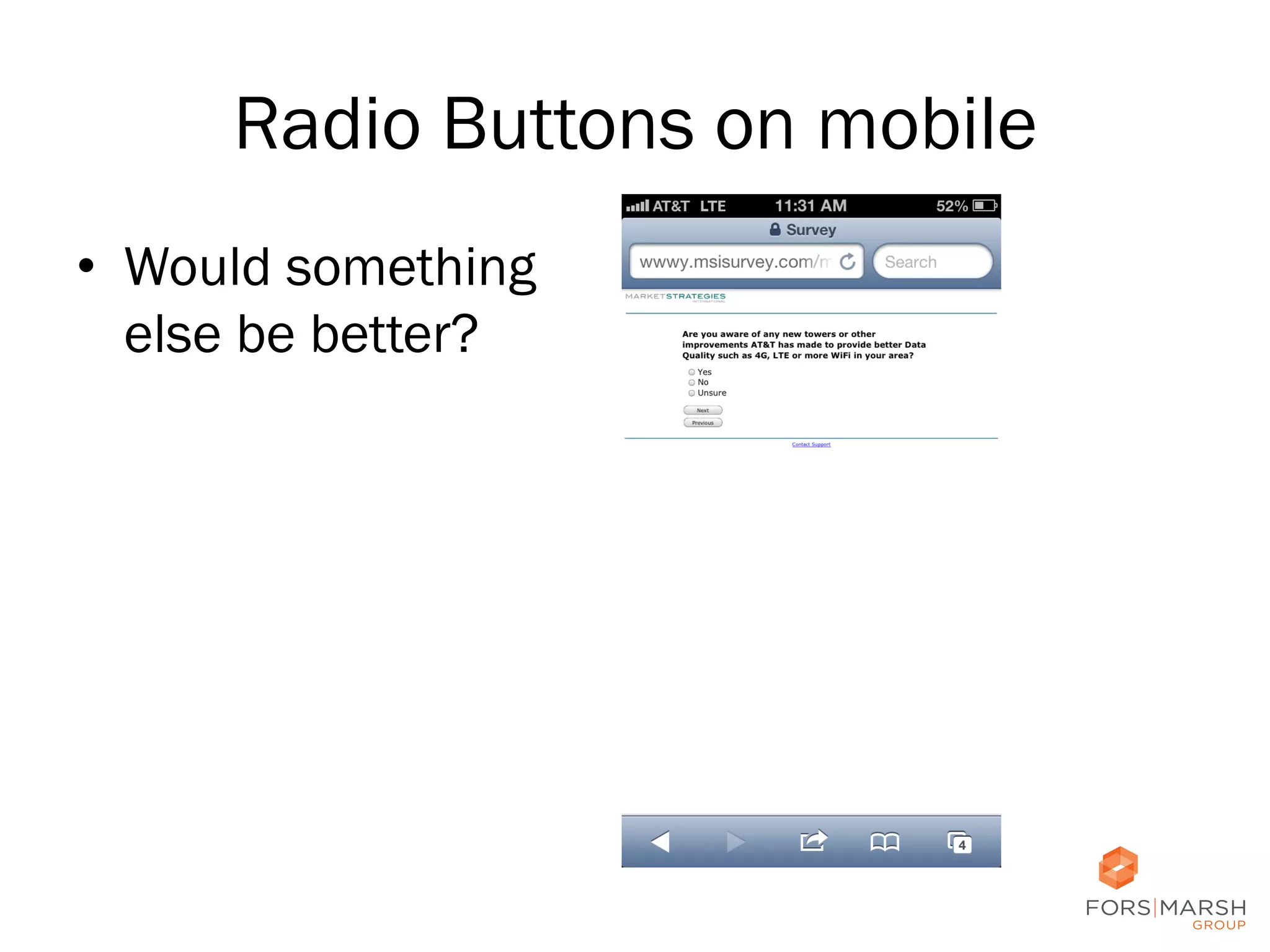

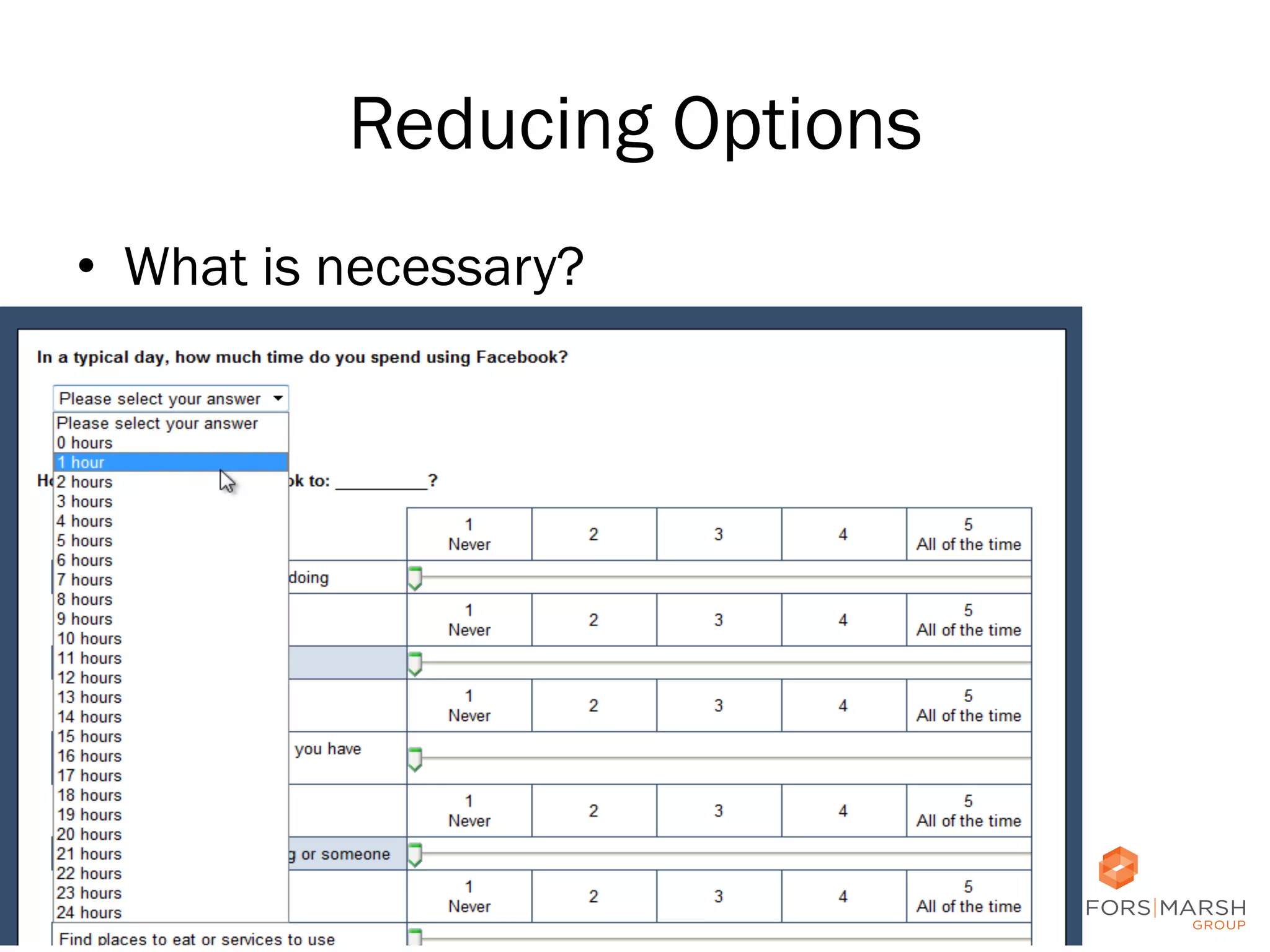

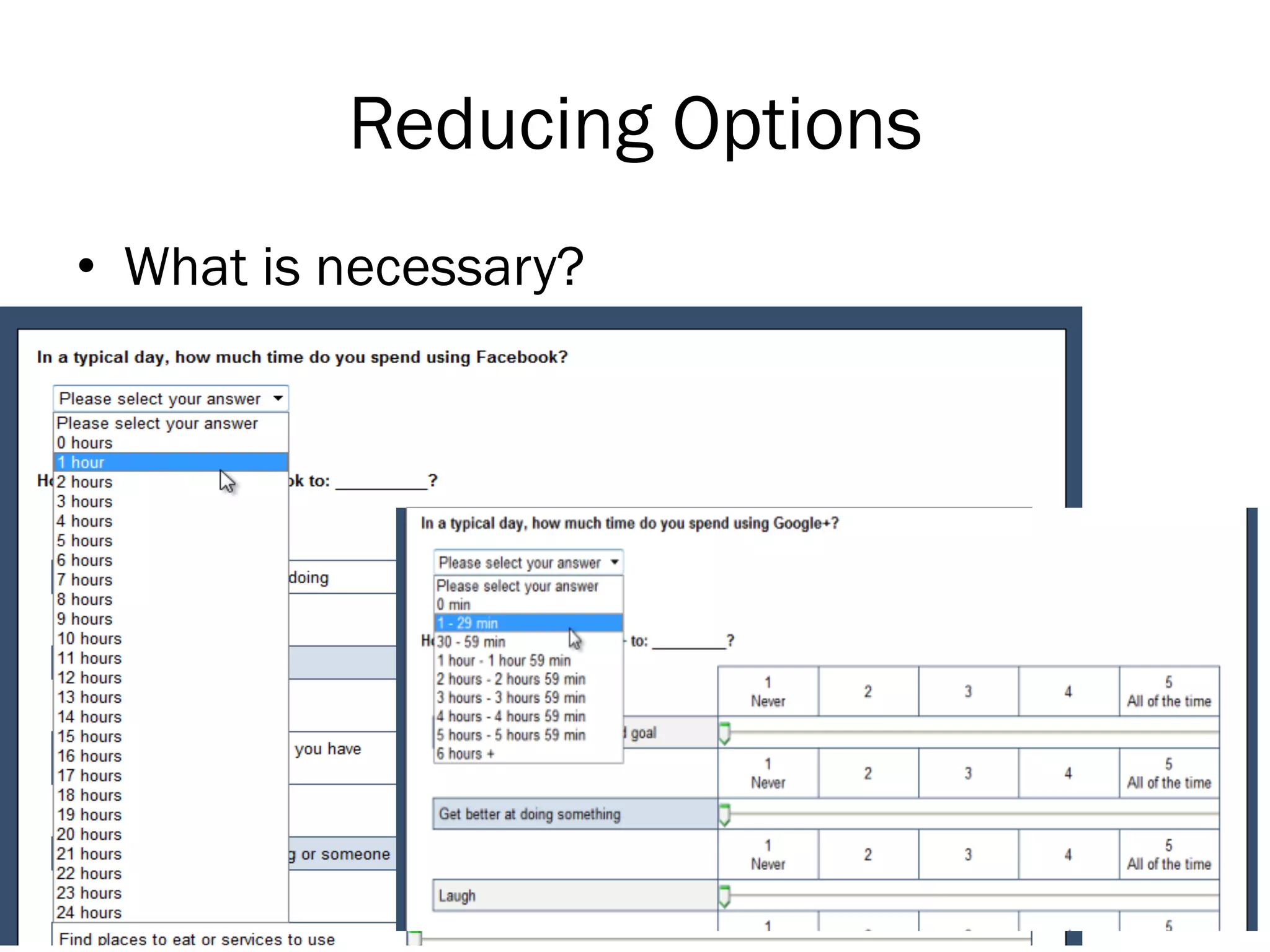

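- Using radio buttons and checkboxes according to convention: radio buttons when only one answer may be selected, checkboxes when respondents may select more than one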

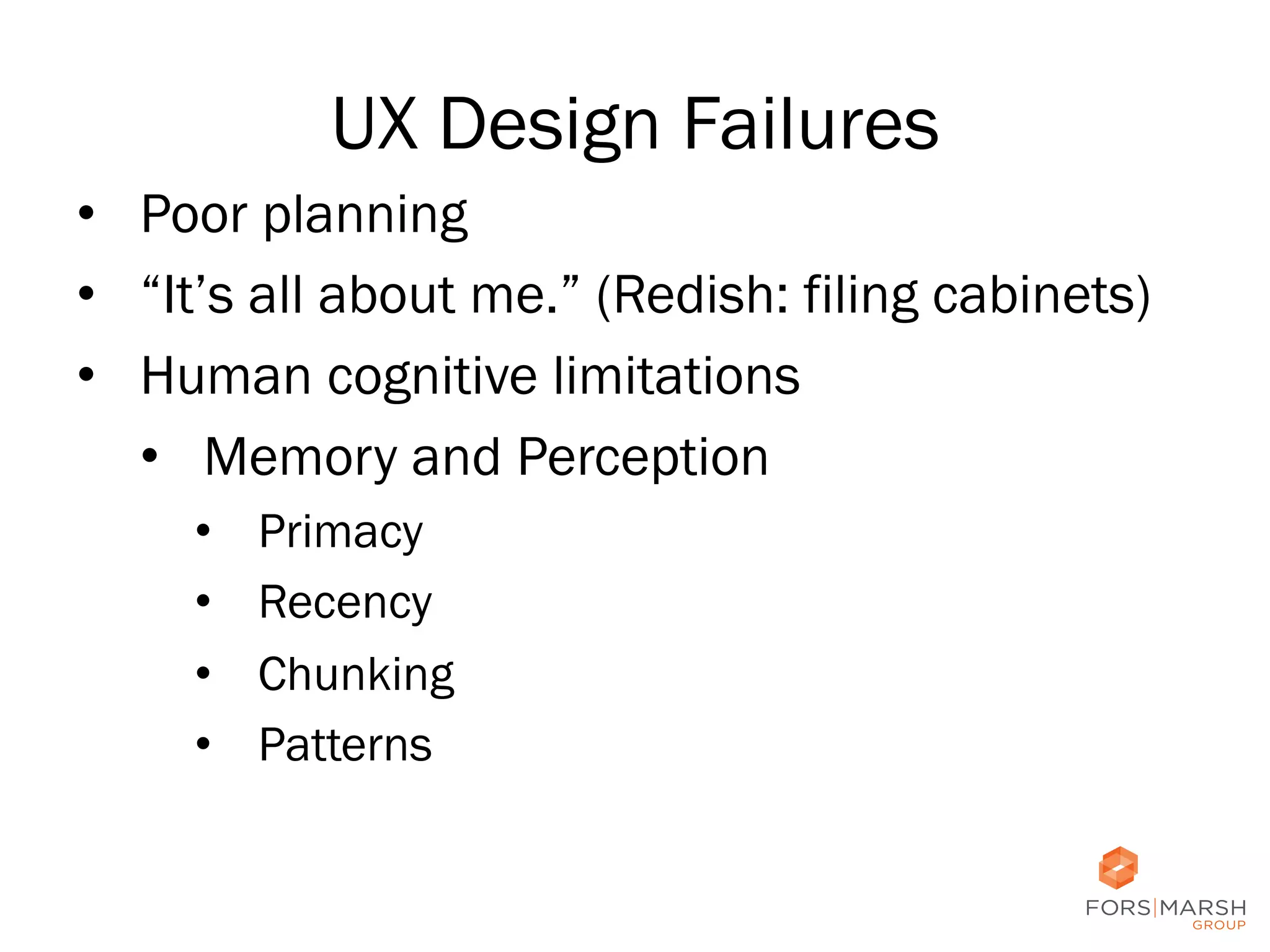

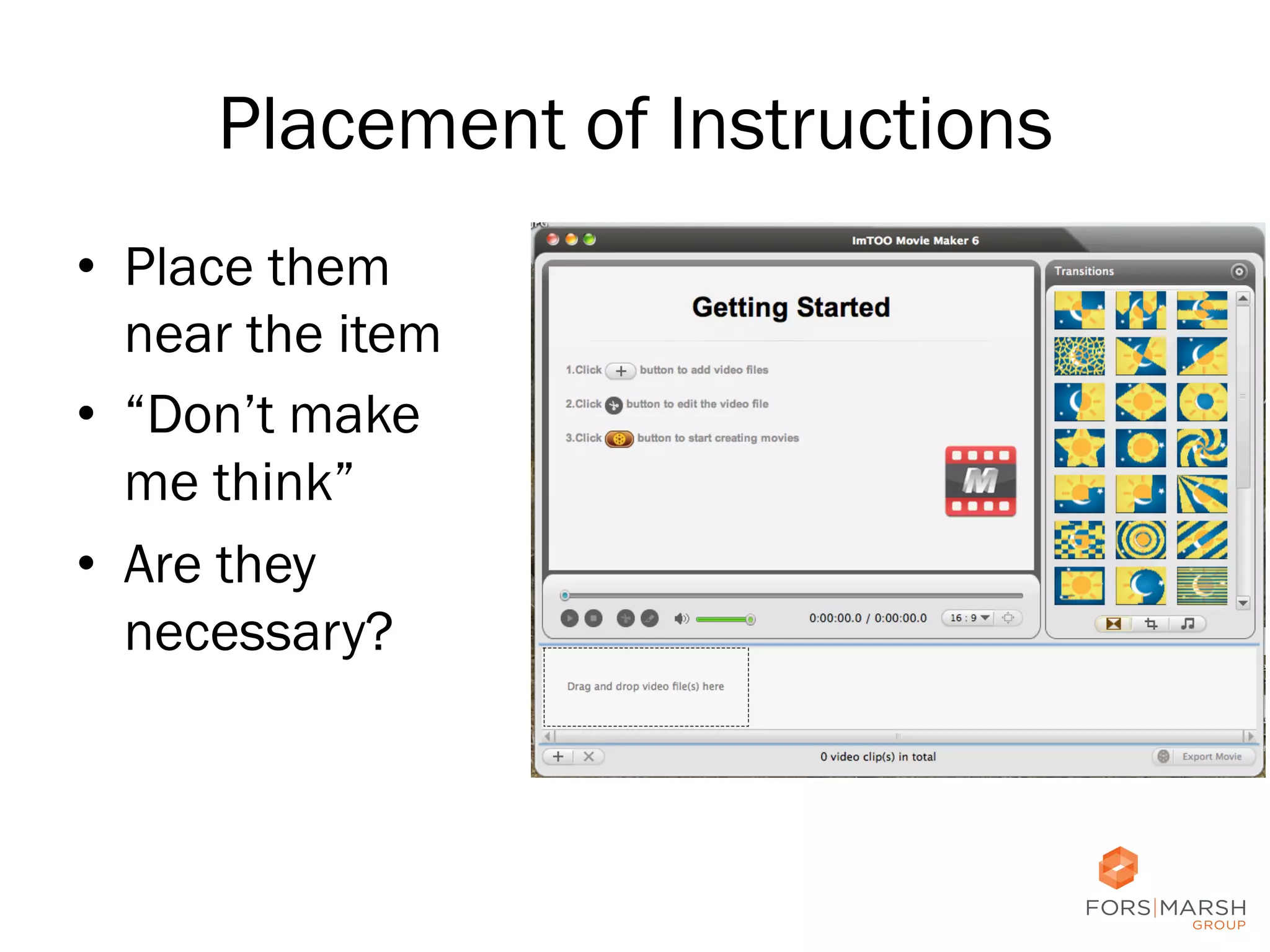

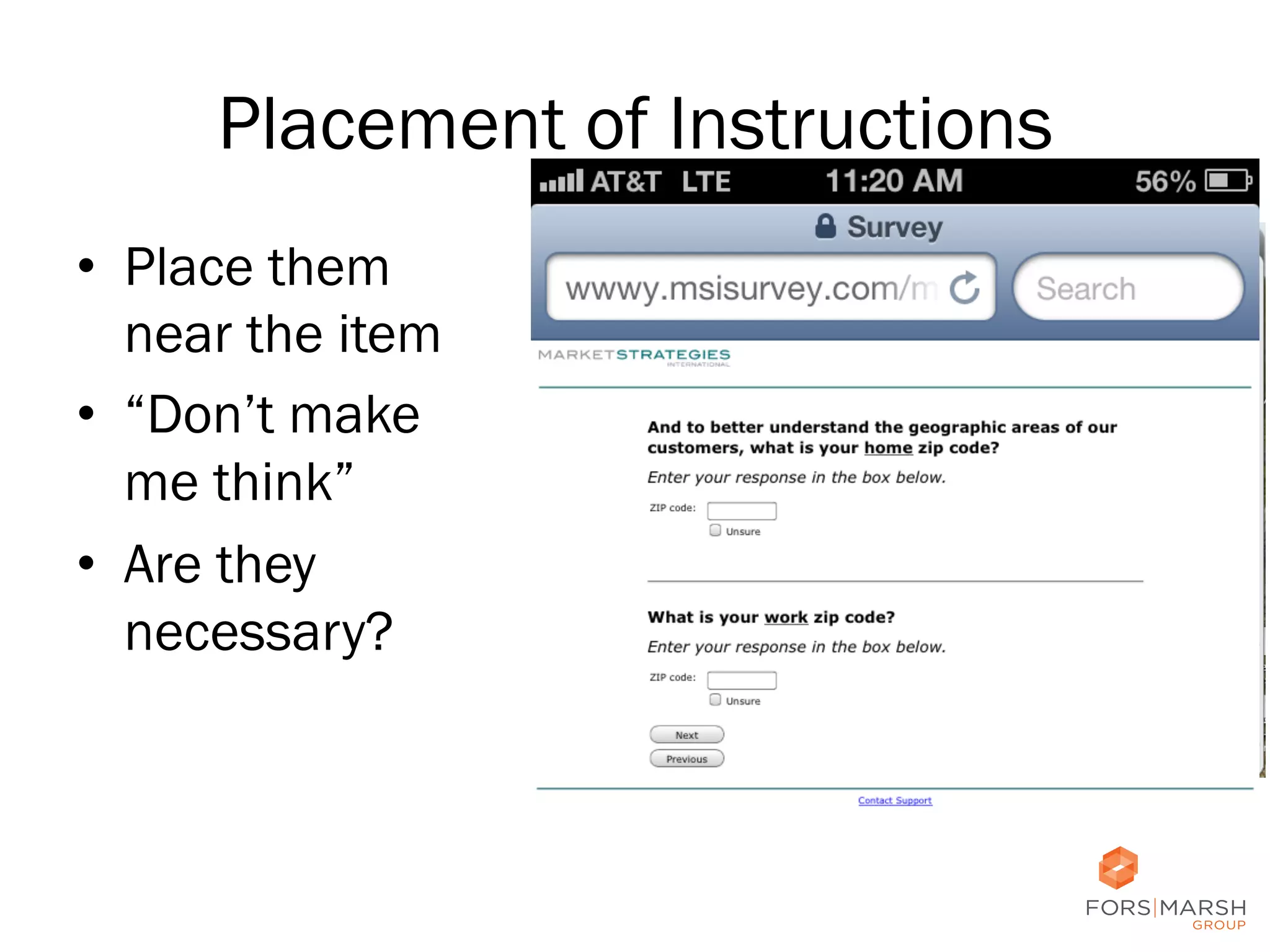

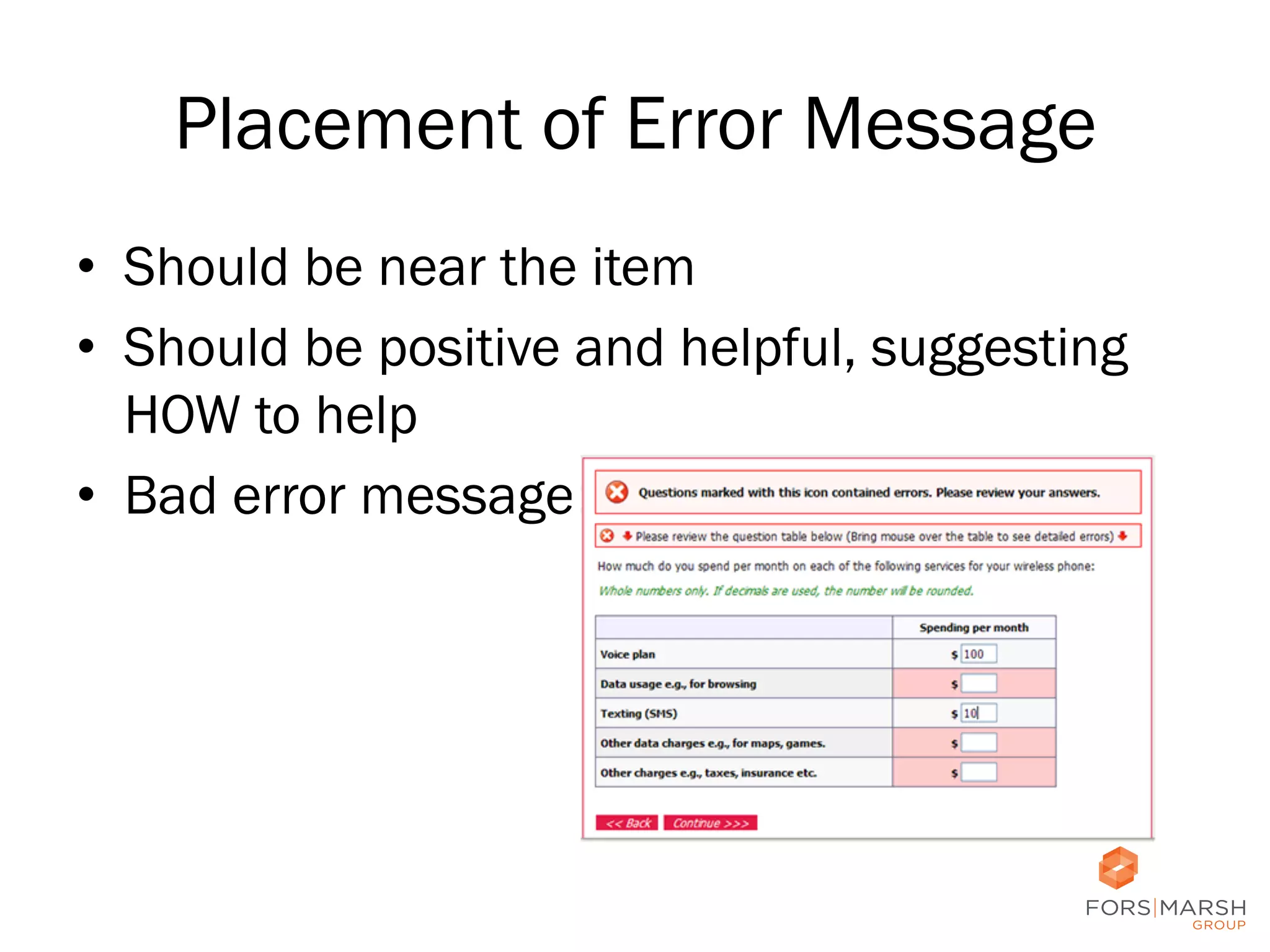

- Placing instructions near related questions to reduce cognitive load