This document discusses random number generation and random variate generation. It covers:

1) Properties of random numbers such as uniformity, independence, maximum density, and maximum period.

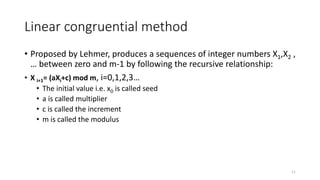

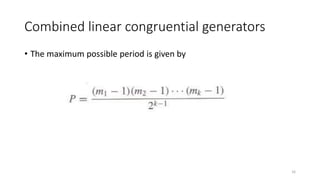

2) Techniques for generating pseudo-random numbers such as the linear congruential method and combined linear congruential generators.

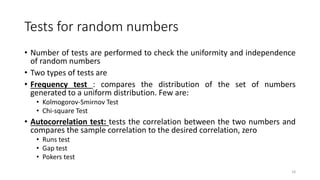

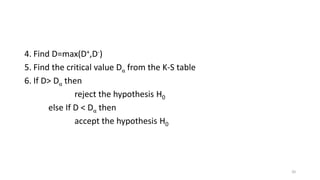

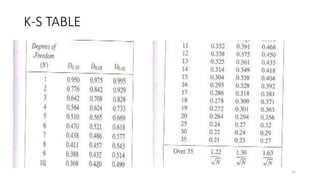

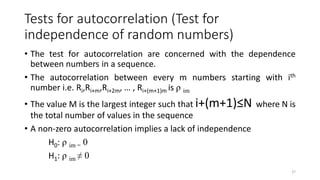

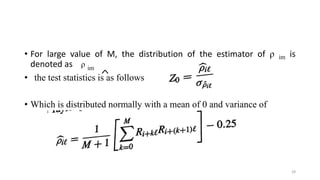

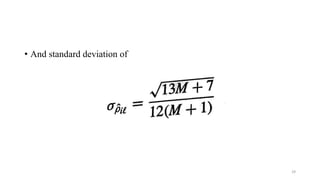
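The two generators named in item 2 can be sketched in Python as follows. This is a minimal sketch, not the slides' own code. `lcg` implements the linear congruential method $X_{i+1} = (aX_i + c) \bmod m$ with $R_i = X_i/m$. `combined_lcg` combines two multiplicative generators using the constants commonly quoted for L'Ecuyer's combined generator ($m_1 = 2147483563$, $a_1 = 40014$, $m_2 = 2147483399$, $a_2 = 40692$). The function names and these constants are assumptions of this sketch, and the seeds are assumed to lie in $1..m_1-1$ and $1..m_2-1$ respectively.

```python
from typing import Iterator


def lcg(seed: int, a: int, c: int, m: int) -> Iterator[float]:
    """Linear congruential method: X_{i+1} = (a*X_i + c) mod m, R_i = X_i / m."""
    x = seed
    while True:
        x = (a * x + c) % m
        yield x / m


def combined_lcg(seed1: int, seed2: int) -> Iterator[float]:
    """Combined LCG built from two multiplicative generators (constants are assumptions)."""
    m1, a1 = 2147483563, 40014
    m2, a2 = 2147483399, 40692
    x1, x2 = seed1, seed2
    while True:
        x1 = (a1 * x1) % m1
        x2 = (a2 * x2) % m2
        x = (x1 - x2) % (m1 - 1)  # Python's % is non-negative even if x1 < x2
        # Map to (0, 1); X = 0 is sent to (m1 - 1)/m1 so that R is never 0.
        yield x / m1 if x > 0 else (m1 - 1) / m1


# Example: X0 = 27, a = 17, c = 43, m = 100
gen = lcg(27, 17, 43, 100)
print([next(gen) for _ in range(3)])  # [0.02, 0.77, 0.52]
```

The small example has a period of at most 100, which illustrates why practical generators use a very large modulus $m$.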

3) Tests for random numbers including Kolmogorov-Smirnov, chi-square, and autocorrelation tests.

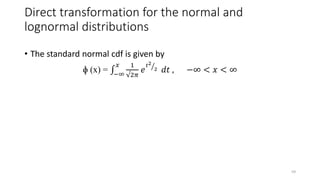

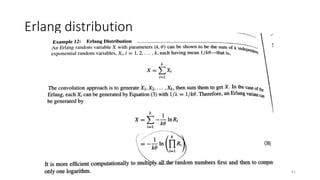

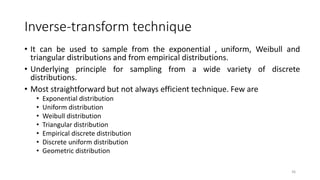

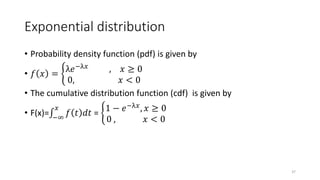

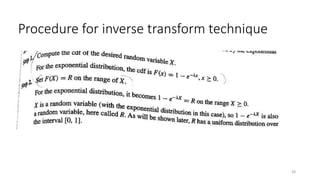

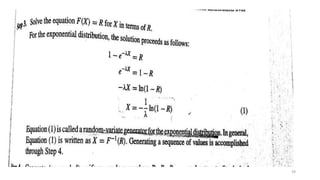

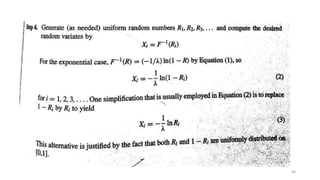

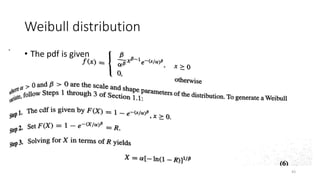

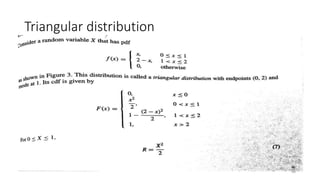

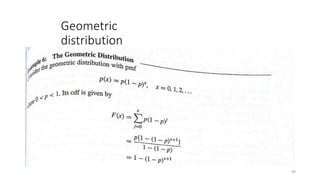

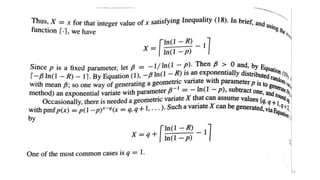
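Item 3 also names the autocorrelation test, which the slide text reproduced here does not spell out. The sketch below follows the form commonly given in simulation textbooks, and the formulas are assumptions of this sketch rather than content taken from the slides. For a starting index $i$, lag $m$ and $N$ numbers, let $M$ be the largest integer with $i + (M+1)m \le N$. Then $\hat\rho_{im} = \frac{1}{M+1}\sum_{k=0}^{M} R_{i+km}R_{i+(k+1)m} - 0.25$, $\sigma_{\hat\rho_{im}} = \frac{\sqrt{13M+7}}{12(M+1)}$, and $Z_0 = \hat\rho_{im}/\sigma_{\hat\rho_{im}}$. The function name `autocorrelation_z` is hypothetical.

```python
import math
import random
from typing import Sequence


def autocorrelation_z(r: Sequence[float], i: int, m: int) -> float:
    """Z0 statistic for the lag-m autocorrelation test starting at R_i (i is 1-based)."""
    n = len(r)
    big_m = (n - i) // m - 1  # largest M with i + (M + 1) * m <= N
    if big_m < 0:
        raise ValueError("not enough numbers for this starting index and lag")
    # Sum of products R_{i+km} * R_{i+(k+1)m} for k = 0..M (shifted by 1 for 0-based lists).
    total = sum(r[i - 1 + k * m] * r[i - 1 + (k + 1) * m] for k in range(big_m + 1))
    rho_hat = total / (big_m + 1) - 0.25
    sigma = math.sqrt(13 * big_m + 7) / (12 * (big_m + 1))
    return rho_hat / sigma


rng = random.Random(11)
numbers = [rng.random() for _ in range(30)]
z0 = autocorrelation_z(numbers, i=3, m=5)
print(f"Z0 = {z0:.3f}")
```

Under $H_0$ (no autocorrelation), $Z_0$ is approximately standard normal, so at $\alpha = 0.05$ the hypothesis is rejected when $|Z_0| > 1.96$.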

4) Random variate generation techniques such as the inverse transform method and the acceptance-rejection technique, and special properties of distributions such as the normal, lognormal, and Erlang.
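The special-property generators mentioned in item 4 can be sketched as follows, under assumptions that are this sketch's own choices. The exponential variate uses the inverse transform: solving $1 - e^{-\lambda X} = R$ gives $X = -\ln(1-R)/\lambda$. The Erlang variate is the sum of $k$ independent exponentials, parameterized here by $k$ stages each with rate $\lambda$ (so the mean is $k/\lambda$; some texts use a different parameterization). Normal variates come from the Box-Muller transform, one standard technique among several. Lognormal variates use the property that $e^Y$ is lognormal when $Y$ is normal. All function names are hypothetical.

```python
import math
import random
from typing import Tuple


def exponential(rng: random.Random, lam: float) -> float:
    """Inverse transform for Exp(lam): X = -ln(1 - R) / lam."""
    return -math.log(1.0 - rng.random()) / lam  # 1 - R lies in (0, 1], so log is defined


def erlang(rng: random.Random, k: int, lam: float) -> float:
    """Erlang(k, lam) as the sum of k independent Exp(lam) variates (mean k / lam)."""
    return sum(exponential(rng, lam) for _ in range(k))


def normal_pair(rng: random.Random, mu: float = 0.0, sigma: float = 1.0) -> Tuple[float, float]:
    """Box-Muller transform: two independent N(mu, sigma^2) variates from two uniforms."""
    r1 = 1.0 - rng.random()  # in (0, 1], avoids log(0)
    r2 = rng.random()
    radius = math.sqrt(-2.0 * math.log(r1))
    z1 = radius * math.cos(2.0 * math.pi * r2)
    z2 = radius * math.sin(2.0 * math.pi * r2)
    return mu + sigma * z1, mu + sigma * z2


def lognormal(rng: random.Random, mu: float, sigma: float) -> float:
    """If Y ~ N(mu, sigma^2), then X = exp(Y) is lognormal."""
    y, _ = normal_pair(rng, mu, sigma)
    return math.exp(y)


rng = random.Random(123)
print(exponential(rng, 0.5), erlang(rng, 3, 2.0), normal_pair(rng), lognormal(rng, 0.0, 1.0))
```

For simplicity, `lognormal` discards the second Box-Muller value; a production generator would usually cache it.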

The variance of a random number $R$ is given by

$$V(R) = \int_0^1 x^2\,dx - [E(R)]^2 = \frac{1}{12}$$

The following figure shows the pdf for random numbers.

![Variance and pdf of random numbers](https://image.slidesharecdn.com/unit3random-numbergenerationrandom-variategeneration-170113151313/85/Unit-3-random-number-generation-random-variate-generation-6-320.jpg)
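The variance $1/12$ of a $U[0,1]$ random number can be checked exactly from $E(R) = 1/2$ and $E(R^2) = \int_0^1 x^2\,dx = 1/3$, and empirically with a sample. This sketch assumes Python's built-in `random.Random` as the source of uniform numbers; any good $U[0,1)$ generator would do.

```python
import random
from fractions import Fraction

# Exact moments of R ~ U[0,1]: E(R) = 1/2, E(R^2) = 1/3.
mean = Fraction(1, 2)
second_moment = Fraction(1, 3)
variance = second_moment - mean ** 2
print(variance)  # 1/12

# Empirical check: sample mean should be near 0.5 and sample variance near 1/12.
rng = random.Random(2024)
samples = [rng.random() for _ in range(100_000)]
m = sum(samples) / len(samples)
v = sum((x - m) ** 2 for x in samples) / (len(samples) - 1)
print(round(m, 3), round(v, 4))
```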

Kolmogorov-Smirnov test for uniformity (procedure):

1. Formulate the hypotheses:
   - $H_0: R_i \sim U[0,1]$
   - $H_1: R_i \not\sim U[0,1]$
2. Rank the data from smallest to largest: $R_{(1)} \le R_{(2)} \le R_{(3)} \le \cdots$
3. Calculate the values of $D^+$ and $D^-$.

![Kolmogorov-Smirnov test for uniformity (procedure)](https://image.slidesharecdn.com/unit3random-numbergenerationrandom-variategeneration-170113151313/85/Unit-3-random-number-generation-random-variate-generation-19-320.jpg)
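The ranking step and the $D^+$, $D^-$ computation can be sketched as below. The formulas are the standard ones and are not spelled out on this slide: $D^+ = \max_i \{ i/N - R_{(i)} \}$, $D^- = \max_i \{ R_{(i)} - (i-1)/N \}$, and $D = \max(D^+, D^-)$. The function name `ks_uniform` and the five-number sample are illustrative.

```python
from typing import Sequence, Tuple


def ks_uniform(data: Sequence[float]) -> Tuple[float, float, float]:
    """Return (D+, D-, D) for the K-S test of H0: R_i ~ U[0,1]."""
    r = sorted(data)  # rank: R(1) <= R(2) <= ... <= R(N)
    n = len(r)
    # enumerate() is 0-based, so (i + 1)/n is i/N and i/n is (i - 1)/N in 1-based terms.
    d_plus = max((i + 1) / n - x for i, x in enumerate(r))
    d_minus = max(x - i / n for i, x in enumerate(r))
    return d_plus, d_minus, max(d_plus, d_minus)


d_plus, d_minus, d = ks_uniform([0.44, 0.81, 0.14, 0.05, 0.93])
print(round(d_plus, 2), round(d_minus, 2), round(d, 2))  # 0.26 0.21 0.26
```

$D$ is then compared with the critical value $D_\alpha$ from a K-S table. For $N = 5$ and $\alpha = 0.05$ that value is 0.565, so this sample would not lead to rejecting $H_0$.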

Chi-square test for uniformity (procedure):

1. Formulate the hypotheses:
   - $H_0: R_i \sim U[0,1]$
   - $H_1: R_i \not\sim U[0,1]$
2. Divide the data into $n$ class intervals of equal width.
3. Count how many random numbers lie in each interval to obtain the observed frequency $O_i$, and compute the expected frequency $E_i = N/n$, where $N$ is the total number of observations and $n$ is the number of class intervals. $L = n - 1$ is the number of degrees of freedom.

![Chi-square test for uniformity (procedure)](https://image.slidesharecdn.com/unit3random-numbergenerationrandom-variategeneration-170113151313/85/Unit-3-random-number-generation-random-variate-generation-23-320.jpg)
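The counting step and the usual test statistic $\chi_0^2 = \sum_{i=1}^{n} (O_i - E_i)^2 / E_i$ can be sketched as follows. The slide stops before the statistic itself, so its form here is the standard one. The function name `chi_square_uniform` is hypothetical, and classes are assumed to be the equal-width intervals $[k/n, (k+1)/n)$ on $[0,1)$.

```python
import random
from typing import Sequence


def chi_square_uniform(data: Sequence[float], n_intervals: int) -> float:
    """Chi-square statistic sum((O_i - E_i)^2 / E_i) for H0: R_i ~ U[0,1]."""
    expected = len(data) / n_intervals  # E_i = N / n
    observed = [0] * n_intervals
    for x in data:
        # Equal-width class index; a value of exactly 1.0 goes into the last class.
        k = min(int(x * n_intervals), n_intervals - 1)
        observed[k] += 1
    return sum((o - expected) ** 2 / expected for o in observed)


rng = random.Random(7)
sample = [rng.random() for _ in range(100)]
chi0 = chi_square_uniform(sample, n_intervals=10)
print(f"chi0^2 = {chi0:.3f}")
```

$\chi_0^2$ is compared with the tabulated critical value $\chi^2_{\alpha,\,n-1}$. With 10 intervals (9 degrees of freedom) and $\alpha = 0.05$ that value is 16.919, and $H_0$ is rejected if $\chi_0^2$ exceeds it.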

Uniform distribution:

- Consider a random variable $X$ that is uniformly distributed on the interval $[a,b]$.
- Step 1: The cdf is given by

  $$F(x) = \begin{cases} 0, & x < a \\ \dfrac{x-a}{b-a}, & a \le x \le b \\ 1, & x > b \end{cases}$$

- Step 2: Set $F(X) = \dfrac{X-a}{b-a} = R$.
- Step 3: Solving for $X$ gives $X = a + (b-a)R$, which is the equation for random variate generation from the uniform distribution.

![Uniform distribution](https://image.slidesharecdn.com/unit3random-numbergenerationrandom-variategeneration-170113151313/85/Unit-3-random-number-generation-random-variate-generation-42-320.jpg)
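The result $X = a + (b-a)R$ translates directly into code. In this minimal sketch the function name `uniform_variate` is hypothetical, and `random.Random` is assumed as the source of $R$.

```python
import random


def uniform_variate(a: float, b: float, r: float) -> float:
    """Inverse transform for U[a, b]: X = a + (b - a) * R."""
    return a + (b - a) * r


rng = random.Random(42)
xs = [uniform_variate(2.0, 5.0, rng.random()) for _ in range(5)]
print(xs)
```

$R = 0$ maps to $a$ and $R = 1$ maps to $b$, so the linear map stretches $[0,1]$ onto $[a,b]$ without changing the uniform shape.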

Acceptance-rejection technique:

A method for generating random variates $X$ uniformly distributed between 1/4 and 1 follows three steps:

1. Generate a random number $R$.
2. Test $R$:
   - a) If $R \ge 1/4$, accept $X = R$, then go to step 3.
   - b) If $R < 1/4$, reject $R$ and return to step 1.
3. If another uniform random variate on $[1/4, 1]$ is needed, repeat the procedure beginning at step 1; if not, stop.

![Acceptance-rejection technique](https://image.slidesharecdn.com/unit3random-numbergenerationrandom-variategeneration-170113151313/85/Unit-3-random-number-generation-random-variate-generation-52-320.jpg)
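The three acceptance-rejection steps for $X \sim U[1/4, 1]$ can be sketched as a loop. This is a minimal sketch: the function name is hypothetical, and `random.Random.random()` is assumed as the source of $R$. That method returns values in $[0, 1)$, so accepted values lie in $[1/4, 1)$.

```python
import random


def uniform_quarter_to_one(rng: random.Random) -> float:
    """Acceptance-rejection: generate X ~ U[1/4, 1] from U[0, 1) random numbers."""
    while True:
        r = rng.random()  # step 1: generate a random number R
        if r >= 0.25:     # step 2a: accept X = R
            return r
        # step 2b: R < 1/4, so reject R and loop back to step 1


rng = random.Random(7)
# Step 3: call again for each additional variate that is needed.
xs = [uniform_quarter_to_one(rng) for _ in range(10_000)]
print(sum(xs) / len(xs))
```

Each trial is accepted with probability 3/4, so on average $4/3$ random numbers are consumed per variate. For this particular distribution the inverse transform $X = 1/4 + (3/4)R$ would be cheaper; the example only illustrates the mechanics of rejection.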