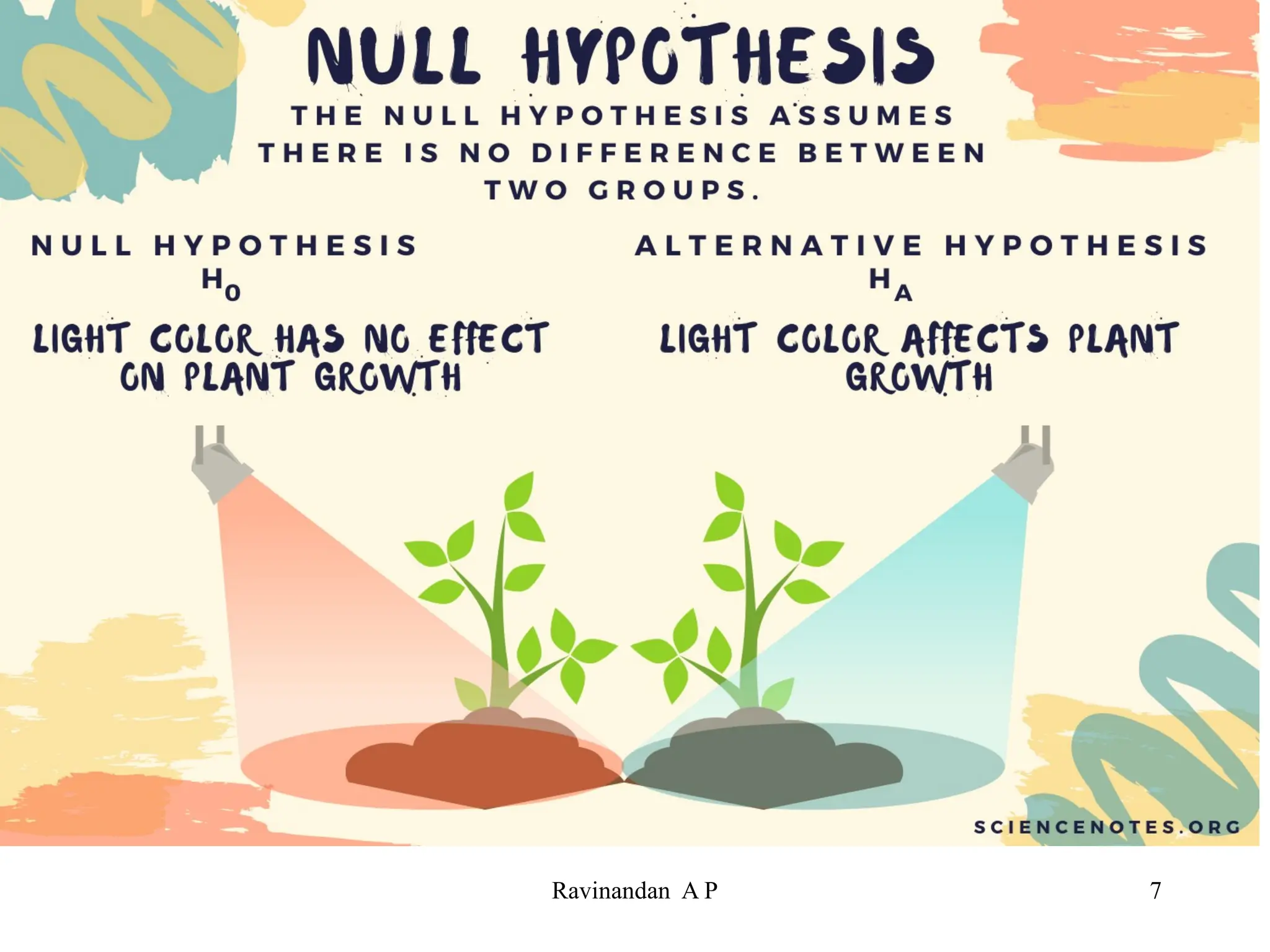

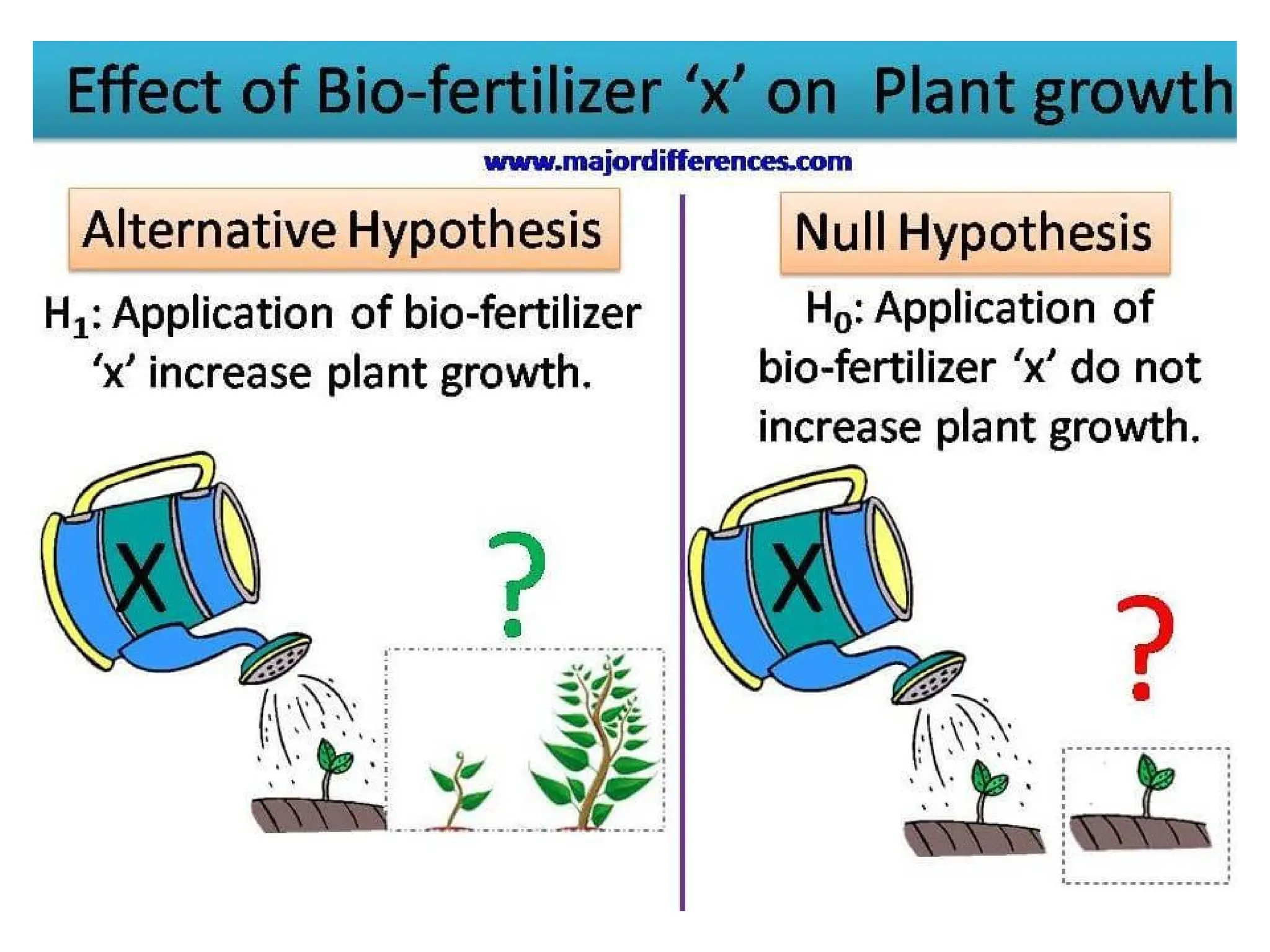

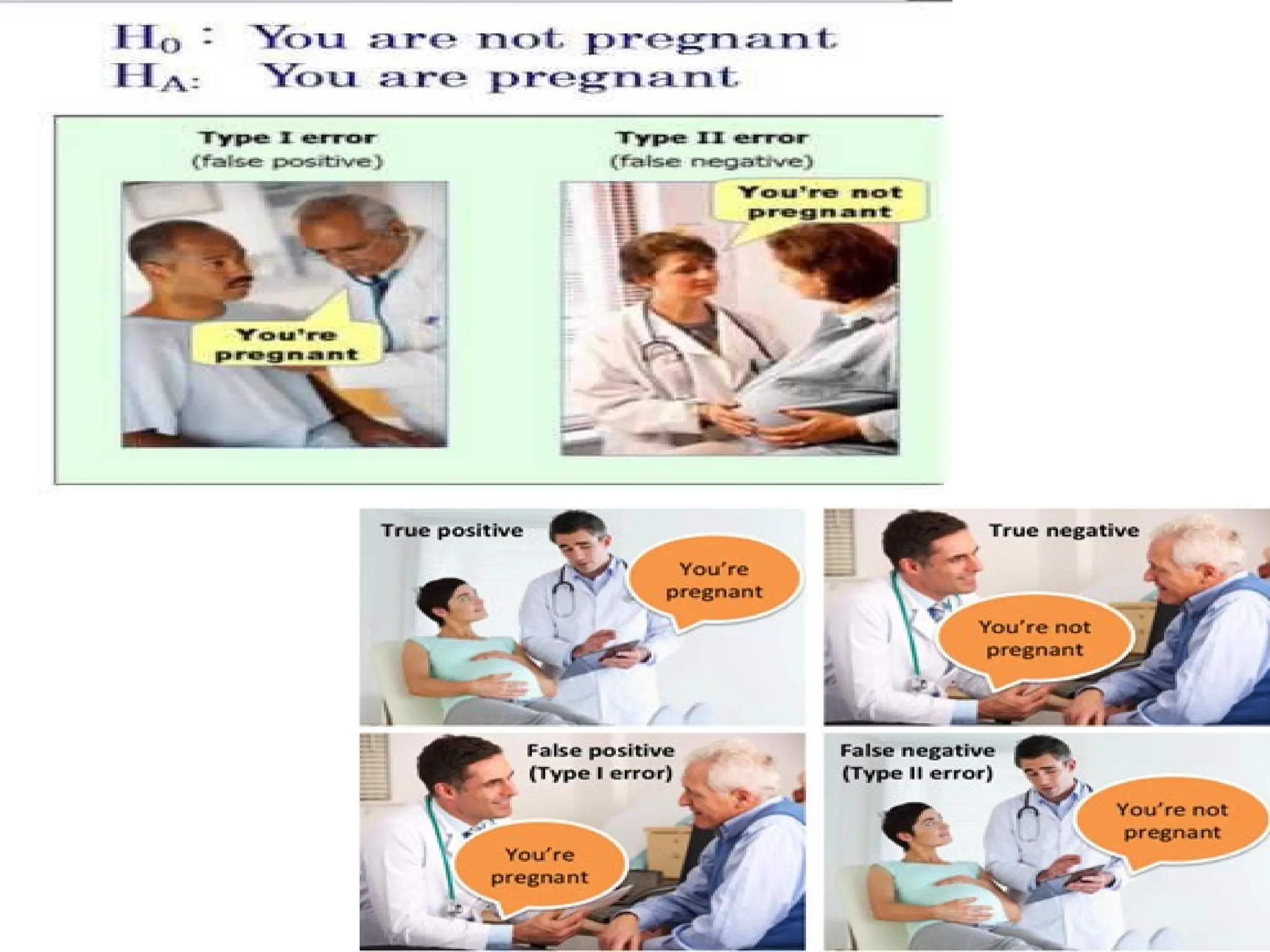

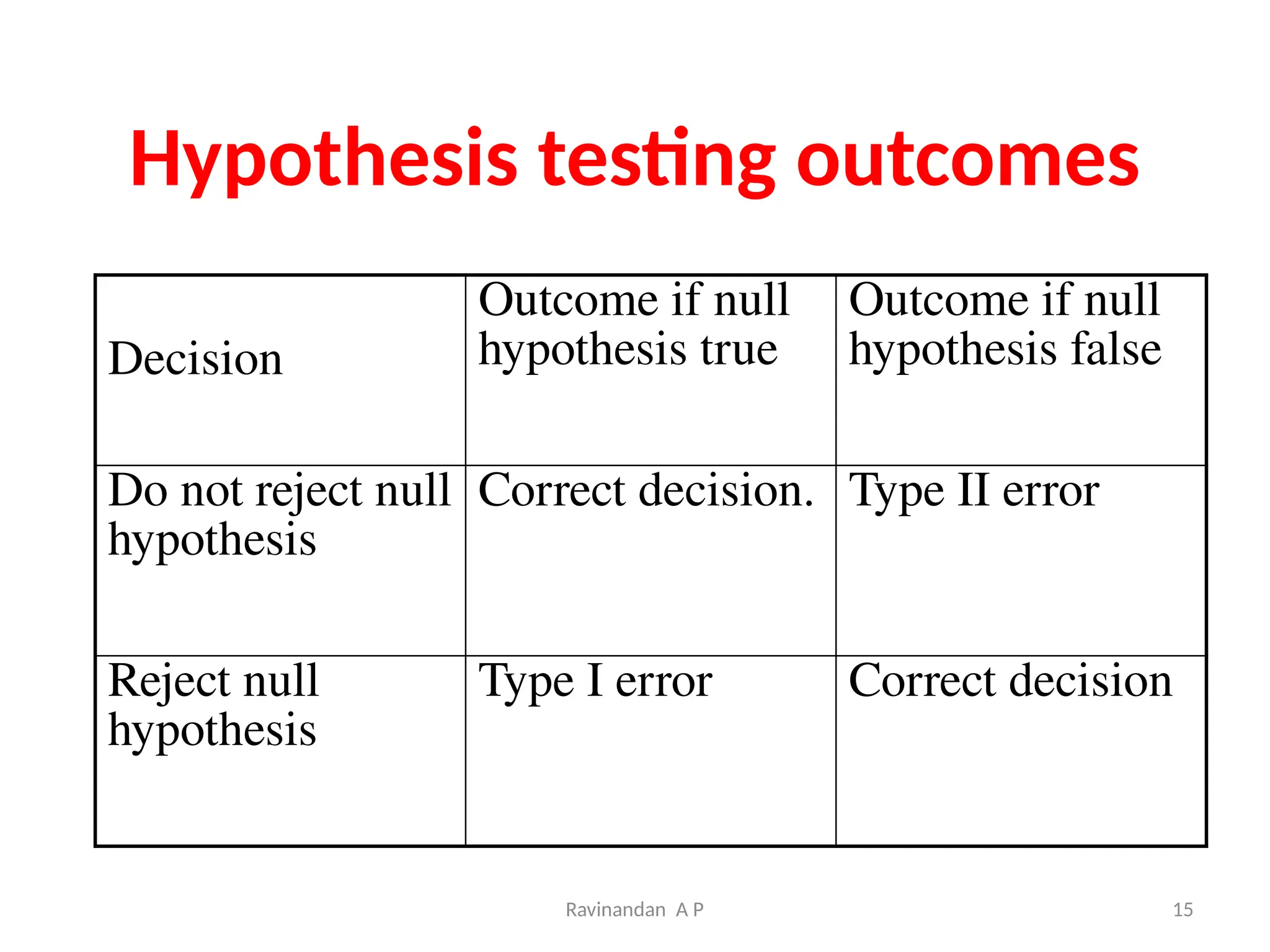

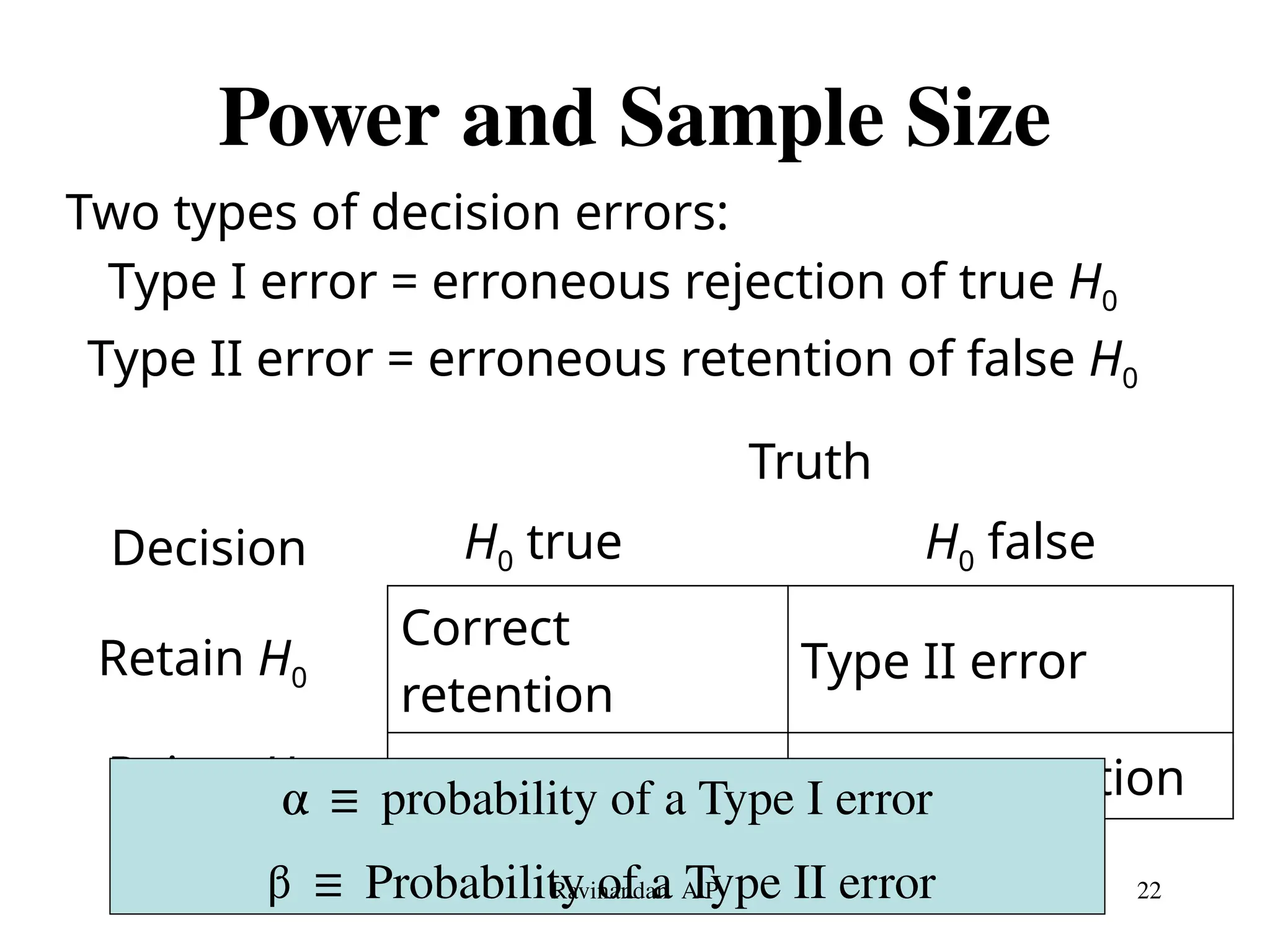

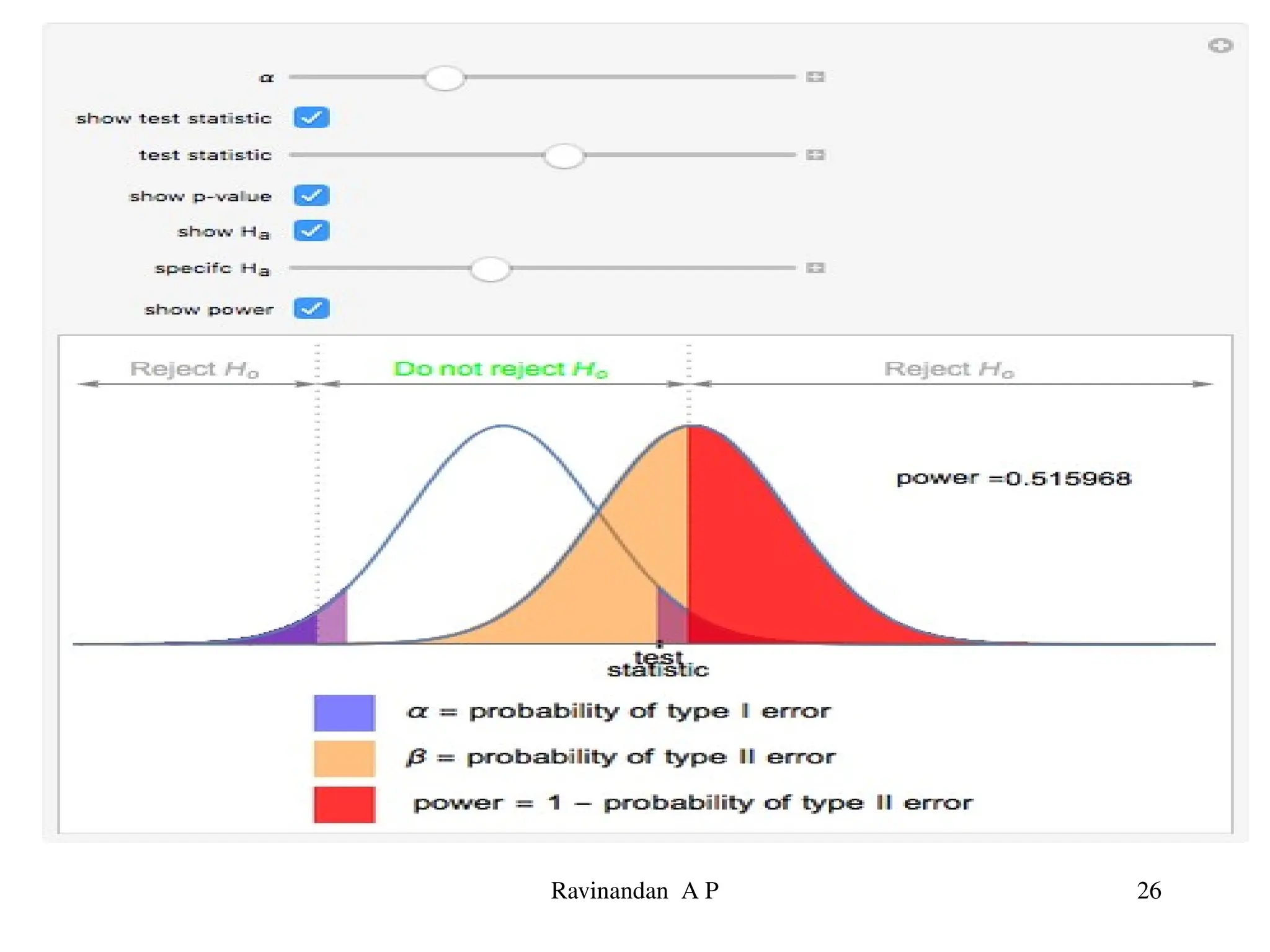

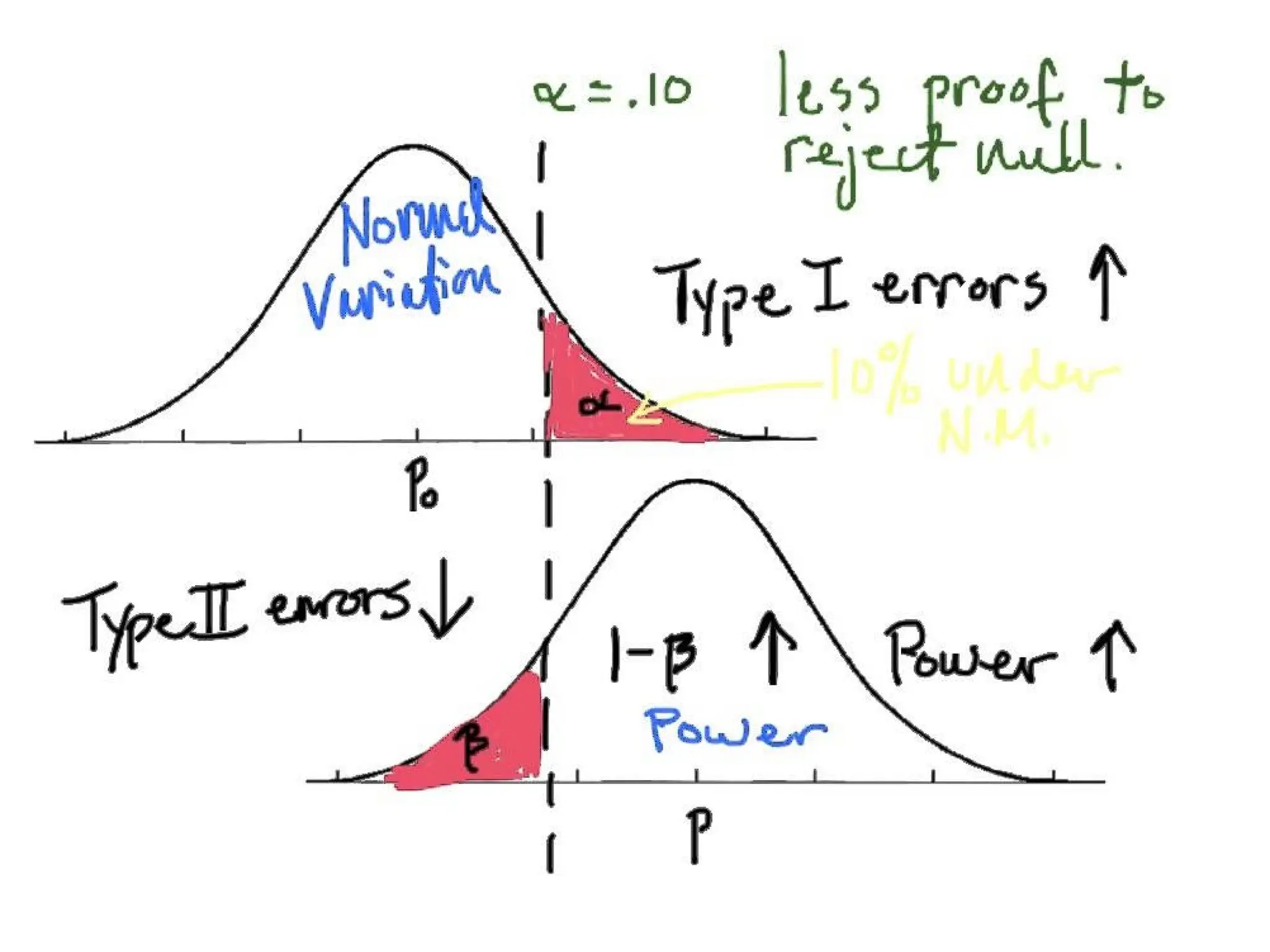

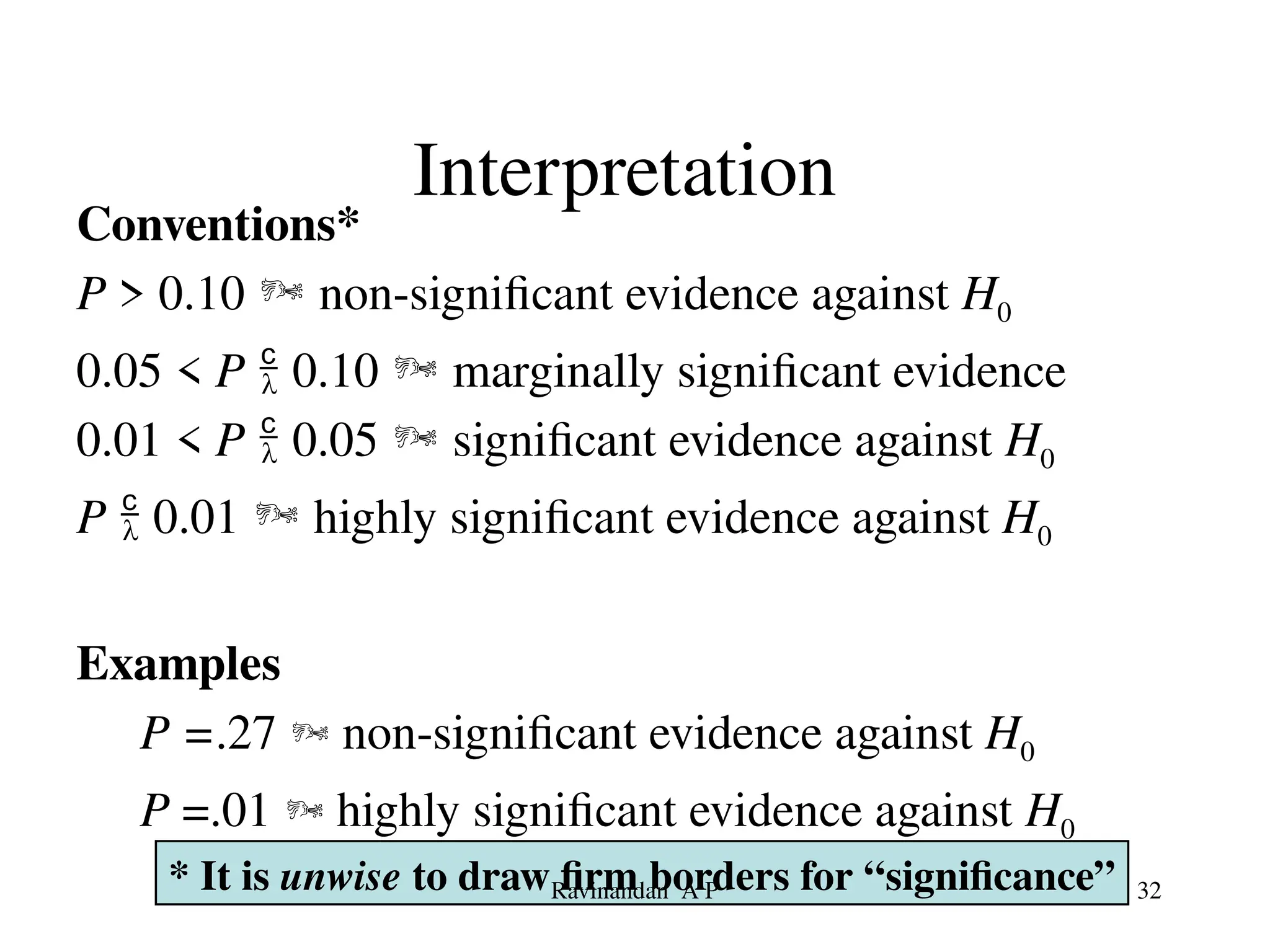

The document covers the basics of hypothesis testing: null and alternative hypotheses, Type I and Type II errors, significance levels, and p-values. It explains how statistical tests assess whether an observed difference could plausibly have arisen by chance alone, with examples relevant to pharmaceutical research. It also addresses the factors that affect the power of a test and how to interpret a p-value as a measure of the strength of evidence against the null hypothesis.
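
As a rough illustration of how a p-value and a significance level fit together, the following minimal sketch runs an exact two-sided permutation test on two small groups. The data, the group names (`placebo`, `drug`), the helper `exact_permutation_p_value`, and the choice of `alpha = 0.05` are all hypothetical and are not taken from the slides; the permutation test is just one convenient test to demonstrate the idea, not necessarily the one the document uses.

```python
from itertools import combinations


def exact_permutation_p_value(group_a, group_b):
    """Two-sided exact permutation test for a difference in means.

    Under the null hypothesis the group labels are interchangeable, so
    every way of splitting the pooled values into groups of the original
    sizes is equally likely. The p-value is the fraction of splits whose
    absolute mean difference is at least as large as the observed one.
    """
    pooled = list(group_a) + list(group_b)
    n_a, n_b = len(group_a), len(group_b)
    total = sum(pooled)

    def statistic(sum_a):
        # |mean_a - mean_b| multiplied by n_a * n_b, which keeps the
        # arithmetic exact when the measurements are integers.
        return abs(sum_a * n_b - (total - sum_a) * n_a)

    observed = statistic(sum(group_a))
    n_splits = 0
    n_extreme = 0
    for indices in combinations(range(len(pooled)), n_a):
        n_splits += 1
        if statistic(sum(pooled[i] for i in indices)) >= observed:
            n_extreme += 1
    return n_extreme / n_splits


# Hypothetical systolic blood pressure readings (mmHg), invented for illustration.
placebo = [120, 125, 130, 128, 122]
drug = [110, 112, 115, 108, 111]

alpha = 0.05  # significance level: the accepted Type I error rate
p_value = exact_permutation_p_value(placebo, drug)
reject_null = p_value < alpha

print(f"p-value = {p_value:.4f}, reject H0 at alpha={alpha}: {reject_null}")
```

With these made-up readings every placebo value exceeds every drug value, so only 2 of the 252 possible splits are as extreme as the observed one, and the null hypothesis of no difference is rejected at the 5% level. A small p-value here means that data this extreme would be unusual if the null hypothesis were true; it is not the probability that the null hypothesis is true.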