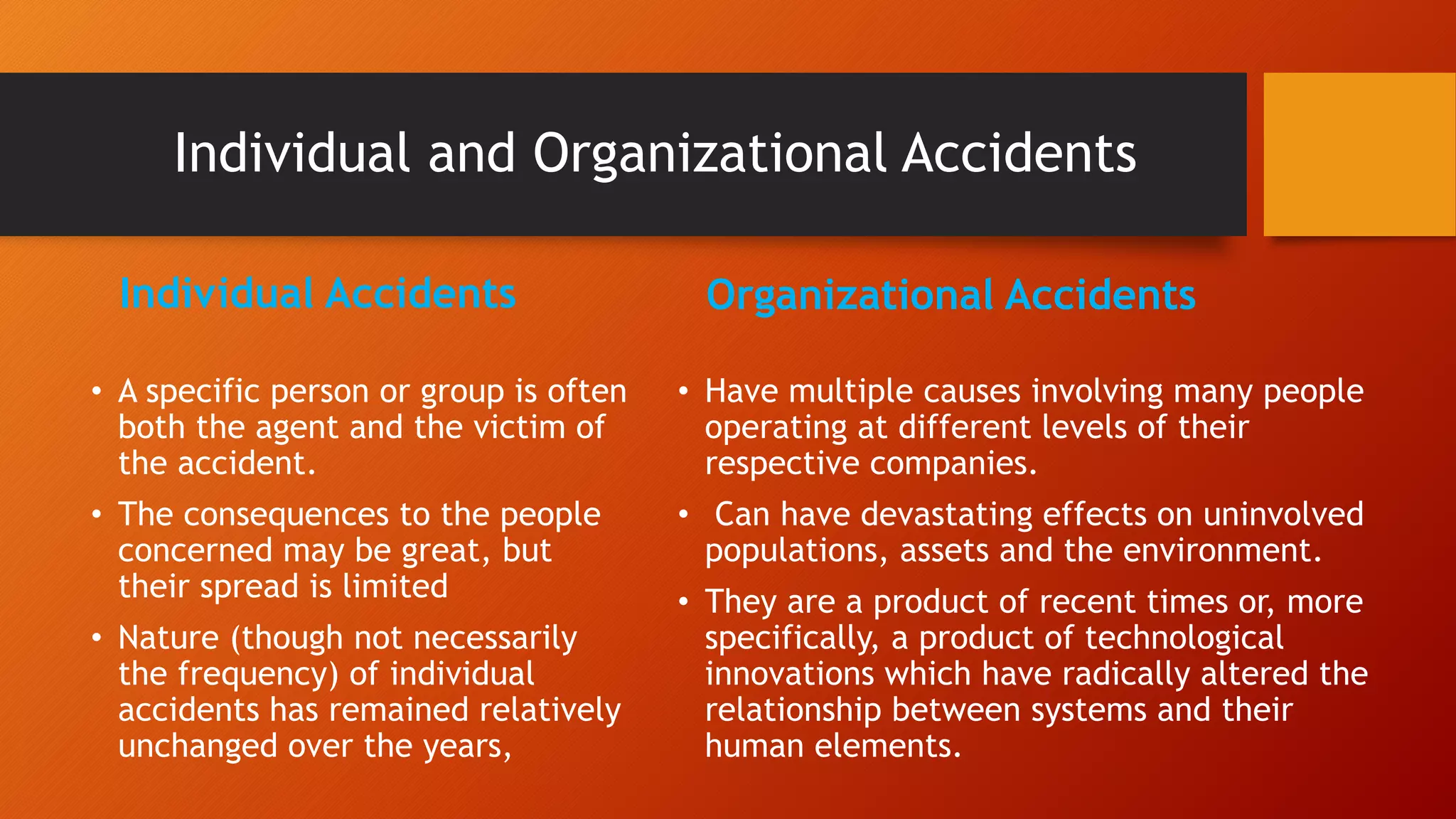

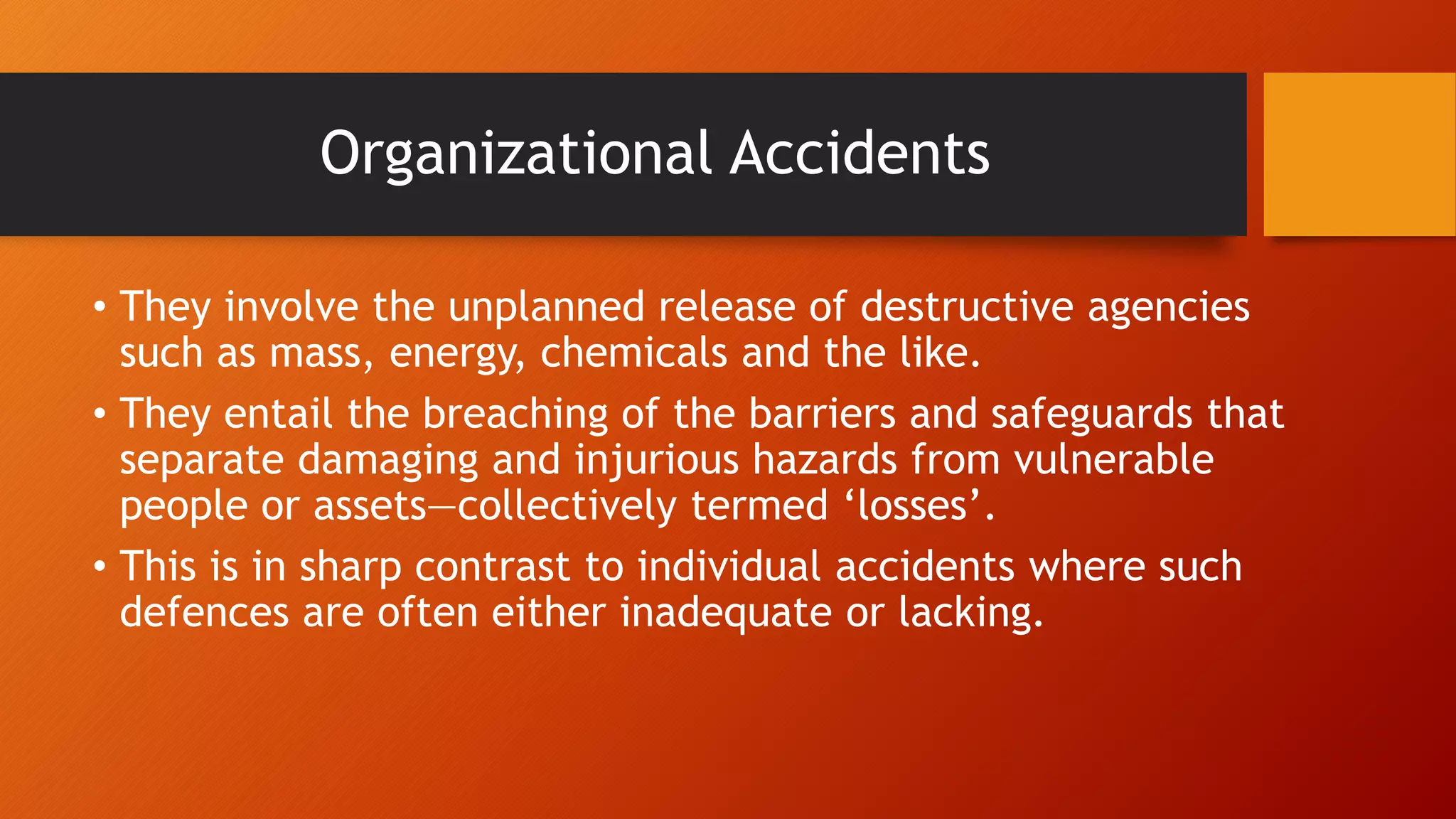

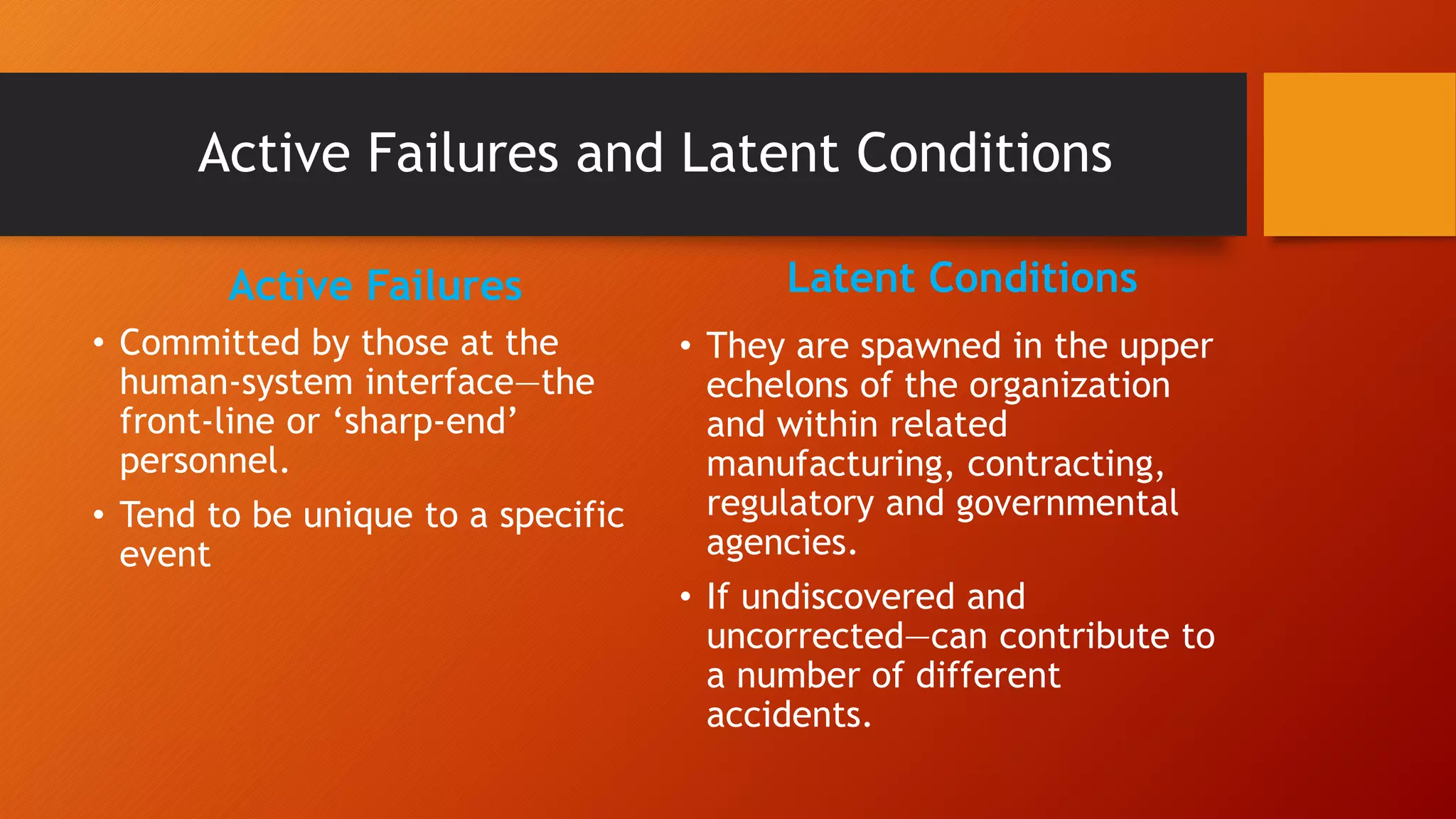

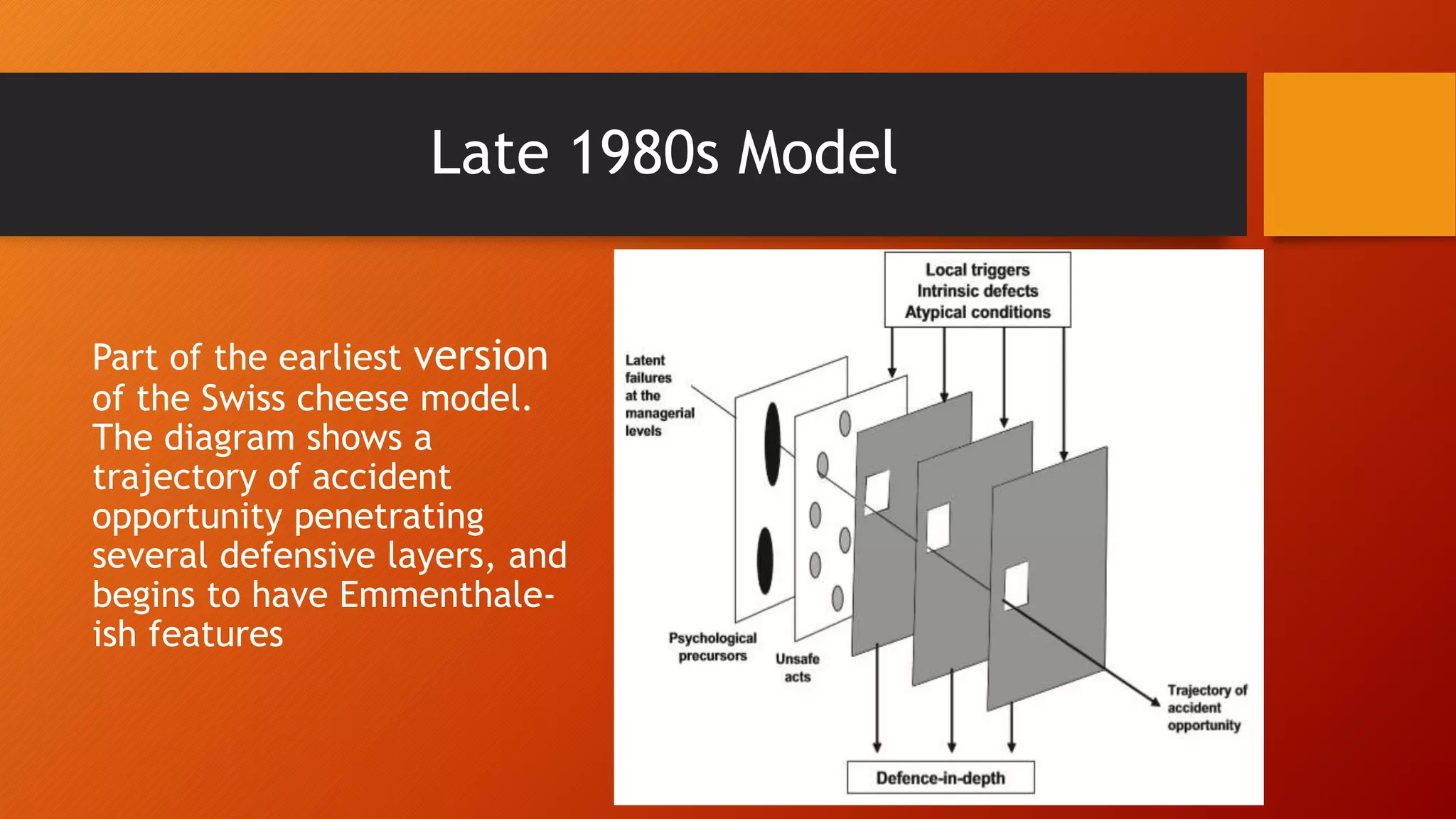

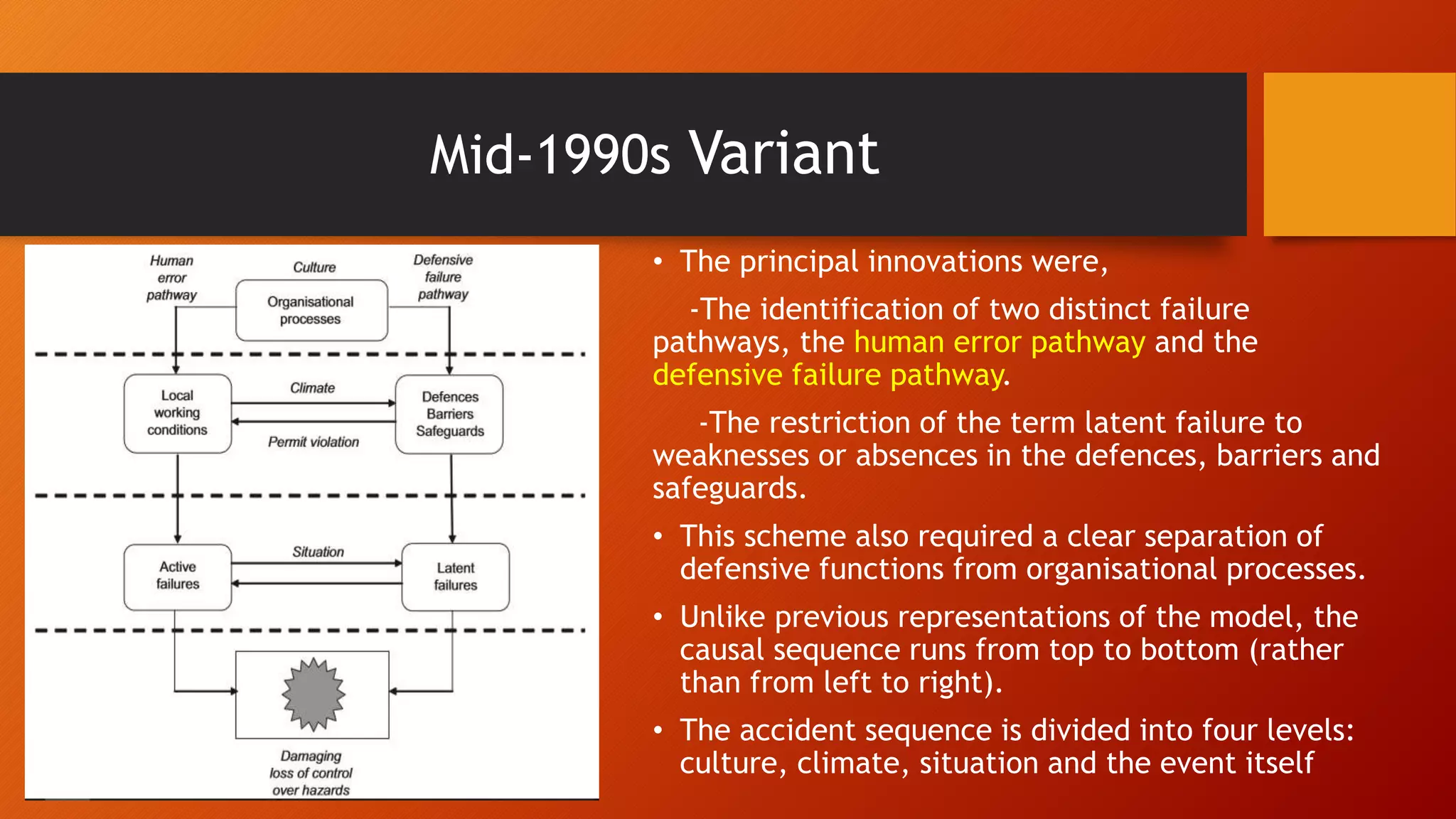

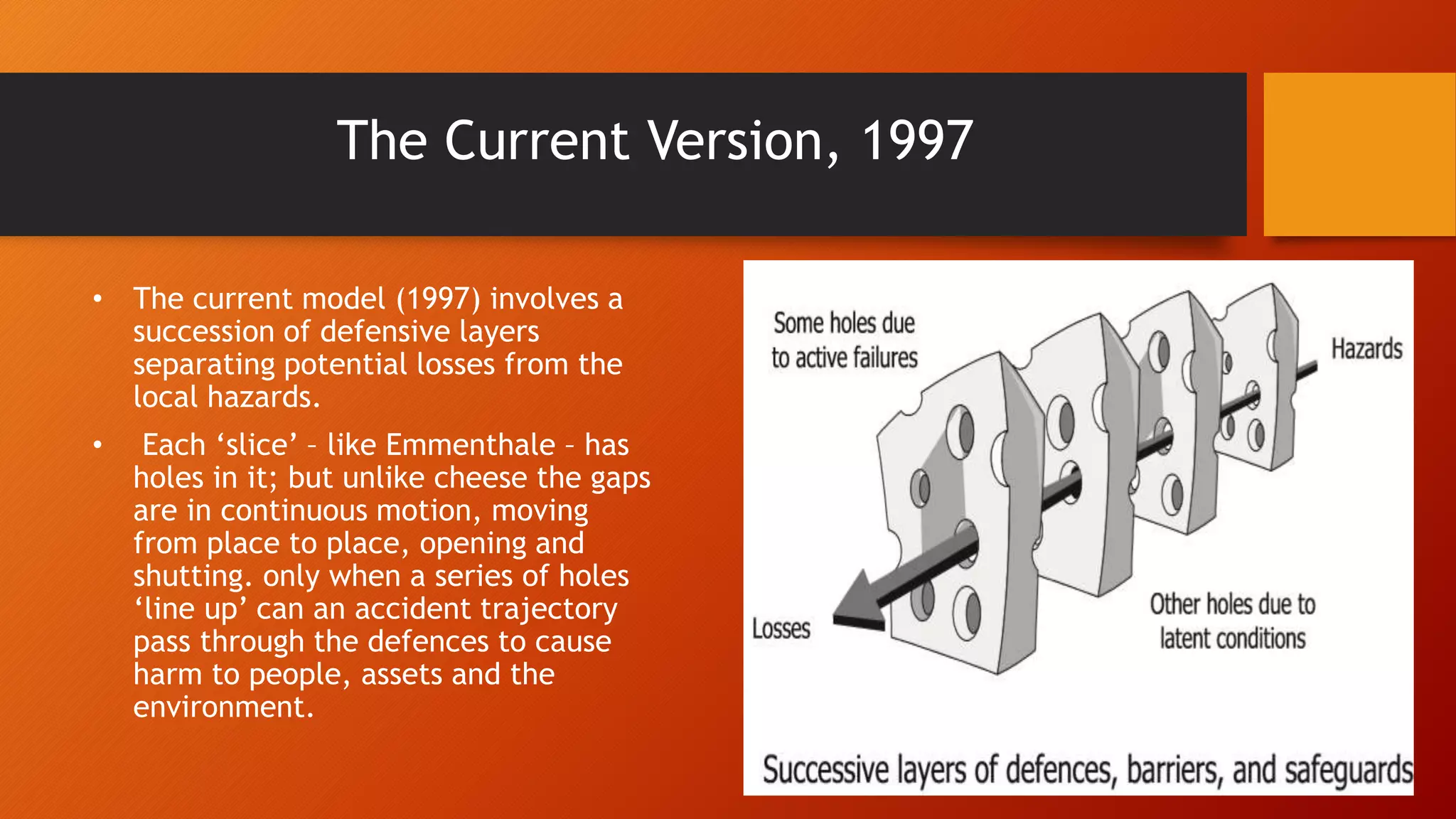

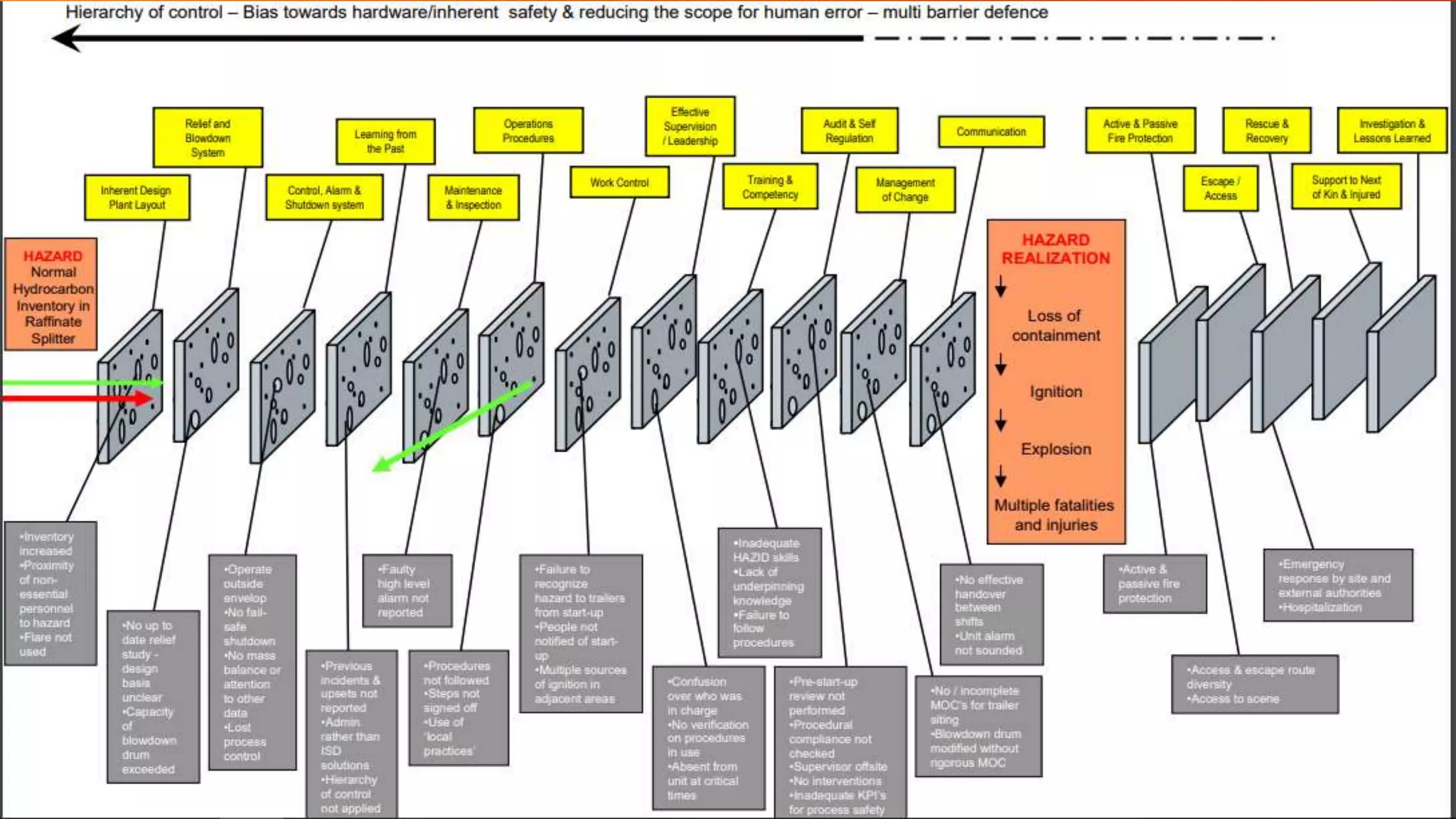

The Swiss Cheese Model document discusses models for analyzing accidents at the level of individuals and of organizations. An individual accident typically involves a single person who is both the agent and the victim. An organizational accident has multiple causal factors spread across different levels of an organization. The Swiss Cheese Model depicts an accident as the result of vulnerabilities ("holes") lining up across several defensive layers, which include human, technical, and organizational safeguards. The model has evolved over time. In general, it represents these defenses as successive slices of cheese whose holes, representing causal factors, open and close over time; an accident occurs when the holes in every slice align. The document applies the model to the 2005 BP Texas City refinery explosion. It identifies active failures by operators and latent failures within the organization, which together allowed the defenses to be breached along an accident trajectory.