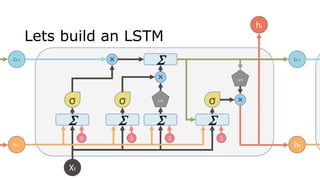

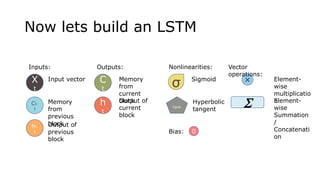

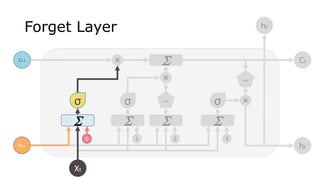

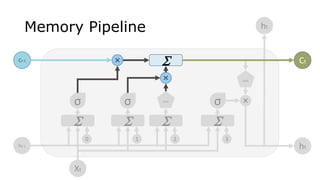

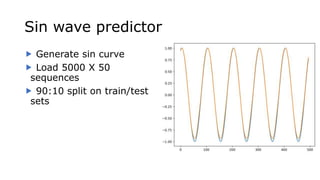

This document covers Long Short-Term Memory (LSTM) networks for time series prediction, describing their architecture and how they work. It reviews ways to give neural networks memory so that they can model time series data, and works through two examples: sine wave prediction and a power consumption dataset. It closes with references for further reading on LSTM networks and their applications.
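To make the idea of memory concrete, the following is a minimal sketch of one step of a single-unit LSTM cell using the standard gate equations, run over a sine wave. It is not taken from the document: the function `lstm_step`, the weight names, the constant weight value 0.5, and the sine step size 0.1 are all illustrative assumptions, and the weights are untrained, so the output shows only how state is carried from step to step, not a real prediction.

```python
import math


def sigmoid(z):
    """Logistic function, used by the three LSTM gates."""
    return 1.0 / (1.0 + math.exp(-z))


def lstm_step(x, h_prev, c_prev, w):
    """One step of a single-unit LSTM cell with scalar input and state.

    `w` is a dict of scalar weights (hypothetical names, for illustration).
    Returns the new hidden state h and the new cell state c.
    """
    f = sigmoid(w["wf"] * x + w["uf"] * h_prev + w["bf"])    # forget gate
    i = sigmoid(w["wi"] * x + w["ui"] * h_prev + w["bi"])    # input gate
    o = sigmoid(w["wo"] * x + w["uo"] * h_prev + w["bo"])    # output gate
    g = math.tanh(w["wg"] * x + w["ug"] * h_prev + w["bg"])  # candidate value
    c = f * c_prev + i * g   # cell state: keep part of the old, add part of the new
    h = o * math.tanh(c)     # hidden state: gated view of the cell state
    return h, c


WEIGHT_NAMES = ("wf", "uf", "bf", "wi", "ui", "bi",
                "wo", "uo", "bo", "wg", "ug", "bg")

# Assumption: arbitrary, untrained weights chosen only for demonstration.
weights = {name: 0.5 for name in WEIGHT_NAMES}

# A sine wave as the input series (step size 0.1 is an arbitrary choice).
series = [math.sin(0.1 * t) for t in range(50)]

h, c = 0.0, 0.0
states = []
for x in series:
    h, c = lstm_step(x, h, c, weights)  # state from the previous step is fed back in
    states.append(h)
```

The cell state `c` is what carries information across time steps: the forget gate scales the old value and the input gate scales the new candidate. In practice the weights would be learned by a framework rather than set by hand.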