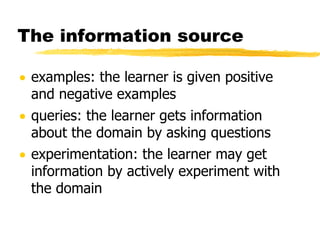

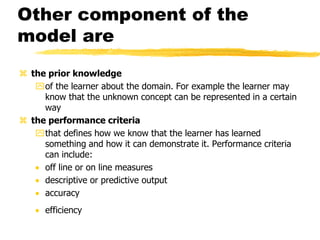

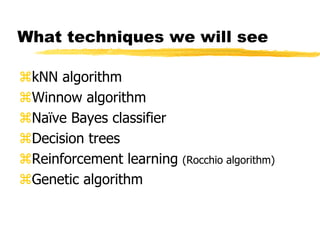

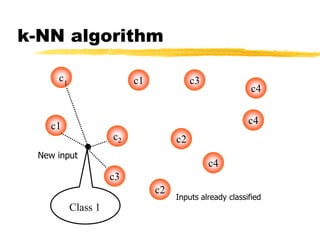

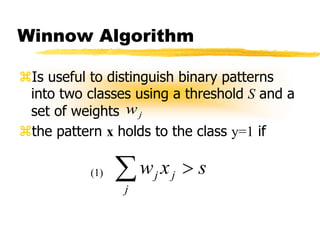

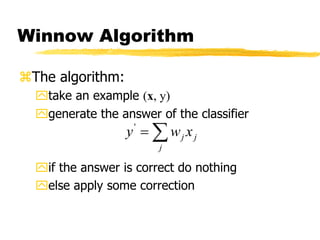

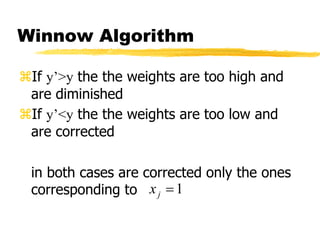

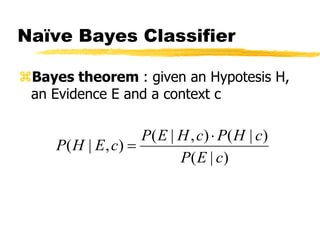

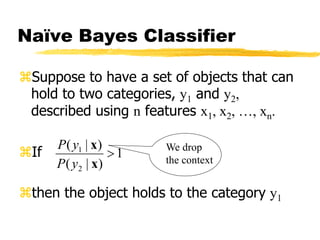

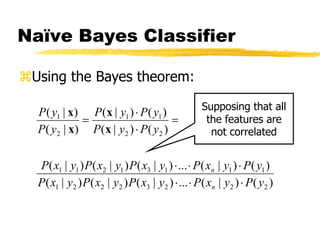

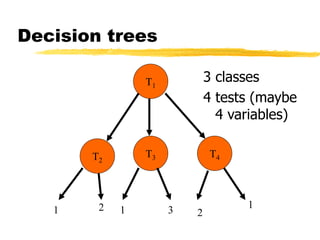

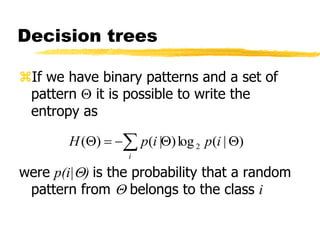

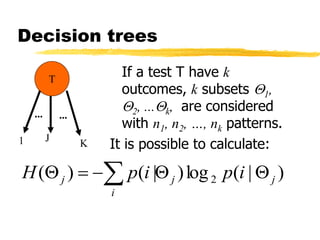

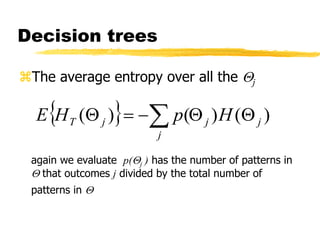

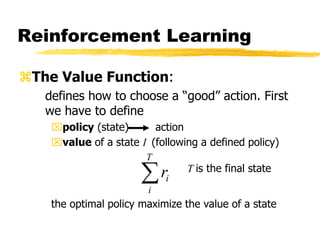

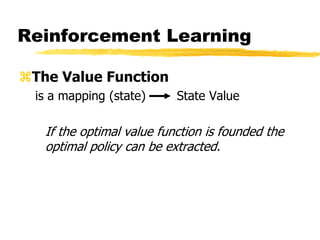

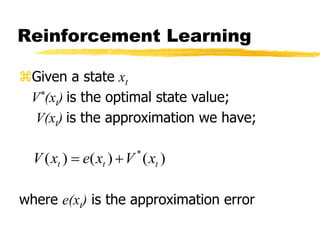

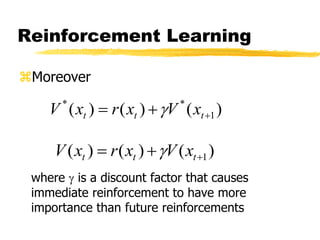

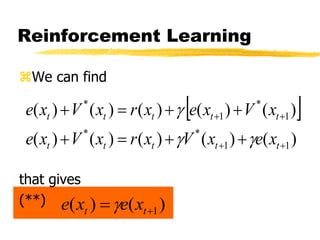

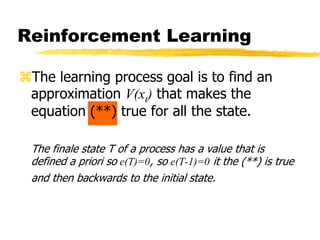

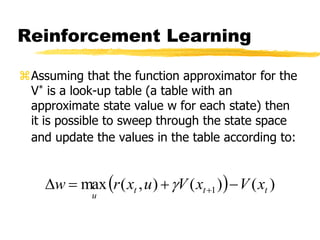

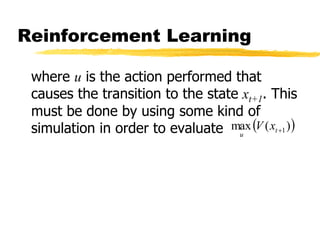

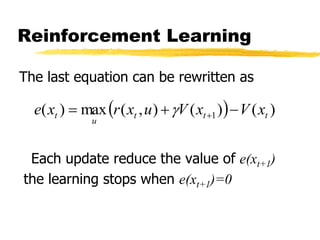

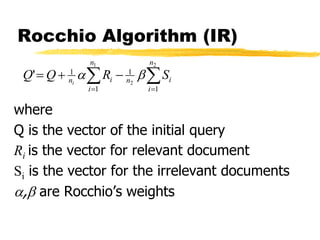

These slides cover machine learning and neural networks. Machine learning investigates how knowledge is acquired through experience. A machine learning model specifies three things: what is learned (the domain), who is learning (the computer program), and where the information comes from (the information source). The techniques covered are the k-nearest neighbors algorithm, the Winnow algorithm, the naive Bayes classifier, decision trees, and reinforcement learning. In reinforcement learning, an agent interacts with an environment and improves its outcomes through trial and error.
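
As an illustration of the first technique listed, here is a minimal sketch of k-nearest neighbors classification. It is not taken from the slides. The function name `knn_predict`, the toy data in `examples`, the use of Euclidean distance, and the default `k=3` are all assumptions chosen for the example.

```python
import math
from collections import Counter


def knn_predict(train, query, k=3):
    """Classify `query` by majority vote among its k nearest training examples.

    `train` is a list of (features, label) pairs; `query` is a feature tuple.
    Euclidean distance is an assumption here; other metrics work as well.
    """
    # Order the training examples from nearest to farthest from the query.
    by_distance = sorted(train, key=lambda pair: math.dist(pair[0], query))
    # Count the labels of the k closest examples and return the most common one.
    votes = Counter(label for _, label in by_distance[:k])
    return votes.most_common(1)[0][0]


# Hypothetical toy data: two well-separated clusters in the plane.
examples = [
    ((1.0, 1.0), "A"), ((1.2, 0.8), "A"), ((0.9, 1.1), "A"),
    ((5.0, 5.0), "B"), ((5.2, 4.9), "B"), ((4.8, 5.1), "B"),
]

if __name__ == "__main__":
    print(knn_predict(examples, (1.1, 1.0)))
    print(knn_predict(examples, (5.1, 5.0)))
```

In terms of the three-part model above, the labeled pairs play the role of the information source, the two classes form the domain, and `knn_predict` is the learner. Note that this learner does no work ahead of time: it stores the examples and defers all computation to the moment a query arrives.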