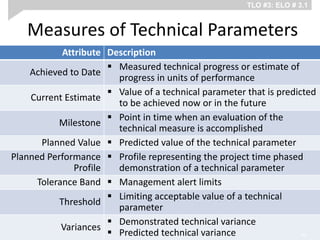

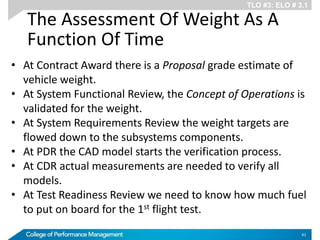

The document outlines an educational program on Technical Performance Measurements (TPMs) in the context of defense acquisition and project management. It defines learning objectives for establishing and evaluating TPMs, such as identifying measures linked to the Work Breakdown Structure and preparing descriptions to assess program progress. It also emphasizes integrating TPMs with Earned Value Management to improve project visibility and accountability and to reduce risk.