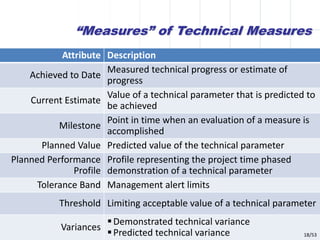

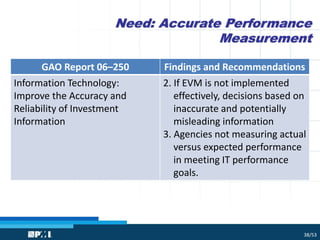

The document discusses how Technical Performance Measures (TPMs) are implemented in defense acquisition programs and why they matter, emphasizing their role in balancing cost, schedule, and system performance. It outlines the learning objectives for TPMs, including their integration into program management processes and the importance of related measures such as Measures of Effectiveness (MoEs), Measures of Performance (MoPs), and Key Performance Parameters (KPPs). It also stresses that a credible performance measurement baseline is necessary to assess program success and manage risk effectively.