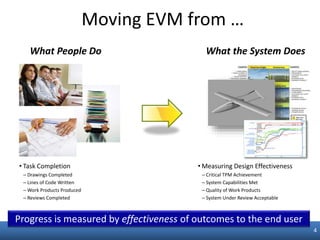

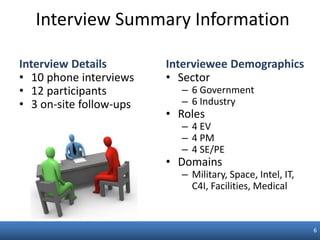

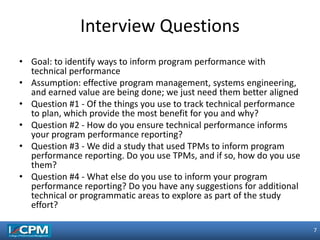

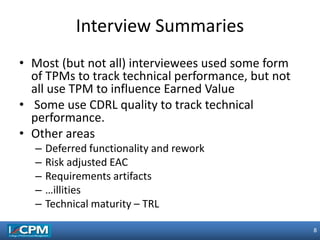

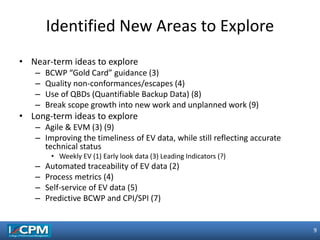

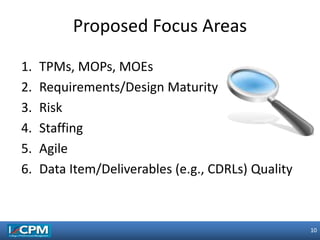

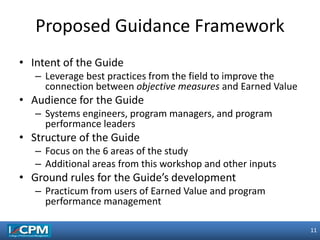

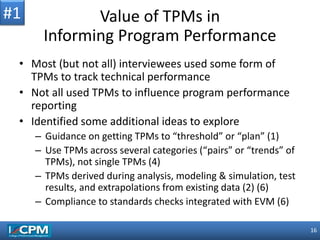

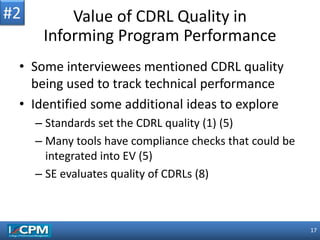

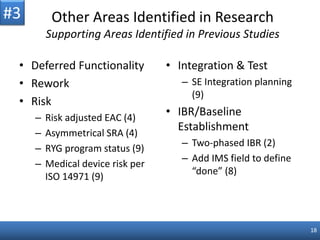

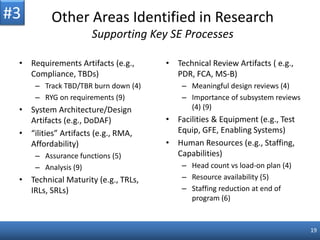

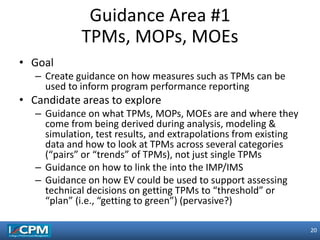

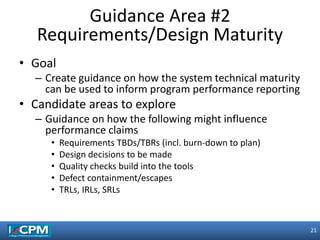

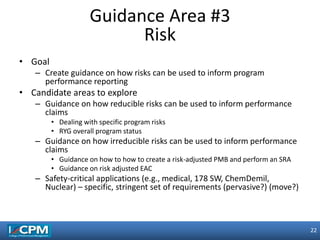

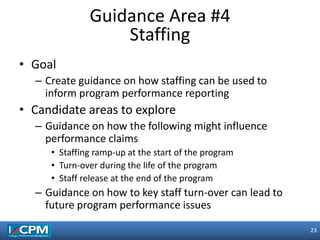

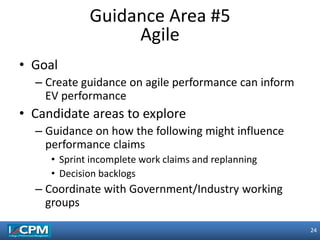

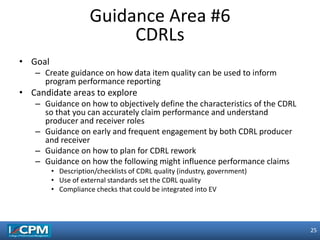

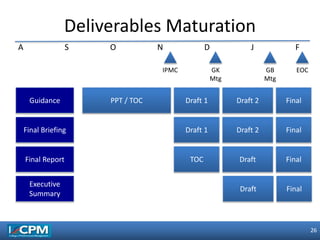

The document discusses a study on improving the alignment between technical performance measures (TPMs) and earned value management (EVM) in program performance reporting. Interviews with participants from both government and industry identified factors that affect project performance, including the use of TPMs, quality checks, and risk assessments. The intended outcome is a guide that helps program managers apply best practices to connect objective measures more closely with earned value.