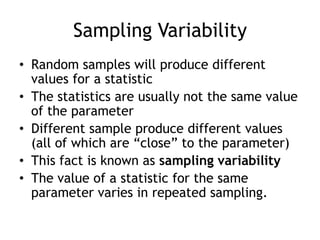

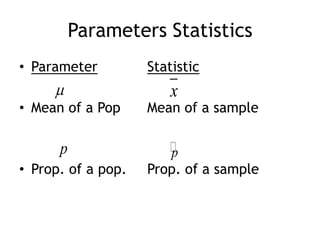

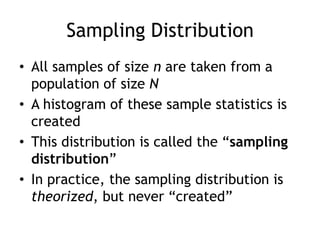

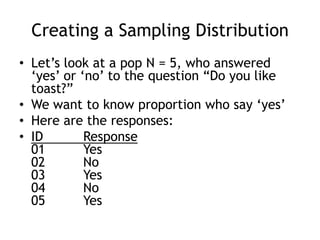

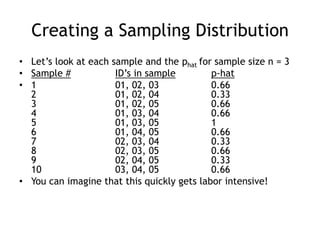

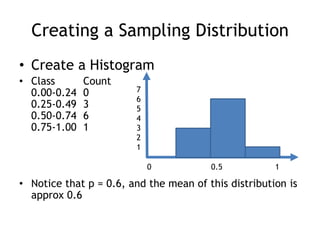

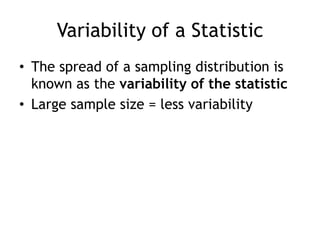

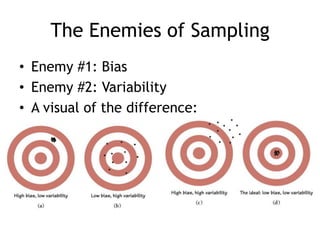

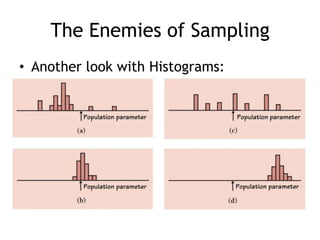

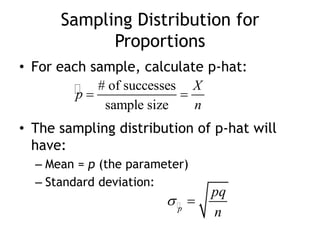

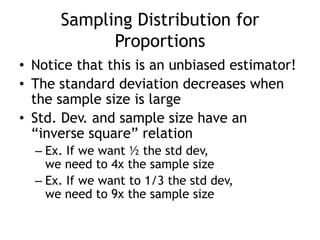

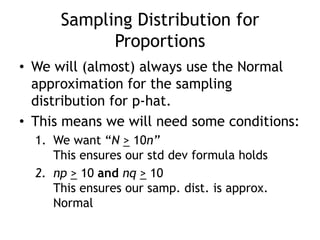

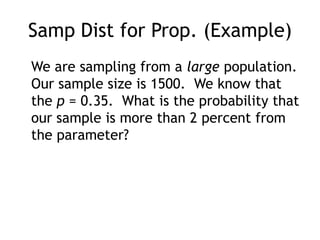

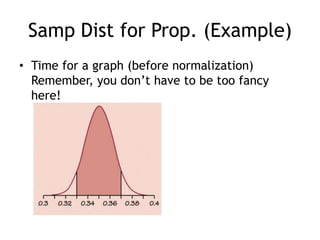

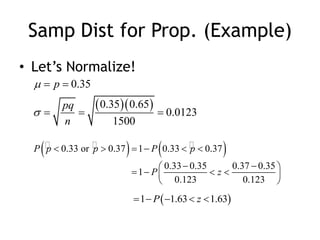

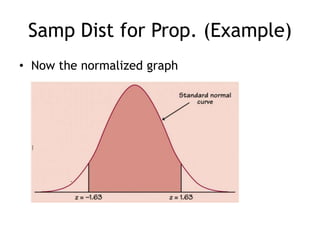

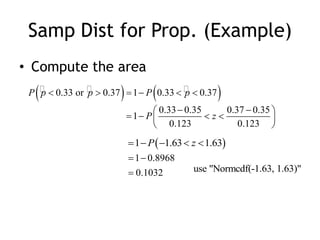

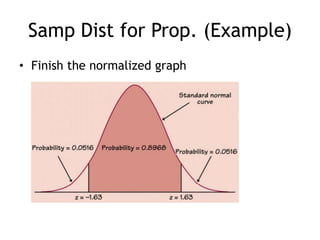

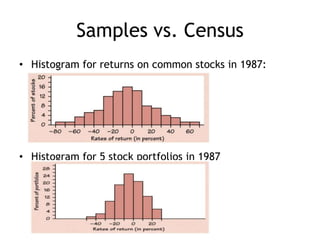

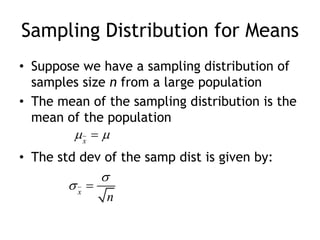

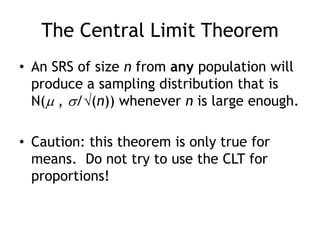

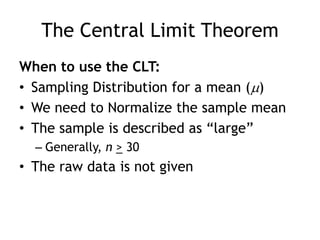

This document discusses sampling distributions and their properties. It defines key terms such as parameter, statistic, sampling variability, and sampling distribution. A sampling distribution is the distribution of the values a statistic takes across all possible samples of the same size drawn from the same population. The document then covers the sampling distributions of sample proportions and of sample means. For each, it gives the formula for the standard deviation of the sampling distribution and the conditions under which a normal approximation is appropriate. A worked example uses the normal approximation to calculate the probability that a sample proportion falls more than 2% away from the population proportion.
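The calculations summarized above can be sketched in a few lines of Python. This is a minimal illustration rather than the slides' own solution: the function names are hypothetical, and the conditions checked are the ones commonly taught in introductory courses (Large Counts: np ≥ 10 and n(1 − p) ≥ 10; 10% condition: n ≤ 0.10·N). The slide values are not reproduced in this summary, so the example inputs (p = 0.5, n = 1000) are also hypothetical. The sketch uses σ_p̂ = √(p(1 − p)/n) for proportions and σ_x̄ = σ/√n for means.

```python
import math
from typing import Optional


def proportion_sd(p: float, n: int) -> float:
    """Standard deviation of the sampling distribution of p-hat: sqrt(p(1-p)/n)."""
    return math.sqrt(p * (1 - p) / n)


def mean_sd(sigma: float, n: int) -> float:
    """Standard deviation of the sampling distribution of x-bar: sigma / sqrt(n)."""
    return sigma / math.sqrt(n)


def normal_ok(p: float, n: int, population: Optional[int] = None) -> bool:
    """Check the Large Counts condition and, if N is given, the 10% condition."""
    large_counts = n * p >= 10 and n * (1 - p) >= 10
    ten_percent = population is None or n <= 0.10 * population
    return large_counts and ten_percent


def normal_cdf(z: float) -> float:
    """Standard normal CDF computed from the error function."""
    return 0.5 * (1 + math.erf(z / math.sqrt(2)))


def prob_outside(p: float, n: int, margin: float) -> float:
    """Normal-approximation probability that |p-hat - p| > margin."""
    z = margin / proportion_sd(p, n)
    # Both tails, by the symmetry of the normal curve.
    return 2 * (1 - normal_cdf(z))


if __name__ == "__main__":
    # Hypothetical inputs: p = 0.5, n = 1000, population of 100,000.
    p, n = 0.5, 1000
    if normal_ok(p, n, population=100_000):
        print(f"sd = {proportion_sd(p, n):.4f}")
        print(f"P(|p-hat - p| > 0.02) = {prob_outside(p, n, 0.02):.4f}")
```

With these inputs the standard deviation is about 0.0158, so a 2% margin corresponds to z ≈ 1.26, and the two-tailed probability comes out at roughly 0.21.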