This document discusses estimation theory and the maximum likelihood principle. It introduces key concepts such as:

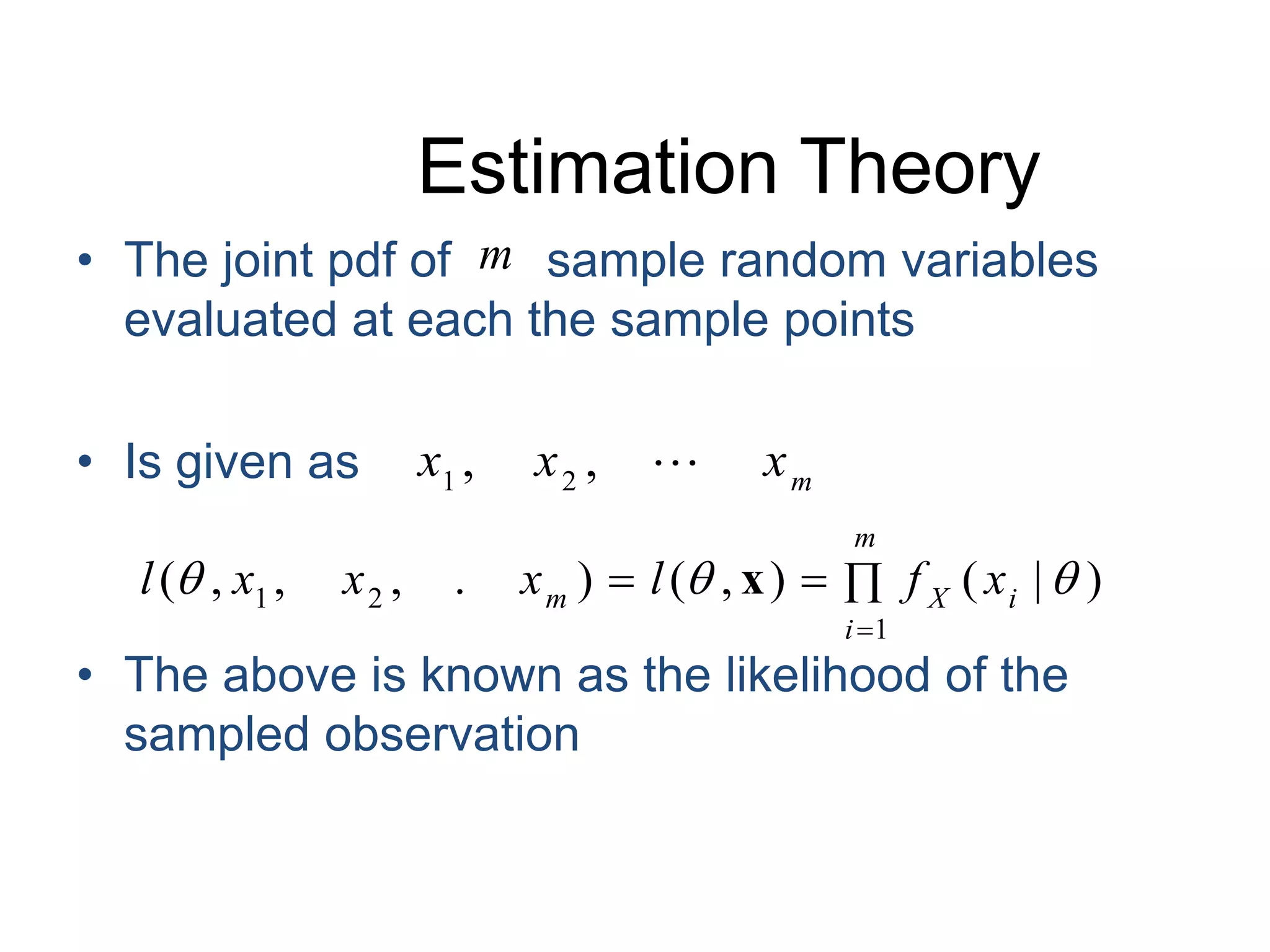

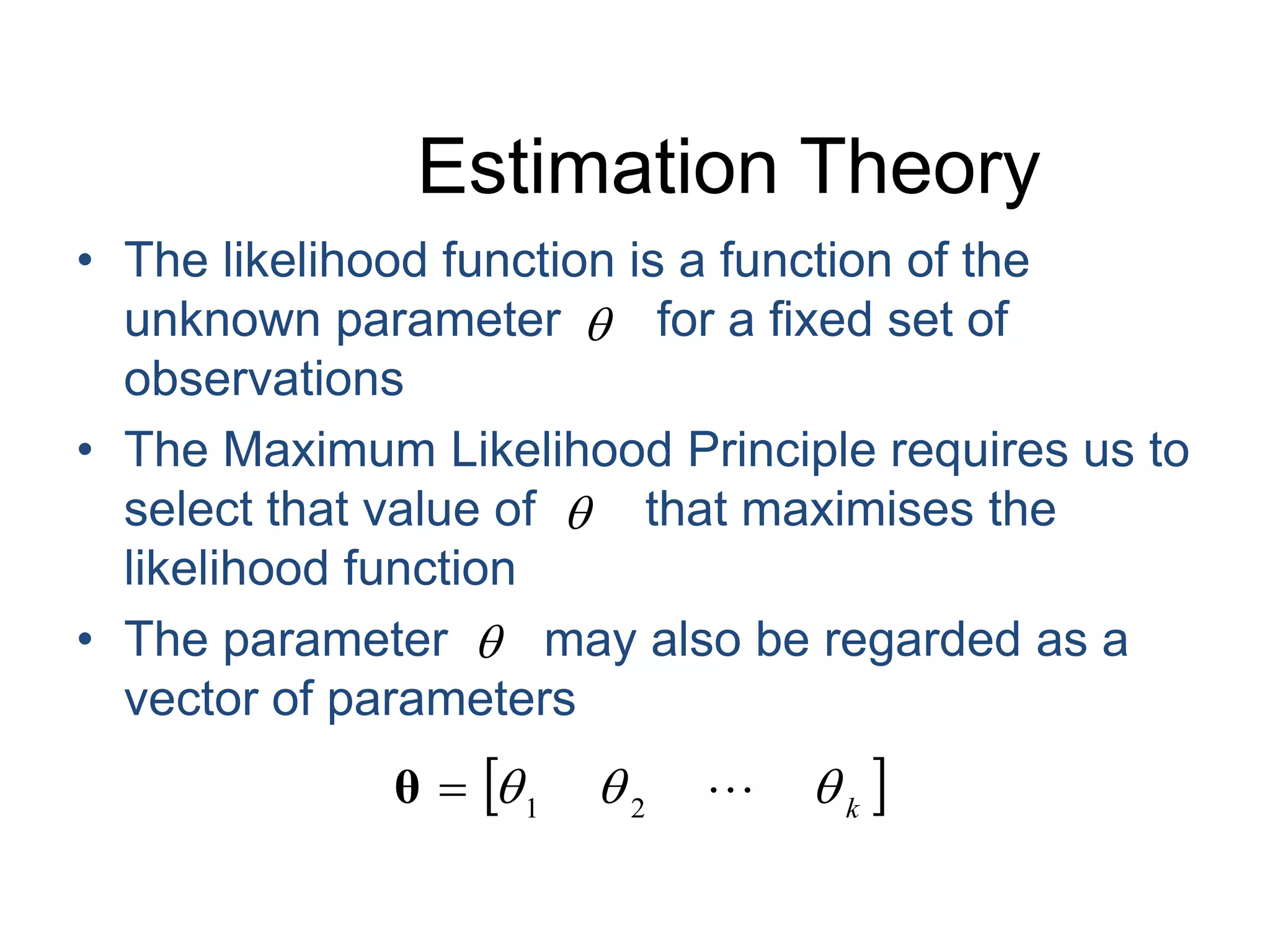

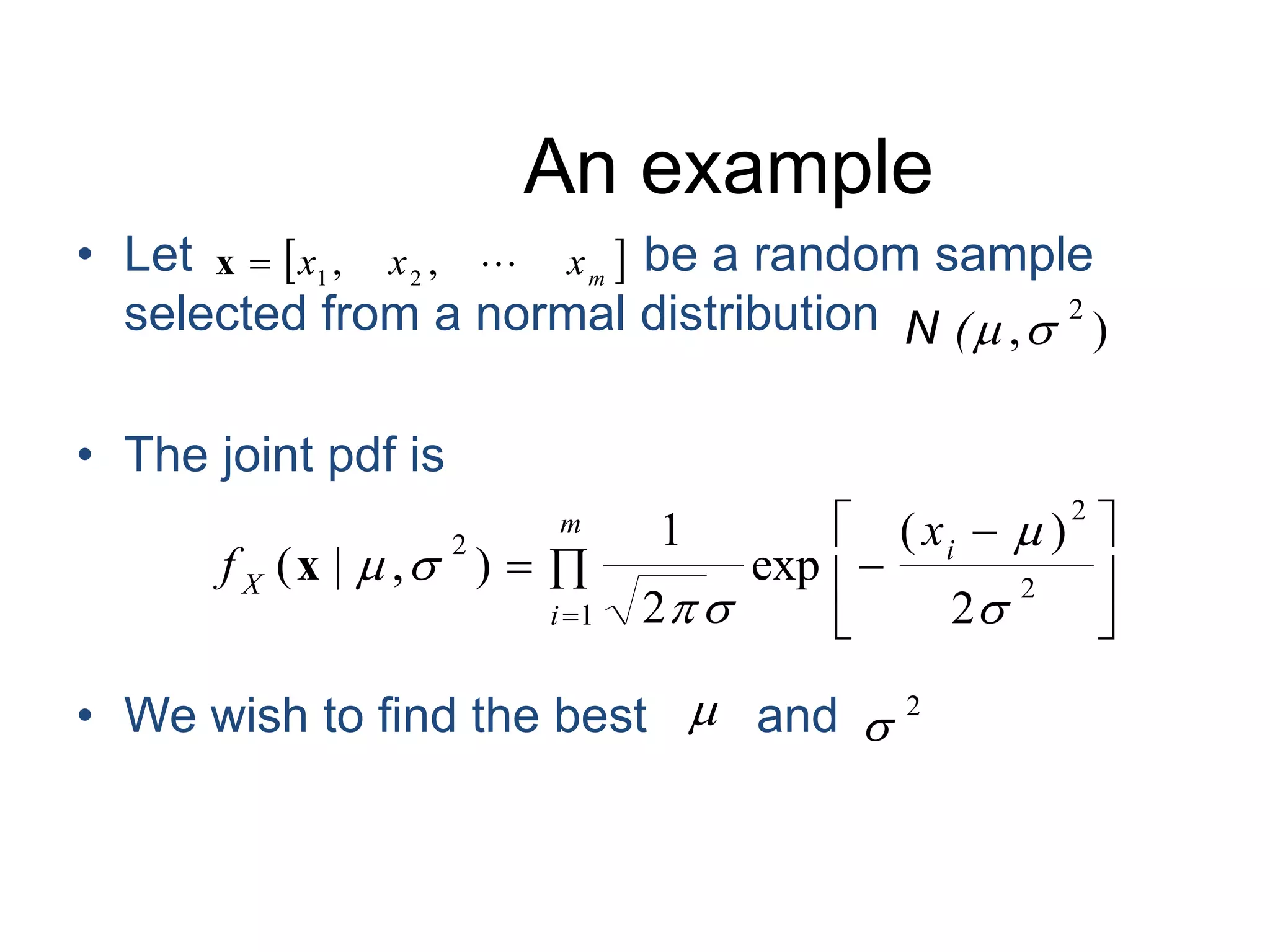

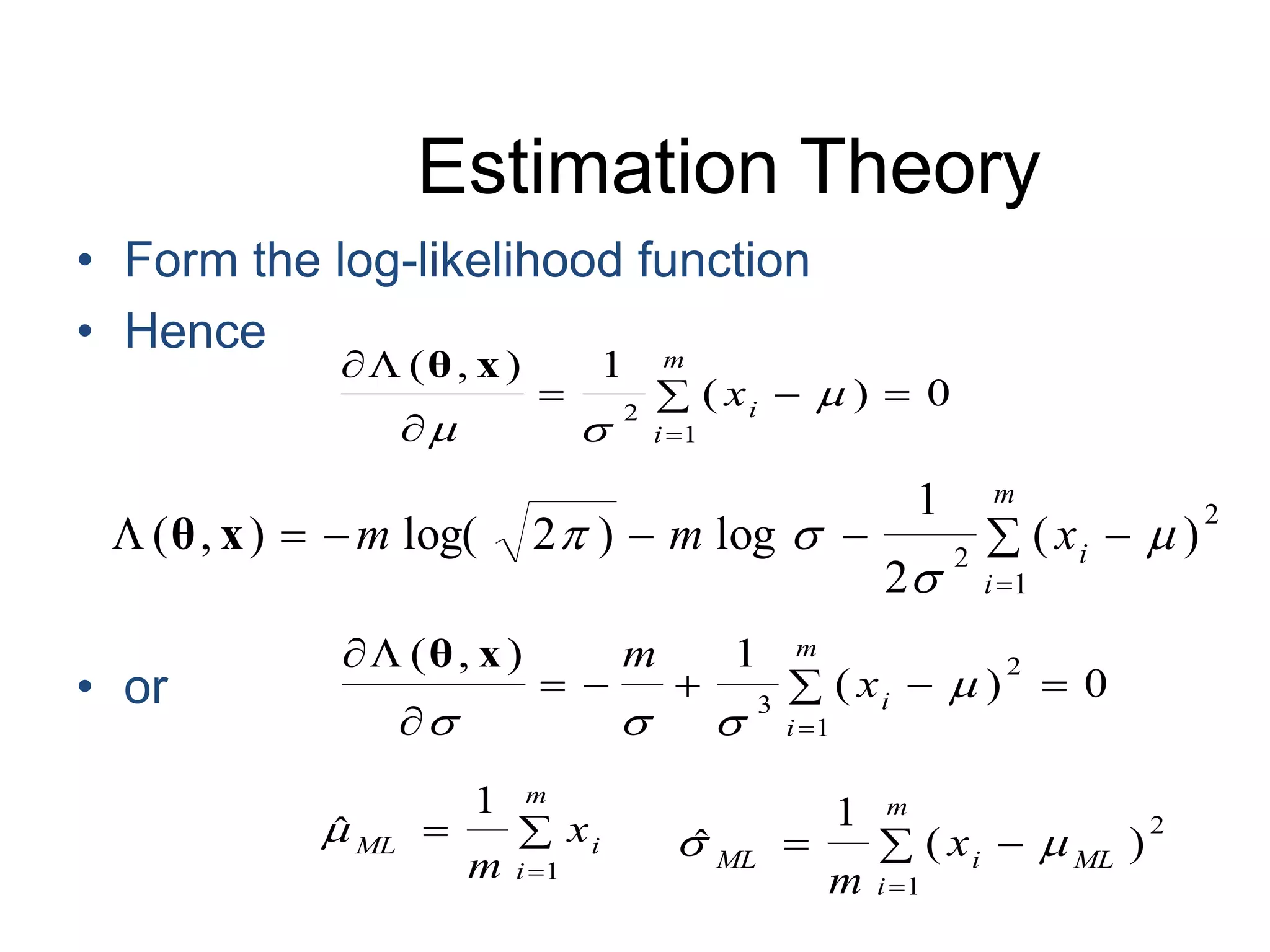

- Estimating unknown parameters from a set of data to obtain the highest probability of the observed data.

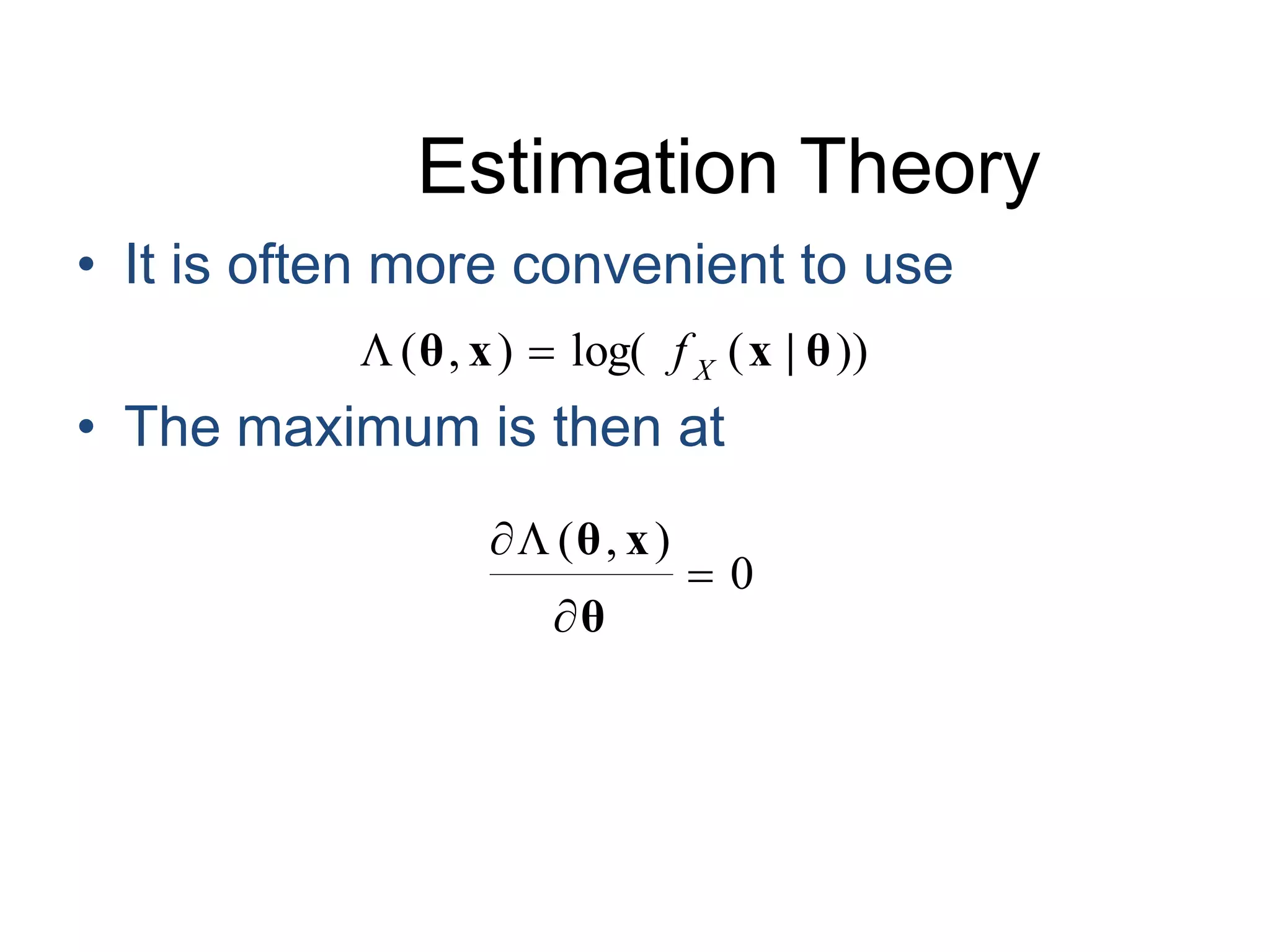

- The maximum likelihood principle estimates parameters such that the probability of obtaining the actual observed sample is maximized.

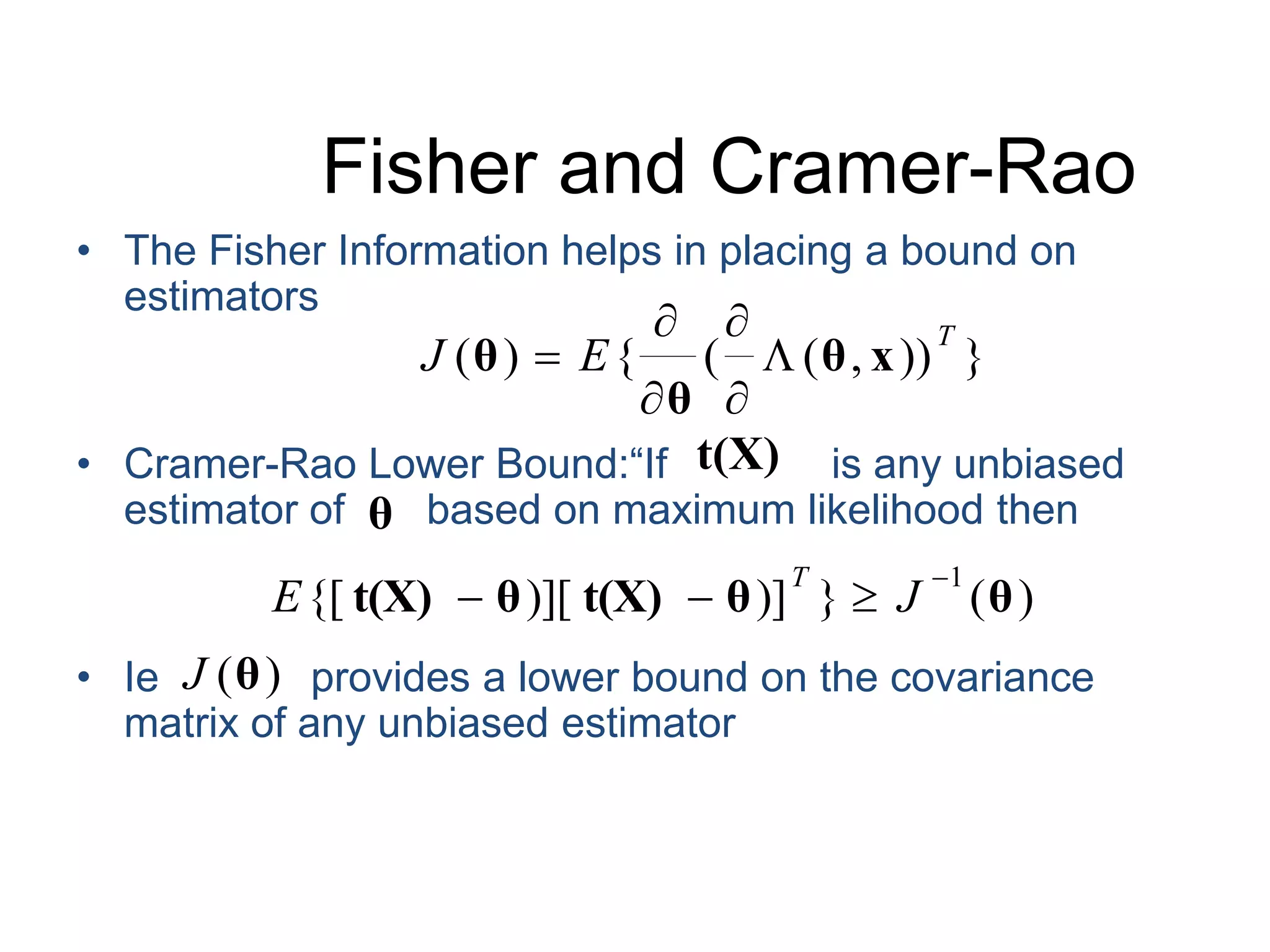

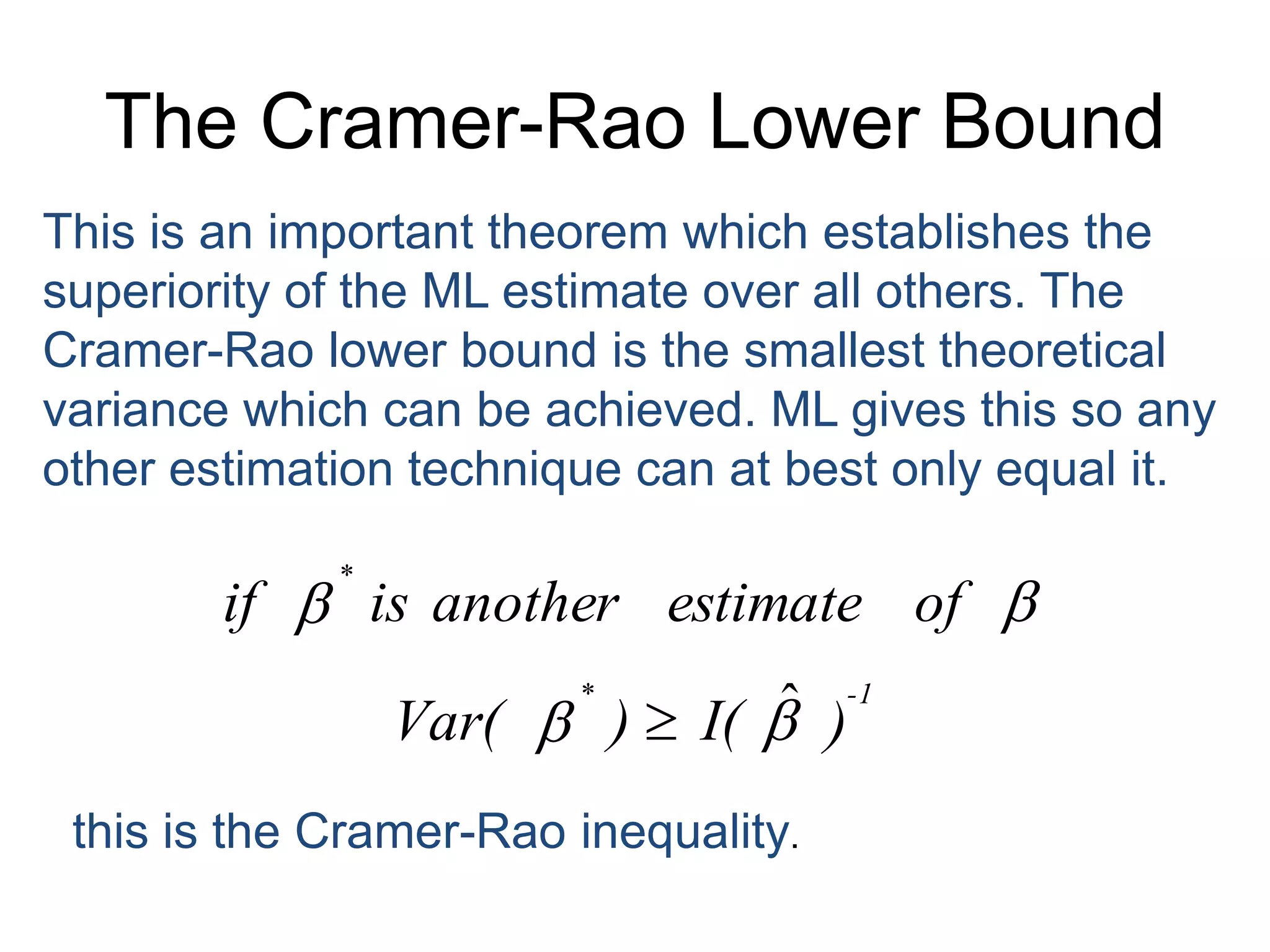

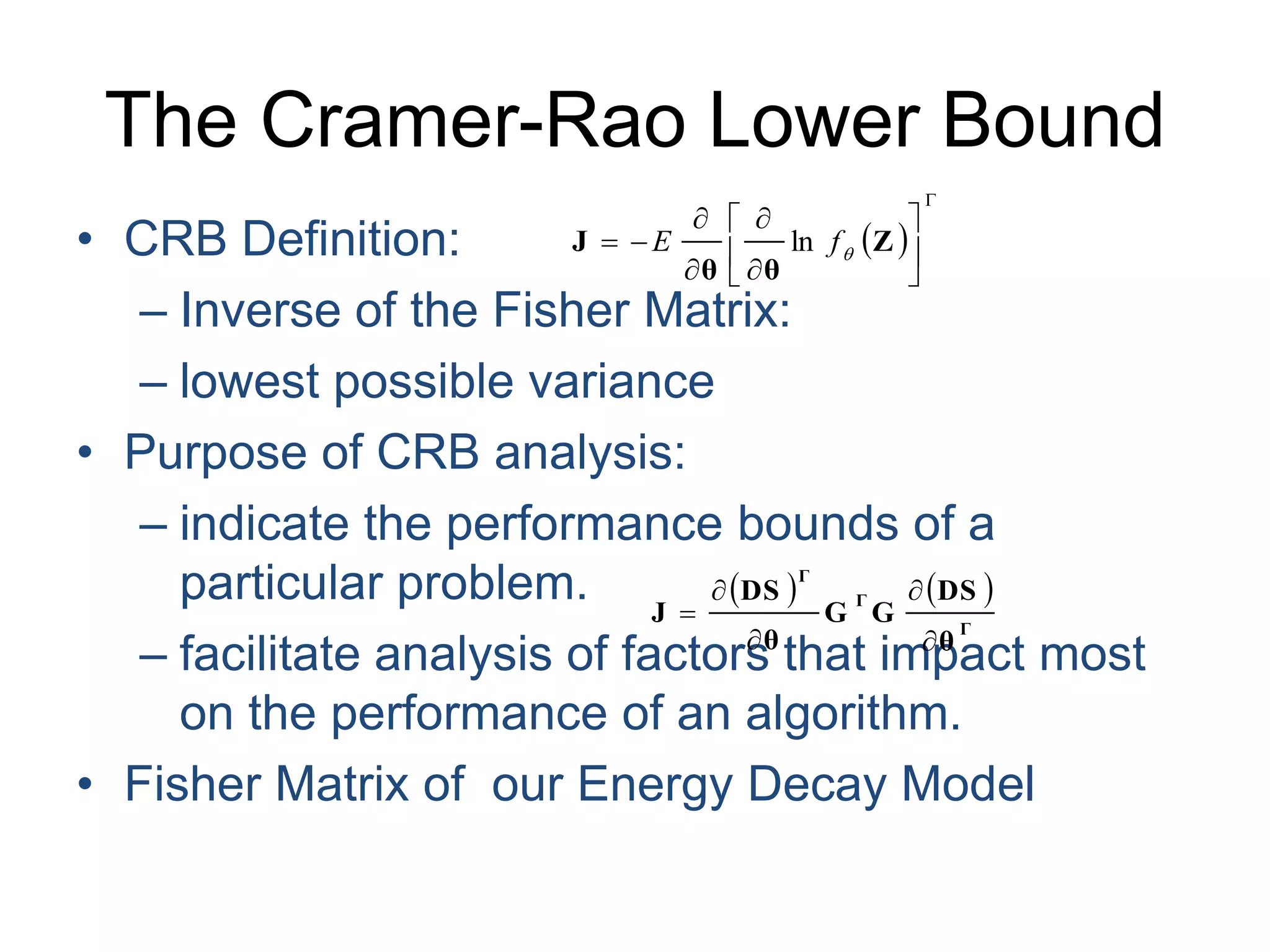

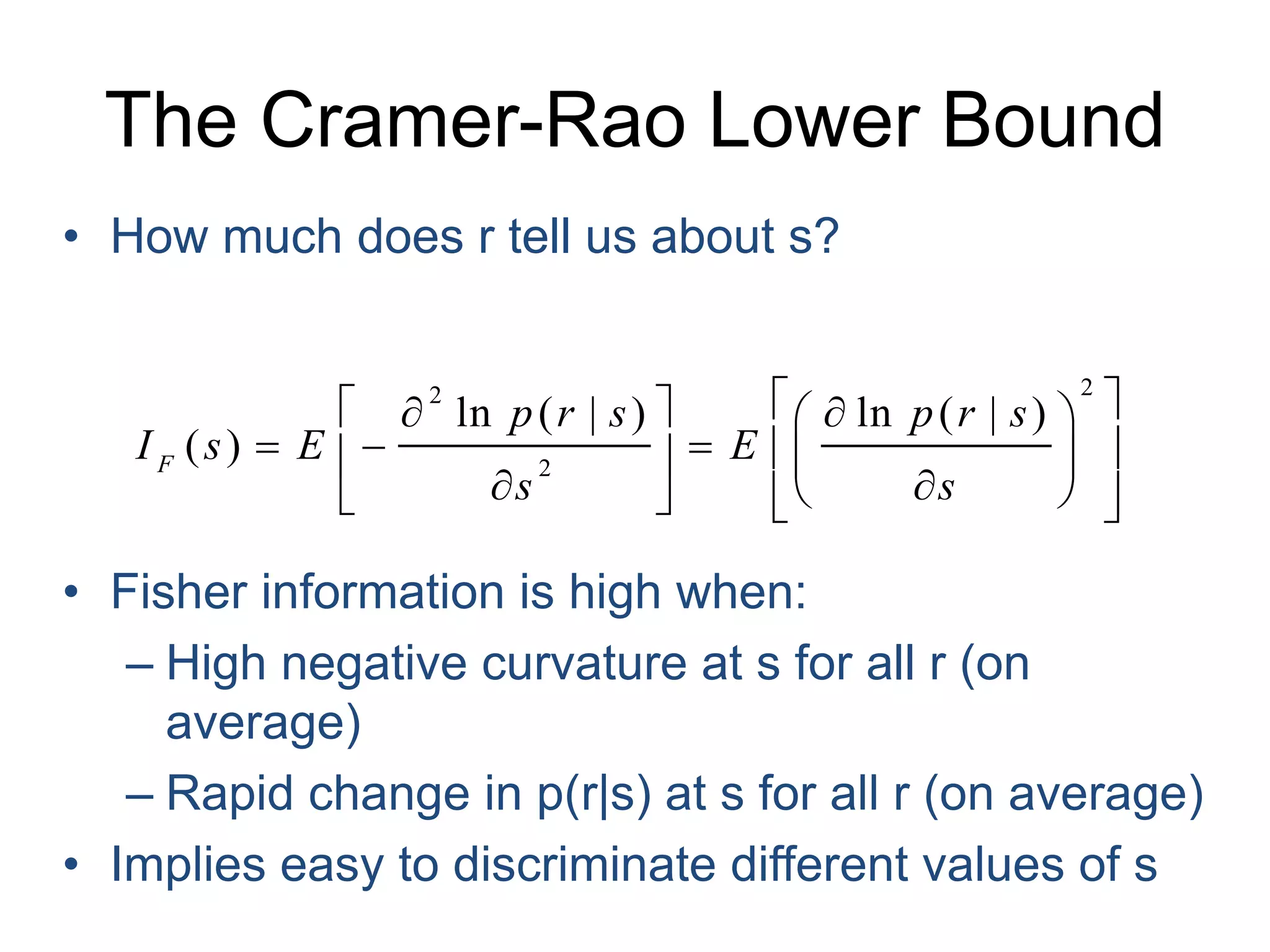

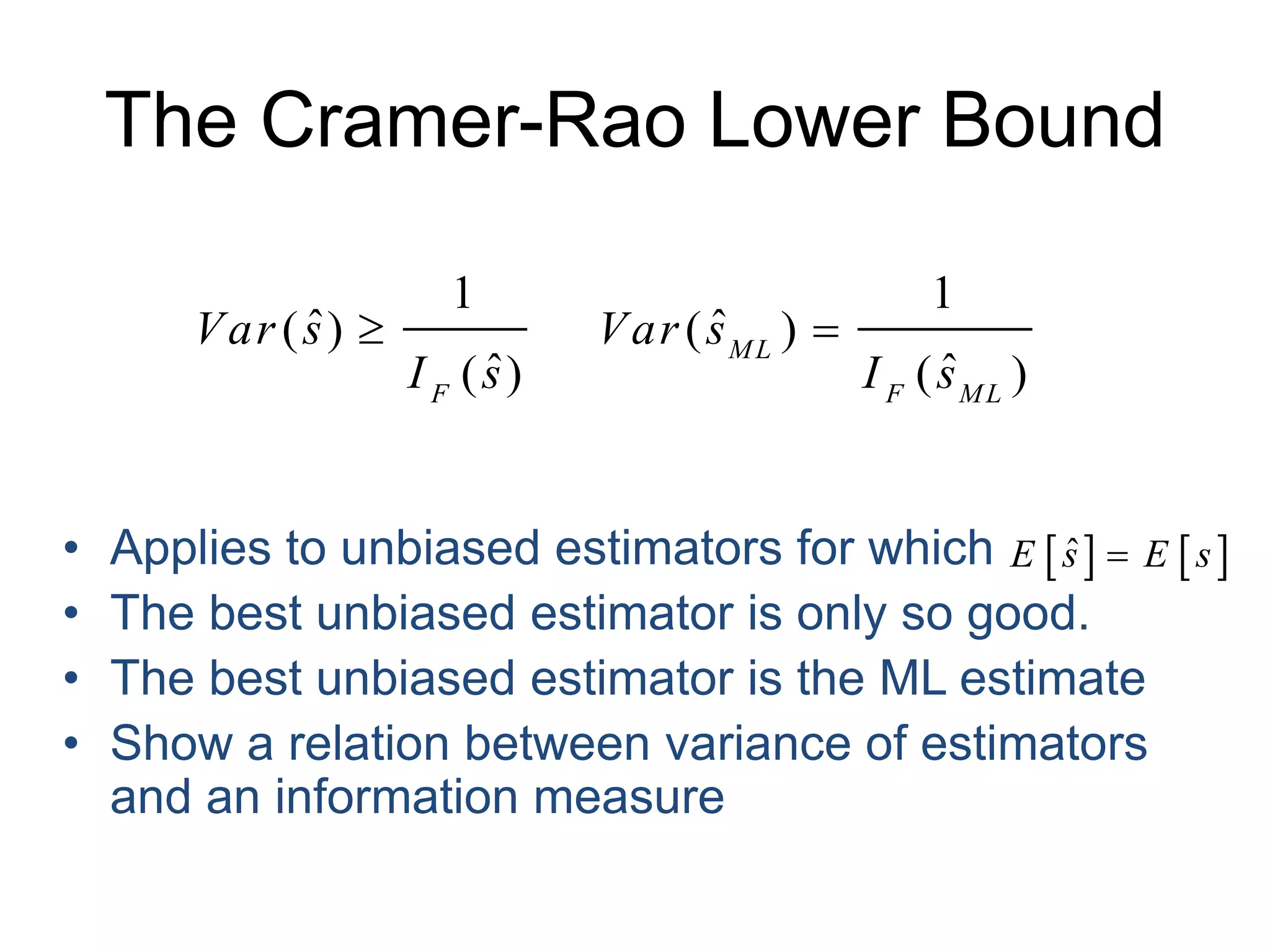

- The Fisher information and Cramer-Rao lower bound place a theoretical lower bound on the variance of unbiased estimators, with the maximum likelihood estimate achieving this lower bound.