The document provides guidance on statistics homework, specifically focusing on regression models and related calculations. It includes examples of linear regression for modeling the relationship between GPA and other factors, as well as residual analysis for assessing model fit. Additionally, it addresses fitting data from a proving ring experiment and comparing linear and quadratic models for better fit evaluation.

![Problems from John A. Rice, Third Edition. [Chapter.Section.Problem]

1. 14.9.2. See Rscript/html Problem_14_9_2.r

2. 14.9.4.

To model freshman GPA as depending linearly on high school GPA, a standard linear regression model is:

$$Y_i = \beta_0 + \beta_1 x_i + e_i, \quad i = 1, 2, \ldots, n.$$

Suppose that different intercepts are allowed for females and males, and write the model as

$$Y_i = I_F(i)\,\beta_F + I_M(i)\,\beta_M + \beta_1 x_i + e_i, \quad i = 1, 2, \ldots, n,$$

where $I_F(i)$ and $I_M(i)$ are indicator variables taking on values of 0 and 1 according to whether the gender of the $i$th person is female or male.

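The design matrix for such a model will be

$$X = \begin{bmatrix} I_F(1) & I_M(1) & x_1 \\ I_F(2) & I_M(2) & x_2 \\ \vdots & \vdots & \vdots \\ I_F(n) & I_M(n) & x_n \end{bmatrix}$$

The regression model is set up as

$$Y = X\beta + e, \quad \beta = (\beta_F, \beta_M, \beta_1)^T.$$

Note that

$$X^T X = \begin{bmatrix} n_F & 0 & \sum_{\text{Female}} x_i \\ 0 & n_M & \sum_{\text{Male}} x_i \\ \sum_{\text{Female}} x_i & \sum_{\text{Male}} x_i & \sum_{i=1}^{n} x_i^2 \end{bmatrix}$$

where $n_F$ and $n_M$ are the numbers of females and males, respectively.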
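The structure of the cross-product matrix for the two-intercept model can be checked numerically. The following is a minimal sketch in R; the gender labels and GPA values are made-up toy data (they are not from the homework), and the variable names `gender`, `I_F`, `I_M`, and `expected` are illustrative only.

```r
# Hypothetical toy data, invented for illustration only
gender <- c("F", "M", "F", "M", "F")
x <- c(3.2, 2.8, 3.9, 3.5, 3.0)   # high school GPA

# Indicator columns: 1 if the i-th person is female (resp. male), else 0
I_F <- as.numeric(gender == "F")
I_M <- as.numeric(gender == "M")

# Design matrix with columns I_F, I_M, x (no separate intercept column)
X <- cbind(I_F, I_M, x)

# Cross-product matrix X^T X
XtX <- crossprod(X)
print(XtX)

# Expected structure: group counts on the diagonal, a zero off-diagonal
# between the two indicators, and group-wise sums of x in the last row/column
n_F <- sum(I_F)
n_M <- sum(I_M)
expected <- rbind(
  c(n_F, 0,   sum(x[gender == "F"])),
  c(0,   n_M, sum(x[gender == "M"])),
  c(sum(x[gender == "F"]), sum(x[gender == "M"]), sum(x^2))
)
print(all.equal(unname(XtX), expected))
```

The zero in the (1, 2) position arises because no observation is both female and male, so the two indicator columns are orthogonal. In practice a formula such as `y ~ 0 + factor(gender) + x` should lead `lm()` to build an equivalent design matrix.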

Statistics Homework Helper](https://image.slidesharecdn.com/statisticshomeworkhelper-211023113539/85/statistics-assignment-help-2-320.jpg)

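![3. 14.9.6. The 4 weighings correspond to 4 outcomes of the dependent variable $y$.

For the regression parameter vector

$$\beta = \begin{bmatrix} w_1 \\ w_2 \end{bmatrix}$$

the design matrix is

$$X = \begin{bmatrix} 1 & 0 \\ 0 & 1 \\ 1 & -1 \\ 1 & 1 \end{bmatrix}$$

The regression model is

$$Y = X\beta + e$$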

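(b). The least squares estimates of $w_1$ and $w_2$ are given by

$$\hat\beta = \begin{bmatrix} \hat w_1 \\ \hat w_2 \end{bmatrix} = (X^T X)^{-1} X^T Y$$

Note that

$$(X^T X) = \begin{bmatrix} 3 & 0 \\ 0 & 3 \end{bmatrix} \quad \text{so} \quad (X^T X)^{-1} = \begin{bmatrix} 1/3 & 0 \\ 0 & 1/3 \end{bmatrix}$$

and

$$(X^T Y) = \begin{bmatrix} 11 \\ 9 \end{bmatrix},$$

so

$$\hat\beta = \begin{bmatrix} 1/3 & 0 \\ 0 & 1/3 \end{bmatrix} \times \begin{bmatrix} 11 \\ 9 \end{bmatrix} = \begin{bmatrix} 11/3 \\ 3 \end{bmatrix}$$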
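The normal-equations calculation in part (b) can be reproduced in a few lines of R. This is a sketch under two assumptions: the design matrix rows are ordered as object 1 alone, object 2 alone, difference, sum, and the measurement vector is $y = (3, 3, 1, 7)$, taken from the textbook problem statement and consistent with $X^T Y = (11, 9)$ above.

```r
# Design matrix for the four weighings (assumed row order):
# w1 alone, w2 alone, w1 - w2, w1 + w2
X <- rbind(c(1,  0),
           c(0,  1),
           c(1, -1),
           c(1,  1))

# Assumed measurements from the textbook problem statement
y <- c(3, 3, 1, 7)

XtX <- crossprod(X)      # X^T X
Xty <- crossprod(X, y)   # X^T y

# Solve the normal equations (X^T X) beta = X^T y
beta_hat <- solve(XtX, Xty)

print(XtX)
print(Xty)
print(beta_hat)
```

Because the two columns of $X$ are orthogonal, $X^T X$ is diagonal and each estimate is simply the corresponding entry of $X^T Y$ divided by 3.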

(c). The estimate of $\sigma^2$ is given by the sum of squared residuals divided by $n - 2$, where 2 is the number of columns of $X$, which equals the number of regression parameters estimated.

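The vector of least squares residuals is:

$$\hat e = \begin{bmatrix} y_1 - \hat y_1 \\ y_2 - \hat y_2 \\ y_3 - \hat y_3 \\ y_4 - \hat y_4 \end{bmatrix} = \begin{bmatrix} 3 - 11/3 \\ 3 - 3 \\ 1 - 2/3 \\ 7 - 20/3 \end{bmatrix} = \begin{bmatrix} -2/3 \\ 0 \\ 1/3 \\ 1/3 \end{bmatrix}$$

From this we can compute

$$\hat\sigma^2 = \frac{1}{n - 2} \sum_{i=1}^{4} \hat e_i^2 = \frac{1}{2}\left(\frac{4}{9} + 0 + \frac{1}{9} + \frac{1}{9}\right) = \frac{1}{3}.$$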
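The residual vector and the variance estimate in part (c) can be cross-checked with `lm()`. This is a sketch that again assumes the measurements $y = (3, 3, 1, 7)$ and the row order object 1 alone, object 2 alone, difference, sum. The names `fit`, `res`, `rss`, and `sigma2_hat` are illustrative.

```r
# Design matrix and assumed measurements for the weighing problem
X <- rbind(c(1,  0),
           c(0,  1),
           c(1, -1),
           c(1,  1))
y <- c(3, 3, 1, 7)

# Fit without an intercept, since X already contains all model columns
fit <- lm(y ~ 0 + X)

# Least squares residuals y - X %*% beta_hat
res <- unname(residuals(fit))

# Residual sum of squares and the variance estimate RSS / (n - p)
rss <- sum(res^2)
sigma2_hat <- rss / (nrow(X) - ncol(X))

print(res)
print(sigma2_hat)

# Cross-check against the residual standard error reported by summary()
print(all.equal(sigma2_hat, summary(fit)$sigma^2))
```

Dividing by $n - 2$ rather than $n$ accounts for the two parameters estimated, which is the same degrees-of-freedom convention `summary()` uses for the residual standard error.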

Statistics Homework Helper](https://image.slidesharecdn.com/statisticshomeworkhelper-211023113539/85/statistics-assignment-help-3-320.jpg)

![Problem_14_9_2.r

Peter

Thu Apr 30 21:21:38 2015

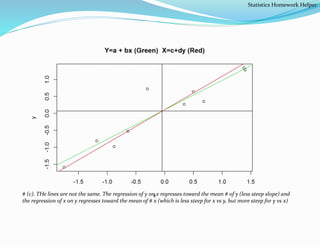

    #Problem_14_9_2.r
    x=c(.34,1.38,-.64,.68,1.40,-.88,-.30, -1.18, .50, -1.75)
    y=c(.27,1.34,-.53,.35,1.28,-.98,0.72,-.81,.64,-1.59)
    # (a) Fit line y=a + bx using lm() in r
    plot(x,y)
    lmfit1<-lm(y~x)
    summary(lmfit1)

    ## 
    ## Call:
    ## lm(formula = y ~ x)
    ## 
    ## Residuals:
    ##      Min       1Q   Median       3Q      Max 
    ## -0.34954 -0.16556 -0.06363  0.08067  0.87278 
    ## 
    ## Coefficients:
    ##             Estimate Std. Error t value Pr(>|t|)    
    ## (Intercept)   0.1081     0.1156   0.935    0.377    
    ## x             0.8697     0.1133   7.677 5.87e-05 ***
    ## ---
    ## Signif. codes:  0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1
    ## 
    ## Residual standard error: 0.3654 on 8 degrees of freedom
    ## Multiple R-squared:  0.8805, Adjusted R-squared:  0.8655 
    ## F-statistic: 58.94 on 1 and 8 DF,  p-value: 5.867e-05

    abline(lmfit1,col='green')
    lmfit1$coefficients

    ## (Intercept)           x 
    ##   0.1081372   0.8697151

    # (b) Fit line x=c+dy using lm() in r
    lmfit2<-lm(x~y)
    summary(lmfit2)

    ## 
    ## Call:
    ## lm(formula = x ~ y)
    ## 
    ## Residuals:
    ##      Min       1Q   Median       3Q      Max 
    ## -0.91406 -0.03117  0.07484  0.20963  0.44052 
    ## 
    ## Coefficients:
    ##             Estimate Std. Error t value Pr(>|t|)    
    ## (Intercept)  -0.1149     0.1250  -0.919    0.385    
    ## y             1.0124     0.1319   7.677 5.87e-05 ***
    ## ---
    ## Signif. codes:  0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1
    ## 
    ## Residual standard error: 0.3942 on 8 degrees of freedom
    ## Multiple R-squared:  0.8805, Adjusted R-squared:  0.8655 
    ## F-statistic: 58.94 on 1 and 8 DF,  p-value: 5.867e-05

    lmfit2$coefficients

    ## (Intercept)           y 
    ##  -0.1148545   1.0123846

    # For x = b1 + b2y
    # we get the y vs x line as
    # y=-(b1/b2) + (1/b2)x
    abline(a=-lmfit2$coefficients[1]/lmfit2$coefficients[2], b=(1/lmfit2$coefficients[2]), col="red")
    title(main="Y=a + bx (Green) X=c+dy (Red)")
    abline(h=mean(y)); abline(v=mean(x)) # Plot horizontal/vertical lines at y/x means
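The two fitted lines are related in a way that the script does not spell out: the product of the two slopes equals the squared correlation, and both lines pass through the point of means. The sketch below checks this on the same data. The names `b`, `d`, `a0`, `c0`, and `r2` are illustrative and do not appear in the original script.

```r
# Same data as in Problem_14_9_2.r
x <- c(.34, 1.38, -.64, .68, 1.40, -.88, -.30, -1.18, .50, -1.75)
y <- c(.27, 1.34, -.53, .35, 1.28, -.98, 0.72, -.81, .64, -1.59)

fit_yx <- lm(y ~ x)   # y = a0 + b x
fit_xy <- lm(x ~ y)   # x = c0 + d y

a0 <- unname(coef(fit_yx)[1]); b <- unname(coef(fit_yx)[2])
c0 <- unname(coef(fit_xy)[1]); d <- unname(coef(fit_xy)[2])

# Product of the two slopes versus the squared sample correlation
r2 <- cor(x, y)^2
print(c(slope_product = b * d, r_squared = r2))

# Both fitted lines pass through (mean(x), mean(y))
print(all.equal(a0 + b * mean(x), mean(y)))
print(all.equal(c0 + d * mean(y), mean(x)))
```

This is why both summaries report the same Multiple R-squared (0.8805) and the same t value for the slope, and why the green and red lines in the plot cross where the horizontal and vertical mean lines intersect.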

Statistics Homework Helper](https://image.slidesharecdn.com/statisticshomeworkhelper-211023113539/85/statistics-assignment-help-5-320.jpg)