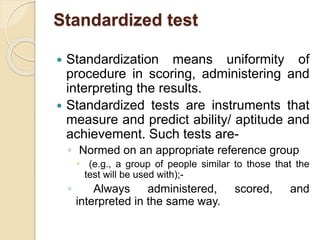

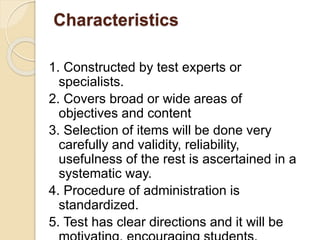

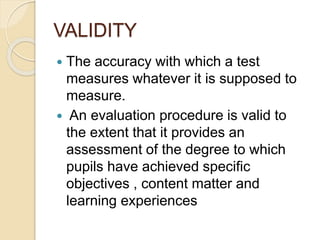

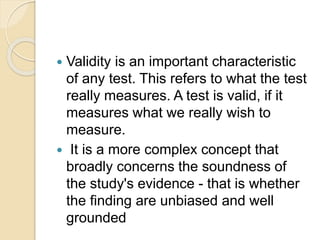

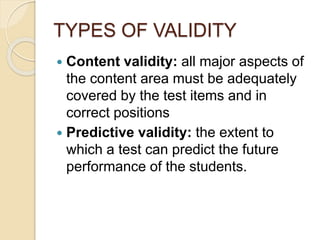

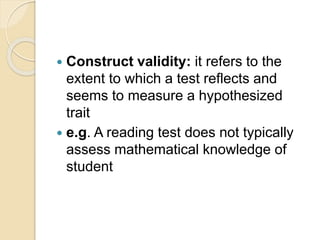

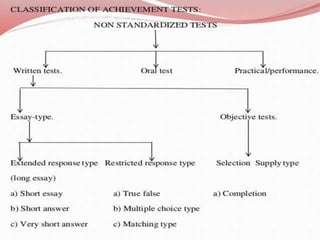

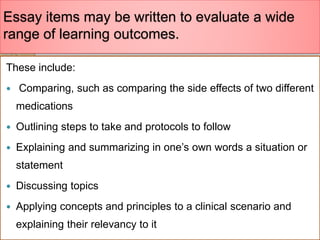

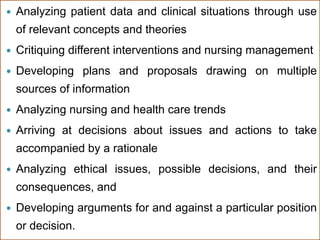

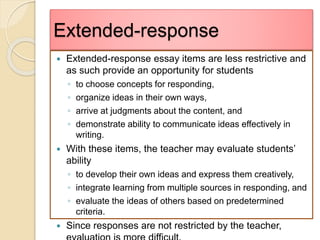

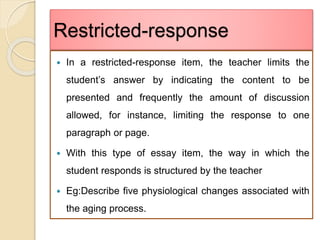

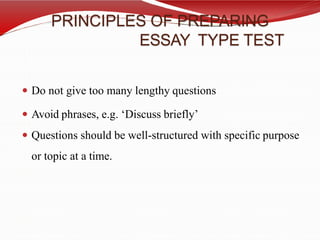

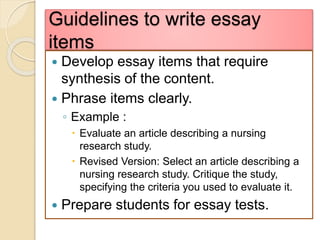

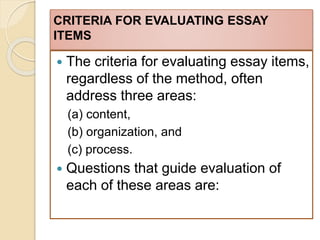

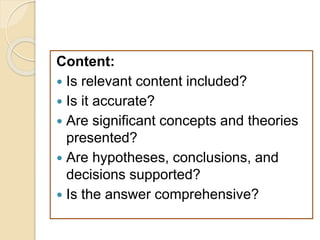

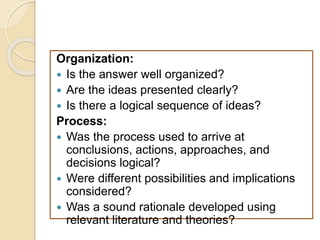

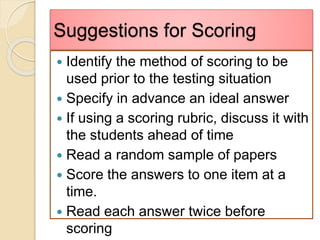

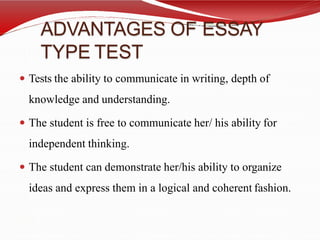

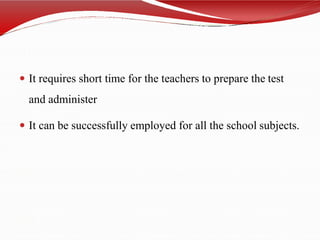

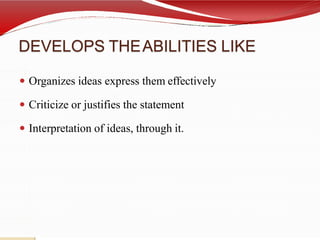

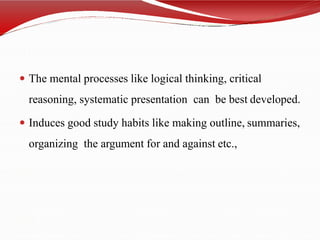

Standardized and non-standardized tests are both used to assess students. [1] Standardized tests are administered under uniform conditions, with fixed procedures for scoring and interpretation; non-standardized tests lack such uniform procedures. [2] To measure accurately and yield dependable results, a test must be valid, reliable, and usable. [3] The different types of tests include essay tests, which give students freedom of response and can evaluate complex learning outcomes.