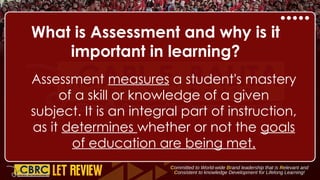

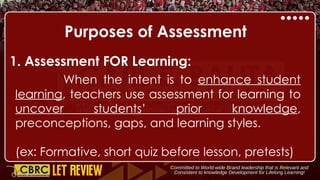

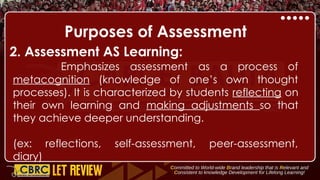

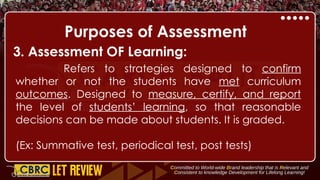

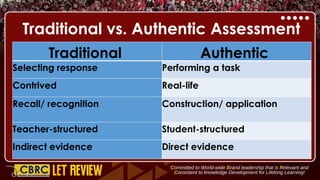

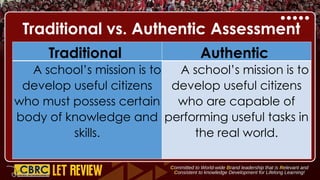

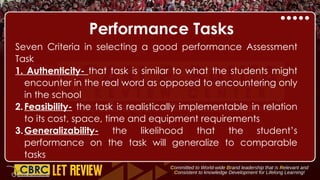

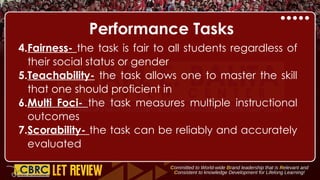

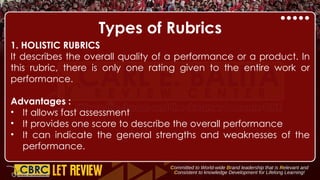

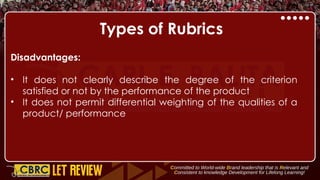

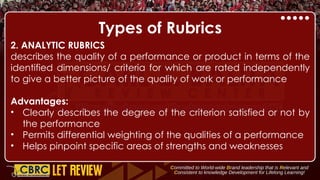

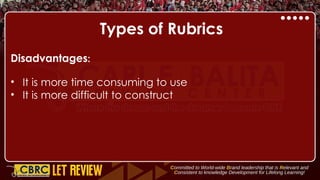

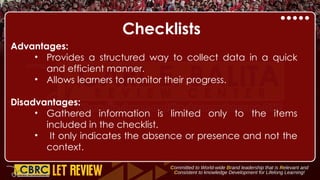

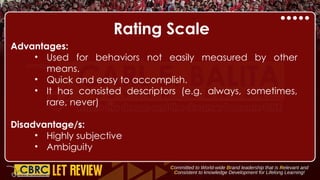

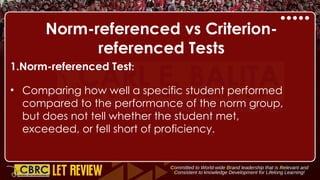

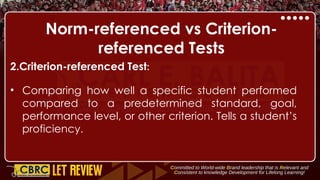

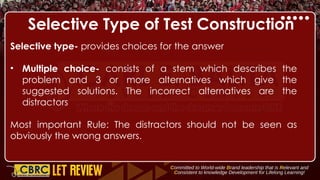

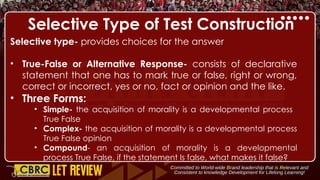

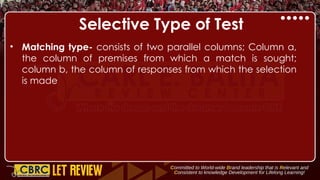

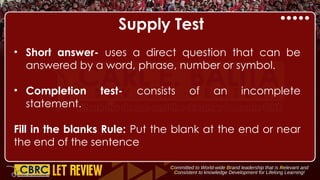

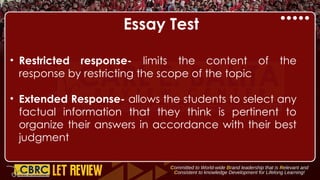

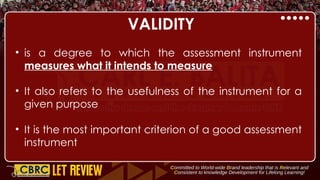

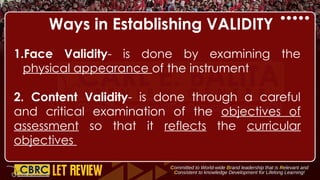

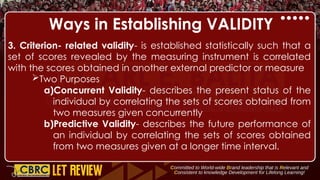

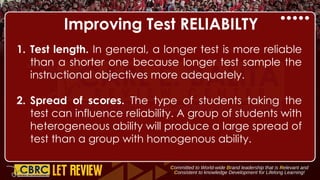

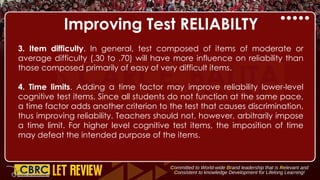

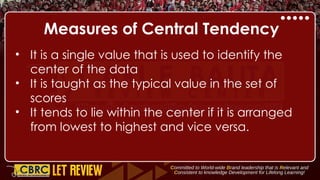

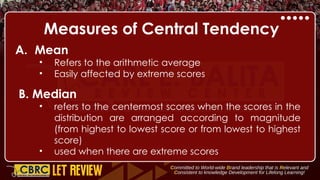

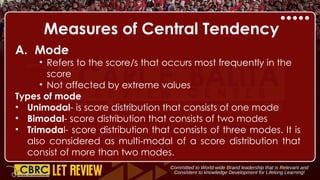

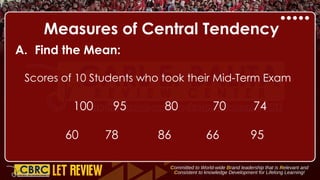

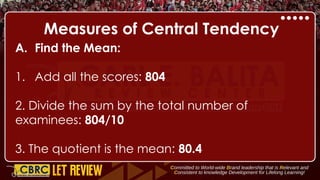

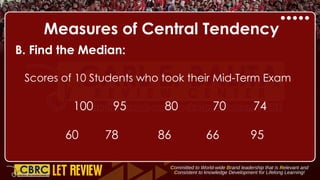

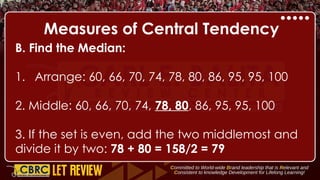

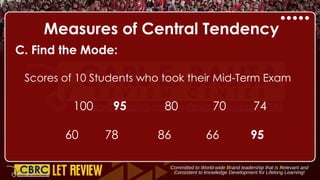

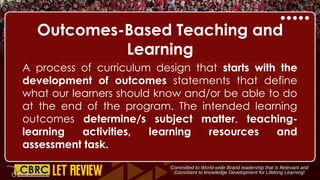

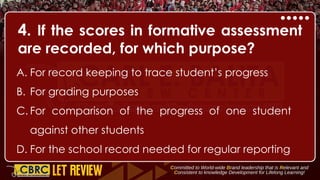

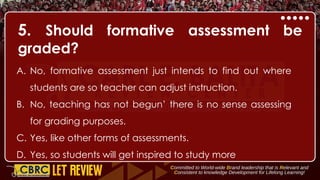

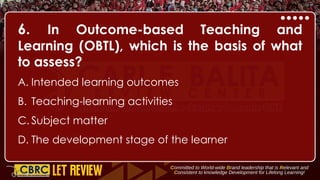

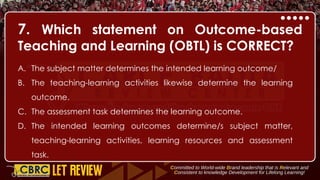

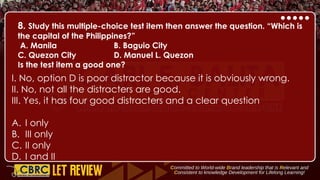

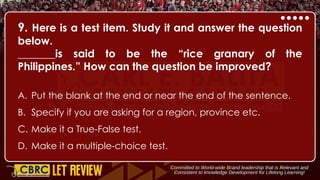

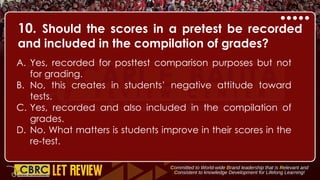

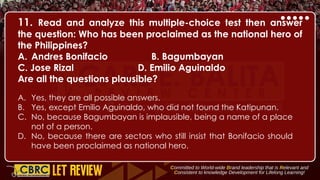

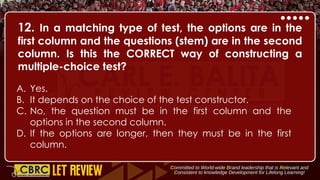

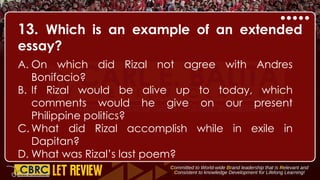

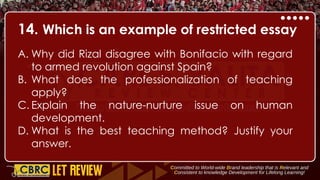

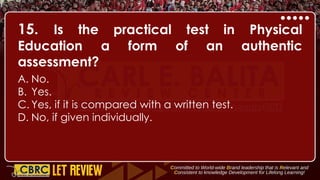

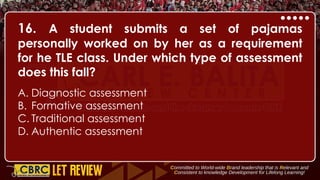

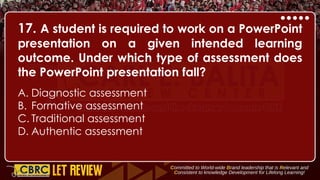

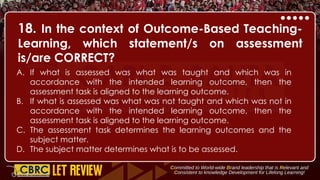

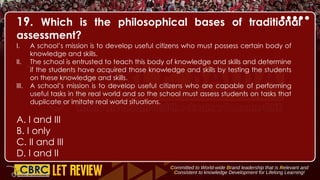

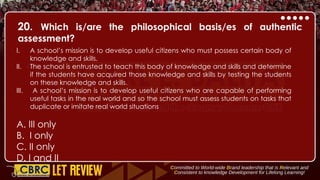

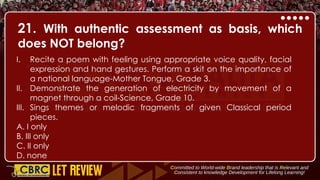

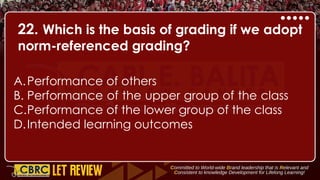

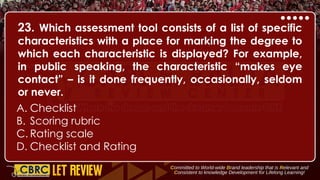

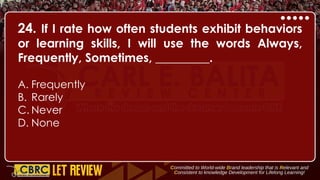

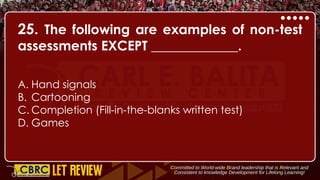

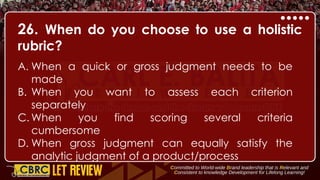

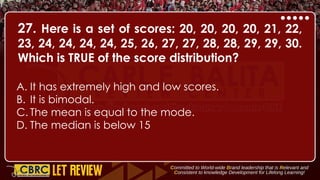

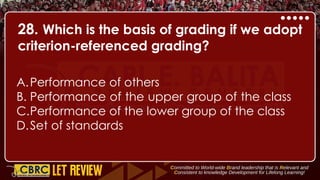

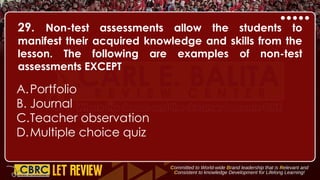

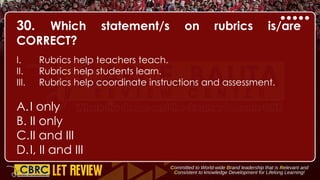

The document outlines the role of assessment in education and distinguishes three purposes: assessment for learning, assessment as learning, and assessment of learning. It compares traditional and authentic assessment, sets out criteria for performance tasks, describes types of rubrics, and covers methods for ensuring the validity and reliability of assessments, with practical examples of various tests and assessments. Throughout, it emphasizes aligning assessments with intended learning outcomes in outcomes-based education.