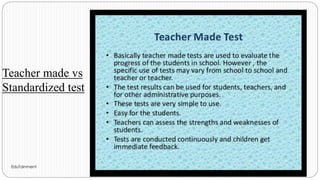

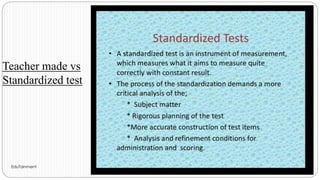

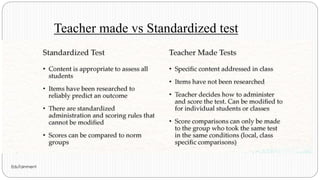

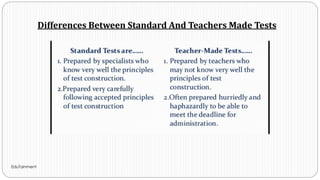

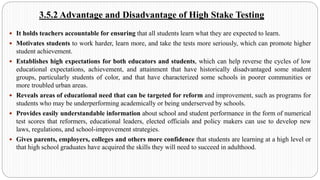

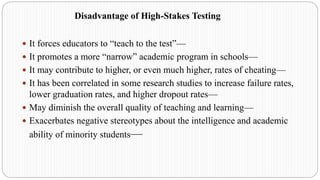

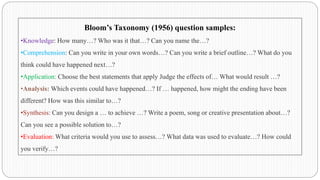

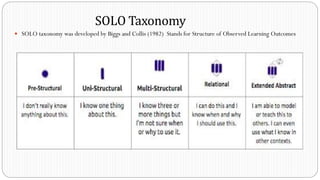

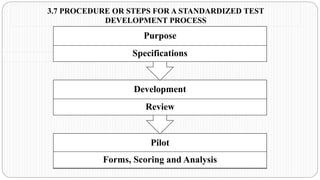

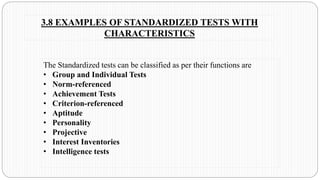

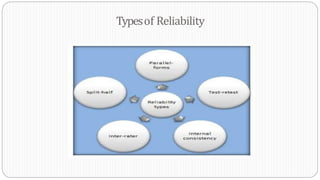

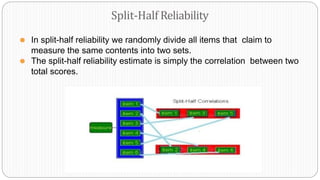

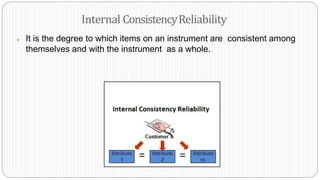

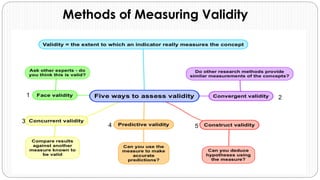

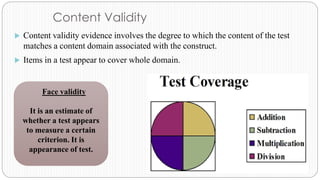

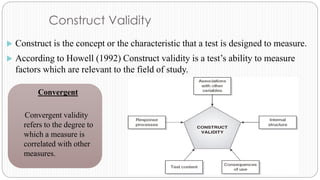

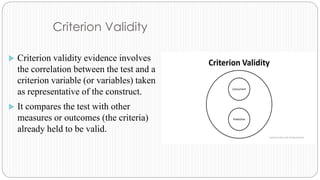

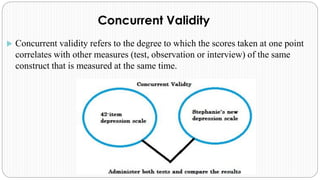

The document outlines a unit on test development and evaluation, focusing on classroom and high-stakes testing. It covers the concept of high-stakes testing along with its advantages and disadvantages, as well as methods for constructing tests using Bloom's and SOLO taxonomies. It also emphasizes the importance of validity and reliability in assessment and describes the process of developing standardized tests.