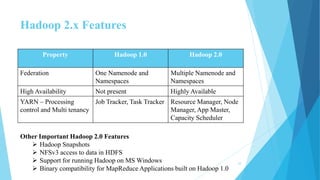

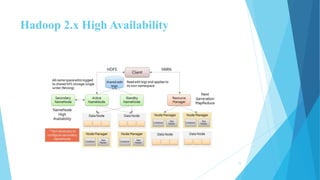

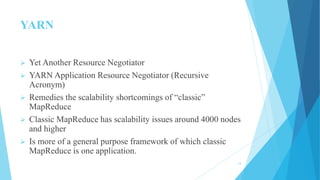

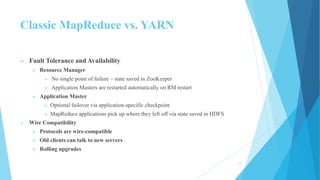

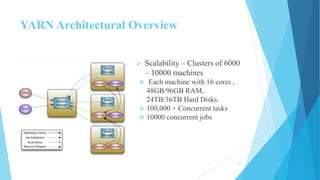

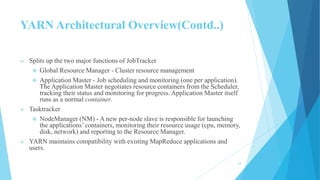

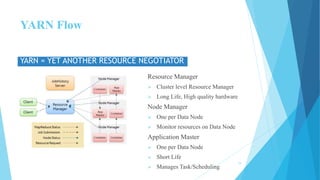

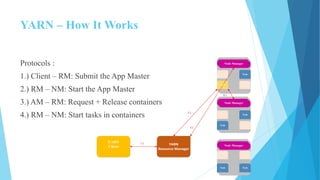

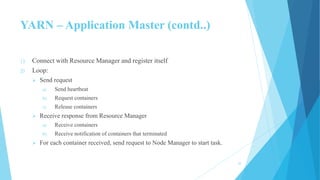

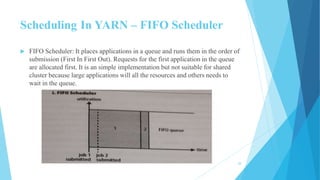

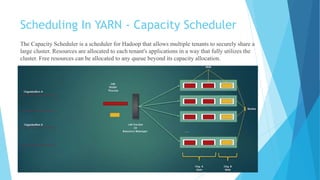

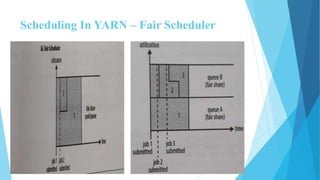

This document provides an overview of the YARN architecture in Hadoop 2.0. It introduces YARN and describes how it addresses the scalability issues of Hadoop 1.x by separating cluster resource management and scheduling from application execution. It explains the key components of YARN: the ResourceManager, the NodeManager, and the ApplicationMaster. It also discusses scheduling in YARN, covering the FIFO, Capacity, and Fair schedulers as well as mechanisms such as delay scheduling and dominant resource fairness.
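
To make dominant resource fairness (DRF) more concrete, here is a minimal Python sketch of the idea: repeatedly give one more task to the user whose dominant share (their largest fractional use of any single resource) is currently lowest. It is not YARN's scheduler code. The function name `drf_allocate`, the dictionary layout, and the rule of dropping a user once their next task no longer fits are all illustrative assumptions, and integer resource amounts are assumed.

```python
from fractions import Fraction


def drf_allocate(capacity, demands):
    """Illustrative DRF sketch (hypothetical helper, not a YARN API).

    capacity: total amount of each resource, e.g. {"cpu": 9, "mem_gb": 18}
    demands:  per-task demand of each user, e.g. {"A": {"cpu": 1, "mem_gb": 4}}
    Returns the number of tasks launched per user.
    """
    used = {r: 0 for r in capacity}
    alloc = {u: {r: 0 for r in capacity} for u in demands}
    tasks = {u: 0 for u in demands}
    active = set(demands)

    def dominant_share(user):
        # Largest fraction of any one resource held by this user.
        return max(Fraction(alloc[user][r], capacity[r]) for r in capacity)

    while active:
        # Pick the active user with the lowest dominant share;
        # sorting first makes tie-breaking deterministic.
        user = min(sorted(active), key=dominant_share)
        need = demands[user]
        if all(used[r] + need[r] <= capacity[r] for r in capacity):
            for r in capacity:
                used[r] += need[r]
                alloc[user][r] += need[r]
            tasks[user] += 1
        else:
            # Simplifying assumption: a user whose next task does not fit
            # is dropped from consideration instead of waiting for releases.
            active.discard(user)
    return tasks


if __name__ == "__main__":
    cluster = {"cpu": 9, "mem_gb": 18}
    per_task = {
        "A": {"cpu": 1, "mem_gb": 4},  # memory-heavy tasks
        "B": {"cpu": 3, "mem_gb": 1},  # CPU-heavy tasks
    }
    print(drf_allocate(cluster, per_task))  # {'A': 3, 'B': 2}
```

In this example both users end with a dominant share of 2/3: user A holds 12 of 18 GB of memory and user B holds 6 of 9 CPUs, which is the equalization DRF aims for.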