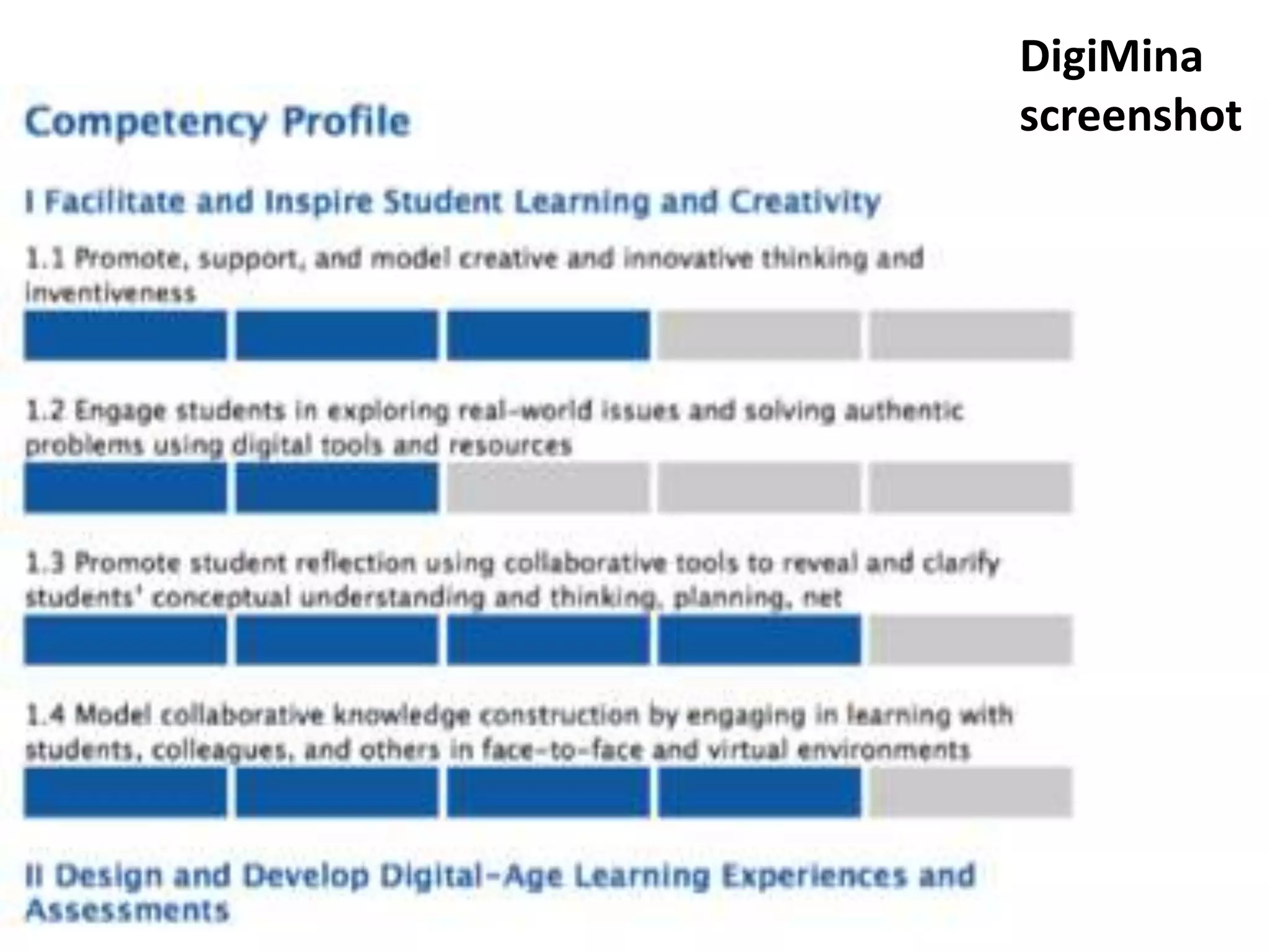

This document summarizes research evaluating an online tool and rubric for assessing Estonian teachers' digital competencies. Through focus groups with teachers and other stakeholders, the researchers found:

1) Some statements in the rubric were difficult for teachers to understand or irrelevant to their work.

2) The workload of self-assessment was too high, reducing motivation.

3) Changing to a simpler 3-point scale and providing examples instead of definitions could increase usability.

4) Scenario-based discussions helped validate the changes needed to make the rubric and tool better reflect teachers' experiences and to increase adoption. The study produced suggestions for simplifying the rubric, along with requirements for a new online assessment tool.