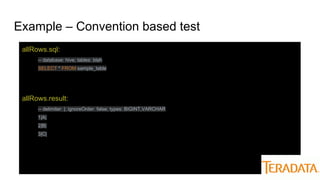

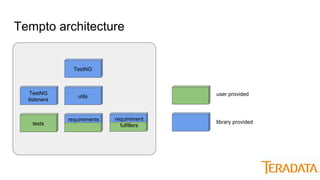

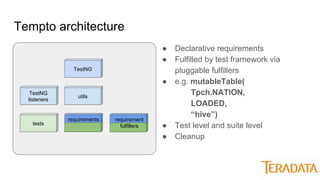

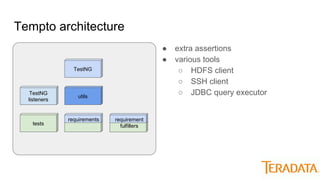

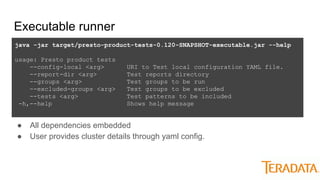

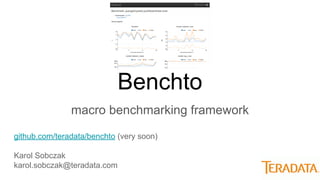

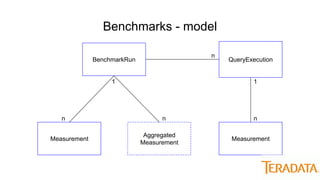

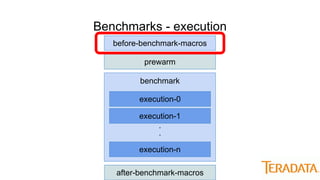

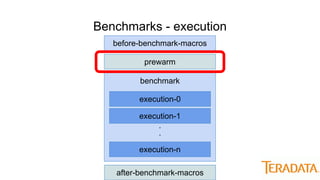

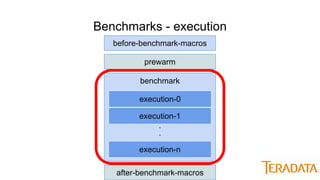

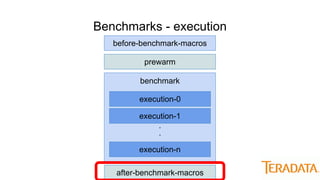

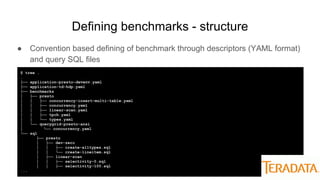

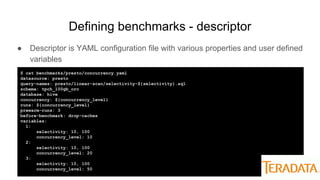

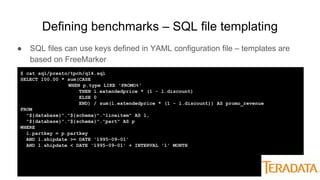

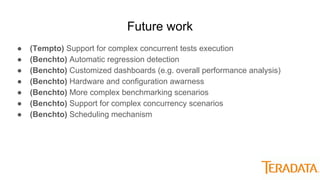

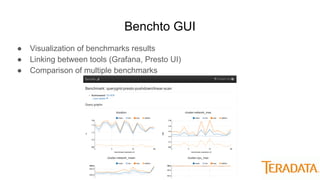

This document discusses two testing tools, Tempto and Benchto, used respectively for product testing and performance testing of database systems such as Presto and Hive. Tempto is an end-to-end testing framework for software engineers that simplifies defining and executing tests. Benchto provides a structured approach to defining and analyzing macro benchmarks in clustered environments. Future work includes better support for complex tests, regression detection, and improved visualization with tools such as Grafana.