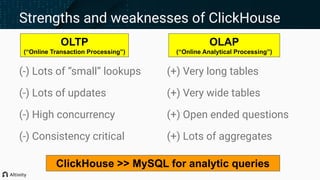

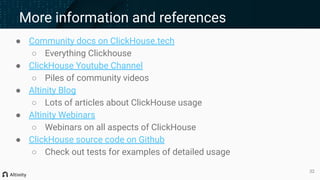

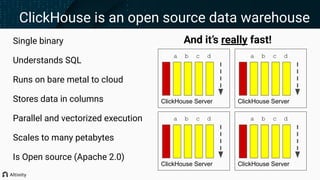

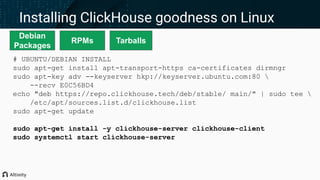

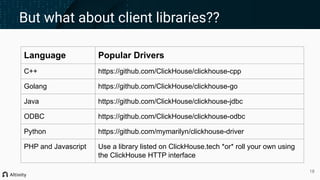

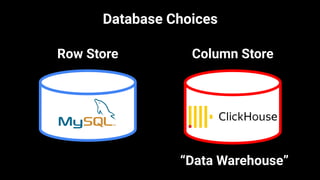

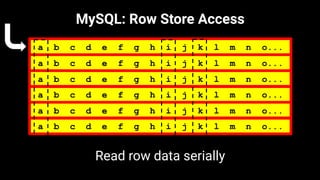

This document provides an overview of ClickHouse, an open-source, column-oriented data warehouse. It covers installing and running ClickHouse on Linux and in Docker, designing tables, loading and querying data, the available client libraries, performance-tuning techniques such as materialized views and compression, and ClickHouse's strengths and weaknesses for different use cases. It closes with a list of resources for further information.

![ClickHouse performance tuning is different](https://image.slidesharecdn.com/yourfirstdatawarehouse-2020-12-02-201204014106/85/Your-first-ClickHouse-data-warehouse-29-320.jpg)

ClickHouse performance tuning is different...

The bad news…

- No query optimizer
- No EXPLAIN PLAN
- You may need to move [a lot of] data for performance

The good news…

- No query optimizer!
- The system log is great
- So are the system tables
- Performance drivers are simple: I/O and CPU
- Constantly improving