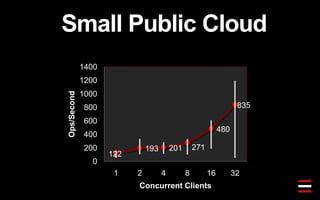

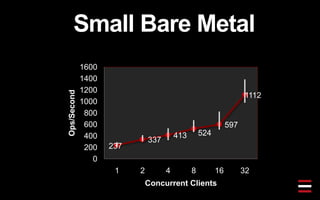

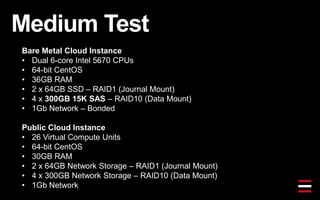

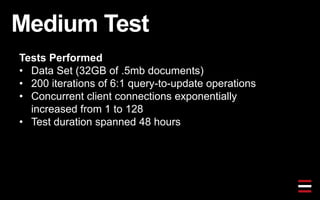

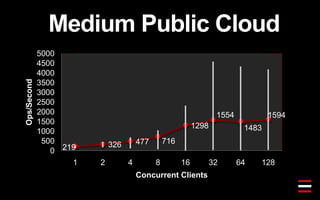

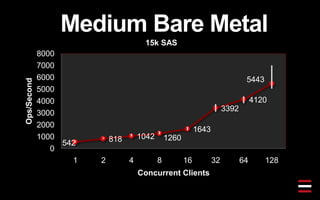

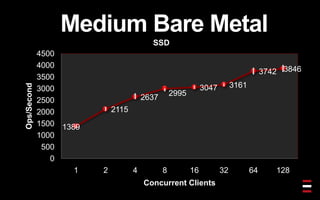

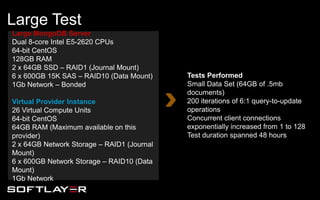

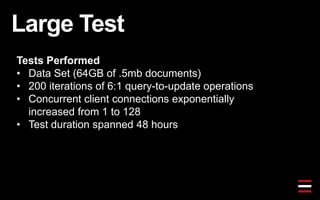

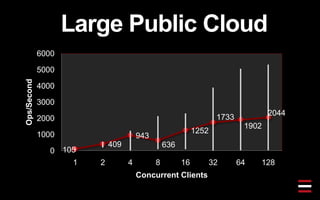

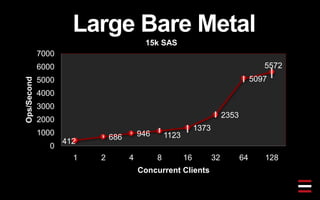

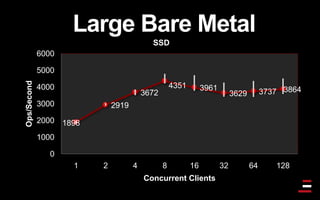

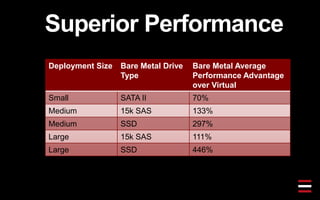

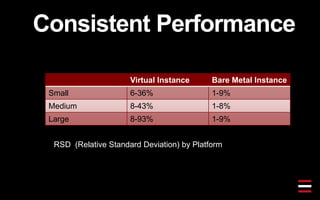

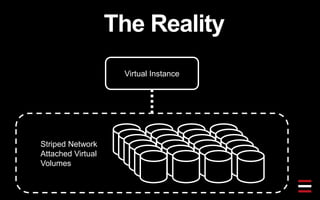

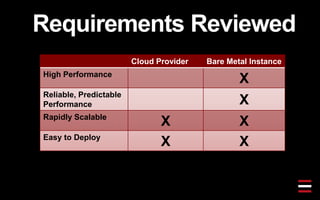

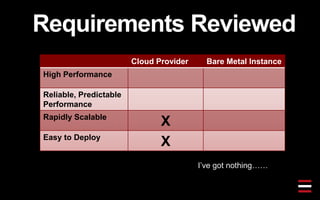

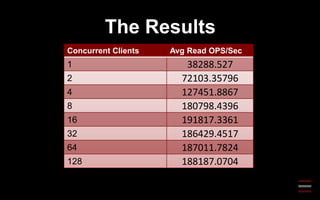

This document summarizes the results of performance testing MongoDB deployments on bare metal cloud instances compared to public cloud instances. Small, medium, and large tests were conducted using different hardware configurations and data set sizes. The bare metal cloud instances consistently outperformed the public cloud instances, achieving higher operations per second, especially at higher concurrency levels. The document attributes the performance differences to the dedicated, tuned hardware resources of the bare metal instances compared to the shared resources of public cloud virtual instances.

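Quick Example:

```javascript
// Assumes the shell's current database is "test", matching the "test.foo" namespace
db.foo.drop();
db.foo.insert( { _id : 1 } );

// Each benchRun worker repeatedly reads the document and increments its x field
var ops = [ { op : "findOne", ns : "test.foo", query : { _id : 1 } },
            { op : "update", ns : "test.foo", query : { _id : 1 }, update : { $inc : { x : 1 } } } ];

// Double the number of parallel workers on each pass: 1, 2, 4, ..., 128
for ( var x = 1; x <= 128; x *= 2 ) {
    var res = benchRun( {
        parallel : x ,   // number of concurrent workers
        seconds : 5 ,    // duration of each pass
        ops : ops
    } );
    print( "threads: " + x + "\t queries/sec: " + res.query );
}
```

![Quick Example](https://image.slidesharecdn.com/5-highperformanceharoldhannon-130624144137-phpapp02/85/High-Performance-Scalable-MongoDB-in-a-Bare-Metal-Cloud-23-320.jpg)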
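The loop above doubles the number of parallel workers on each pass and prints one throughput line per pass. The following is a minimal plain-JavaScript sketch of the same sweep that can be exercised outside the mongo shell. It assumes a hypothetical `runBenchmark(threads)` callback that stands in for `benchRun` and returns an object whose `query` field is queries per second. The fake runner in the usage lines is synthetic and produces no real measurements.

```javascript
// Powers of two from `start` up to and including `max`: 1, 2, 4, ..., 128
function threadCounts(start, max) {
  if (!(start >= 1)) throw new RangeError("start must be >= 1");
  const counts = [];
  for (let x = start; x <= max; x *= 2) {
    counts.push(x);
  }
  return counts;
}

// Run one pass per thread count and build a report line for each pass.
// `runBenchmark` is a hypothetical stand-in for benchRun: it takes a thread
// count and returns an object whose `query` field is queries per second.
function sweep(runBenchmark, start, max) {
  return threadCounts(start, max).map(function (x) {
    const res = runBenchmark(x);
    return "threads: " + x + "\t queries/sec: " + res.query;
  });
}

// Usage with a synthetic runner (not real measurements)
const lines = sweep(function (x) { return { query: 1000 * x }; }, 1, 128);
lines.forEach(function (line) { console.log(line); });
```

Injecting the benchmark call keeps the sweep schedule and the report format testable on their own. Inside the mongo shell, the callback would wrap `benchRun({ parallel: x, seconds: 5, ops: ops })`.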

Dynamic Values:

`{ "#RAND_INT" : [ min , max , <multiplier> ] }`: for example, `[ 0 , 10 , 4 ]` would produce random numbers between 0 and 10 and then multiply them by 4.

`{ "#RAND_STRING" : [ length ] }`: for example, `[ 3 ]` would produce a string of 3 random characters.

```javascript
// A 30-character random string as the value of info
var complexDoc3 = { info: { "#RAND_STRING": [30] } }

// Alternatively, nest the random string one level deeper
var complexDoc3 = { info: { inner_field: { "#RAND_STRING": [30] } } }
```

![Dynamic Values](https://image.slidesharecdn.com/5-highperformanceharoldhannon-130624144137-phpapp02/85/High-Performance-Scalable-MongoDB-in-a-Bare-Metal-Cloud-26-320.jpg)
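To make the placeholder semantics concrete, here is a minimal client-side sketch that expands `#RAND_INT` and `#RAND_STRING` anywhere in a document template. It is an illustration only and is not benchRun's own implementation. The following details are assumptions:

- `max` is treated as exclusive.
- The multiplier defaults to 1.
- Random strings use lowercase letters and digits.
- `rng` is an injectable function returning floats in [0, 1).

```javascript
// Assumed character set for #RAND_STRING (benchRun's actual set may differ)
const ALPHABET = "abcdefghijklmnopqrstuvwxyz0123456789";

// Recursively replace #RAND_INT / #RAND_STRING placeholders in `value`.
// `rng` returns a float in [0, 1); Math.random is the default.
function expandTemplate(value, rng = Math.random) {
  if (Array.isArray(value)) {
    return value.map((v) => expandTemplate(v, rng));
  }
  if (value === null || typeof value !== "object") {
    return value; // numbers, strings and booleans pass through unchanged
  }
  if ("#RAND_INT" in value) {
    const [min, max, multiplier = 1] = value["#RAND_INT"];
    // Assumption: min inclusive, max exclusive
    return (min + Math.floor(rng() * (max - min))) * multiplier;
  }
  if ("#RAND_STRING" in value) {
    const [length] = value["#RAND_STRING"];
    let s = "";
    for (let i = 0; i < length; i++) {
      s += ALPHABET[Math.floor(rng() * ALPHABET.length)];
    }
    return s;
  }
  // Plain object: expand each field
  const out = {};
  for (const [key, v] of Object.entries(value)) {
    out[key] = expandTemplate(v, rng);
  }
  return out;
}

// Usage: the document shapes from the slide, plus the [ 0, 10, 4 ] example
const flat = expandTemplate({ info: { "#RAND_STRING": [30] } });
const nested = expandTemplate({ info: { inner_field: { "#RAND_STRING": [30] } } });
const scaled = expandTemplate({ n: { "#RAND_INT": [0, 10, 4] } });
console.log(flat, nested, scaled);
```

Passing a deterministic `rng` (for example `() => 0.5`) makes the expansion reproducible, which is handy when checking templates before a run.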

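```javascript
// low_rand, high_id, RAND_STEP, readTest and updateTest are set earlier in the
// script (not shown on this slide).
var ops = [];

while (low_rand < high_id) {
    if (readTest) {
        // One findOne per RAND_STEP-wide window of incrementing_id values
        ops.push({
            op : "findOne",
            ns : "test.foo",
            query : {
                incrementing_id : {
                    "#RAND_INT" : [ low_rand, low_rand + RAND_STEP ]
                }
            }
        });
    }
    if (updateTest) {
        // Increment the counter on a random document anywhere in the id range
        ops.push({ op: "update", ns: "test.foo",
                   query: { incrementing_id: { "#RAND_INT" : [0, high_id] } },
                   update: { $inc: { counter: 1 } },
                   safe: true });
    }
    low_rand += RAND_STEP;
}

// Join tokens into one " , "-separated line (the column padding is commented out)
function printLine(tokens, columns, width) {
    line = "";
    column_width = width / columns;
    for (var i = 0; i < tokens.length; i++) {
        line += tokens[i];
        // token_width = tokens[token].toString().length;
        // pad = column_width - token_width;
        // while (pad--) {
        if (i != tokens.length - 1)
            line += " , ";
        // }
    }
    // (The slide ends here; the rest of printLine is not shown.)
}
```

![benchRun script excerpt](https://image.slidesharecdn.com/5-highperformanceharoldhannon-130624144137-phpapp02/85/High-Performance-Scalable-MongoDB-in-a-Bare-Metal-Cloud-35-320.jpg)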
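The while loop above adds one `findOne` per `RAND_STEP`-wide window of `incrementing_id`. Taken together, the read ops therefore cover the whole id range in evenly sized slices, while each update targets a random id anywhere in that range. Below is a minimal sketch of that construction as a pure function, plus a `formatLine` helper. The argument names mirror the slide's globals. Passing them as parameters, the padding behavior (each token right-padded to `width / columns` characters, as an assumed completion of the commented-out code), and the sample values in the usage lines are all assumptions for illustration.

```javascript
// Build a benchRun-style ops array: one findOne per randStep-wide window
// (when readTest) and one update per window (when updateTest) that targets
// a random incrementing_id anywhere in [0, highId].
function buildOps({ lowRand, highId, randStep, readTest, updateTest }) {
  if (!(randStep > 0)) throw new RangeError("randStep must be positive");
  const ops = [];
  while (lowRand < highId) {
    if (readTest) {
      ops.push({
        op: "findOne",
        ns: "test.foo",
        query: { incrementing_id: { "#RAND_INT": [lowRand, lowRand + randStep] } },
      });
    }
    if (updateTest) {
      ops.push({
        op: "update",
        ns: "test.foo",
        query: { incrementing_id: { "#RAND_INT": [0, highId] } },
        update: { $inc: { counter: 1 } },
        safe: true,
      });
    }
    lowRand += randStep;
  }
  return ops;
}

// Join tokens with " , ", right-padding each token to width / columns chars.
// The padding is an assumed completion of the slide's commented-out code.
function formatLine(tokens, columns, width) {
  const columnWidth = Math.floor(width / columns);
  return tokens.map((t) => String(t).padEnd(columnWidth)).join(" , ");
}

// Usage with illustrative parameters: ids 0..99 split into windows of 25
const ops = buildOps({ lowRand: 0, highId: 100, randStep: 25, readTest: true, updateTest: true });
console.log(ops.length); // 8: four windows, one findOne and one update each
console.log(formatLine(["threads", 8], 2, 20));
```

With a column width of 0, `formatLine` reduces to a plain `tokens.join(" , ")`. That is exactly what the slide's `printLine` loop builds while the padding lines stay commented out.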