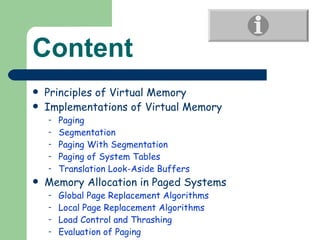

The document summarizes key concepts about virtual memory, including:

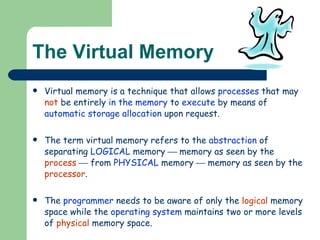

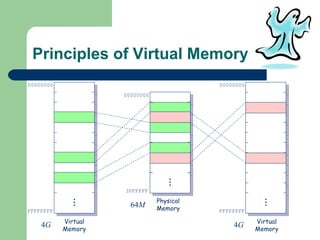

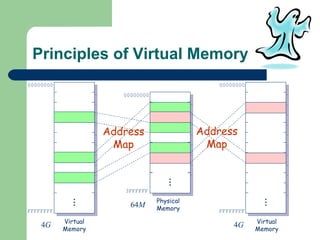

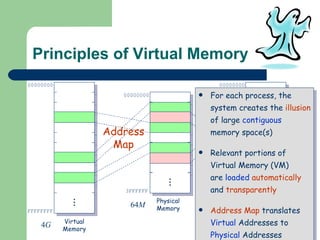

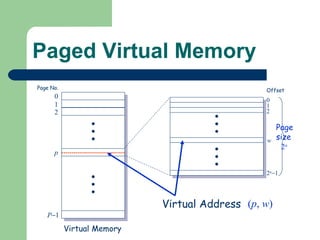

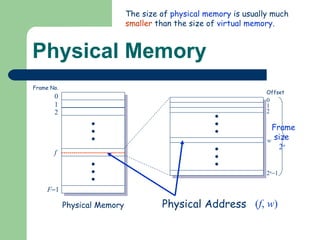

1) Virtual memory allows a process to execute even when it is not entirely in physical memory. Storage is allocated automatically on demand, which gives each process the illusion of a large, contiguous memory space.
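As a rough illustration of allocation on demand, the sketch below simulates a process whose virtual pages receive a physical frame only when they are first touched, so a few frames can back a much larger virtual address range. The `DemandPagedMemory` class, the 4 KiB page size, and the fixed frame count are assumptions for illustration; what happens when frames run out (replacement) is deliberately left out.

```python
PAGE_SIZE = 4096  # assumed page size in bytes (illustrative)


class DemandPagedMemory:
    """Toy model (hypothetical): a virtual page gets a physical frame only on first access."""

    def __init__(self, num_frames):
        self.free_frames = list(range(num_frames))  # physical frames not yet allocated
        self.page_table = {}   # virtual page number -> frame number, resident pages only
        self.page_faults = 0

    def translate(self, vaddr):
        page, offset = divmod(vaddr, PAGE_SIZE)
        if page not in self.page_table:
            # Page fault: allocate a frame now, on demand.
            self.page_faults += 1
            if not self.free_frames:
                raise MemoryError("no free frame; a replacement policy would be needed")
            self.page_table[page] = self.free_frames.pop(0)
        return self.page_table[page] * PAGE_SIZE + offset


mem = DemandPagedMemory(num_frames=4)
print(mem.translate(0x5004))           # 4: page 5 faults and gets frame 0
print(mem.translate(0x5008))           # 8: page 5 is already resident
print(mem.translate(100 * PAGE_SIZE))  # 4096: page 100 faults and gets frame 1
print(mem.page_faults)                 # 2
```

Only pages that are actually used consume frames, which is what lets the virtual address space appear larger than physical memory.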

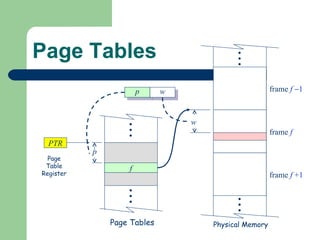

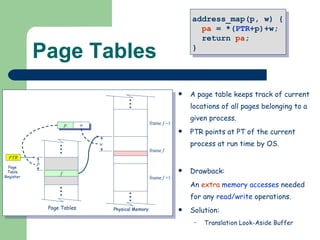

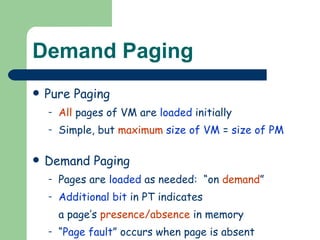

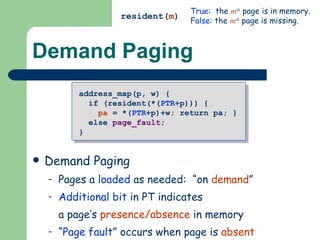

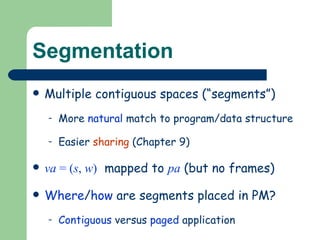

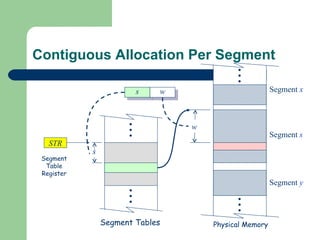

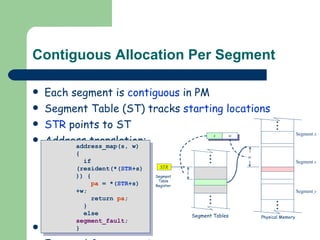

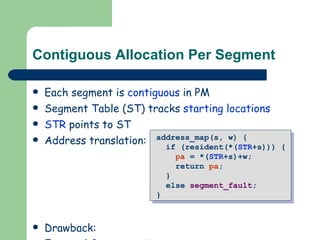

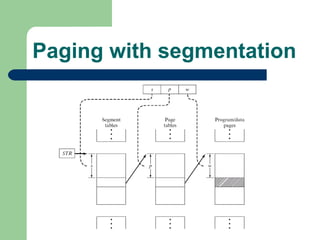

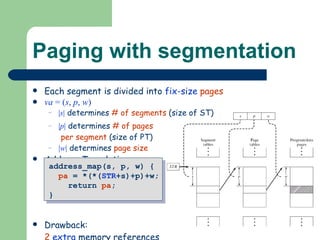

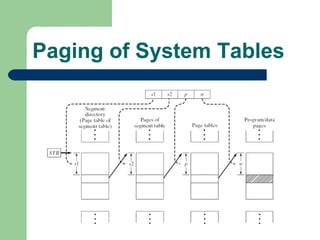

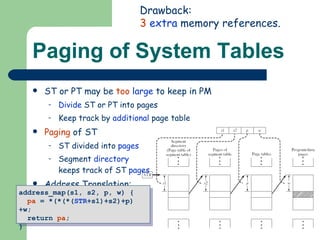

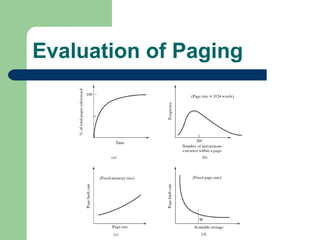

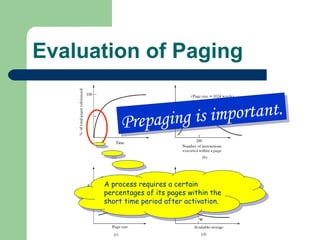

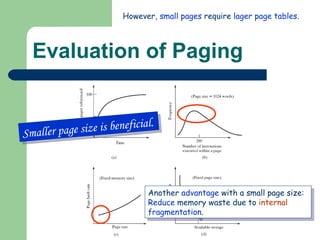

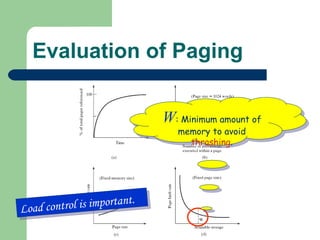

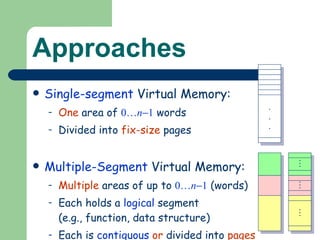

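2) Common virtual memory implementations include paging, segmentation, and paging with segmentation. Paging divides the address space into fixed-size pages, while segmentation divides it into multiple variable-size logical segments; paging with segmentation further divides each segment into pages.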
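To make the contrast concrete, the sketch below translates a virtual address under each of the three schemes. The table layouts (a list of frame numbers for paging, `(base, limit)` pairs for segmentation, one page table per segment for the combined scheme), the 4 KiB page size, and the function names are assumptions for illustration, not the slides' notation.

```python
PAGE_SIZE = 4096  # assumed page size in bytes (illustrative)


def translate_paging(page_table, vaddr):
    """Paging: split the address into page number p and offset w."""
    p, w = divmod(vaddr, PAGE_SIZE)
    if p >= len(page_table) or page_table[p] is None:
        raise LookupError(f"page fault on page {p}")
    return page_table[p] * PAGE_SIZE + w


def translate_segmentation(segment_table, s, w):
    """Segmentation: each segment has a base address and its own length (limit)."""
    base, limit = segment_table[s]
    if not 0 <= w < limit:
        raise IndexError(f"offset {w} outside segment {s} (limit {limit})")
    return base + w


def translate_paged_segmentation(segment_table, s, p, w):
    """Paging with segmentation: address (s, p, w); each segment has its own page table."""
    page_table = segment_table[s]
    if not 0 <= w < PAGE_SIZE:
        raise IndexError(f"offset {w} exceeds the page size")
    if p >= len(page_table) or page_table[p] is None:
        raise LookupError(f"page fault on segment {s}, page {p}")
    return page_table[p] * PAGE_SIZE + w


print(translate_paging([7, None, 3], 2 * PAGE_SIZE + 10))               # 12298
print(translate_segmentation([(10000, 500), (20000, 1200)], 1, 100))   # 20100
print(translate_paged_segmentation([[5, 6], [9]], 0, 1, 20))           # 24596
```

Paging needs no per-segment length check because every page has the same size; segmentation must check the offset against each segment's limit because segment sizes vary.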

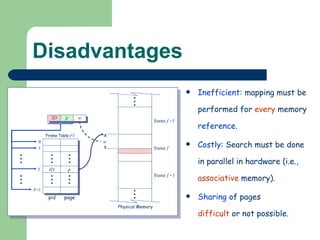

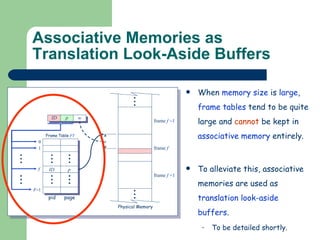

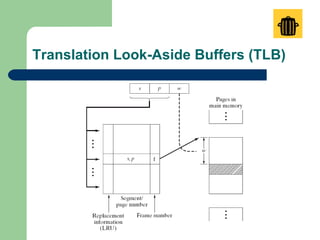

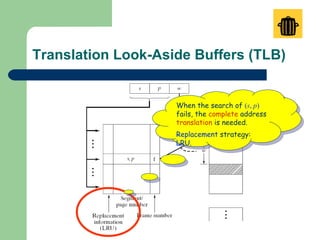

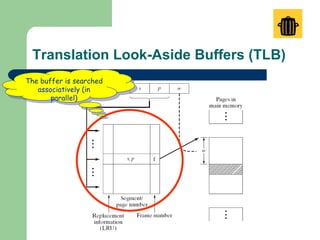

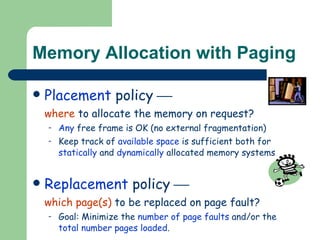

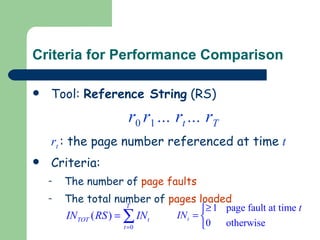

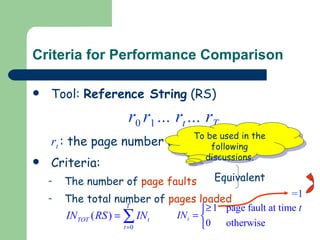

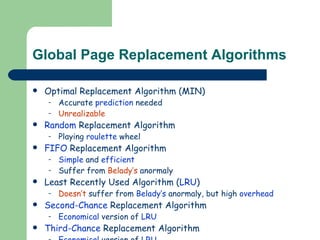

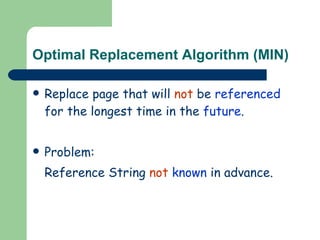

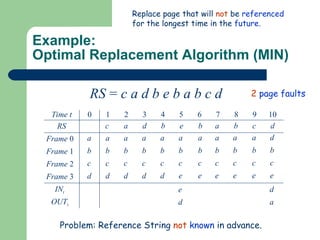

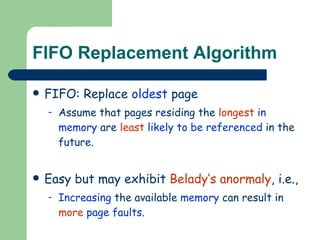

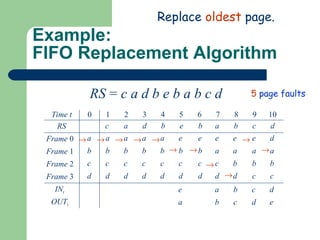

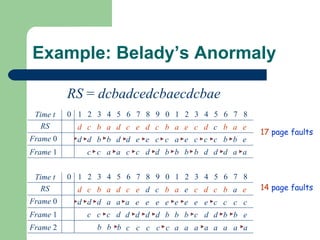

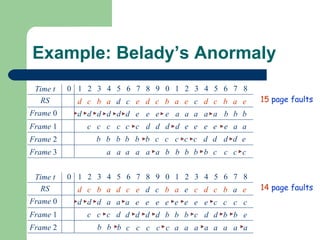

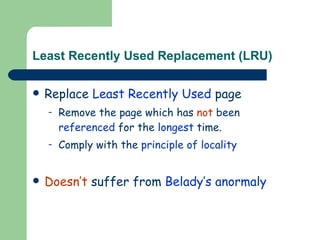

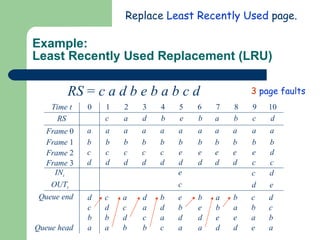

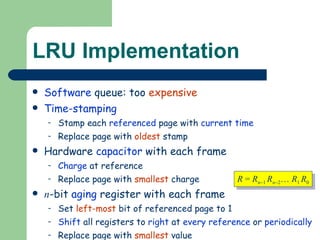

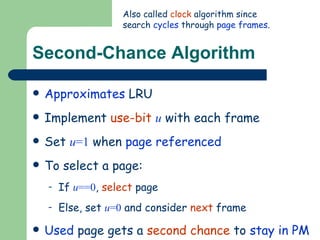

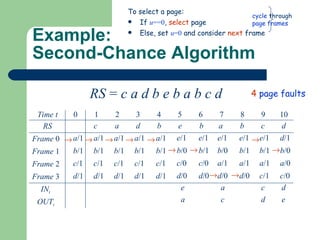

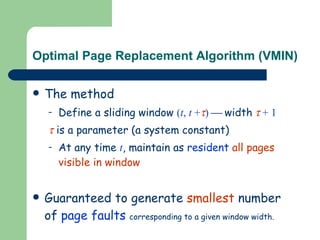

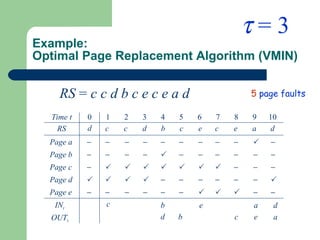

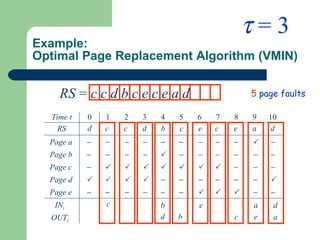

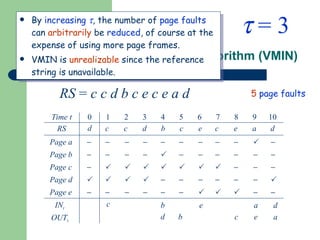

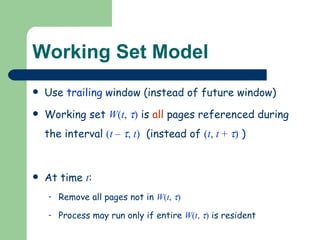

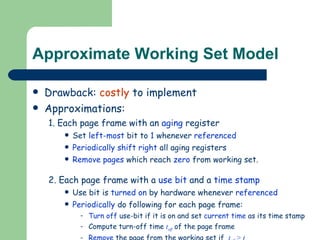

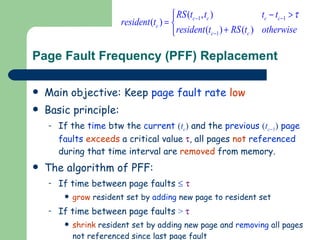

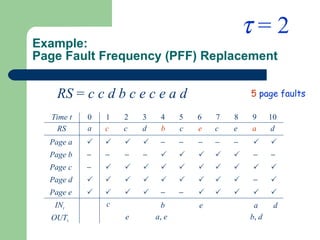

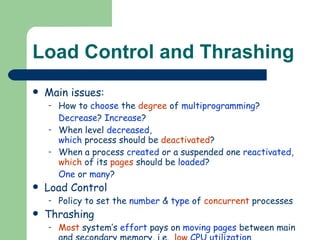

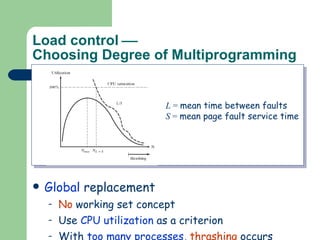

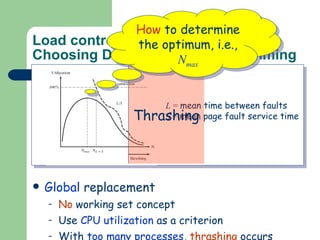

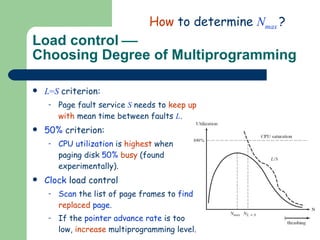

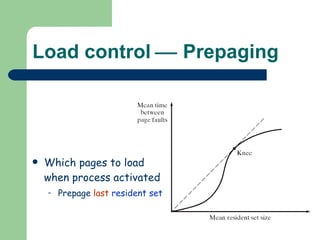

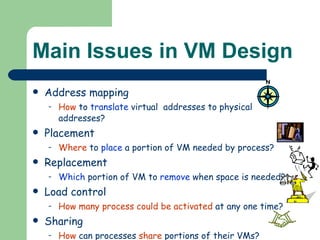

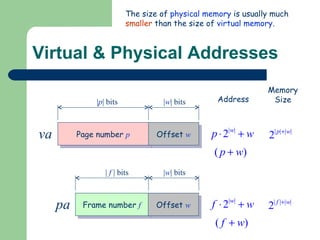

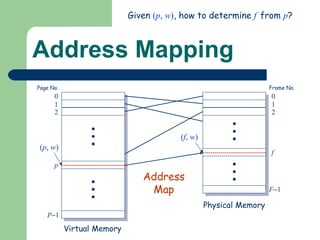

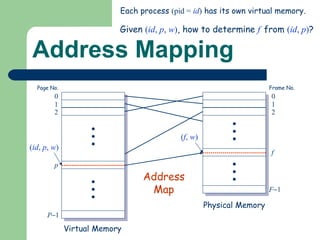

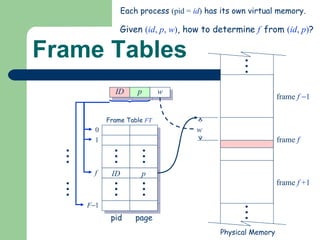

3) Issues in virtual memory design include address mapping, placement, replacement, load control, and sharing. Translation lookaside buffers (TLBs) speed up address translation by caching recently used page-to-frame mappings.
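A TLB is a small cache of recent (page, frame) pairs that is consulted before the full page table. The sketch below assumes a fully associative TLB with LRU replacement, built on `collections.OrderedDict`, in front of a dictionary page table; the `TLB` class, its capacity, and the page size are illustrative assumptions, since real TLBs are hardware whose size, associativity, and replacement policy vary.

```python
from collections import OrderedDict

PAGE_SIZE = 4096  # assumed page size in bytes (illustrative)


class TLB:
    """Hypothetical fully associative TLB with LRU replacement."""

    def __init__(self, page_table, capacity=4):
        self.page_table = page_table   # full page -> frame mapping (the slow path)
        self.capacity = capacity
        self.entries = OrderedDict()   # cached page -> frame, least recently used first
        self.hits = 0
        self.misses = 0

    def translate(self, vaddr):
        p, w = divmod(vaddr, PAGE_SIZE)
        if p in self.entries:
            self.hits += 1
            self.entries.move_to_end(p)   # mark as most recently used
            f = self.entries[p]
        else:
            self.misses += 1
            f = self.page_table[p]        # page-table lookup; a KeyError stands in for a page fault
            if len(self.entries) >= self.capacity:
                self.entries.popitem(last=False)  # evict the least recently used entry
            self.entries[p] = f
        return f * PAGE_SIZE + w


tlb = TLB({0: 2, 1: 5, 2: 9}, capacity=2)
for va in (0x10, 0x20, PAGE_SIZE + 4, 2 * PAGE_SIZE):
    tlb.translate(va)
print(tlb.hits, tlb.misses)  # 1 3
print(list(tlb.entries))     # [1, 2]  (page 0 was evicted)
```

The second access to page 0 is served from the TLB without touching the page table; the cost of a miss is the extra page-table lookup.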

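![Address Translation via Frame Table](https://image.slidesharecdn.com/os8-2-100818024510-phpapp01/85/Os8-2-18-320.jpg)

Address translation via frame table: each process (pid = `id`) has its own virtual memory. Given a virtual address (`id`, `p`, `w`), how is the frame `f` determined from (`id`, `p`)? The slide's `address_map(id, p, w)` starts with `pa = UNDEFINED`, scans every frame `f` from `0` to `F - 1`, and, when `FT[f].pid == id && FT[f].page == p`, forms the physical address `pa` from frame `f` and offset `w` (the start of frame `f`, i.e. `f` times the page size, plus `w`); it then returns `pa`.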
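The slide's `address_map` can be made runnable roughly as follows. The frame table `FT` is modeled as a list indexed by frame number `f`, holding a hypothetical `FrameEntry(pid, page)` for occupied frames and `None` for free ones; the 4 KiB page size and the use of `None` for `UNDEFINED` are assumptions. The parameter is named `pid` instead of `id` to avoid shadowing Python's built-in, and the loop returns at the first match, which gives the same result as the slide's full scan as long as each (pid, page) pair occupies at most one frame.

```python
from typing import NamedTuple, Optional, Sequence

PAGE_SIZE = 4096  # assumed page size in bytes (illustrative)
UNDEFINED = None  # stands in for the slide's UNDEFINED


class FrameEntry(NamedTuple):
    pid: int   # process that owns the page held in this frame
    page: int  # virtual page number held in this frame


def address_map(FT: Sequence[Optional[FrameEntry]], pid: int, p: int, w: int) -> Optional[int]:
    """Search the frame table linearly for the frame holding page p of process pid."""
    for f, entry in enumerate(FT):
        if entry is not None and entry.pid == pid and entry.page == p:
            return f * PAGE_SIZE + w  # start of frame f plus offset w
    return UNDEFINED                  # page not resident: a page fault


FT = [FrameEntry(1, 0), FrameEntry(2, 0), None, FrameEntry(1, 3)]
print(address_map(FT, 1, 3, 100))  # 12388: page 3 of process 1 is in frame 3
print(address_map(FT, 2, 3, 0))    # None: page 3 of process 2 is not resident
```

Each translation may compare against all `F` entries, which is why a faster lookup path such as a TLB matters for this organization.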