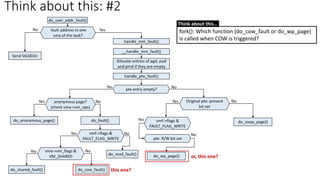

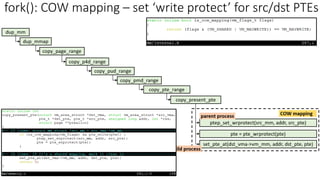

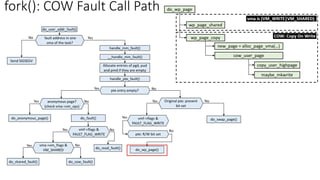

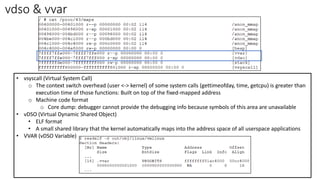

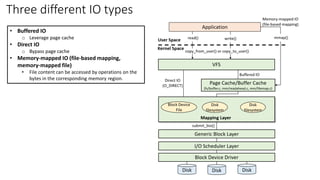

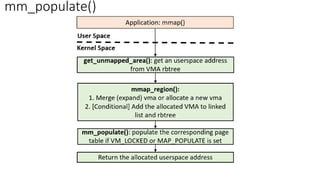

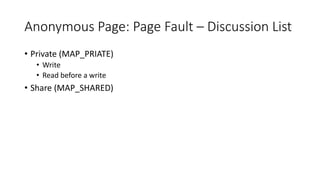

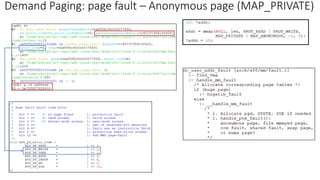

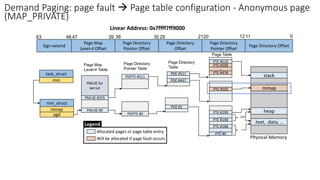

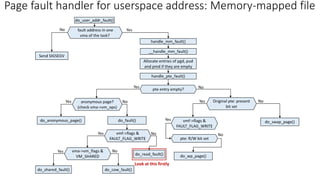

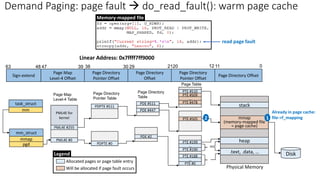

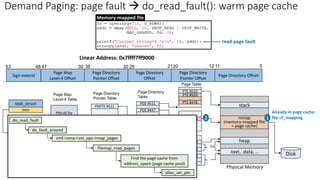

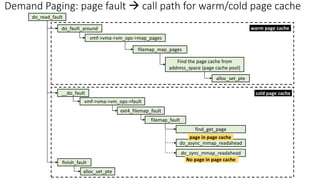

The document discusses the implementation of memory mapping (mmap) in the Linux kernel, focusing on various types of memory mappings and the mmap system call. It outlines the detailed handling of page faults, particularly for anonymous and memory-mapped files, and the structure of process address spaces through mm_struct and vma. Key operations and structures involved in demand paging and memory allocation, including handling read and write faults, are also examined.

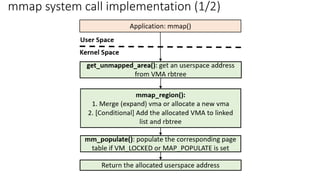

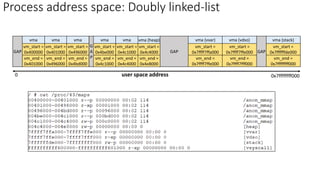

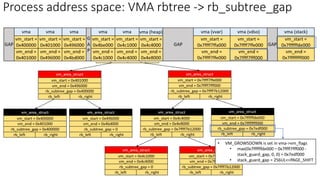

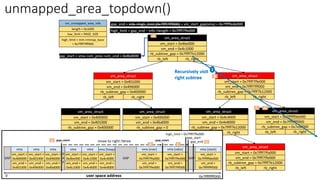

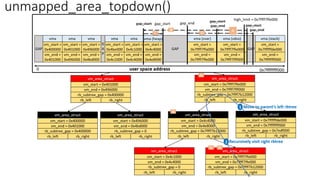

![unmapped_area_topdown() - Principle

vm_unmapped_area_info

length = 0x1000

low_limit = PAGE_SIZE

high_limit = mm->mmap_base

= 0x7ffff7fff000

gap_end = info->high_limit (0x7ffff7fff000)

high_limit = gap_end – info->length = 0x7ffff7ffe000

gap_start = mm->highest_vm_end = 0x7ffffffff000

unmapped_area_topdown(): Preparation

[Efficiency] Traverse vma rbtree to find gap space instead of traversing vma linked list](https://image.slidesharecdn.com/mmap-220509131606-8a7a1e93/85/Memory-Mapping-Implementation-mmap-in-Linux-Kernel-17-320.jpg)

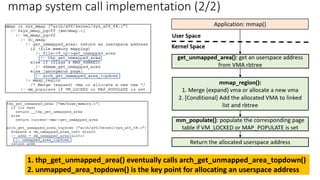

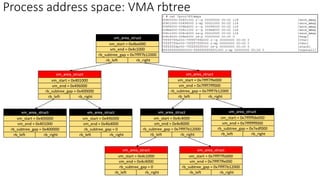

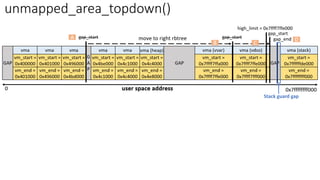

![__vma_link_file(): i_mmap for recording all memory mappings

(vma) of the memory-mapped file

task_struct

mm

files_struct

fd_array[]

file

f_inode

f_pos

f_mapping

.

.

file

inode

*i_mapping

i_atime

i_mtime

i_ctime

mnt

dentry

f_path

address_space

i_data

host

page_tree

i_mmap

page

mapping

index

radix_tree_root

height = 2

rnode

radix_tree_node

count = 2

63

0 1 …

page

2 3

radix_tree_node

count = 1

63

0 1 …

2 3

page page

slots[0]

slots[3]

slots[1] slots[3] slots[2]

index = 1 index = 3 index = 194

radix_tree_node

count = 1

63

0 1 …

2 3

Interval tree implemented

via red-black tree

Radix Tree (v4.19 or earlier)

Xarray (v4.20 or later)

files

mm

struct vm_area_struct *mmap

get_unmapped_area

mmap_base

page cache

vm_area_struct

vm_mm

vm_ops

vm_file

vm_area_struct

vm_file

.

.

pgd

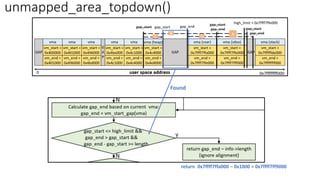

1. i_mmap: Reverse mapping (RMAP) for the memory-mapped file (page cache) → check __vma_link_file ()

2. anon_vma: Reverse mapping for anonymous pages -> anon_vma_prepare() invoked during page fault

RMAP](https://image.slidesharecdn.com/mmap-220509131606-8a7a1e93/85/Memory-Mapping-Implementation-mmap-in-Linux-Kernel-30-320.jpg)

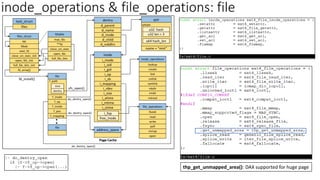

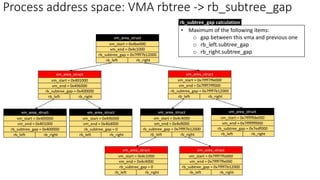

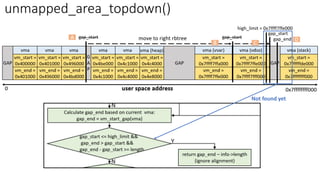

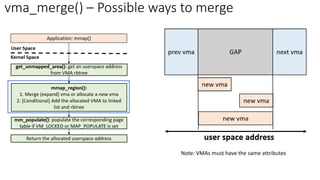

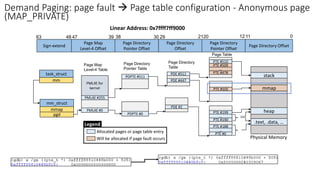

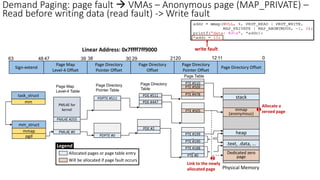

![Demand Paging: page fault → Page table configuration

[anonymous page: MAP_PRIVATE] page fault flow](https://image.slidesharecdn.com/mmap-220509131606-8a7a1e93/85/Memory-Mapping-Implementation-mmap-in-Linux-Kernel-35-320.jpg)

![man mmap

[gdb] backtrace

Demand Paging: page fault → Page table configuration - Anonymous page

(MAP_PRIVATE) [anonymous page: MAP_PRIVATE] page fault flow](https://image.slidesharecdn.com/mmap-220509131606-8a7a1e93/85/Memory-Mapping-Implementation-mmap-in-Linux-Kernel-36-320.jpg)

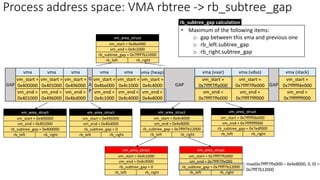

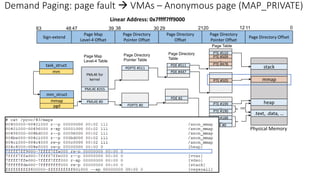

![Demand Paging: page fault → VMAs – Anonymous page (MAP_PRIVATE &

MAP_SHARED): first-time access

Anonymous page +

MAP_PRIVATE

Anonymous page +

MAP_SHARED + WRITE

Anonymous page +

MAP_SHARED + READ

[do_anonymous_page]

• Read fault: Apply the pre-allocated zero page](https://image.slidesharecdn.com/mmap-220509131606-8a7a1e93/85/Memory-Mapping-Implementation-mmap-in-Linux-Kernel-44-320.jpg)

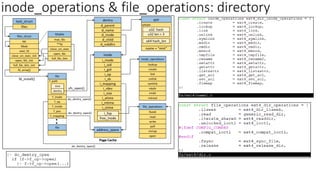

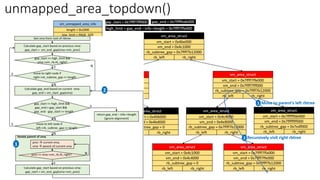

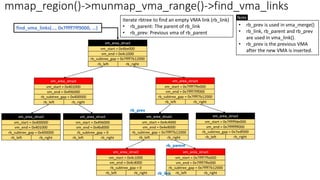

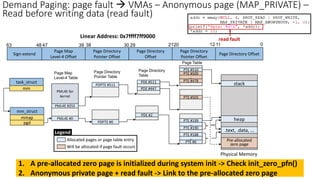

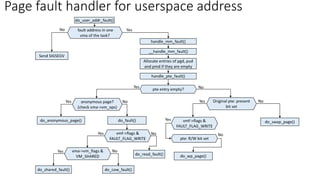

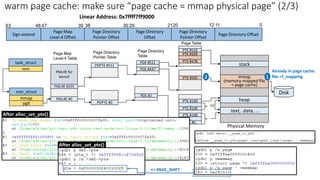

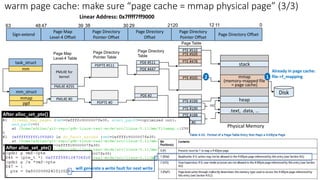

![Page Map

Level-4 Table

Sign-extend

Page Map

Level-4 Offset

30 21

39 38 29

47

48

63

Page Directory

Pointer Offset

Page Directory

Offset

Page Directory

Pointer Table

Page Directory

Table

PML4E #255

PML4E for

kernel

PDPTE #511

PTE #510

stack

task_struct

pgd

mm

mm_struct

mmap

PTE #509

…

PTE #478

PML4E #0

PDE #2

PDPTE #0

PTE #0

.text, .data, …

Linear Address: 0x7ffff7ff9000

PTE #188

…

PTE #190

PTE #199

…

heap

PTE #505 mmap

(memory-mapped file

= page cache)

12

20 11 0

Page Table

Page Directory

Pointer Offset

Page Directory Offset

Disk

PDE #511

PDE #447

Already in page cache:

file->f_mapping

1

2

warm page cache: make sure “page cache = mmap physical page” (1/3)

Physical Memory

Page Cache - Verification

page->mapping

• [bit 0] = 1: anonymous page → mapping field = anon_vma descriptor

• [bit 0] = 0: page cache → mapping field = address_space descriptor](https://image.slidesharecdn.com/mmap-220509131606-8a7a1e93/85/Memory-Mapping-Implementation-mmap-in-Linux-Kernel-55-320.jpg)

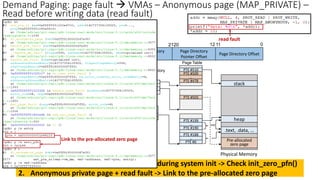

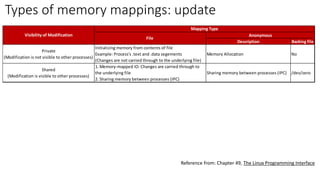

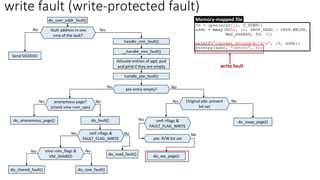

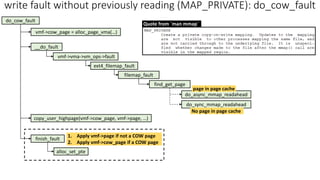

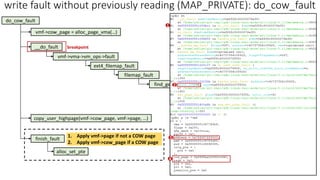

![do_wp_page

wp_page_copy

new_page = alloc_page_vma(…)

cow_user_page

copy_user_highpage

maybe_mkwrite

wp_page_shared

[MAP_PRIVATE] COW: Copy On Write

[MAP_SHARED] vma is (VM_WRITE|VM_SHARED)

write fault (write-protected fault): do_wp_page

write fault

[MAP_PRIVATE] COW: Quote from `man mmap`

Memory-mapped file](https://image.slidesharecdn.com/mmap-220509131606-8a7a1e93/85/Memory-Mapping-Implementation-mmap-in-Linux-Kernel-59-320.jpg)

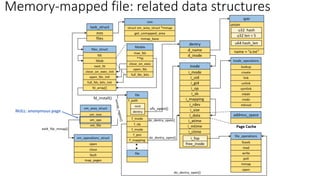

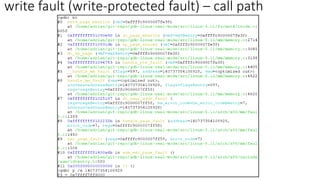

![[Recap] Think about this: #1

write fault

read fault

1

1

2

2

Memory-mapped file](https://image.slidesharecdn.com/mmap-220509131606-8a7a1e93/85/Memory-Mapping-Implementation-mmap-in-Linux-Kernel-69-320.jpg)