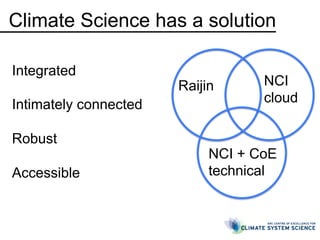

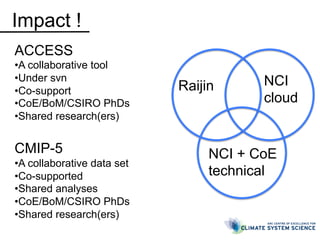

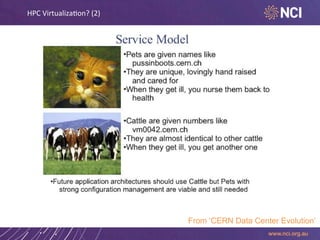

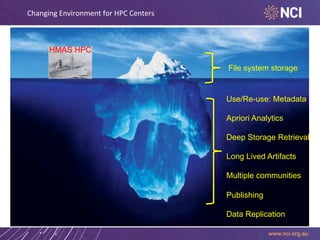

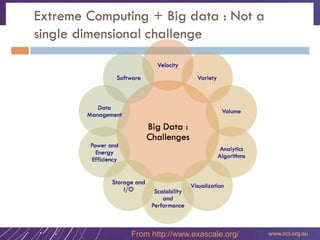

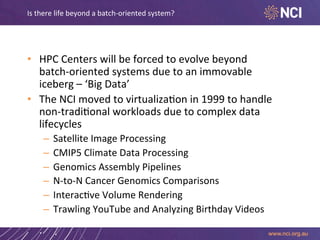

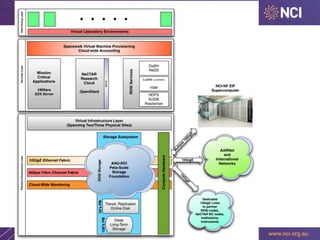

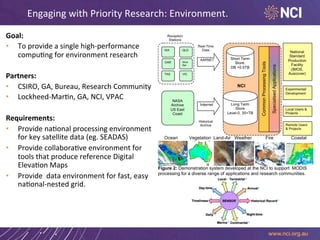

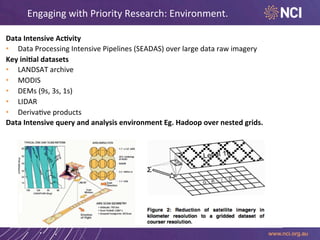

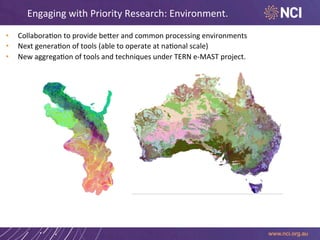

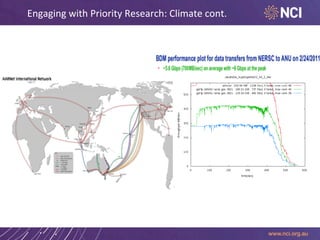

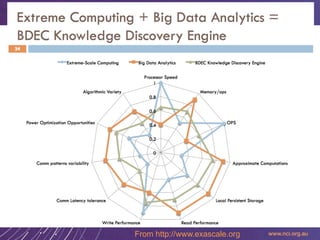

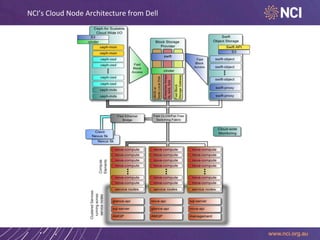

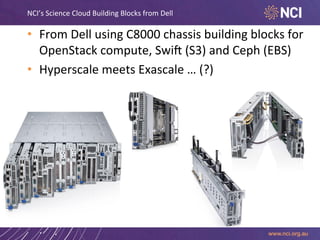

The document outlines the mission of Australia's National Computational Infrastructure (NCI): to enable ambitious research through high-end computing services. It argues that HPC centers must adapt to the challenges of big data. It also describes NCI's cloud services and its collaborations across research domains, including climate science and genomics. The key requirements it identifies are providing national processing environments and developing next-generation tools for complex data pipelines.