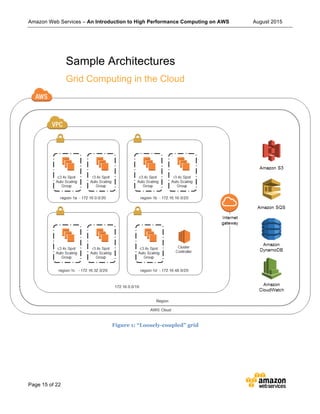

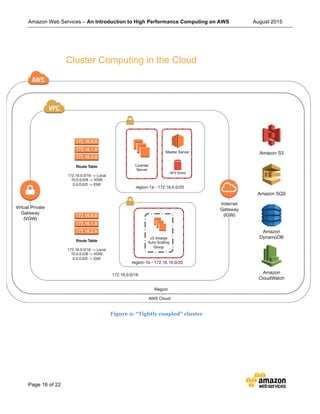

This document introduces high-performance computing (HPC) on Amazon Web Services (AWS). It explains what HPC is and how grids and clusters are commonly used to enable parallel processing. It also outlines a wide range of HPC applications and how they map to AWS features. The key factors that make AWS compelling for HPC are its scalability, agility, support for global collaboration, and cost optimization options. Finally, it presents sample HPC architectures that can be implemented on AWS, including grid and cluster computing.