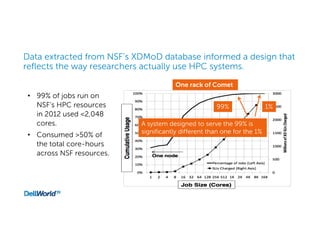

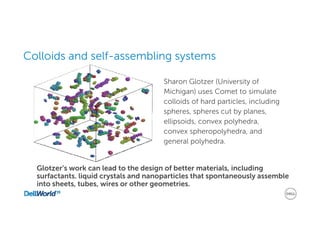

This document summarizes the benefits of High Performance Computing (HPC) and Dell's HPC solutions. It states that HPC, delivered through integrated and scalable solutions, is critical for solving large problems that demand faster answers. It then describes Dell's end-to-end HPC services, including modular designs, expertise, and tools. Case studies show how Dell HPC solutions have benefited customers in manufacturing, healthcare, finance, and energy. The document concludes by stating that Dell aims to help more organizations use HPC to drive innovation.